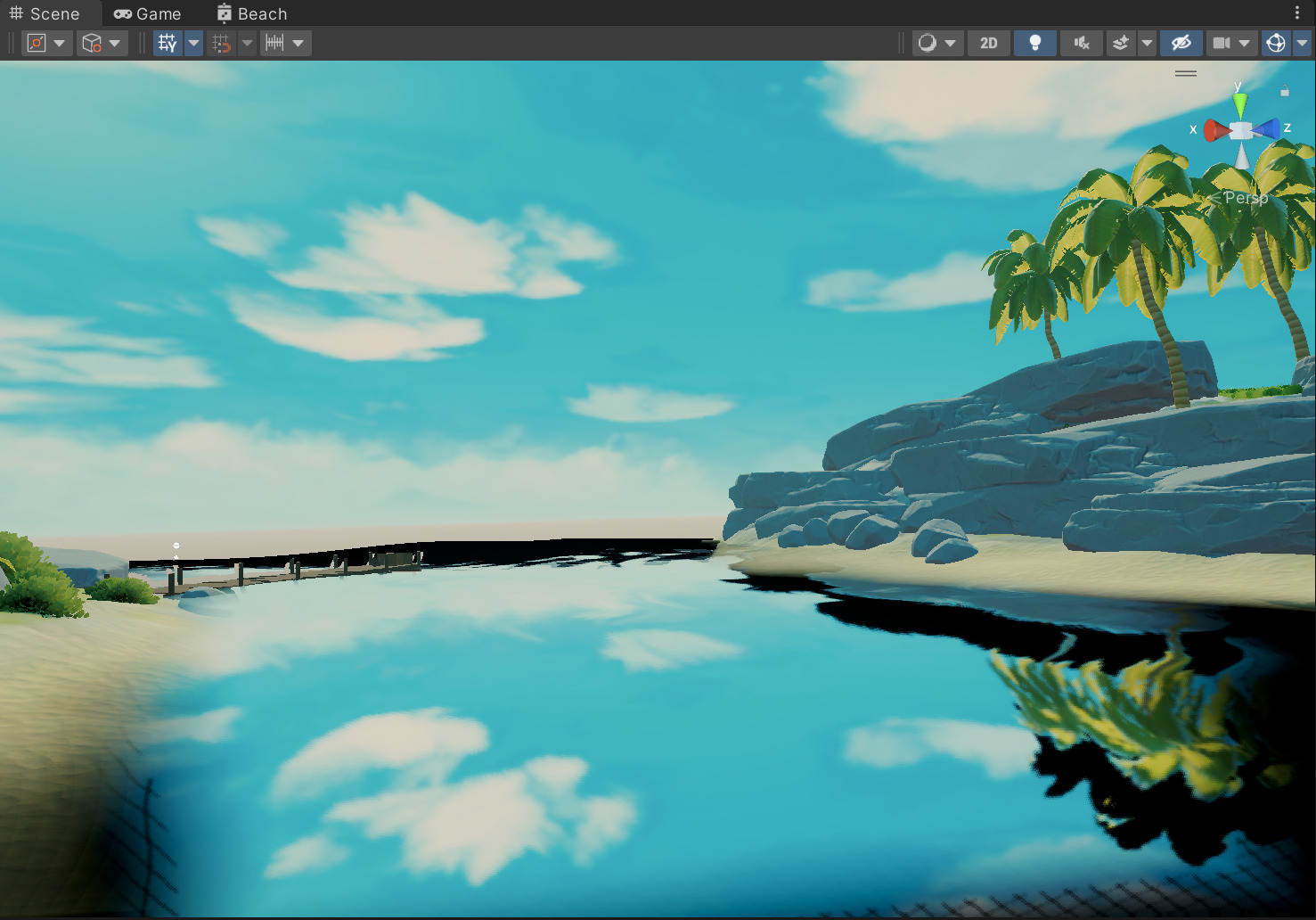

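Preface

I previously built a fairly complete realistic water material system in UE, but because UE's pipeline is fixed I could not get the specular highlight I wanted, and a later written test made me realize I had never implemented water interaction. This article therefore implements a complete stylized water. Because the water has so many parts, most of it is shown as ASE (Amplify Shader Editor) node graphs instead of code; the principles are the same either way.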

Pipeline settings

- "Shader Type" is "Universal/Unlit"

- "Surface" is "Transparent"

- "Vertex Position" is "Relative"

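Depth

- Water depth needs little explanation. I implemented it before in a UE water shader: it is simply scene depth minus pixel depth.

- Implementation

The world position has to be reconstructed from the camera depth texture. Note that this approach ignores translucent objects.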

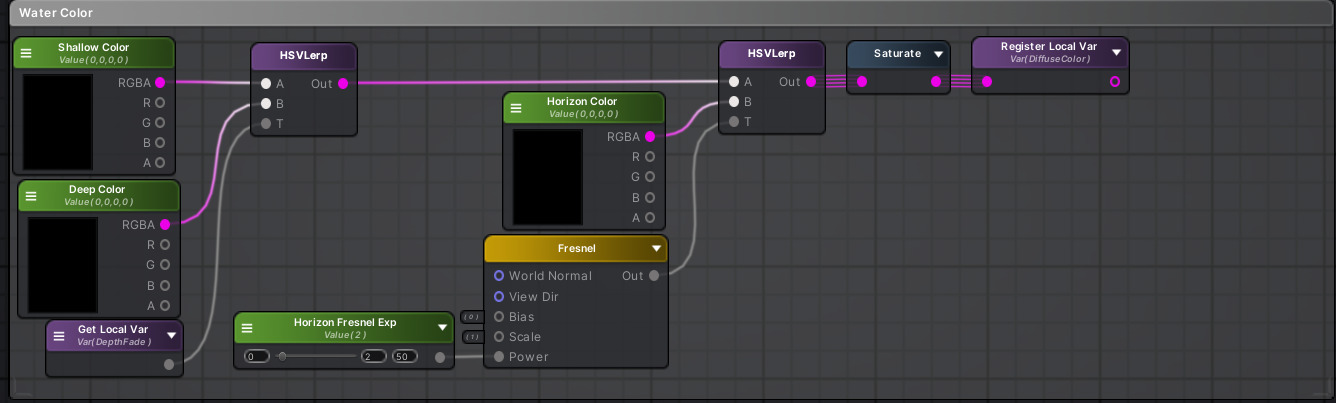
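As a CPU-side illustration of "scene depth minus pixel depth", here is a minimal Python sketch. It assumes both depths are linear eye-space distances; the function names and the `fade_distance` parameter are hypothetical and do not come from the ASE graph.

```python
def water_depth(scene_eye_depth, pixel_eye_depth):
    """Water thickness along the view ray: scene depth minus the depth of the water surface pixel."""
    return max(scene_eye_depth - pixel_eye_depth, 0.0)


def depth_fade(depth, fade_distance):
    """Normalize the water depth to [0, 1] so it can drive color and opacity (hypothetical helper)."""
    return min(max(depth / fade_distance, 0.0), 1.0)


if __name__ == "__main__":
    d = water_depth(scene_eye_depth=5.0, pixel_eye_depth=3.5)
    print(d, depth_fade(d, fade_distance=3.0))
```

The normalized value is the kind of factor the later color and opacity steps can consume.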

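Color

With depth available, it can drive the water color. This section implements the shallow-water, deep-water, shoreline, and underwater colors.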

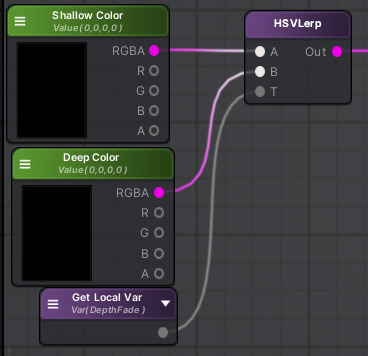

Shallow water and deep water

- Idea: lerp between the shallow-water color and the deep-water color according to depth

- Implementation

HSV color space

- The human eye readily notices gray tones, and a lerp done directly in RGB tends to pass through desaturated, grayish intermediate colors, so the result looks wrong. Interpolating in HSV space avoids this problem.

- Implementation

- RGB to HSV

```hlsl
half3 RGBToHSV(half3 In)
{
    half4 K = half4(0.0, -1.0 / 3.0, 2.0 / 3.0, -1.0);
    half4 P = lerp(half4(In.bg, K.wz), half4(In.gb, K.xy), step(In.b, In.g));
    half4 Q = lerp(half4(P.xyw, In.r), half4(In.r, P.yzx), step(P.x, In.r));
    half D = Q.x - min(Q.w, Q.y);
    half E = 1e-10; // guards against division by zero (note: below the range of a true 16-bit half)
    return half3(abs(Q.z + (Q.w - Q.y) / (6.0 * D + E)), D / (Q.x + E), Q.x);
}
```

- HSV to RGB

```hlsl
half3 HSVToRGB(half3 In)
{
    half4 K = half4(1.0, 2.0 / 3.0, 1.0 / 3.0, 3.0);
    half3 P = abs(frac(In.xxx + K.xyz) * 6.0 - K.www);
    return In.z * lerp(K.xxx, saturate(P - K.xxx), In.y);
}
```

- Lerp in HSV space

```hlsl
half4 HSVLerp(half4 A, half4 B, half T)
{
    half4 Out = 0.f;
    A.xyz = RGBToHSV(A.xyz);
    B.xyz = RGBToHSV(B.xyz);

    half t = T; // original weight for saturation, value and alpha; must stay unchanged
    half hue;
    half d = B.x - A.x; // hue difference

    // Only the hues are swapped here, so only the hue weight T is flipped
    if (A.x > B.x)
    {
        half temp = B.x;
        B.x = A.x;
        A.x = temp;
        d = -d;
        T = 1 - T;
    }

    if (d > 0.5)
    {
        // The shorter path wraps around the hue circle
        A.x = A.x + 1;
        hue = (A.x + T * (B.x - A.x)) % 1;
    }
    else
    {
        hue = A.x + T * d;
    }

    half sat = A.y + t * (B.y - A.y);
    half val = A.z + t * (B.z - A.z);
    half alpha = A.w + t * (B.w - A.w);

    half3 rgb = HSVToRGB(half3(hue, sat, val));
    Out = half4(rgb, alpha);
    return Out;
}
```
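To see why the hue has to take the shorter way around the color wheel, the following Python sketch reproduces the idea on the CPU with the standard `colorsys` module. It is a simplified illustration under my own naming (`hsv_lerp`), not a port of the HLSL above.

```python
import colorsys


def hsv_lerp(rgb_a, rgb_b, t):
    """Interpolate two RGB colors in HSV space, moving the hue along the shorter arc."""
    ha, sa, va = colorsys.rgb_to_hsv(*rgb_a)
    hb, sb, vb = colorsys.rgb_to_hsv(*rgb_b)

    d = hb - ha
    # Hue is circular: if the direct difference exceeds half a turn, go the other way
    if d > 0.5:
        d -= 1.0
    elif d < -0.5:
        d += 1.0

    h = (ha + t * d) % 1.0
    s = sa + t * (sb - sa)
    v = va + t * (vb - va)
    return colorsys.hsv_to_rgb(h, s, v)


if __name__ == "__main__":
    red, blue = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)
    # An RGB lerp at t = 0.5 would give the darker (0.5, 0.0, 0.5);
    # the HSV lerp stays fully saturated and bright.
    print(hsv_lerp(red, blue, 0.5))
```

Between red and blue the HSV version passes through a vivid magenta, whereas a plain RGB lerp loses brightness at the midpoint.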

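Shoreline

- Because of the Fresnel effect, the water color seen at a grazing angle (looking almost horizontally across the surface) differs from the color seen when looking straight down.

- Implementation

A Fresnel term is all that is needed.

- Result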

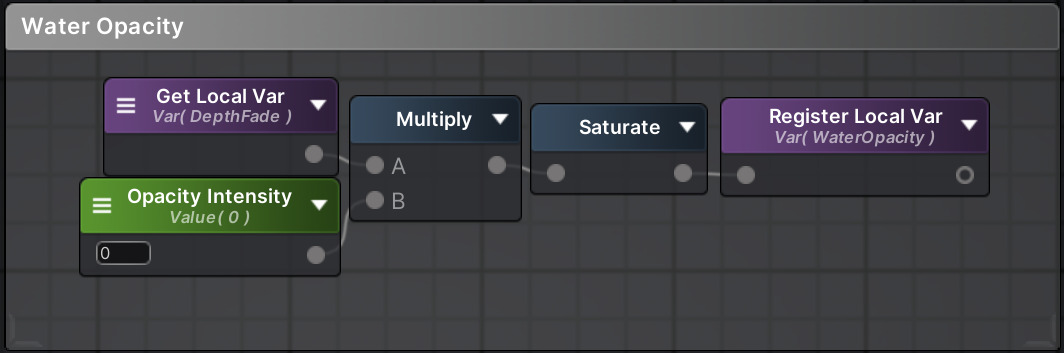
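A Fresnel term of this kind is often written in the empirical form `bias + scale * (1 - N·V)^power`. The Python sketch below uses that form as an assumption; the parameter names and default values are illustrative and are not read from the graph.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fresnel(normal, view_dir, bias=0.0, scale=1.0, power=5.0):
    """Empirical Fresnel factor: 0 when looking straight down, 1 at grazing angles."""
    n_dot_v = min(max(dot(normal, view_dir), 0.0), 1.0)
    return bias + scale * (1.0 - n_dot_v) ** power


def mix(color_a, color_b, w):
    """Per-channel linear blend, used here to mix the water color with the shoreline color."""
    return tuple(a + w * (b - a) for a, b in zip(color_a, color_b))


if __name__ == "__main__":
    up = (0.0, 1.0, 0.0)
    print(fresnel(up, (0.0, 1.0, 0.0)))  # looking straight down
    print(fresnel(up, (1.0, 0.0, 0.0)))  # looking horizontally
```

The factor can then be used as the blend weight between the depth-based water color and the shoreline color.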

Opacity

- Idea: control opacity by depth

- Implementation

Surface ripples

- Idea: use a normal map to fake the bumpiness. To keep the ripples from looking too artificial, sample two normal maps and blend them.

- Implementation
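One common way to combine two tangent-space normals is the "whiteout" style blend: add the xy components, multiply the z components, and renormalize. The Python sketch below shows that formula; whether the graph uses exactly this blend is an assumption on my part.

```python
import math


def blend_normals(n1, n2):
    """Whiteout-style blend of two tangent-space normals (x, y, z)."""
    x = n1[0] + n2[0]
    y = n1[1] + n2[1]
    z = n1[2] * n2[2]
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


if __name__ == "__main__":
    flat = (0.0, 0.0, 1.0)
    tilted_x = (0.6, 0.0, 0.8)
    tilted_y = (0.0, 0.6, 0.8)
    print(blend_normals(flat, tilted_x))      # blending with a flat normal keeps the detail
    print(blend_normals(tilted_x, tilted_y))  # both tilts survive in the result
```

In a water shader the two normal maps are typically sampled with different UV scales and scroll directions before being blended, which is what breaks up the visible repetition.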

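Specular highlight

- Idea: Blinn-Phong

- Implementation

Light Hardness controls how soft or hard the highlight is; the output of step is the hardest possible highlight.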
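The sketch below illustrates a Blinn-Phong term followed by a hardness control in Python. The exact node setup is not shown in the text, so the blend `lerp(spec, step(threshold, spec), hardness)` and the `threshold` and `shininess` parameters are assumptions made for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def blinn_phong(normal, light_dir, view_dir, shininess):
    """Classic Blinn-Phong: (N . H)^shininess with H the half vector of L and V."""
    half_vec = normalize(tuple(l + v for l, v in zip(light_dir, view_dir)))
    return max(dot(normal, half_vec), 0.0) ** shininess


def stylized_specular(spec, threshold, hardness):
    """hardness = 0 keeps the smooth highlight, hardness = 1 gives the hard step() result."""
    hard = 1.0 if spec >= threshold else 0.0  # step(threshold, spec)
    return spec + hardness * (hard - spec)    # lerp(spec, hard, hardness)


if __name__ == "__main__":
    spec = blinn_phong((0.0, 1.0, 0.0), (0.0, 1.0, 0.0), (0.0, 1.0, 0.0), 32.0)
    print(spec, stylized_specular(0.3, 0.5, 0.5))
```

Intermediate hardness values give a highlight whose edge sits somewhere between the smooth falloff and the binary cut-off.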

- Result

Refraction

- Idea: distort the normalized screen-space UV (range 0 to 1)

- Implementation

- Result

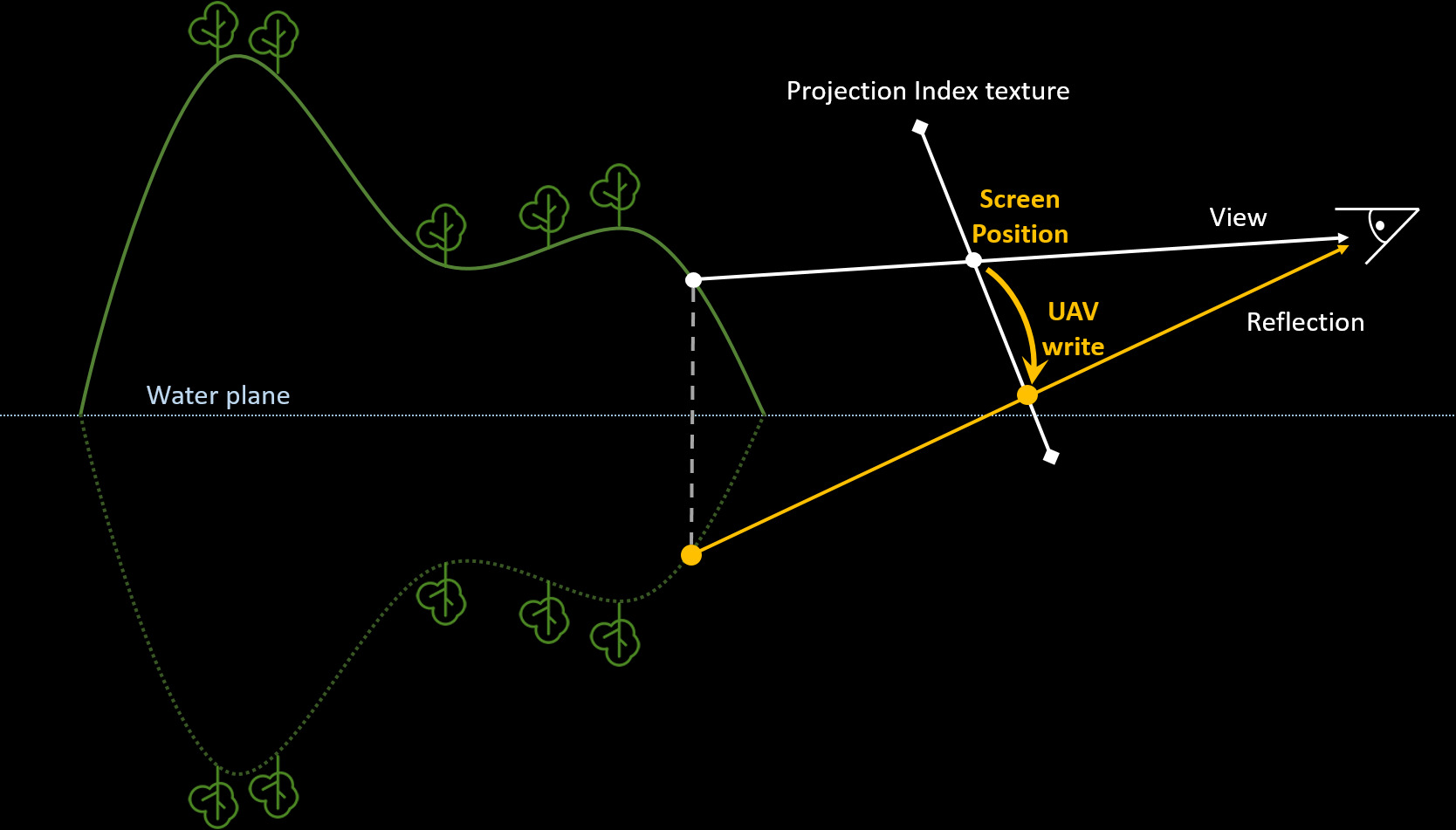

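Reflection

- Planar reflection renders the scene a second time with a camera mirrored about the reflection plane, writes the result to a RenderTexture, and composites it onto the plane. The result is very accurate, but the whole scene has to be rendered again, so the cost is considerable and the technique is better suited to PC; with several mirror surfaces the cost becomes unacceptable. I therefore chose screen space planar reflection (SSPR), which is mobile friendly. It computes the mirror reflection in screen space at very low cost, but it is less accurate: it can only reflect pixels that are on screen, so artifacts show up easily. For a water surface, SSPR is good enough.

- Implementation

- Because SSPR works in screen space, it can be treated as a post-processing technique. The Volume code is:

```csharp
using System;
using UnityEngine;
using UnityEngine.Rendering;

namespace UnityEditor.Rendering.Universal
{
    [Serializable]
    public class SSPRVolume : VolumeComponent
    {
        [Tooltip("Enable SSPR")]
        public BoolParameter m_Enable = new BoolParameter(false);

        [Tooltip("Enable HDR")]
        public BoolParameter m_EnableHDR = new BoolParameter(false);

        [Tooltip("Resolution of the reflection RT")]
        public ClampedIntParameter m_RTSize = new ClampedIntParameter(512, 128, 1024, false);

        [Tooltip("Height of the reflection plane")]
        public FloatParameter m_ReflectPlaneHeight = new FloatParameter(0.5f, false);

        [Tooltip("Fade the reflection out toward the screen edges by distance")]
        public ClampedFloatParameter m_FadeOutEdge = new ClampedFloatParameter(0.5f, 0f, 1f, false);

        [Tooltip("Downsample factor for the blur")]
        public ClampedIntParameter m_Downsample = new ClampedIntParameter(1, 1, 5);

        [Tooltip("Number of blur iterations; higher gives a better result")]
        public ClampedIntParameter m_PassLoop = new ClampedIntParameter(1, 1, 5);

        [Tooltip("Blur intensity")]
        public ClampedFloatParameter m_BlurIntensity = new ClampedFloatParameter(1, 0, 10);

        public bool IsActive() => m_Enable.value;
        public bool IsTileCompatible() => false;
    }
}
```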

- Compute shader

- Parameters

```hlsl
#define THREADCOUNTX 8
#define THREADCOUNTY 8
#define THREADCOUNTZ 1

RWTexture2D<half4> _ResultRT;        // reflected color RT
RWTexture2D<half>  _ResultDepthRT;   // reflected depth
Texture2D<float4>  _ScreenColorTex;  // scene color
Texture2D<float4>  _ScreenDepthTex;  // scene depth

float2 _RTSize;              // resolution of the reflection RT
float  _ReflectPlaneHeight;  // height of the reflection plane (compared against world-space y in CSMain)
float  _FadeOutEdge;         // how strongly the reflection fades at the screen edges
float  _BlurIntensity;       // Dual Blur intensity

SamplerState PointClampSampler;   // point sampling
SamplerState LinearClampSampler;  // linear sampling
```

- Initialization

Clear the color RT and the depth RT to 0.

```hlsl
[numthreads(THREADCOUNTX, THREADCOUNTY, THREADCOUNTZ)]
void ClearRT(uint3 id : SV_DispatchThreadID)
{
    _ResultRT[id.xy] = half4(0.f, 0.f, 0.f, 0.f);
    _ResultDepthRT[id.xy] = 0.f; // single channel; 0 is the far plane under reversed Z
}
```

- SSPR computation

- Reconstruct the world position

```hlsl
[numthreads(THREADCOUNTX, THREADCOUNTY, THREADCOUNTZ)]
void CSMain(uint3 id : SV_DispatchThreadID)
{
    float2 uvSS = id.xy / _RTSize; // [0, RTSize - 1] -> screen uv in [0, 1]
    float posNDCZ = _ScreenDepthTex.SampleLevel(PointClampSampler, uvSS, 0.f).r; // raw depth
    float3 absoluteWolrdPos = ComputeWorldSpacePosition(uvSS, posNDCZ, UNITY_MATRIX_I_VP); // reconstructed world position
}
```

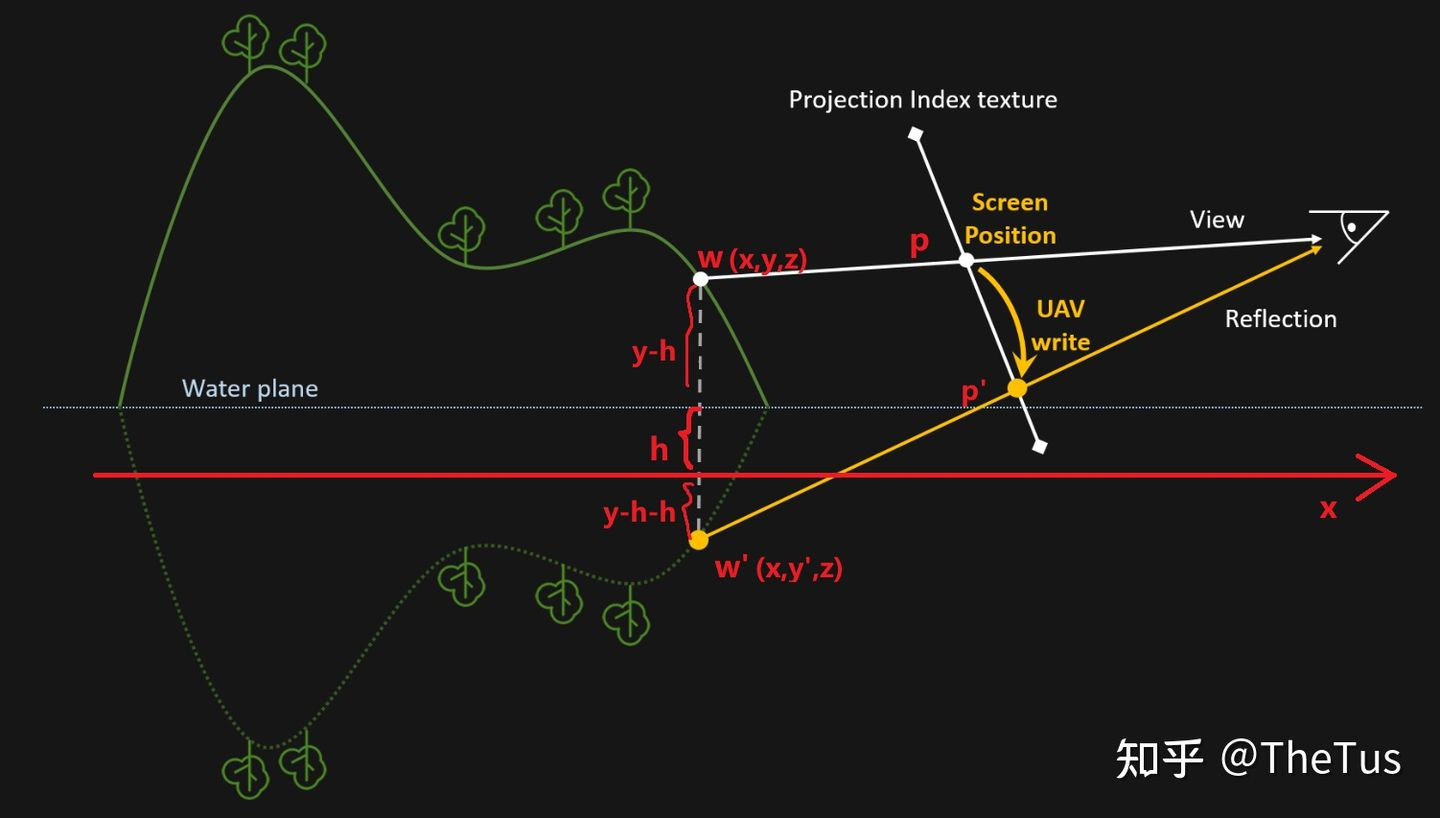

- Mirror the world position about the reflection plane

```hlsl
if (absoluteWolrdPos.y < _ReflectPlaneHeight) return; // discard pixels below the plane

float3 reflectPosWS = absoluteWolrdPos;
// Subtract _ReflectPlaneHeight so the flip (negation) happens about y = 0, then add it back
reflectPosWS.y = -(reflectPosWS.y - _ReflectPlaneHeight) + _ReflectPlaneHeight;
```

- Build and test the screen-space UV

```hlsl
// The reprojected position can fall outside the screen, so it has to be tested
float4 reflectPosCS = mul(UNITY_MATRIX_VP, float4(reflectPosWS, 1.f)); // posWS -> posCS
float2 reflectPosNDCxy = reflectPosCS.xy / reflectPosCS.w;             // posCS -> posNDC
if (abs(reflectPosNDCxy.x) > 1.f || abs(reflectPosNDCxy.y) > 1.f) return; // on screen only if NDC xy is within [-1, 1]

float2 reflectPosSSxy = reflectPosNDCxy * 0.5 + 0.5; // NDC -> screen uv in [0, 1]

// On D3D-style platforms the UV origin is at the top
#if defined(UNITY_UV_STARTS_AT_TOP)
reflectPosSSxy.y = 1 - reflectPosSSxy.y;
#endif

uint2 reflectSSUVID = reflectPosSSxy * _RTSize; // pixel index in the reflection RT for the mirrored position
```
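The mirroring and the NDC-to-pixel mapping can be checked on the CPU with a few lines of Python. This is only a sketch of the arithmetic: the projection itself is left out, the function names are hypothetical, and the final clamp to `size - 1` is my own addition to keep the index in range when NDC is exactly 1.

```python
def mirror_y(y, plane_height):
    """Mirror a world-space height about the plane: -(y - h) + h = 2h - y."""
    return -(y - plane_height) + plane_height


def ndc_to_pixel(ndc_x, ndc_y, rt_width, rt_height, uv_starts_at_top=True):
    """Map NDC xy in [-1, 1] to an integer pixel index, or None when off screen."""
    if abs(ndc_x) > 1.0 or abs(ndc_y) > 1.0:
        return None
    u = ndc_x * 0.5 + 0.5
    v = ndc_y * 0.5 + 0.5
    if uv_starts_at_top:  # D3D-style convention: v = 0 is the top row
        v = 1.0 - v
    x = min(int(u * rt_width), rt_width - 1)
    y = min(int(v * rt_height), rt_height - 1)
    return (x, y)


if __name__ == "__main__":
    print(mirror_y(3.0, 0.5))                 # a point 2.5 above the plane ends up 2.5 below it
    print(ndc_to_pixel(0.0, 0.0, 512, 256))   # screen center
    print(ndc_to_pixel(1.5, 0.0, 512, 256))   # off screen
```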

- Output

```hlsl
_ResultRT[reflectSSUVID] = float4(_ScreenColorTex.SampleLevel(LinearClampSampler, uvSS, 0).rgb, 1);
```

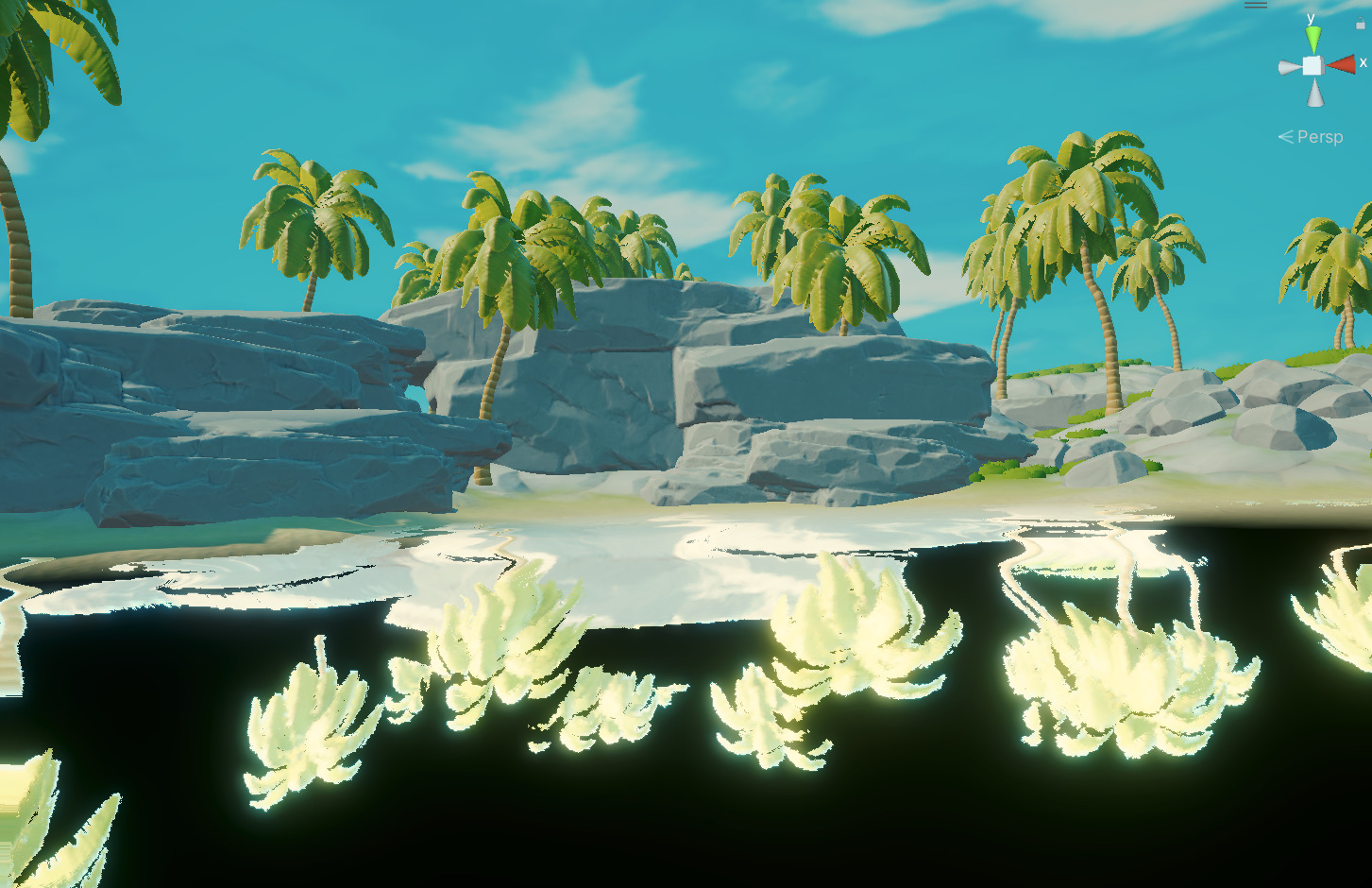

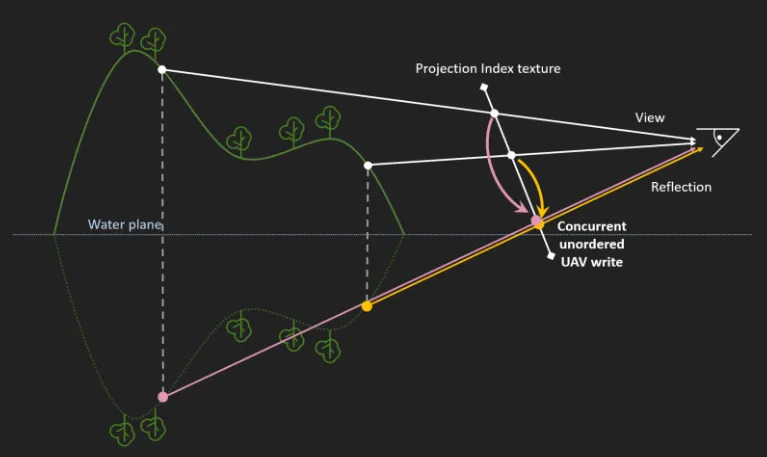

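As the figures show, there is an occlusion problem: reflections of different objects are drawn in the wrong order. It occurs because two different source pixels can land on the same UV after being mirrored.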

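- Depth order correction

A depth buffer is the obvious fix: only the pixel with the smaller depth is written (on D3D platforms, where Z is reversed, the pixel with the larger depth is written instead). As a side effect the skybox can no longer be reflected, so it has to be merged back later in the pixel shader.

```hlsl
#if defined(UNITY_REVERSED_Z)
if (reflectPosCS.z / reflectPosCS.w <= _ResultDepthRT[reflectSSUVID]) return;
#else
if (reflectPosCS.z / reflectPosCS.w >= _ResultDepthRT[reflectSSUVID]) return;
#endif
_ResultDepthRT[reflectSSUVID] = reflectPosCS.z / reflectPosCS.w;
```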
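The following Python sketch models the depth test as a sequential scatter: several source pixels may target the same reflection pixel, and only the nearest one is kept. It is a simplified CPU illustration with hypothetical names; it does not model concurrent GPU threads writing to the same pixel.

```python
def scatter_with_depth_test(samples, width, height, reversed_z=True):
    """samples: iterable of ((x, y), depth, color). Keeps the nearest sample per target pixel."""
    far = 0.0 if reversed_z else 1.0  # value the depth buffer is cleared to
    depth = [[far] * width for _ in range(height)]
    color = [[None] * width for _ in range(height)]
    for (x, y), z, c in samples:
        # Reversed Z: larger value is nearer. Conventional Z: smaller value is nearer.
        nearer = z > depth[y][x] if reversed_z else z < depth[y][x]
        if not nearer:
            continue
        depth[y][x] = z
        color[y][x] = c
    return color, depth


if __name__ == "__main__":
    # Three source pixels that all land on reflection pixel (1, 0)
    samples = [((1, 0), 0.2, "a"), ((1, 0), 0.8, "b"), ((1, 0), 0.5, "c")]
    color, depth = scatter_with_depth_test(samples, width=2, height=1)
    print(color[0][1], depth[0][1])
```

With the test in place the result no longer depends on the order in which the colliding samples arrive.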

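- Missing edges

At some view angles the information needed for the reflection lies outside the rendered RT, which leaves a lot of blank pixels. There are two ways to deal with this: stretching the UVs, or a 2D SDF.

For performance reasons I use the SDF. The idea is simply to use the SDF value as the opacity.

```hlsl
// Signed distance from a point in [-1, 1]^2 to the screen edge (negative inside the screen)
float SDF(float2 pos)
{
    float2 distance = abs(pos) - float2(1, 1);
    return length(max(0.f, distance)) + min(max(distance.x, distance.y), 0.f);
}

// Inside CSMain:
float mask = SDF(uvSS * 2.f - 1.f);
mask = smoothstep(0.f, _FadeOutEdge, abs(mask)); // smooth the transition so the edge is not harsh
_ResultRT[reflectSSUVID] = float4(_ScreenColorTex.SampleLevel(LinearClampSampler, uvSS, 0).rgb, mask);
```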
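To make the edge mask concrete, here is a small Python sketch of a box SDF combined with `smoothstep`. It uses the standard signed box distance and assumes `fade` is greater than zero; the names are hypothetical.

```python
import math


def box_sdf(px, py):
    """Signed distance from (px, py) to the square [-1, 1]^2 (negative inside)."""
    dx, dy = abs(px) - 1.0, abs(py) - 1.0
    outside = math.hypot(max(dx, 0.0), max(dy, 0.0))
    inside = min(max(dx, dy), 0.0)
    return outside + inside


def smoothstep(edge0, edge1, x):
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def edge_mask(u, v, fade):
    """Opacity for a source pixel at screen uv: 0 at the screen border, 1 once `fade` away from it."""
    d = box_sdf(u * 2.0 - 1.0, v * 2.0 - 1.0)
    return smoothstep(0.0, fade, abs(d))


if __name__ == "__main__":
    for u in (0.5, 0.875, 1.0):
        print(u, edge_mask(u, 0.5, fade=0.5))
```

Pixels sampled near the screen border get a low alpha, so the reflection fades out instead of ending in a hard line.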

- Filling holes

The reflected plane ends up with many black holes. This is because the reprojection goes through perspective projection (near objects are larger, far objects smaller), which shifts the final pixel indices: a pixel that should have been written to (1,1) may be written to (1,2) instead, leaving (1,1) empty.

There are two ways to detect holes: by pixel luminance or by pixel alpha. I chose alpha.

```hlsl
[numthreads(THREADCOUNTX, THREADCOUNTY, THREADCOUNTZ)]
void FillHoles(uint3 id : SV_DispatchThreadID)
{
    int2 p = int2(id.xy);

    float4 center = _ResultRT[p];
    float4 top    = _ResultRT[p + int2(0, 1)];
    float4 bottom = _ResultRT[p + int2(0, -1)];
    float4 right  = _ResultRT[p + int2(1, 0)];
    float4 left   = _ResultRT[p + int2(-1, 0)];

    // Find a pixel that is not a hole
    float4 best = center;
    best = top.a    > best.a + 0.5 ? top    : best;
    best = bottom.a > best.a + 0.5 ? bottom : best;
    best = right.a  > best.a + 0.5 ? right  : best;
    best = left.a   > best.a + 0.5 ? left   : best;

    // Fill the holes
    _ResultRT[p]                = best.a > center.a + 0.5 ? best : center;
    _ResultRT[p + int2(0, 1)]   = best.a > top.a + 0.5    ? best : top;
    _ResultRT[p + int2(0, -1)]  = best.a > bottom.a + 0.5 ? best : bottom;
    _ResultRT[p + int2(1, 0)]   = best.a > right.a + 0.5  ? best : right;
    _ResultRT[p + int2(-1, 0)]  = best.a > left.a + 0.5   ? best : left;
}
```
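The alpha-based hole test can be tried out on a tiny grid in Python. Note that this sketch is a simplified gather variant: each pixel reads its four neighbors from the input and writes only itself to a separate output, whereas the kernel above also writes into its neighbors in place. The function name is hypothetical.

```python
def fill_holes(grid):
    """grid[y][x] is an (r, g, b, a) tuple. A pixel is replaced by a neighbor whose alpha exceeds its own by more than 0.5."""
    height, width = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for y in range(height):
        for x in range(width):
            best = grid[y][x]
            for dx, dy in ((0, 1), (0, -1), (1, 0), (-1, 0)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height and grid[ny][nx][3] > best[3] + 0.5:
                    best = grid[ny][nx]
            out[y][x] = best
    return out


if __name__ == "__main__":
    red, hole, green = (1, 0, 0, 1.0), (0, 0, 0, 0.0), (0, 1, 0, 1.0)
    print(fill_holes([[red, hole, green]]))
```

The 0.5 margin means only clearly empty pixels are overwritten; pixels that already carry a faded reflection are left alone.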

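- Flickering

I have not found a good solution. For water, refraction can be used to hide it.

- Skybox cut-off

The current implementation has one more problem: when the camera looks at the water from a very low angle, a cut-off skybox is drawn.

- Solution: skip the skybox

```hlsl
void CSMain(uint3 id : SV_DispatchThreadID)
{
    float2 uvSS = id.xy / _RTSize; // [0, RTSize - 1] -> screen uv in [0, 1]
    float posNDCZ = _ScreenDepthTex.SampleLevel(PointClampSampler, uvSS, 0.f).r; // raw depth

    // Pixels at (almost) the far plane belong to the skybox; skip them
    if (Linear01Depth(posNDCZ, _ZBufferParams) > 0.99) return;
}
```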

- Render Feature

A reflection should look slightly blurred, so Dual Blur is applied here.

```csharp
using System;
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;
using UnityEditor.Rendering.Universal; // namespace in which SSPRVolume is declared

public class SSPRPassFeature : ScriptableRendererFeature
{
    [System.Serializable]
    public class PassSetting
    {
        [Tooltip("Name shown in the Frame Debugger")]
        public readonly string m_ProfilerTag = "SSPR Pass";

        [Tooltip("Where the pass is executed")]
        public RenderPassEvent passEvent = RenderPassEvent.AfterRenderingTransparents;

        [Tooltip("Compute shader")]
        public ComputeShader CS = null;

        [Tooltip("Pixel shader")]
        public Material material = null;
    }

    class SSPRRenderPass : ScriptableRenderPass
    {
        // Profiler tag shown in the Frame Debugger
        private const string m_ProfilerTag = "SSPR Pass";

        // Stores the pass settings
        private PassSetting m_passSetting;
        private SSPRVolume m_SSPRVolume;

        private ComputeShader m_CS;
        private Material m_Material;

        // Resolution of the reflection RT
        private int RTSizeWidth;
        private int RTSizeHeight;

        struct RTID
        {
            public static RenderTargetIdentifier m_ResultRT;
            public static RenderTargetIdentifier m_ResultDepthRT;
            public static RenderTargetIdentifier m_TempRT;
        }

        struct ShaderID
        {
            public static readonly int RTSizeID = Shader.PropertyToID("_RTSize");
            public static readonly int ReflectPlaneHeightID = Shader.PropertyToID("_ReflectPlaneHeight");
            public static readonly int FadeOutEdgeID = Shader.PropertyToID("_FadeOutEdge");
            public static readonly int ReflectColorRTID = Shader.PropertyToID("_ResultRT");
            public static readonly int ResultDepthRTID = Shader.PropertyToID("_ResultDepthRT");
            public static readonly int SceneColorRTID = Shader.PropertyToID("_ScreenColorTex");
            public static readonly int SceneDepthRTID = Shader.PropertyToID("_ScreenDepthTex");
            public static readonly int BlurIntensityID = Shader.PropertyToID("_BlurIntensity");
            public static readonly int TempRTID = Shader.PropertyToID("_TempRT");
        }

        private struct BlurLevelShaderID
        {
            internal int downLevelID;
            internal int upLevelID;
        }

        private BlurLevelShaderID[] blurLevel;
        private static int m_maxBlurLevel = 16;

        struct Dispatch
        {
            // Number of thread groups
            public static int ThreadGroupCountX;
            public static int ThreadGroupCountY;
            public static int ThreadGroupCountZ;

            // Number of threads per group
            public static int ThreadCountX;
            public static int ThreadCountY;
            public static int ThreadCountZ;
        }

        struct Kernal
        {
            public static int ClearRT;
            public static int CSMain;
            public static int FillHoles;
        }

        // Caches the settings and looks up the compute kernels
        public SSPRRenderPass(SSPRPassFeature.PassSetting passSetting)
        {
            this.m_passSetting = passSetting;
            renderPassEvent = m_passSetting.passEvent;

            this.m_CS = m_passSetting.CS;
            this.m_Material = m_passSetting.material;

            Kernal.ClearRT = m_CS.FindKernel("ClearRT");
            Kernal.CSMain = m_CS.FindKernel("CSMain");
            Kernal.FillHoles = m_CS.FindKernel("FillHoles");
        }

        public override void OnCameraSetup(CommandBuffer cmd, ref RenderingData renderingData)
        {
            // Fetch the custom volume
            var POSTStack = VolumeManager.instance.stack;
            m_SSPRVolume = POSTStack.GetComponent<SSPRVolume>();

            float aspect = (float)Screen.height / Screen.width; // screen aspect ratio (height / width)

            // Must match the thread counts declared in the compute shader
            Dispatch.ThreadCountX = 8;
            Dispatch.ThreadCountY = 8;
            Dispatch.ThreadCountZ = 1;

            // One thread per pixel of the RT
            Dispatch.ThreadGroupCountY = m_SSPRVolume.m_RTSize.value / Dispatch.ThreadCountY;   // from the RT height
            Dispatch.ThreadGroupCountX = Mathf.RoundToInt(Dispatch.ThreadGroupCountY / aspect); // from the RT width
            Dispatch.ThreadGroupCountZ = 1;

            // Resolution of the reflection RT
            this.RTSizeWidth = Dispatch.ThreadGroupCountX * Dispatch.ThreadCountX;
            this.RTSizeHeight = Dispatch.ThreadGroupCountY * Dispatch.ThreadCountY;

            // Alpha is used as the mask
            RenderTextureDescriptor descriptor = new RenderTextureDescriptor(this.RTSizeWidth, this.RTSizeHeight, RenderTextureFormat.ARGB32);
            if (m_SSPRVolume.m_EnableHDR.value == true)
            {
                // 16 bits per channel, supports HDR
                descriptor.colorFormat = RenderTextureFormat.ARGBHalf;
            }
            descriptor.enableRandomWrite = true; // required for a D3D UAV (unordered access view)

            // Allocate the RTs
            cmd.GetTemporaryRT(ShaderID.ReflectColorRTID, descriptor);
            RTID.m_ResultRT = new RenderTargetIdentifier(ShaderID.ReflectColorRTID);

            descriptor.colorFormat = RenderTextureFormat.R16; // the depth RT only needs the r channel
            cmd.GetTemporaryRT(ShaderID.ResultDepthRTID, descriptor);
            RTID.m_ResultDepthRT = new RenderTargetIdentifier(ShaderID.ResultDepthRTID);

            blurLevel = new BlurLevelShaderID[m_maxBlurLevel];
            for (int t = 0; t < m_maxBlurLevel; ++t)
            {
                blurLevel[t] = new BlurLevelShaderID
                {
                    downLevelID = Shader.PropertyToID("_BlurMipDown" + t),
                    upLevelID = Shader.PropertyToID("_BlurMipUp" + t)
                };
            }
        }

        public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
        {
            if (m_CS == null)
            {
                Debug.LogError("Custom:SSPR compute shader missing");
                return; // nothing can be dispatched without the compute shader
            }

            if (m_SSPRVolume.m_Enable.value == true)
            {
                // Grab a command buffer. The actual execution of the pass goes inside a profiling scope
                CommandBuffer cmd = CommandBufferPool.Get();

                using (new ProfilingScope(cmd, new ProfilingSampler(m_ProfilerTag)))
                {
                    // Clear
                    cmd.SetComputeTextureParam(this.m_CS, Kernal.ClearRT, ShaderID.ReflectColorRTID, RTID.m_ResultRT);
                    cmd.SetComputeTextureParam(this.m_CS, Kernal.ClearRT, ShaderID.ResultDepthRTID, RTID.m_ResultDepthRT);
                    cmd.DispatchCompute(this.m_CS, Kernal.ClearRT, Dispatch.ThreadGroupCountX, Dispatch.ThreadGroupCountY, Dispatch.ThreadGroupCountZ);

                    // Reflection
                    cmd.SetComputeVectorParam(this.m_CS, ShaderID.RTSizeID, new Vector2(this.RTSizeWidth, this.RTSizeHeight));
                    cmd.SetComputeFloatParam(this.m_CS, ShaderID.ReflectPlaneHeightID, m_SSPRVolume.m_ReflectPlaneHeight.value);
                    cmd.SetComputeFloatParam(this.m_CS, ShaderID.FadeOutEdgeID, m_SSPRVolume.m_FadeOutEdge.value);
                    cmd.SetComputeTextureParam(this.m_CS, Kernal.CSMain, ShaderID.ReflectColorRTID, RTID.m_ResultRT);
                    cmd.SetComputeTextureParam(this.m_CS, Kernal.CSMain, ShaderID.ResultDepthRTID, RTID.m_ResultDepthRT);
                    cmd.SetComputeTextureParam(this.m_CS, Kernal.CSMain, ShaderID.SceneColorRTID, new RenderTargetIdentifier("_CameraOpaqueTexture"));
                    cmd.SetComputeTextureParam(this.m_CS, Kernal.CSMain, ShaderID.SceneDepthRTID, new RenderTargetIdentifier("_CameraDepthTexture"));
                    cmd.DispatchCompute(this.m_CS, Kernal.CSMain, Dispatch.ThreadGroupCountX, Dispatch.ThreadGroupCountY, Dispatch.ThreadGroupCountZ);

                    // Fill holes
                    cmd.SetComputeTextureParam(this.m_CS, Kernal.FillHoles, ShaderID.ReflectColorRTID, RTID.m_ResultRT);
                    cmd.DispatchCompute(this.m_CS, Kernal.FillHoles, Dispatch.ThreadGroupCountX, Dispatch.ThreadGroupCountY, Dispatch.ThreadGroupCountZ);
                }

                if (m_Material == null)
                {
                    Debug.LogError("Custom:SSPR shader missing");
                }
                else
                {
                    m_Material.SetFloat(ShaderID.BlurIntensityID, m_SSPRVolume.m_BlurIntensity.value);

                    RenderTextureDescriptor descriptor = new RenderTextureDescriptor(this.RTSizeWidth, this.RTSizeHeight, RenderTextureFormat.Default);
                    descriptor.width /= m_SSPRVolume.m_Downsample.value;
                    descriptor.height /= m_SSPRVolume.m_Downsample.value;
                    //descriptor.depthBufferBits = 0; // depth is not used, so the precision can be 0
                    cmd.GetTemporaryRT(ShaderID.TempRTID, descriptor);
                    RTID.m_TempRT = new RenderTargetIdentifier(ShaderID.TempRTID);
                    Blit(cmd, RTID.m_ResultRT, RTID.m_TempRT);

                    // Downsample chain
                    RenderTargetIdentifier lastDown = RTID.m_TempRT;
                    for (int i = 0; i < m_SSPRVolume.m_PassLoop.value; ++i)
                    {
                        int midDown = blurLevel[i].downLevelID;
                        int midUp = blurLevel[i].upLevelID;
                        cmd.GetTemporaryRT(midDown, descriptor, FilterMode.Bilinear);
                        cmd.GetTemporaryRT(midUp, descriptor, FilterMode.Bilinear);
                        Blit(cmd, lastDown, midDown, m_Material, 1);
                        lastDown = midDown;

                        descriptor.width = Mathf.Max(descriptor.width / 2, 1);
                        descriptor.height = Mathf.Max(descriptor.height / 2, 1);
                    }

                    // Upsample chain
                    int lastUp = blurLevel[m_SSPRVolume.m_PassLoop.value - 1].downLevelID;
                    for (int i = m_SSPRVolume.m_PassLoop.value - 2; i >= 0; --i)
                    {
                        int midUp = blurLevel[i].upLevelID;
                        cmd.Blit(lastUp, midUp, m_Material, 2);
                        lastUp = midUp;
                    }

                    cmd.Blit(lastUp, RTID.m_ResultRT, m_Material, 2);
                }

                context.ExecuteCommandBuffer(cmd);
                CommandBufferPool.Release(cmd);
            }
        }

        public override void OnCameraCleanup(CommandBuffer cmd)
        {
            if (cmd == null) throw new ArgumentNullException("cmd");

            // Release the RTs allocated in OnCameraSetup
            cmd.ReleaseTemporaryRT(ShaderID.ReflectColorRTID);
            cmd.ReleaseTemporaryRT(ShaderID.ResultDepthRTID);
        }
    }

    public PassSetting m_Setting = new PassSetting();
    SSPRRenderPass m_SSPRPass;

    public override void Create()
    {
        m_SSPRPass = new SSPRRenderPass(m_Setting);
    }

    public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
    {
        // Multiple passes can be queued one after another
        renderer.EnqueuePass(m_SSPRPass);
    }
}
```

- Shader

The final result still has to go through a material (pixel shader), although the material does not need to be assigned to any object.

```hlsl
Shader "URP/reflect"
{
    Properties
    {
        [HideInInspector] _MainTex("", 2D) = "white" {}
    }

    SubShader
    {
        Tags
        {
            "RenderPipeline" = "UniversalRenderPipeline"
            "Queue" = "Overlay"
        }
        Cull Off
        ZWrite Off
        ZTest Always

        HLSLINCLUDE
        #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

        CBUFFER_START(UnityPerMaterial)
        float _BlurIntensity;
        float4 _MainTex_TexelSize;
        CBUFFER_END

        TEXTURE2D(_MainTex);
        SAMPLER(sampler_MainTex);
        TEXTURE2D(_ResultRT);
        sampler LinearClampSampler;

        struct VSInput
        {
            float4 positionOS : POSITION;
            float4 normalOS : NORMAL;
            float2 uv : TEXCOORD;
        };

        struct PSInput
        {
            float4 positionCS : SV_POSITION;
            float2 uv : TEXCOORD;
            float4 uv01 : TEXCOORD1;
            float4 uv23 : TEXCOORD2;
            float4 uv45 : TEXCOORD3;
            float4 uv67 : TEXCOORD4;
            float4 positionSS : TEXCOORD5;
            float3 positionWS : TEXCOORD6;
            float3 viewDirWS : TEXCOORD7;
            float3 normalWS : NORMAL;
        };
        ENDHLSL

        Pass
        {
            Name "Copy SSPR"
            Tags
            {
                "LightMode" = "UniversalForward"
                "RenderType" = "Overlay"
            }

            HLSLPROGRAM
            #pragma vertex VS
            #pragma fragment PS

            PSInput VS(VSInput vsInput)
            {
                PSInput vsOutput = (PSInput)0; // zero the interpolators this pass does not use

                VertexPositionInputs vertexPos = GetVertexPositionInputs(vsInput.positionOS.xyz);
                vsOutput.positionWS = vertexPos.positionWS;
                vsOutput.positionCS = vertexPos.positionCS;
                vsOutput.positionSS = ComputeScreenPos(vsOutput.positionCS);

                VertexNormalInputs vertexNormal = GetVertexNormalInputs(vsInput.normalOS.xyz);
                vsOutput.normalWS = vertexNormal.normalWS;
                return vsOutput;
            }

            half4 PS(PSInput psInput) : SV_Target
            {
                half2 screenUV = psInput.positionSS.xy / psInput.positionSS.w;
                float4 SSPRResult = SAMPLE_TEXTURE2D(_ResultRT, LinearClampSampler, screenUV);
                SSPRResult.xyz *= SSPRResult.w;
                return SSPRResult;
            }
            ENDHLSL
        }

        Pass
        {
            Name "Down Sample"

            HLSLPROGRAM
            #pragma vertex VS
            #pragma fragment PS

            PSInput VS(VSInput vsInput)
            {
                PSInput vsOutput = (PSInput)0;
                vsOutput.positionCS = TransformObjectToHClip(vsInput.positionOS.xyz);

                // On D3D with anti-aliasing enabled, _TexelSize.y becomes negative and the uv
                // has to be flipped (one minus), otherwise the image ends up upside down
                #ifdef UNITY_UV_STARTS_AT_TOP
                if (_MainTex_TexelSize.y < 0)
                    vsInput.uv.y = 1 - vsInput.uv.y;
                #endif

                vsOutput.uv = vsInput.uv;
                vsOutput.uv01.xy = vsInput.uv + float2(1.f, 1.f) * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv01.zw = vsInput.uv + float2(-1.f, -1.f) * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv23.xy = vsInput.uv + float2(1.f, -1.f) * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv23.zw = vsInput.uv + float2(-1.f, 1.f) * _MainTex_TexelSize.xy * _BlurIntensity;
                return vsOutput;
            }

            float4 PS(PSInput psInput) : SV_TARGET
            {
                float4 outputColor = 0.f;
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv.xy) * 0.5;
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.xy) * 0.125;
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.zw) * 0.125;
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.xy) * 0.125;
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.zw) * 0.125;
                return outputColor;
            }
            ENDHLSL
        }

        Pass
        {
            Name "Up Sample"

            HLSLPROGRAM
            #pragma vertex VS
            #pragma fragment PS

            PSInput VS(VSInput vsInput)
            {
                PSInput vsOutput = (PSInput)0;
                vsOutput.positionCS = TransformObjectToHClip(vsInput.positionOS.xyz);

                #ifdef UNITY_UV_STARTS_AT_TOP
                if (_MainTex_TexelSize.y < 0.f)
                    vsInput.uv.y = 1 - vsInput.uv.y;
                #endif

                vsOutput.uv = vsInput.uv;

                // Weight 1/12
                vsOutput.uv01.xy = vsInput.uv + float2(0, 1) * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv01.zw = vsInput.uv + float2(0, -1) * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv23.xy = vsInput.uv + float2(1, 0) * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv23.zw = vsInput.uv + float2(-1, 0) * _MainTex_TexelSize.xy * _BlurIntensity;

                // Weight 1/6
                vsOutput.uv45.xy = vsInput.uv + float2(1, 1) * 0.5 * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv45.zw = vsInput.uv + float2(-1, -1) * 0.5 * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv67.xy = vsInput.uv + float2(1, -1) * 0.5 * _MainTex_TexelSize.xy * _BlurIntensity;
                vsOutput.uv67.zw = vsInput.uv + float2(-1, 1) * 0.5 * _MainTex_TexelSize.xy * _BlurIntensity;
                return vsOutput;
            }

            float4 PS(PSInput psInput) : SV_TARGET
            {
                float4 outputColor = 0.f;
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.xy) * (1.0 / 12.0);
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv01.zw) * (1.0 / 12.0);
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.xy) * (1.0 / 12.0);
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv23.zw) * (1.0 / 12.0);
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv45.xy) * (1.0 / 6.0);
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv45.zw) * (1.0 / 6.0);
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv67.xy) * (1.0 / 6.0);
                outputColor += SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, psInput.uv67.zw) * (1.0 / 6.0);
                return outputColor;
            }
            ENDHLSL
        }
    }
}
```

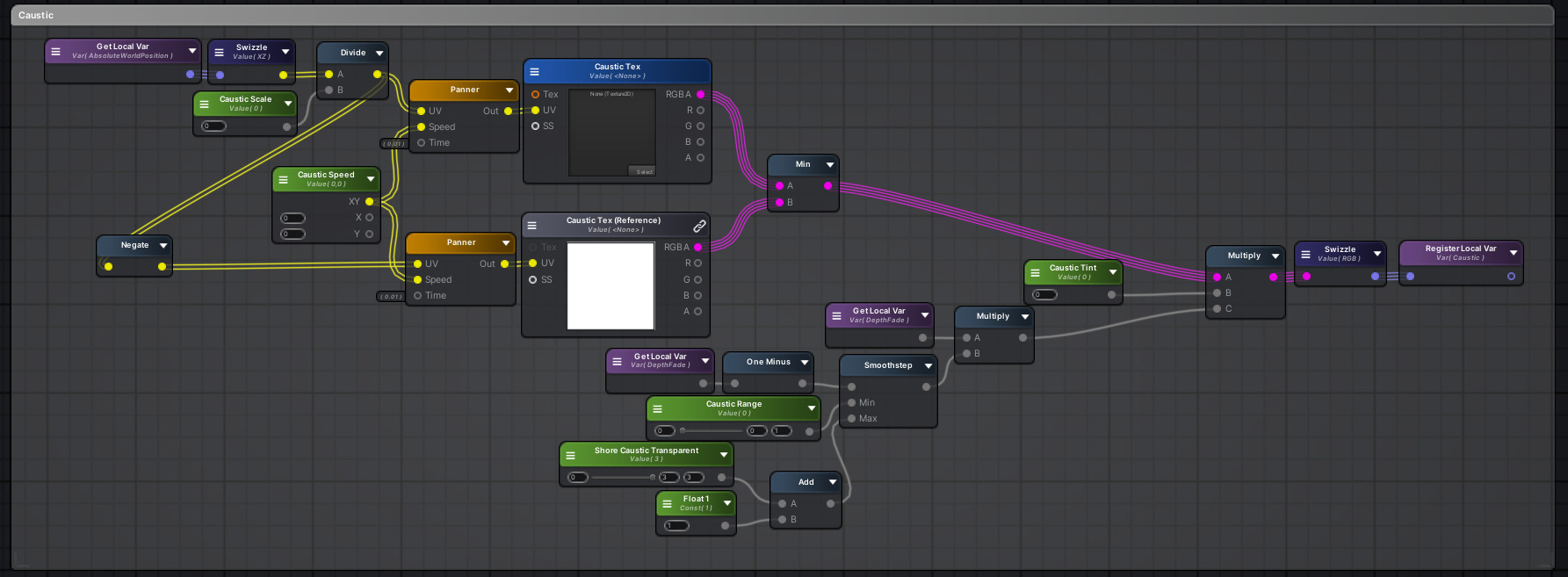

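Caustics

- Idea: I implemented caustics as a post-process in UE before. Here I try a different approach and draw them from the world-space xz coordinates.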

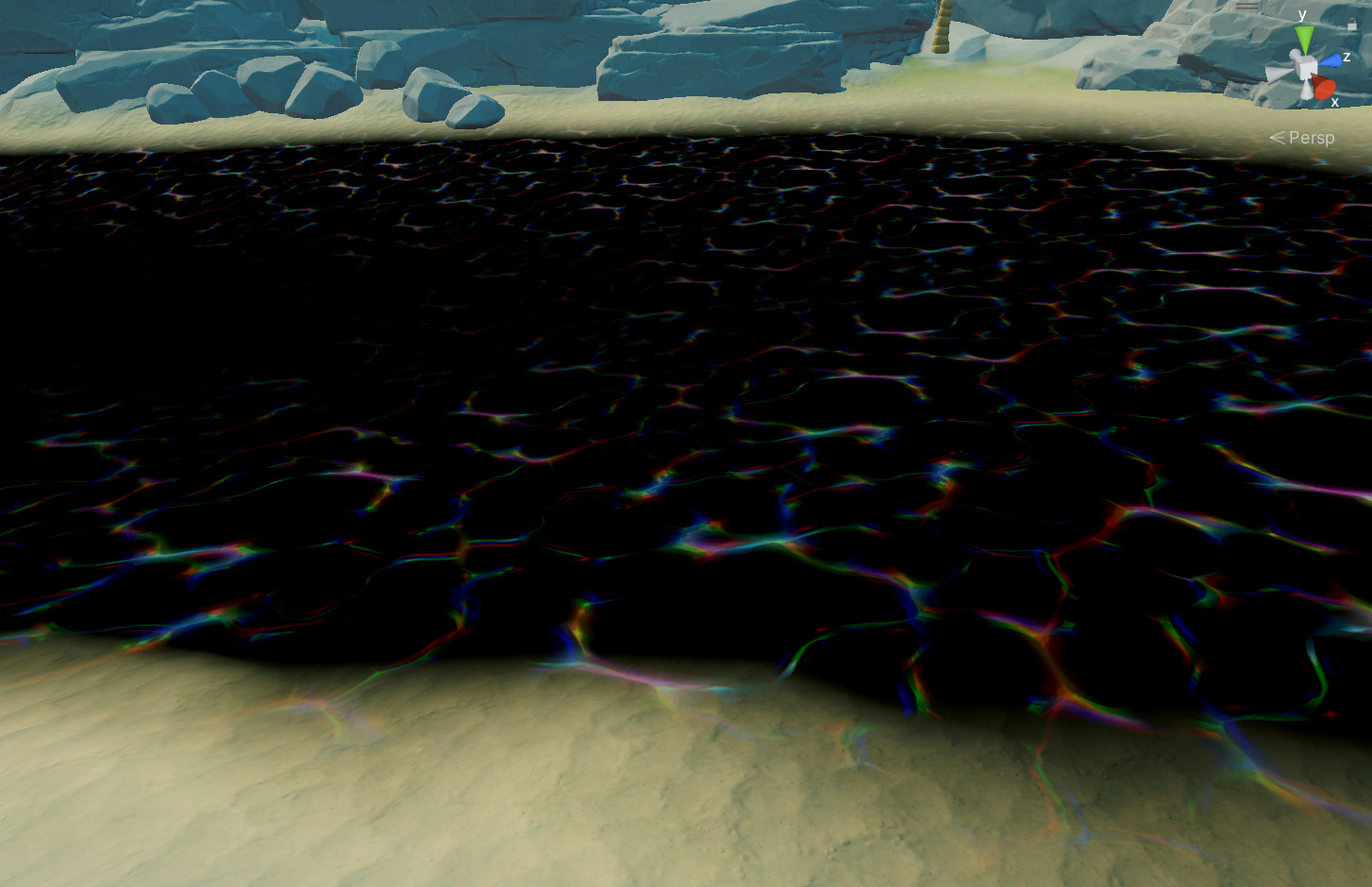

- Implementation

Panner nodes scroll the UVs in opposite directions to simulate the motion of the caustics.

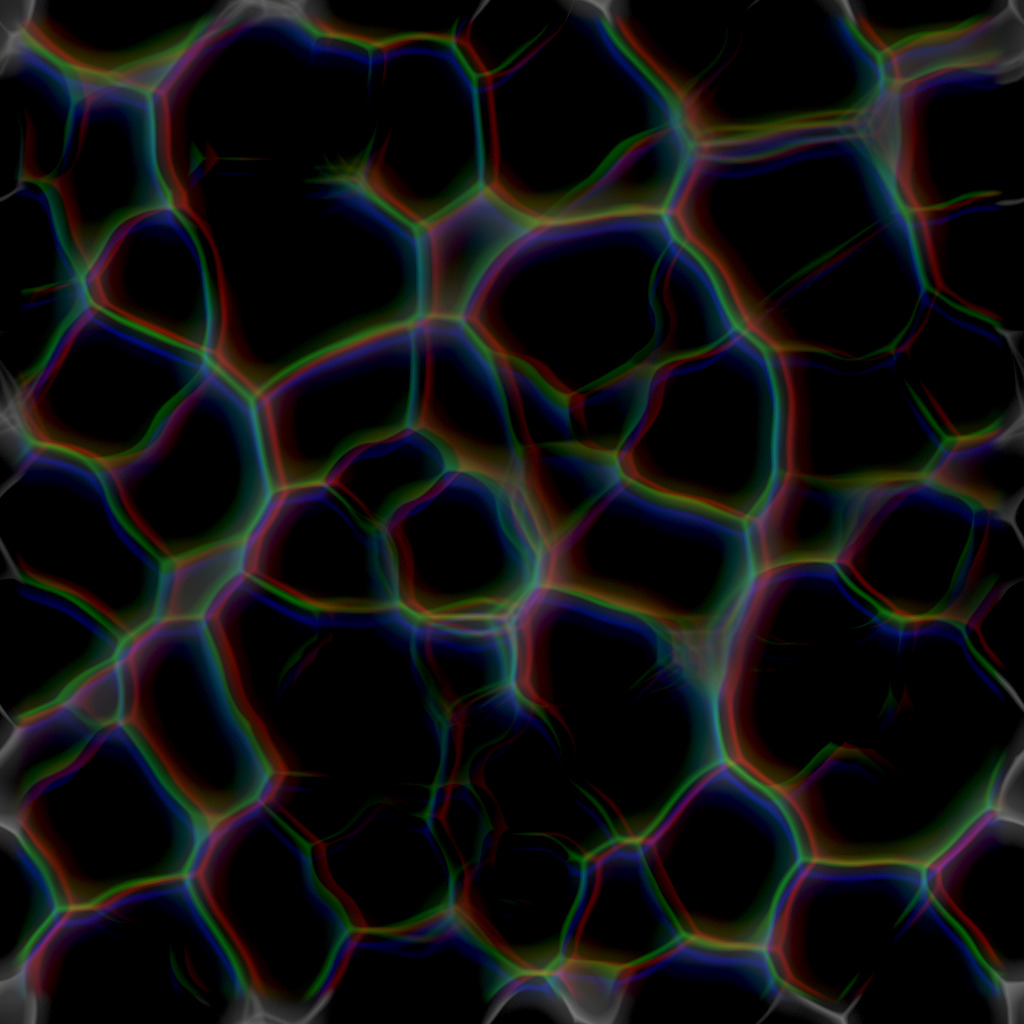

- Texture

- Result

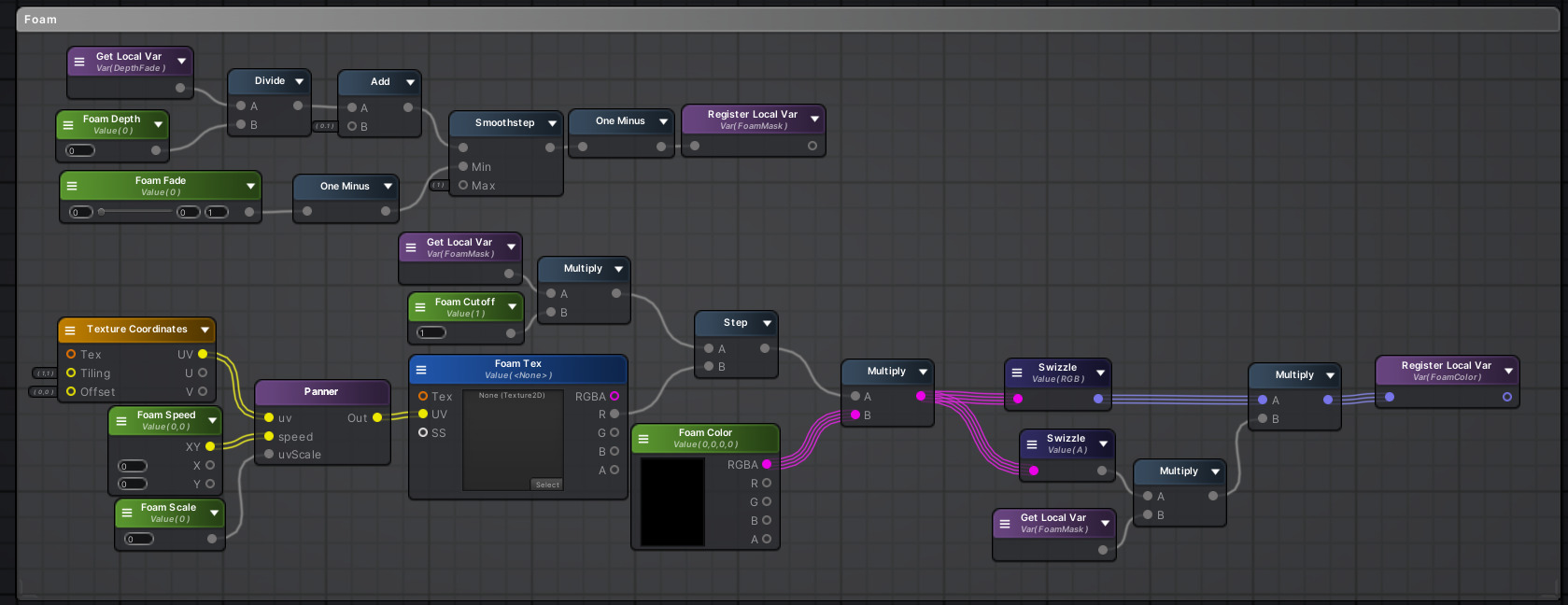

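Foam

- After looking at stylized water in many games, I found that very few implement foam on wave crests; most only implement shoreline foam. I will take the same shortcut and implement only shoreline foam.

- Implementation

FoamMask is derived from depth, and Foam Cutoff controls the extent of the foam region.

- Result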

Wave

- The familiar Gerstner wave. With mobile performance in mind, at most four waves are summed.

- Implementation

```hlsl
float3 GerstnerWave(float3 positionWS, float steepness, float wavelength, float speed, float direction,
                    inout float3 tangentWS, inout float3 binormalWS)
{
    direction = direction * 2 - 1; // remap [0, 1] to [-1, 1]
    float2 d = normalize(float2(cos(3.14 * direction), sin(3.14 * direction)));
    float k = 2 * 3.14 / wavelength;                          // wave number
    float f = k * (dot(d, positionWS.xz) - speed * _Time.y);  // argument of sin/cos
    float a = steepness / k;                                  // amplitude (tied to k so the wave does not loop over itself)

    tangentWS += float3(
        -d.x * d.x * (steepness * sin(f)),
        d.x * (steepness * cos(f)),
        -d.x * d.y * (steepness * sin(f))
    );

    binormalWS += float3(
        -d.x * d.y * (steepness * sin(f)),
        d.y * (steepness * cos(f)),
        -d.y * d.y * (steepness * sin(f))
    );

    return float3(
        d.x * (a * cos(f)),
        a * sin(f),
        d.y * (a * cos(f))
    );
}

float3 GerstnerWave4(float3 positionWS, inout float3 normalWS, float steepness, float waveLength, float speed, float4 direction)
{
    float3 offset = 0.f;
    float3 tangent = float3(1, 0, 0);
    float3 binormal = float3(0, 0, 1);

    offset += GerstnerWave(positionWS, steepness, waveLength, speed, direction.x, tangent, binormal);
    offset += GerstnerWave(positionWS, steepness, waveLength, speed, direction.y, tangent, binormal);
    offset += GerstnerWave(positionWS, steepness, waveLength, speed, direction.z, tangent, binormal);
    offset += GerstnerWave(positionWS, steepness, waveLength, speed, direction.w, tangent, binormal);

    // normalWS is inout so the caller receives the result; this cross order yields an upward normal
    normalWS = normalize(cross(binormal, tangent));

    return offset;
}
```
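To check the wave formula numerically, the displacement part can be evaluated on the CPU. The Python sketch below mirrors the offset computation for a single wave and sums several of them; it omits the tangent and binormal, takes time as an explicit argument, and uses hypothetical function names.

```python
import math


def gerstner_offset(x, z, steepness, wavelength, speed, direction, time):
    """Displacement (dx, dy, dz) of one Gerstner wave at world position (x, z)."""
    angle = math.pi * (direction * 2.0 - 1.0)   # direction in [0, 1] -> angle in [-pi, pi]
    dir_x, dir_z = math.cos(angle), math.sin(angle)
    k = 2.0 * math.pi / wavelength              # wave number
    f = k * (dir_x * x + dir_z * z - speed * time)
    a = steepness / k                           # amplitude
    return (dir_x * a * math.cos(f), a * math.sin(f), dir_z * a * math.cos(f))


def gerstner_sum(x, z, waves, time):
    """Sum the offsets of several waves; each wave is (steepness, wavelength, speed, direction)."""
    total = [0.0, 0.0, 0.0]
    for steepness, wavelength, speed, direction in waves:
        offset = gerstner_offset(x, z, steepness, wavelength, speed, direction, time)
        total = [t + o for t, o in zip(total, offset)]
    return tuple(total)


if __name__ == "__main__":
    # direction = 0.5 points along +x; wavelength = 2*pi gives k = 1
    print(gerstner_offset(0.0, 0.0, 0.5, 2.0 * math.pi, 1.0, 0.5, 0.0))
    print(gerstner_offset(math.pi / 2.0, 0.0, 0.5, 2.0 * math.pi, 1.0, 0.5, 0.0))
```

At phase 0 the vertex is pushed sideways by the full amplitude, and at phase pi/2 it sits at the crest, which is the circular motion that gives Gerstner waves their sharp peaks.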

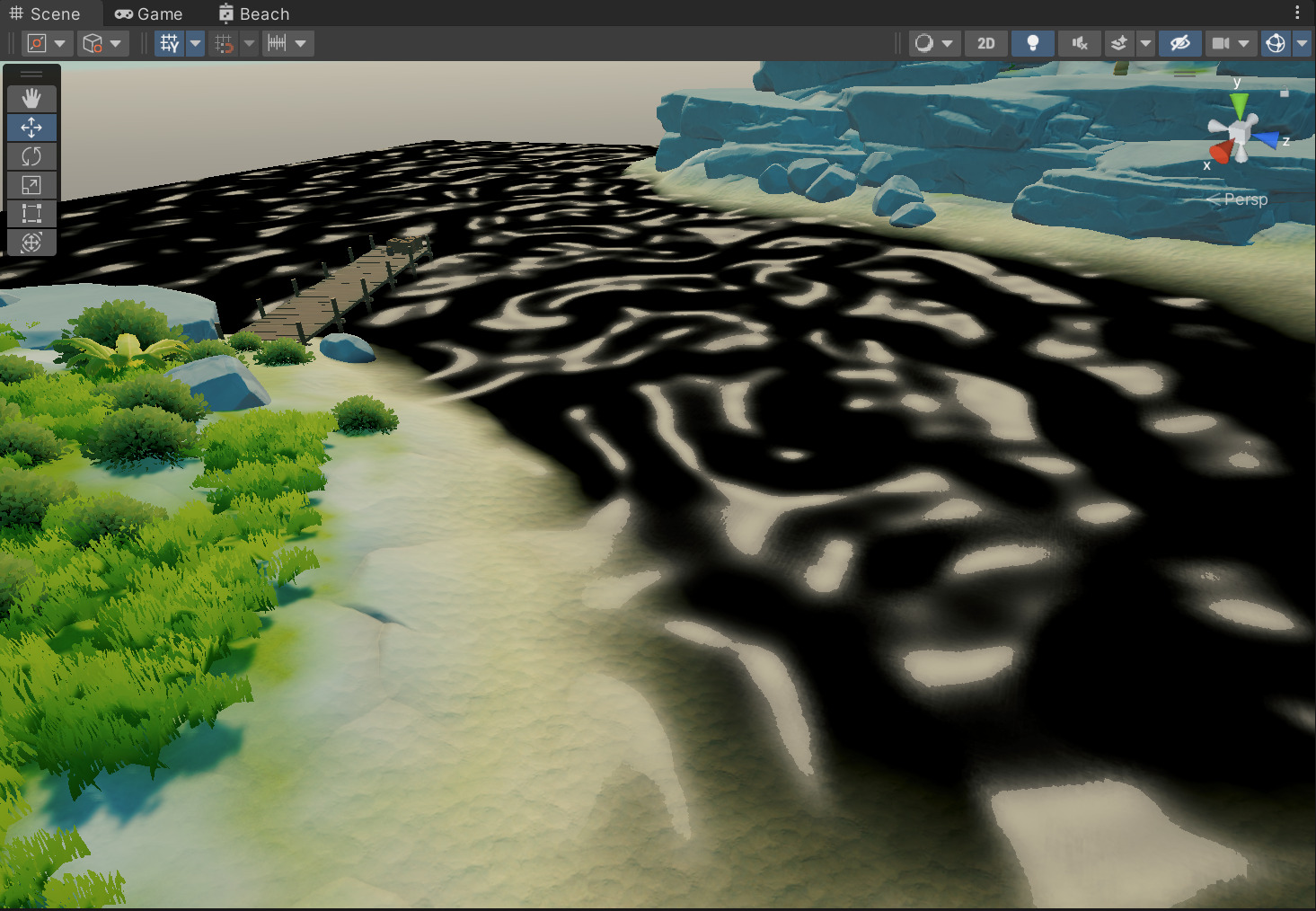

Putting it together

Final result

reference

The Secrets of Colour Interpolation

Simulating Waves Using The Water Waves Asset

https://zhuanlan.zhihu.com/p/357714920

UnityURP-MobileScreenSpacePlanarReflection

From: https://www.cnblogs.com/chenglixue/p/17931028.html