zotero-key: HP5VFNPQ

zt-attachments:

- "413"

title: "Lift, Splat, Shoot: Encoding Images From Arbitrary Camera Rigs by Implicitly Unprojecting to 3D"

citekey: philionLiftSplatShoot2020

Lift, Splat, Shoot: Encoding Images From Arbitrary Camera Rigs by Implicitly Unprojecting to 3D

Abstract

The goal of perception for autonomous vehicles is to extract semantic representations from multiple sensors and fuse these representations into a single "bird's-eye-view" coordinate frame for consumption by motion planning. We propose a new end-to-end architecture that directly extracts a bird's-eye-view representation of a scene given image data from an arbitrary number of cameras. The core idea behind our approach is to "lift" each image individually into a frustum of features for each camera, then "splat" all frustums into a rasterized bird's-eye-view grid. By training on the entire camera rig, we provide evidence that our model is able to learn not only how to represent images but how to fuse predictions from all cameras into a single cohesive representation of the scene while being robust to calibration error. On standard bird's-eye-view tasks such as object segmentation and map segmentation, our model outperforms all baselines and prior work. In pursuit of the goal of learning dense representations for motion planning, we show that the representations inferred by our model enable interpretable end-to-end motion planning by "shooting" template trajectories into a bird's-eye-view cost map output by our network. We benchmark our approach against models that use oracle depth from lidar. Project page with code: https://nv-tlabs.github.io/lift-splat-shoot .

Comments

LSS: 通过隐式非投影到3D的方式,对任意配置的camera图像(不同数量相机)进行encoding

输入多视角的环视图像,输出BEV坐标下的语义信息,车辆、可通行区域、车道线等

LSS一般被称作是BEV的开山之作,后续一系列的工作都是基于LSS做的

Q&A

0.背景动机

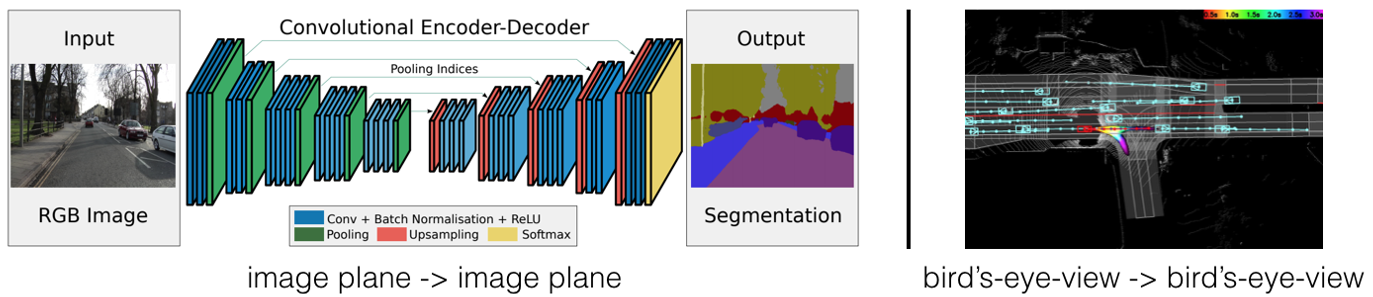

传统的一般的计算机视觉任务,比如目标检测、语义分割等,输入一张图片,在和输入图片相同的坐标系中做出预测,这和自动驾驶真正需要的感知任务不符合。在自动驾驶中,不同坐标系下的传感器数据会作为输入,感知的下游规划模块需要的是ego坐标下的感知结果:

有许多简单实用的策略可以将single-image的范式扩展到multi-view的。

对于从n个camera中进行3D目标检测这个问题来说,可以在各个视角的图片上使用一个相同的single-image检测器做2D检测,然后根据相机内外参将检测结果投影到ego坐标系下。

这种扩展方式有三个有价值的对称性:

- 平移不变性: 图片中的像素坐标系发生偏移,输出结果也会对应偏移相同数量

- 排列不变性:n个相机不同的排列对最终的输出结果没有影响

- ego坐标系等距等差性:无论捕获图像的相机相对于ego位于何处,都将在给定图像中检测到相同的物体。也就是说如果ego坐标系发生平移旋转,输出结果也会对应平移旋转。

这种简易的处理方式缺点也很明显:

无法使用data-driven的方法找到最合适用于 融合跨相机的信息的方法。也没有办法使用反向传播来利用下游planner的反馈来自动优化感知系统。

提出了一个名为“Lift-Splat”的模型,它保留了上面设计中确定的 3 个对称性,同时也是端到端可微分的,是data-driven的。

LSS这种从多视图相机中学习内在表示的方法是建立在 当时最新的传感器融合和单目目标检测的基础上的。nuscense,lyft,waymo和argo等360环视相机数据集的建立也功不可没。

Monocular Object Detection

单目目标检测器是根据它们如何对从图像平面到给定 3D 坐标系的变换进行建模来定义的。

一种标准的技术路线是二阶段的:首先在图像上应用一个成熟的2D目标检测网络,然后训练第二个网络将2D框回归为3D框(SSD-6D、Monogrnet等)

另外一种思路是