Installation packages (Baidu Netdisk) link: https://pan.baidu.com/s/1XrnbpNNqcG20QG_hL4RJoQ?pwd=aec9

Extraction code: aec9

Basic configuration (all nodes)

Disable the firewall and the SELinux security subsystem

#Stop the firewall and keep it disabled at boot

[root@localhost ~]# systemctl disable --now firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

#Configure SELinux so that it stays disabled after a reboot

[root@localhost ~]# vim /etc/selinux/config

SELINUX=disabled
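The manual edit of the SELinux config file can also be scripted. The following is a minimal sketch, assuming standard Linux tools; the function name disable_selinux_in is hypothetical, and it takes the file path as an argument so that it can be tried on a copy first.

```shell
# disable_selinux_in FILE: rewrite the SELINUX= line in FILE to "disabled".
# (Hypothetical helper name, introduced only for this illustration.)
disable_selinux_in() {
    local file="${1:?usage: disable_selinux_in FILE}"
    local tmp
    tmp="$(mktemp)" || return 1
    # Only the SELINUX= line is rewritten; SELINUXTYPE= and comments stay as they are.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Write back through redirection so the original file (or symlink) is kept.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage on a real node (as root):
#   disable_selinux_in /etc/selinux/config
#   setenforce 0    # permissive mode until the next reboot
```

The file setting only takes effect after a reboot, which is why setenforce 0 is shown for the running system.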

Network configuration

- master node IP: 192.168.88.10

- slave1 node IP: 192.168.88.20

- slave2 node IP: 192.168.88.30

#Using the master node as an example

[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO=static #changed

ONBOOT=yes #changed

IPADDR=192.168.88.10 #added

NETMASK=255.255.255.0 #added

GATEWAY=192.168.88.2 #added

DNS1=8.8.8.8 #added

DNS2=114.114.114.114 #added

Hostname configuration

#master node

[root@localhost ~]# hostnamectl set-hostname master

[root@localhost ~]# bash

[root@master ~]#

#slave1 node

[root@localhost ~]# hostnamectl set-hostname slave1

[root@localhost ~]# bash

[root@slave1 ~]#

#slave2 node

[root@localhost ~]# hostnamectl set-hostname slave2

[root@localhost ~]# bash

[root@slave2 ~]#

Hostname mapping

#Using the master node as an example

[root@localhost ~]# cat >>/etc/hosts<<EOF

> 192.168.88.10 master

> 192.168.88.20 slave1

> 192.168.88.30 slave2

> EOF

#Check the result

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.88.10 master

192.168.88.20 slave1

192.168.88.30 slave2
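Because cat >> appends every time it is run, repeating the step would duplicate the entries. The following is a minimal sketch of an idempotent alternative; the function name add_host_entry is hypothetical and the file path is passed as an argument so it can be tested on a copy.

```shell
# add_host_entry FILE IP NAME: append "IP NAME" to FILE unless NAME is already mapped.
# (Hypothetical helper name, introduced only for this illustration.)
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Look for NAME as a whole field (after the address) on any non-comment line.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on each node (as root):
#   add_host_entry /etc/hosts 192.168.88.10 master
#   add_host_entry /etc/hosts 192.168.88.20 slave1
#   add_host_entry /etc/hosts 192.168.88.30 slave2
```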

Create the hadoop user

#Using the master node as an example

[root@master ~]# useradd hadoop

[root@master ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is a palindrome

Retype new password:

passwd: all authentication tokens updated successfully.

#Try logging in

[root@master ~]# su - hadoop

[hadoop@master ~]$

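Configure passwordless SSH login

Install and start SSH on every node

#Check whether openssh and rsync are installed and install them if they are missing; using the master node as an example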

[root@master ~]# rpm -qa | grep openssh

openssh-clients-8.0p1-5.el8.x86_64

openssh-server-8.0p1-5.el8.x86_64

openssh-8.0p1-5.el8.x86_64

[root@master ~]# rpm -qa | grep rsync

#rsync is not installed, so install it

[root@master ~]# dnf -y install rsync

[root@master ~]# rpm -qa | grep rsync

rsync-3.1.3-19.el8.1.x86_64

Switch to the hadoop user

#Using the master node as an example

[root@master ~]# su - hadoop

Last login: Wed May 10 10:21:56 CST 2023 on pts/0

[hadoop@master ~]$

Generate a key pair on every node, then distribute the public key

#Using the master node as an example

[hadoop@master ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:hglbm9uHKgdbBUOiQ0GIoPD7a0YkWkyDFX79R4cZCbI hadoop@master

The key's randomart image is:

+---[RSA 3072]----+

|==*o..o .... |

|*+o. ooo .+ |

|.o=.o Eo + . |

| ++.+ *.. . |

| o.o. =.S . |

|. .o .+ o |

| ..+. o . |

| =... . |

| o.o. |

+----[SHA256]-----+

#Distribute: copy the master node's public key to slave1, slave2 and master

[hadoop@master ~]$ ssh-copy-id slave1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/hadoop/.ssh/id_rsa.pub"

The authenticity of host 'slave1 (192.168.88.20)' can't be established.

ECDSA key fingerprint is SHA256:VFHZhTsFKE09nm+UJ8Vi4QMQkDtUTMxPo3EptG6i5xg.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@slave1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'slave1'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@master ~]$ ssh-copy-id slave2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/hadoop/.ssh/id_rsa.pub"

The authenticity of host 'slave2 (192.168.88.30)' can't be established.

ECDSA key fingerprint is SHA256:4u3w7uOpcfSH3p0l84RNYx4Ik705SP6rImUPtUfccQ0.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@slave2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'slave2'"

and check to make sure that only the key(s) you wanted were added.

[hadoop@master ~]$ ssh-copy-id master

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/hadoop/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@master's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'master'"

and check to make sure that only the key(s) you wanted were added.

Test passwordless login

#On the master node

[hadoop@master ~]$ ssh slave1

Last login: Wed May 10 10:29:54 2023

[hadoop@slave1 ~]$ exit

logout

Connection to slave1 closed.

[hadoop@master ~]$ ssh slave2

Last login: Wed May 10 10:29:54 2023

[hadoop@slave2 ~]$ exit

logout

Connection to slave2 closed.

Cluster service deployment

Install the Java environment

Install the JDK

#Upload the JDK archive to the master node with Xftp, then extract it there

[root@master ~]# tar -xf jdk-8u152-linux-x64.tar.gz -C /usr/local/src/

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

jdk1.8.0_152

#Rename

[root@master src]# mv jdk1.8.0_152 jdk

[root@master src]# ls

jdk

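#Configure the environment variables: create jdk.sh, which is loaded automatically at login (for example with su -); in the current shell run source /etc/profile.d/jdk.sh first; the result can be checked with echo $PATH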

[root@master src]# vi /etc/profile.d/jdk.sh

export JAVA_HOME=/usr/local/src/jdk

export PATH=$PATH:$JAVA_HOME/bin

#Check the Java version

[root@master src]# java -version

java version "1.8.0_152"

Java(TM) SE Runtime Environment (build 1.8.0_152-b16)

Java HotSpot(TM) 64-Bit Server VM (build 25.152-b16, mixed mode)

Copy the configuration to the slave1 and slave2 nodes

#Copy the JDK directory

[root@master ~]# scp -r /usr/local/src/jdk slave1:/usr/local/src/

[root@master ~]# scp -r /usr/local/src/jdk slave2:/usr/local/src/

#Copy the environment variable file

[root@master ~]# scp /etc/profile.d/jdk.sh slave1:/etc/profile.d/

[root@master ~]# scp /etc/profile.d/jdk.sh slave2:/etc/profile.d/

#Check the Java version on slave1 and slave2

[root@slave1 ~]# java -version

java version "1.8.0_152"

Java(TM) SE Runtime Environment (build 1.8.0_152-b16)

Java HotSpot(TM) 64-Bit Server VM (build 25.152-b16, mixed mode)

[root@slave2 src]# java -version

java version "1.8.0_152"

Java(TM) SE Runtime Environment (build 1.8.0_152-b16)

Java HotSpot(TM) 64-Bit Server VM (build 25.152-b16, mixed mode)

Install the Hadoop environment

Install Hadoop

#Upload the Hadoop archive to the master node with Xftp, then extract it there

[root@master ~]# tar -xf hadoop-2.7.1.tar.gz -C /usr/local/src/

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

hadoop-2.7.1 jdk

#Rename

[root@master src]# mv hadoop-2.7.1 hadoop

[root@master src]# ls

hadoop jdk

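#Configure the environment variables: create hadoop.sh, which is loaded automatically at login (for example with su -); the result can be checked with echo $PATH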

[root@master src]# vi /etc/profile.d/hadoop.sh

export HADOOP_HOME=/usr/local/src/hadoop

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

Edit the Hadoop configuration files

#Edit hadoop-env.sh

[root@master ~]# cd /usr/local/src/hadoop/etc/hadoop/

[root@master hadoop]# vi hadoop-env.sh

#Append the following line at the end of the file

export JAVA_HOME=/usr/local/src/jdk

#Configure hdfs-site.xml

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml

#Add the following properties between the <configuration> and </configuration> tags

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/src/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/src/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

#Configure core-site.xml

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/core-site.xml

#Add the following properties between the <configuration> and </configuration> tags

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/src/hadoop/tmp</value>

</property>

</configuration>

#Configure mapred-site.xml

#The directory /usr/local/src/hadoop/etc/hadoop contains a mapred-site.xml.template file. Copy it to mapred-site.xml, then edit mapred-site.xml so that it contains the following:

[root@master ~]# cp /usr/local/src/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/src/hadoop/etc/hadoop/mapred-site.xml

#Add the following properties between the <configuration> and </configuration> tags

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

#Configure yarn-site.xml

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/yarn-site.xml

#Add the following properties between the <configuration> and </configuration> tags

<configuration>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

Create directories and files

#Create the masters file and add the following line

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/masters

192.168.88.10 #IP address of the master host

#Configure the slaves file

[root@master ~]# vi /usr/local/src/hadoop/etc/hadoop/slaves

#Delete localhost and add the following entries

192.168.88.20 #IP address of the slave1 host

192.168.88.30 #IP address of the slave2 host

#Create the three directories /usr/local/src/hadoop/tmp, /usr/local/src/hadoop/dfs/name and /usr/local/src/hadoop/dfs/data

[root@master ~]# mkdir /usr/local/src/hadoop/tmp

[root@master ~]# mkdir /usr/local/src/hadoop/dfs/name -p

[root@master ~]# mkdir /usr/local/src/hadoop/dfs/data -p

Copy the configuration to the slave1 and slave2 nodes

#Copy the Hadoop directory

[root@master src]# scp -r /usr/local/src/hadoop slave1:/usr/local/src/

[root@master src]# scp -r /usr/local/src/hadoop slave2:/usr/local/src/

#Copy the environment variable file

[root@master src]# scp /etc/profile.d/hadoop.sh slave1:/etc/profile.d/

[root@master src]# scp /etc/profile.d/hadoop.sh slave2:/etc/profile.d/
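The same copy commands are repeated once per worker, so they can be expressed as a loop. The following is a minimal sketch; the helper names run and distribute and the DRY_RUN variable are hypothetical. With DRY_RUN=1 the commands are only printed, which allows a review before anything is copied.

```shell
# run CMD...: execute CMD, or only print it when DRY_RUN is set.
# (Hypothetical helper names, introduced only for this illustration.)
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# distribute DIR PROFILE HOST...: copy DIR to the same parent directory and
# PROFILE to /etc/profile.d/ on every HOST.
distribute() {
    local dir="$1" profile="$2"
    shift 2
    local host
    for host in "$@"; do
        run scp -r "$dir" "$host:$(dirname "$dir")/"
        run scp "$profile" "$host:/etc/profile.d/"
    done
}

# Usage on master (as root):
#   DRY_RUN=1; distribute /usr/local/src/hadoop /etc/profile.d/hadoop.sh slave1 slave2
#   unset DRY_RUN; distribute /usr/local/src/hadoop /etc/profile.d/hadoop.sh slave1 slave2
```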

Set ownership on every node

#master

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

#slave1

[root@slave1 ~]# chown -R hadoop.hadoop /usr/local/src/

#slave2

[root@slave2 ~]# chown -R hadoop.hadoop /usr/local/src/

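Format Hadoop

Format the NameNode

Formatting resets the data on the NameNode. HDFS must be formatted before its first start and must not be formatted again on later starts, otherwise the DataNode processes will be missing. In addition, once HDFS has run, the Hadoop working directory (set to /usr/local/src/hadoop/tmp here) contains data. If a re-format is really needed, delete the data under the working directory before formatting, otherwise the format will run into problems.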
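The cleanup before a re-format can be sketched as a small function. This is only an illustration under stated assumptions: the function name clear_hdfs_state is hypothetical, and besides the working directory it also empties the dfs/name and dfs/data directories that this setup configures in hdfs-site.xml. It deletes data, so it is meant for a stopped test cluster only.

```shell
# clear_hdfs_state HADOOP_DIR: empty tmp, dfs/name and dfs/data under HADOOP_DIR
# so that a later "hdfs namenode -format" starts from a clean state.
# (Hypothetical helper name; the directory layout is the one used in this setup.)
clear_hdfs_state() {
    local base="${1:?usage: clear_hdfs_state HADOOP_DIR}"
    local sub
    for sub in tmp dfs/name dfs/data; do
        if [ -d "$base/$sub" ]; then
            # Delete the contents but keep the directory itself.
            find "$base/$sub" -mindepth 1 -delete
        fi
    done
}

# Usage, with all Hadoop services stopped, on every node:
#   clear_hdfs_state /usr/local/src/hadoop
```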

#Format

[hadoop@master ~]$ hdfs namenode -format

#The format succeeded if the output ends like this

........

23/05/12 15:15:16 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.88.10

************************************************************/

Start the cluster services

#Start the NameNode

[hadoop@master ~]$ hadoop-daemon.sh start namenode

#Start the DataNode on the slave nodes

[hadoop@slave1 ~]$ hadoop-daemon.sh start datanode

[hadoop@slave2 ~]$ hadoop-daemon.sh start datanode

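#Start the SecondaryNameNode

[hadoop@master ~]$ hadoop-daemon.sh start secondarynamenode

#Start YARN (the ResourceManager on master and the NodeManagers on the slaves)

[hadoop@master ~]$ start-yarn.sh

#Command that starts all Hadoop cluster services at once

[hadoop@master ~]$ start-all.sh

#Command that stops all Hadoop cluster services at once

[hadoop@master ~]$ stop-all.sh

#Check the service processes with jps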

[hadoop@master ~]$ jps

2963 NameNode

3320 ResourceManager

3579 Jps

3164 SecondaryNameNode

[hadoop@slave1 ~]$ jps

1654 DataNode

1767 NodeManager

1866 Jps

[hadoop@slave2 ~]$ jps

1654 DataNode

1767 NodeManager

1866 Jps
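Comparing the jps listings by eye is easy to get wrong, so the check can be sketched as a function that reads jps output and reports missing daemons. The function name missing_daemons is hypothetical; the expected daemon names are the ones shown in the listings above.

```shell
# missing_daemons NAME...: read jps output on stdin and print every expected
# daemon NAME that does not appear in it. The exit status is 0 only if none is missing.
# (Hypothetical helper name, introduced only for this illustration.)
missing_daemons() {
    local listing name status=0
    listing="$(cat)"
    for name in "$@"; do
        # jps prints "<pid> <class name>"; compare the second column exactly.
        if ! printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }'; then
            echo "$name"
            status=1
        fi
    done
    return "$status"
}

# Usage:
#   jps | missing_daemons NameNode SecondaryNameNode ResourceManager   # on master
#   jps | missing_daemons DataNode NodeManager                         # on slave1 and slave2
```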

Check where HDFS stores its data

The NameNode and DataNode data is stored under /usr/local/src/hadoop/dfs, and the SecondaryNameNode data under /usr/local/src/hadoop/tmp/; each of the three has its own directory.

[hadoop@master ~]$ ll /usr/local/src/hadoop/dfs/

total 0

drwxrwxr-x. 2 hadoop hadoop 6 May 12 15:40 data

drwxrwxr-x. 3 hadoop hadoop 40 May 12 15:43 name

[hadoop@master ~]$ ll /usr/local/src/hadoop/tmp/dfs/

total 0

drwxrwxr-x. 3 hadoop hadoop 40 May 12 15:44 namesecondary

View the HDFS report

[hadoop@master ~]$ hdfs dfsadmin -report

Configured Capacity: 100681220096 (93.77 GB)

Present Capacity: 94562549760 (88.07 GB)

DFS Remaining: 94562541568 (88.07 GB)

DFS Used: 8192 (8 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (2):

Name: 192.168.88.30:50010 (slave2)

Hostname: slave2

Decommission Status : Normal

Configured Capacity: 50340610048 (46.88 GB)

DFS Used: 4096 (4 KB)

Non DFS Used: 3059306496 (2.85 GB)

DFS Remaining: 47281299456 (44.03 GB)

DFS Used%: 0.00%

DFS Remaining%: 93.92%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Fri May 12 15:46:49 CST 2023

Name: 192.168.88.20:50010 (slave1)

Hostname: slave1

Decommission Status : Normal

Configured Capacity: 50340610048 (46.88 GB)

DFS Used: 4096 (4 KB)

Non DFS Used: 3059363840 (2.85 GB)

DFS Remaining: 47281242112 (44.03 GB)

DFS Used%: 0.00%

DFS Remaining%: 93.92%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Fri May 12 15:46:49 CST 2023

Files in HDFS

#List a directory

[hadoop@master ~]$ hdfs dfs -ls /

#Create a directory

[hadoop@master ~]$ hdfs dfs -mkdir /input

#Delete a directory

[hadoop@master ~]$ hdfs dfs -rm -r -f /output

#Upload a local file into a directory under the HDFS root

[hadoop@master ~]$ hdfs dfs -put ~/data.txt /input

#View the content of a file

[hadoop@master ~]$ hdfs dfs -cat /input/data.txt

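Run the WordCount example to count how often each word occurs in the data file

The MapReduce command below writes its output to /output. If /output already exists in HDFS, the command fails. So unless this is the first MapReduce run, list the files in HDFS first and, if /output exists, delete it before running the job.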
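The check-then-delete step for the output directory can be sketched as follows. The function name remove_hdfs_dir_if_exists is hypothetical; it relies on hdfs dfs -test -d, which exits with status 0 when the path is an existing directory, and on the hdfs dfs -rm -r -f form used elsewhere in these notes.

```shell
# remove_hdfs_dir_if_exists HDFS_PATH: delete HDFS_PATH only when it exists.
# (Hypothetical helper name, introduced only for this illustration.)
remove_hdfs_dir_if_exists() {
    local target="${1:?usage: remove_hdfs_dir_if_exists HDFS_PATH}"
    # "hdfs dfs -test -d" returns 0 when the path is an existing directory.
    if hdfs dfs -test -d "$target"; then
        hdfs dfs -rm -r -f "$target"
    fi
}

# Usage before re-running the job (as the hadoop user):
#   remove_hdfs_dir_if_exists /output
```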

#First create a local file and write some content into it

[hadoop@master ~]$ vi data.txt

Hello HDFS

Hello Mili

Hello world.execute(me);

#Then create the input directory under the HDFS root

[hadoop@master ~]$ hdfs dfs -mkdir /input

[hadoop@master ~]$ hdfs dfs -ls /

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2023-05-12 15:57 /input

#Then copy the local data.txt file into it

[hadoop@master ~]$ hdfs dfs -put ~/data.txt /input

[hadoop@master ~]$ hdfs dfs -ls /input

Found 1 items

-rw-r--r-- 3 hadoop supergroup 47 2023-05-12 15:58 /input/data.txt

#Run the job to count the words

[hadoop@master ~]$ hadoop jar /usr/local/src/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.1.jar wordcount /input/data.txt /output

#The listing now also shows the output directory and the tmp staging directory

[hadoop@master ~]$ hdfs dfs -ls /

Found 3 items

drwxr-xr-x - hadoop supergroup 0 2023-05-12 15:58 /input

drwxr-xr-x - hadoop supergroup 0 2023-05-12 16:01 /output

drwx------ - hadoop supergroup 0 2023-05-12 16:01 /tmp

#Inside output, the part-r-00000 file holds the result of the finished job

[hadoop@master ~]$ hdfs dfs -ls /output

Found 2 items

-rw-r--r-- 3 hadoop supergroup 0 2023-05-12 16:01 /output/_SUCCESS

-rw-r--r-- 3 hadoop supergroup 43 2023-05-12 16:01 /output/part-r-00000

#View the content

[hadoop@master ~]$ hdfs dfs -cat /output/part-r-00000

HDFS 1

Hello 3

Mili 1

world.execute(me); 1
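For a file this small, the job result can be cross-checked locally. The following is a minimal sketch, assuming that words are separated by whitespace only; the function name local_wordcount is hypothetical and this is not how the MapReduce example itself is implemented.

```shell
# local_wordcount FILE: count whitespace-separated tokens in FILE and print
# "token<TAB>count" lines sorted by token, in the same shape as part-r-00000.
# (Hypothetical helper name, introduced only for this illustration.)
local_wordcount() {
    tr -s '[:space:]' '\n' < "$1" |
        awk 'NF' |
        LC_ALL=C sort |
        uniq -c |
        awk '{ print $2 "\t" $1 }'
}

# Usage (as the hadoop user on master):
#   local_wordcount ~/data.txt
```

LC_ALL=C is set for sort so that upper-case tokens are ordered before lower-case ones regardless of the system locale.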

Install and configure the Hive component

Remove mariadb from the node

#Remove the mariadb packages if any are installed

[root@master ~]# rpm -qa | grep mariadb

mariadb-libs-5.5.56-2.el7.x86_64

Check: rpm -qa | grep mariadb

Remove: rpm -e mariadb-libs --nodeps

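Install the MySQL packages on the master node

#Install the MySQL packages in the following order: mysql common, mysql libs, mysql client, then mysql devel and mysql server

#Install the MySQL packages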

[root@master mysql]# rpm -ivh mysql-community-common-5.7.18-1.el7.x86_64.rpm

warning: mysql-community-common-5.7.18-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:mysql-community-common-5.7.18-1.e################################# [100%]

[root@master mysql]# rpm -ivh mysql-community-libs-5.7.18-1.el7.x86_64.rpm

warning: mysql-community-libs-5.7.18-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:mysql-community-libs-5.7.18-1.el7################################# [100%]

[root@master mysql]# rpm -ivh mysql-community-client-5.7.18-1.el7.x86_64.rpm

warning: mysql-community-client-5.7.18-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:mysql-community-client-5.7.18-1.e################################# [100%]

[root@master mysql]# rpm -ivh mysql-community-devel-5.7.18-1.el7.x86_64.rpm

warning: mysql-community-devel-5.7.18-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:mysql-community-devel-5.7.18-1.el################################# [100%]

[root@master mysql]# yum -y install mysql-community-server-5.7.18-1.el7.x86_64.rpm

Loaded plugins: fastestmirror

Examining mysql-community-server-5.7.18-1.el7.x86_64.rpm: mysql-community-server-5.7.18-1.el7.x86_64

Marking mysql-community-server-5.7.18-1.el7.x86_64.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package mysql-community-server.x86_64 0:5.7.18-1.el7 will be installed

--> Processing Dependency: net-tools for package: mysql-community-server-5.7.18-1.el7.x86_64

Loading mirror speeds from cached hostfile

* base: ftp.sjtu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

--> Processing Dependency: perl(Getopt::Long) for package: mysql-community-server-5.7.18-1.el7.x86_64

--> Processing Dependency: perl(strict) for package: mysql-community-server-5.7.18-1.el7.x86_64

--> Running transaction check

---> Package net-tools.x86_64 0:2.0-0.25.20131004git.el7 will be installed

---> Package perl.x86_64 4:5.16.3-299.el7_9 will be installed

--> Processing Dependency: perl-libs = 4:5.16.3-299.el7_9 for package: 4:perl-5.16.3-299.el7_9.x86_64

--> Processing Dependency: perl(Socket) >= 1.3 for package: 4:perl-5.16.3-299.el7_9.x86_64

--> Processing Dependency: perl(Scalar::Util) >= 1.10 for package: 4:perl-5.16.3-299.el7_9.x86_64

--> Processing Dependency: perl-macros for package: 4:perl-5.16.3-299.el7_9.x86_64

--> Processing Dependency: perl-libs for package: 4:perl-5.16.3-299.el7_9.x86_64

--> Processing Dependency: perl(threads::shared) for package: 4:perl-5.16.3-299.el7_9.x86_64

--> Processing Dependency: perl(threads) for package: 4:perl-5.16.3-299.el7_9.x86_64

--> Processing Dependency: perl(constant) for package: 4:perl-5.16.3-299.el7_9.x86_64

....

Verifying : perl-Filter-1.49-3.el7.x86_64 26/29

Verifying : perl-Getopt-Long-2.40-3.el7.noarch 27/29

Verifying : perl-Text-ParseWords-3.29-4.el7.noarch 28/29

Verifying : 4:perl-libs-5.16.3-299.el7_9.x86_64 29/29

Installed:

mysql-community-server.x86_64 0:5.7.18-1.el7

Complete!

Configure the my.cnf file

[root@master ~]# vi /etc/my.cnf

[mysqld]

#

# Remove leading # and set to the amount of RAM for the most important data

# cache in MySQL. Start at 70% of total RAM for dedicated server, else 10%.

# innodb_buffer_pool_size = 128M

#

# Remove leading # to turn on a very important data integrity option: logging

# changes to the binary log between backups.

# log_bin

#

# Remove leading # to set options mainly useful for reporting servers.

# The server defaults are faster for transactions and fast SELECTs.

# Adjust sizes as needed, experiment to find the optimal values.

# join_buffer_size = 128M

# sort_buffer_size = 2M

# read_rnd_buffer_size = 2M

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

default-storage-engine=innodb

innodb_file_per_table

collation-server=utf8_general_ci

init-connect='SET NAMES utf8'

character-set-server=utf8

#Add the five lines above (default-storage-engine through character-set-server) to the [mysqld] section of /etc/my.cnf, below the symbolic-links=0 line

Start MySQL and enable it at boot

[root@master ~]# systemctl enable --now mysqld

[root@master ~]# systemctl status mysqld

● mysqld.service - MySQL Server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2023-05-15 15:53:50 CST; 13s ago

Docs: man:mysqld(8)

http://dev.mysql.com/doc/refman/en/using-systemd.html

Process: 2532 ExecStart=/usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid $MYSQLD_OPT>

Process: 2459 ExecStartPre=/usr/bin/mysqld_pre_systemd (code=exited, status=0/SUCCESS)

Main PID: 2535 (mysqld)

Tasks: 27 (limit: 12221)

Memory: 297.3M

CGroup: /system.slice/mysqld.service

└─2535 /usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid

May 15 15:53:44 master systemd[1]: Starting MySQL Server...

May 15 15:53:50 master systemd[1]: Started MySQL Server.

Look up the default database password

[root@master ~]# grep password /var/log/mysqld.log

2023-05-15T07:53:47.011748Z 1 [Note] A temporary password is generated for root@localhost: yhoE+ggh2Cg<
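Since grep password can match several log lines, the extraction can be narrowed to the password itself. The following is a minimal sketch, assuming the generated password contains no spaces and is the last field of the line; the function name mysql_temp_password is hypothetical.

```shell
# mysql_temp_password [LOGFILE]: print the most recent temporary root password
# recorded in LOGFILE (default: /var/log/mysqld.log).
# (Hypothetical helper name, introduced only for this illustration.)
mysql_temp_password() {
    local log="${1:-/var/log/mysqld.log}"
    # Remember the last field of every matching line and print the final one.
    awk '/A temporary password is generated for root@localhost/ { pw = $NF }
         END { if (pw != "") print pw }' "$log"
}

# Usage on master (as root):
#   mysql_temp_password
```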

Initialize the database

#Set the database password to: Password@123_

[root@master ~]# mysql_secure_installation

Securing the MySQL server deployment.

Enter password for user root: # enter the default root password found in /var/log/mysqld.log

The existing password for the user account root has expired. Please set a new password.

New password: # enter the new password Password@123_

Re-enter new password: # enter the new password Password@123_ again

The 'validate_password' plugin is installed on the server.

The subsequent steps will run with the existing configuration

of the plugin.

Using existing password for root.

Estimated strength of the password: 100

Change the password for root ? ((Press y|Y for Yes, any other key for No) : #press Enter

... skipping.

By default, a MySQL installation has an anonymous user,

allowing anyone to log into MySQL without having to have

a user account created for them. This is intended only for

testing, and to make the installation go a bit smoother.

You should remove them before moving into a production

environment.

Remove anonymous users? (Press y|Y for Yes, any other key for No) : y

Success.

Normally, root should only be allowed to connect from

'localhost'. This ensures that someone cannot guess at

the root password from the network.

Disallow root login remotely? (Press y|Y for Yes, any other key for No) : n

... skipping.

By default, MySQL comes with a database named 'test' that

anyone can access. This is also intended only for testing,

and should be removed before moving into a production

environment.

Remove test database and access to it? (Press y|Y for Yes, any other key for No) : y

- Dropping test database...

Success.

- Removing privileges on test database...

Success.

Reloading the privilege tables will ensure that all changes

made so far will take effect immediately.

Reload privilege tables now? (Press y|Y for Yes, any other key for No) : y

Success.

All done!

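Log in to the database and grant the root user access to MySQL from localhost and from remote hosts

#If the login fails because a libncurses... file or directory is missing, install it with yum install libncurses* -y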

[root@master ~]# mysql -uroot -pPassword@123_

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 6

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> grant all privileges on *.* to root@'localhost' identified by 'Password@123_';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> grant all privileges on *.* to root@'%' identified by 'Password@123_';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> exit

Bye

Extract and install the Hive package

[root@master ~]# tar xf apache-hive-2.0.0-bin.tar.gz -C /usr/local/src

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

apache-hive-2.0.0-bin hadoop jdk

#Rename the directory

[root@master src]# mv apache-hive-2.0.0-bin/ hive

[root@master src]# ls

hadoop hive jdk

#Give ownership to the hadoop user

[root@master ~]# chown -R hadoop.hadoop /usr/local/src

[root@master ~]# ll /usr/local/src/

total 0

drwxr-xr-x. 12 hadoop hadoop 183 May 12 15:39 hadoop

drwxr-xr-x. 8 hadoop hadoop 159 May 15 16:04 hive

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

Configure the Hive environment variables

#Add the hive.sh configuration file

[root@master ~]# vi /etc/profile.d/hive.sh

export HIVE_HOME=/usr/local/src/hive

export PATH=${HIVE_HOME}/bin:$PATH

#Write the two lines above into the file

Configure the Hive parameters

#Switch to the hadoop user

[root@master ~]# su - hadoop

Last login: Fri May 12 15:09:48 CST 2023 on pts/0

Last failed login: Fri May 12 15:27:55 CST 2023 from 127.0.0.1 on ssh:notty

There were 3 failed login attempts since the last successful login.

[hadoop@master ~]$

#Copy Hive's configuration template to hive-site.xml, then edit hive-site.xml

[hadoop@master ~]$ cp /usr/local/src/hive/conf/hive-default.xml.template /usr/local/src/hive/conf/hive-site.xml

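1) Set the MySQL database connection. Inside the XML file, the & in the URL must be written as &amp;

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>

2) Set the password of the MySQL root user.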

<name>javax.jdo.option.ConnectionPassword</name>

<value>Password@123_</value>

3) Verify metastore schema version consistency. If the value is already false by default, no change is needed.

<name>hive.metastore.schema.verification</name>

<value>false</value>

4) Configure the database driver

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

5) Set the database user name javax.jdo.option.ConnectionUserName to root

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

6) Replace ${system:java.io.tmpdir}/${system:user.name} in the following places with the /usr/local/src/hive/tmp directory and its subdirectories.

The following 4 properties need to be changed:

<name>hive.querylog.location</name>

<value>/usr/local/src/hive/tmp</value>

<name>hive.exec.local.scratchdir</name>

<value>/usr/local/src/hive/tmp</value>

<name>hive.downloaded.resources.dir</name>

<value>/usr/local/src/hive/tmp/resources</value>

<name>hive.server2.logging.operation.log.location</name>

<value>/usr/local/src/hive/tmp/operation_logs</value>
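hive-site.xml is long, so one of the four values is easily missed. The following is a minimal sketch of a check that lists every line still containing the ${system:java.io.tmpdir} placeholder; the function name remaining_tmpdir_placeholders is hypothetical.

```shell
# remaining_tmpdir_placeholders FILE: print "line number:text" for every line
# in FILE that still contains the ${system:java.io.tmpdir} placeholder.
# (Hypothetical helper name, introduced only for this illustration.)
remaining_tmpdir_placeholders() {
    local file="${1:?usage: remaining_tmpdir_placeholders FILE}"
    # -F treats the pattern as a fixed string, so "$", "{" and "." are literal.
    grep -nF '${system:java.io.tmpdir}' "$file" || true
}

# Usage (as the hadoop user on master):
#   remaining_tmpdir_placeholders /usr/local/src/hive/conf/hive-site.xml
```

An empty result means that no placeholder is left in the file.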

Create the operation_logs and resources directories

[hadoop@master ~]$ mkdir -p /usr/local/src/hive/tmp/{operation_logs,resources}

[hadoop@master ~]$ tree /usr/local/src/hive/tmp/

/usr/local/src/hive/tmp/

├── operation_logs

└── resources

2 directories, 0 files

Initialize the Hive metadata

- Copy the MySQL JDBC driver (/opt/software/mysql-connector-java-5.1.46.jar) into the lib directory of the Hive installation and change its ownership

[root@master ~]# cp mysql-connector-java-5.1.46.jar /usr/local/src/hive/lib/

#Make sure the jar is owned by the hadoop user; change its owner

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

[root@master ~]# ll /usr/local/src/hive/lib/mysql-connector-java-5.1.46.jar

-rw-r--r-- 1 hadoop hadoop 1004838 Mar 25 18:24 /usr/local/src/hive/lib/mysql-connector-java-5.1.46.jar

- Start Hadoop

[hadoop@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out

192.168.88.20: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out

192.168.88.30: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out

192.168.88.20: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out

192.168.88.30: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out

[hadoop@master ~]$ jps

4081 SecondaryNameNode

4498 Jps

4238 ResourceManager

3887 NameNode

[hadoop@slave1 ~]$ jps

1521 Jps

1398 NodeManager

1289 DataNode

[hadoop@slave2 ~]$ jps

1298 DataNode

1530 Jps

1407 NodeManager

- Initialize the database

[hadoop@master conf]$ schematool -initSchema -dbType mysql

which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/local/src/jdk/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 2.0.0

Initialization script hive-schema-2.0.0.mysql.sql

Initialization script completed

schemaTool completed

- Start Hive

[hadoop@master ~]$ hive

which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/local/src/jdk/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/src/hive/lib/hive-common-2.0.0.jar!/hive-log4j2.properties

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

Install and configure the Sqoop component

Extract and install the Sqoop package

#Extract the archive and rename the directory

[root@master ~]# tar -xf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /usr/local/src/

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

hadoop hbase hive jdk sqoop-1.4.7.bin__hadoop-2.6.0 zookeeper

[root@master src]# mv sqoop-1.4.7.bin__hadoop-2.6.0/ sqoop

[root@master src]# ls

hadoop hbase hive jdk sqoop zookeeper

Edit the sqoop-env.sh file

#Copy the sqoop-env-template.sh template to sqoop-env.sh

[root@master src]# cd /usr/local/src/sqoop/conf/

[root@master conf]# ls

oraoop-site-template.xml sqoop-env-template.sh sqoop-site.xml

sqoop-env-template.cmd sqoop-site-template.xml

[root@master conf]# cp sqoop-env-template.sh sqoop-env.sh

[root@master conf]# ls

oraoop-site-template.xml sqoop-env-template.cmd sqoop-site-template.xml

sqoop-env.sh sqoop-env-template.sh sqoop-site.xml

#Edit the file

#The installation paths of the components below must match the paths in the actual environment

[root@master ~]# vi /usr/local/src/sqoop/conf/sqoop-env.sh

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/usr/local/src/hadoop

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/usr/local/src/hadoop

#set the path to where bin/hbase is available

#export HBASE_HOME=/usr/local/src/hbase

#Set the path to where bin/hive is available

export HIVE_HOME=/usr/local/src/hive

#Set the path for where zookeper config dir is

#export ZOOCFGDIR=/usr/local/src/zookeeper
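Because every path in sqoop-env.sh has to match the real installation, a quick check can catch a typo before Sqoop is started. The following is a minimal sketch and not part of the original procedure: the helper name check_env_paths is made up for illustration, and the demo runs against a throwaway file so it can be tried anywhere. On the cluster you would pass /usr/local/src/sqoop/conf/sqoop-env.sh instead.

```shell
# check_env_paths FILE: for every uncommented "export NAME=/path" line in FILE,
# report whether /path exists as a directory. (Hypothetical helper name.)
check_env_paths() {
  status=0
  # grep keeps only uncommented export lines; sed strips the "export " prefix.
  # Paths containing spaces are not handled by this simple loop.
  for entry in $(grep -E '^export [A-Za-z_]+=/' "$1" | sed 's/^export //'); do
    name=${entry%%=*}
    path=${entry#*=}
    if [ -d "$path" ]; then
      echo "OK      $name=$path"
    else
      echo "MISSING $name=$path"
      status=1
    fi
  done
  return $status
}

# Demo file: one existing path, one commented-out line, one missing path.
demo_dir=$(mktemp -d)
cat > "$demo_dir/sqoop-env.sh" <<EOF
export HADOOP_COMMON_HOME=$demo_dir
#export HBASE_HOME=/usr/local/src/hbase
export HIVE_HOME=$demo_dir/no-such-dir
EOF

check_env_paths "$demo_dir/sqoop-env.sh" || echo "at least one configured path is missing"
```

Commented-out entries such as HBASE_HOME are skipped on purpose, since Sqoop does not read them either.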

Configure the Sqoop environment variables

[root@master ~]# vi /etc/profile.d/sqoop.sh

#Add the following lines

export SQOOP_HOME=/usr/local/src/sqoop

export PATH=$PATH:$SQOOP_HOME/bin

export CLASSPATH=$CLASSPATH:$SQOOP_HOME/lib
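Scripts under /etc/profile.d/ are picked up when a new login shell starts (for example after su - hadoop), so an already open shell does not see the new variables until the file is sourced. A minimal sketch of writing, loading and checking the three exports follows. The PROFILE_FILE variable is an assumption added here so the snippet can run without root; on the cluster it would be /etc/profile.d/sqoop.sh.

```shell
# PROFILE_FILE is an illustrative variable; it defaults to a temp file so the sketch is harmless.
PROFILE_FILE=${PROFILE_FILE:-$(mktemp)}

# Same three exports as above. The quoted 'EOF' keeps $PATH and friends
# unexpanded in the file, so they are evaluated when the file is sourced.
cat > "$PROFILE_FILE" <<'EOF'
export SQOOP_HOME=/usr/local/src/sqoop
export PATH=$PATH:$SQOOP_HOME/bin
export CLASSPATH=$CLASSPATH:$SQOOP_HOME/lib
EOF

# Load the variables into the current shell.
. "$PROFILE_FILE"

echo "SQOOP_HOME=$SQOOP_HOME"
case ":$PATH:" in
  *":$SQOOP_HOME/bin:"*) echo "sqoop bin directory is on PATH" ;;
  *)                     echo "sqoop bin directory is NOT on PATH" ;;
esac
```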

Connect to the database

#The /opt/software/mysql-connector-java-5.1.46.jar file must be placed in Sqoop's lib directory

[root@master ~]# cp mysql-connector-java-5.1.46.jar /usr/local/src/sqoop/lib/

Change the owner of the directories

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

[root@master ~]# ll /usr/local/src/

total 4

drwxr-xr-x. 12 hadoop hadoop 183 Mar 15 16:11 hadoop

drwxr-xr-x 9 hadoop hadoop 183 Apr 6 22:44 hbase

drwxr-xr-x 9 hadoop hadoop 170 Mar 25 18:14 hive

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

drwxr-xr-x 9 hadoop hadoop 318 Dec 19 2017 sqoop

drwxr-xr-x 12 hadoop hadoop 4096 Mar 29 09:56 zookeeper

Start Sqoop

Start Hadoop

#Switch user

[root@master ~]# su - hadoop

Last login: Wed Apr 12 13:16:11 CST 2023 on pts/0

#Start Hadoop

[hadoop@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out

192.168.88.20: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out

192.168.88.30: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out

192.168.88.20: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out

192.168.88.30: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out

#Check the cluster status

[hadoop@master ~]$ jps

1538 SecondaryNameNode

1698 ResourceManager

1957 Jps

1343 NameNode

Test whether Sqoop can connect to the MySQL database

[hadoop@master ~]$ sqoop list-databases --connect jdbc:mysql://master:3306/ --username root -P

Warning: /usr/local/src/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /usr/local/src/sqoop/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

23/05/15 18:23:30 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Enter password:

23/05/15 18:23:37 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

Mon May 15 18:23:37 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

information_schema

hive

mysql

performance_schema

sys
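The SSL warning in the output above comes from the MySQL JDBC driver and, as the message itself says, goes away once useSSL=false is set explicitly in the connection URL. A small sketch of building such a URL follows, assuming an unencrypted connection is acceptable on this lab network. The helper name mysql_jdbc_url is made up for illustration, and the snippet only prints the resulting command rather than running Sqoop.

```shell
# mysql_jdbc_url HOST PORT DATABASE: print a JDBC URL with SSL explicitly disabled.
# DATABASE may be empty, as in the list-databases call. (Hypothetical helper name.)
mysql_jdbc_url() {
  printf 'jdbc:mysql://%s:%s/%s?useSSL=false\n' "$1" "$2" "$3"
}

url=$(mysql_jdbc_url master 3306 "")
echo "$url"

# Command to run on the master node; -P still prompts for the password.
# The URL is quoted so the shell does not interpret the '?' character.
echo "sqoop list-databases --connect \"$url\" --username root -P"
```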

Connect to Hive

#For Sqoop to connect to Hive, hive-common-2.0.0.jar from the Hive component's /usr/local/src/hive/lib directory must also be copied into the lib directory of the Sqoop installation

[hadoop@master ~]$ cp /usr/local/src/hive/lib/hive-common-2.0.0.jar /usr/local/src/sqoop/lib/

#Give ownership to the hadoop user

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

Hadoop cluster verification

Hadoop cluster running status

[hadoop@master ~]$ jps

1609 SecondaryNameNode

3753 Jps

1404 NameNode

1772 ResourceManager

[hadoop@slave1 ~]$ jps

1265 DataNode

1387 NodeManager

5516 Jps

[hadoop@slave2 ~]$ jps

1385 NodeManager

5325 Jps

1263 DataNode

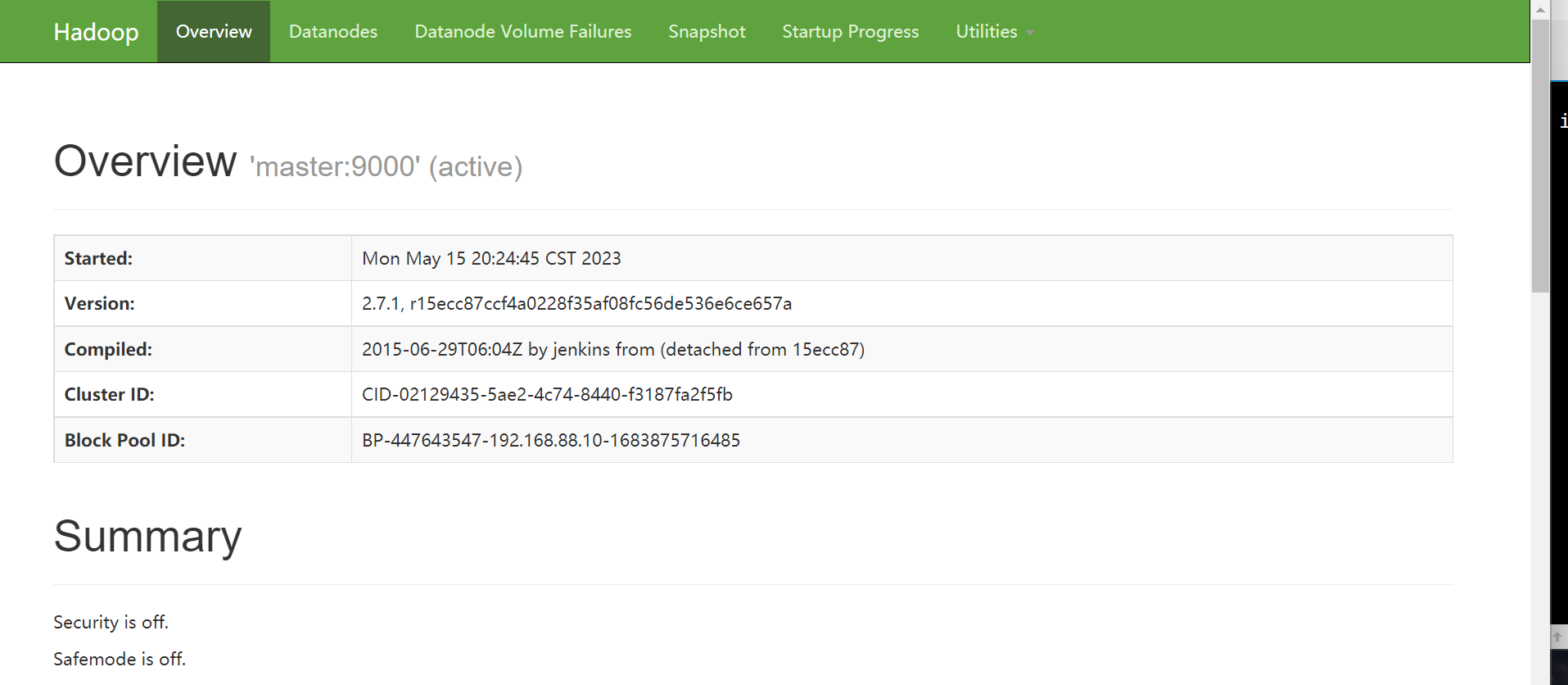

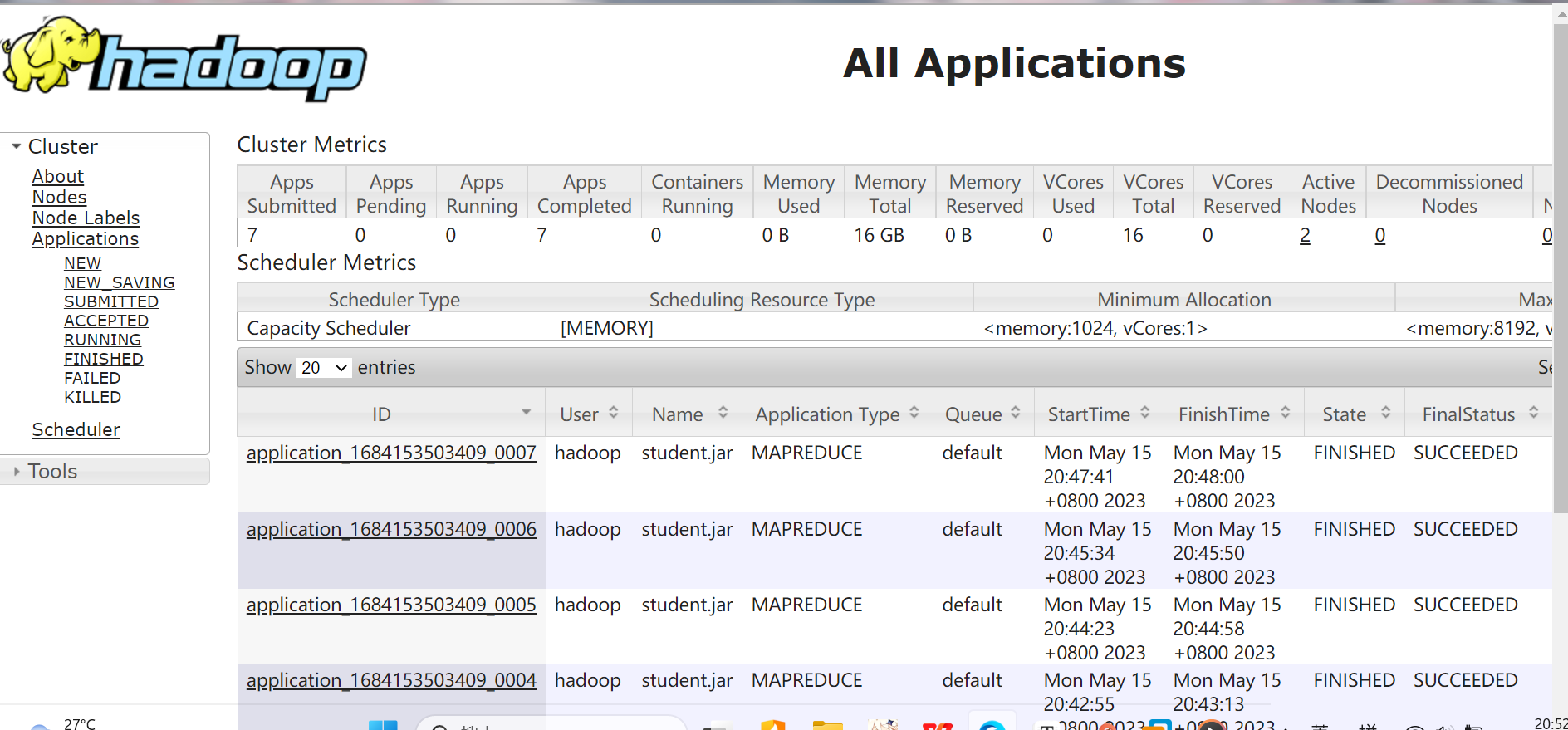

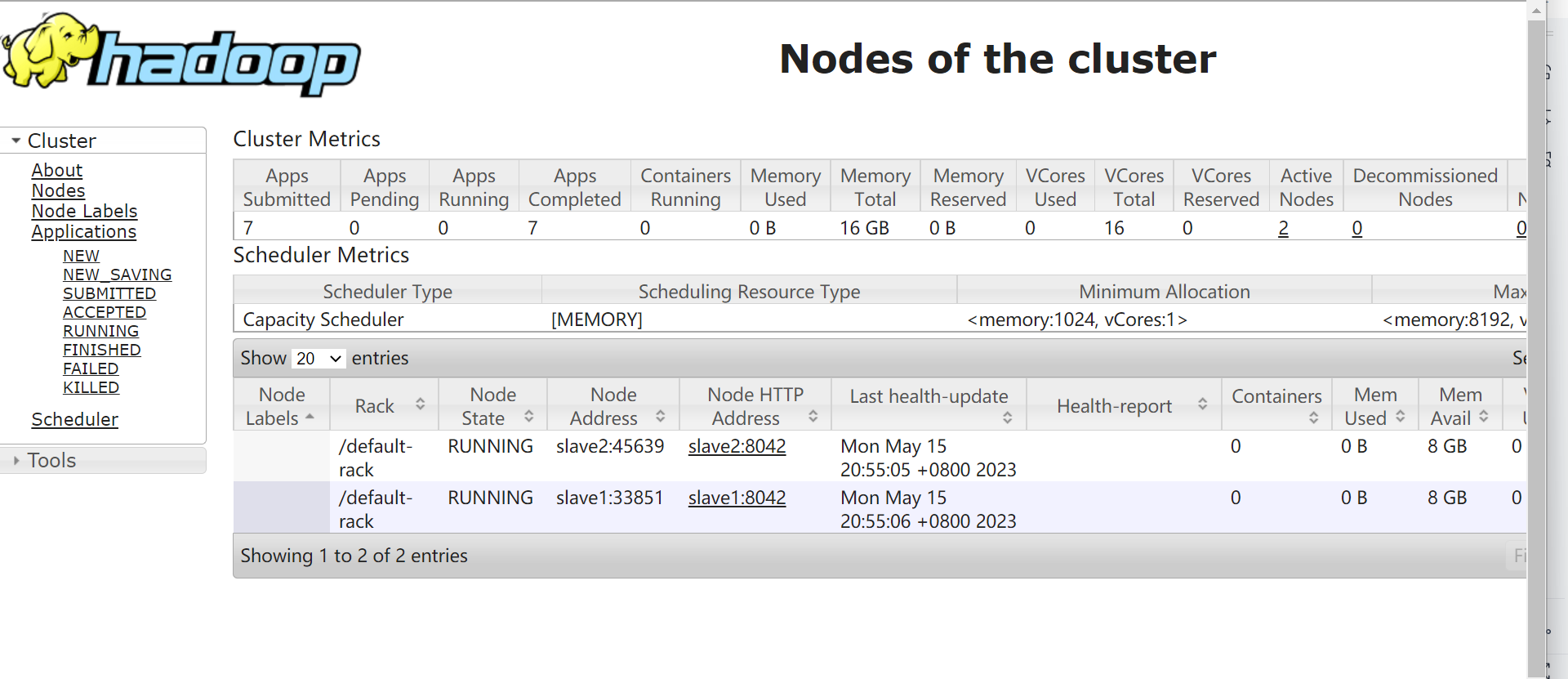
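Comparing jps output by eye across three nodes is easy to get wrong, so the check can be scripted. The sketch below is an illustration only: expect_daemons is a made-up helper name, and the sample text is the master output shown above so the snippet runs without a cluster. On a live node the first argument would be "$(jps)".

```shell
# expect_daemons "JPS_OUTPUT" NAME...: check that each NAME appears as a process
# name (second column) in the given jps output. (Hypothetical helper name.)
expect_daemons() {
  jps_output=$1
  shift
  rc=0
  for name in "$@"; do
    # grep -x matches the whole name, so NameNode does not match SecondaryNameNode.
    if printf '%s\n' "$jps_output" | awk '{print $2}' | grep -qx "$name"; then
      echo "OK      $name"
    else
      echo "MISSING $name"
      rc=1
    fi
  done
  return $rc
}

# Sample copied from the master node output above.
master_jps='1609 SecondaryNameNode
3753 Jps
1404 NameNode
1772 ResourceManager'

expect_daemons "$master_jps" NameNode SecondaryNameNode ResourceManager
expect_daemons "$master_jps" DataNode NodeManager || echo "worker daemons are not running on master, as expected in this layout"
```

On slave1 and slave2 the expected names would be DataNode and NodeManager.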

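Web monitoring UI

Open http://master:50070 in a browser. If the NameNode status page is displayed, the system started normally.

Open http://master:8088. If the list of all applications is displayed, the system started normally.

If the Nodes page can be browsed, the system started normally.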

Check the Sqoop version

[hadoop@master ~]$ sqoop version

Warning: /usr/local/src/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /usr/local/src/sqoop/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

23/05/15 20:59:29 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

Connect Sqoop to the MySQL database

[hadoop@master ~]$ sqoop list-databases --connect jdbc:mysql://master:3306/ --username root -P

Warning: /usr/local/src/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /usr/local/src/sqoop/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

23/05/15 21:00:44 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Enter password:

23/05/15 21:00:51 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

Mon May 15 21:00:51 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

information_schema

hive

mysql

performance_schema

sample

sys

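Export HDFS data to MySQL with Sqoop

#Use the Sqoop command to export the data under "/user/test" in HDFS into MySQL. The HDFS source data has already been uploaded to the big data platform, and the MySQL database and table were created in Chapter 9. First delete the existing rows from the student table.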

[hadoop@master ~]$ mysql -u root -pPassword@123_

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 80

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use sample ;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from student;

+--------+----------+

| number | name |

+--------+----------+

| 1 | zhangsan |

| 2 | lisi |

| 3 | wangwu |

| 4 | sss |

| 5 | ss2 |

| 6 | ss3 |

+--------+----------+

6 rows in set (0.00 sec)

mysql> delete from student;

Query OK, 6 rows affected (0.00 sec)

mysql> select * from student;

Empty set (0.00 sec)

mysql> exit;

Bye

#Export the HDFS data to MySQL

[hadoop@master ~]$ sqoop export --connect "jdbc:mysql://master:3306/sample?useUnicode=true&characterEncoding=utf-8" --username root --password Password@123_ --table student --input-fields-terminated-by ',' --export-dir /user/test

Warning: /usr/local/src/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /usr/local/src/sqoop/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

23/05/15 21:07:52 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

23/05/15 21:07:52 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

23/05/15 21:07:52 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

23/05/15 21:07:52 INFO tool.CodeGenTool: Beginning code generation

Mon May 15 21:07:52 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

23/05/15 21:07:52 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `student` AS t LIMIT 1

23/05/15 21:07:52 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `student` AS t LIMIT 1

23/05/15 21:07:53 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/src/hadoop

Note: /tmp/sqoop-hadoop/compile/5002644d50ead7a138bb702aa278fab9/student.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

23/05/15 21:07:54 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/5002644d50ead7a138bb702aa278fab9/student.jar

23/05/15 21:07:54 INFO mapreduce.ExportJobBase: Beginning export of student

23/05/15 21:07:54 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

23/05/15 21:07:55 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

23/05/15 21:07:55 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

23/05/15 21:07:55 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

23/05/15 21:07:55 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.88.10:8032

23/05/15 21:07:57 INFO input.FileInputFormat: Total input paths to process : 2

23/05/15 21:07:57 INFO input.FileInputFormat: Total input paths to process : 2

23/05/15 21:07:57 INFO mapreduce.JobSubmitter: number of splits:3

23/05/15 21:07:57 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

23/05/15 21:07:58 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1684153503409_0008

23/05/15 21:07:58 INFO impl.YarnClientImpl: Submitted application application_1684153503409_0008

23/05/15 21:07:58 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1684153503409_0008/

23/05/15 21:07:58 INFO mapreduce.Job: Running job: job_1684153503409_0008

23/05/15 21:08:07 INFO mapreduce.Job: Job job_1684153503409_0008 running in uber mode : false

23/05/15 21:08:07 INFO mapreduce.Job: map 0% reduce 0%

23/05/15 21:08:26 INFO mapreduce.Job: map 100% reduce 0%

23/05/15 21:08:26 INFO mapreduce.Job: Job job_1684153503409_0008 completed successfully

23/05/15 21:08:27 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=402075

FILE: Number of read operations=0

...............
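The log above warns that passing --password on the command line is insecure. Besides the -P prompt it suggests, Sqoop 1.4.x also accepts --password-file. A minimal sketch of preparing such a file follows. PW_DIR and the file name are assumptions made for illustration (the snippet defaults to a temporary directory so it can be tried safely; on the cluster the hadoop user's home directory would be the natural place), and the password is the one used in this walkthrough.

```shell
# PW_DIR is an illustrative variable; on the cluster set PW_DIR=$HOME before running.
PW_DIR=${PW_DIR:-$(mktemp -d)}
PW_FILE=$PW_DIR/.sqoop-mysql.pwd

# Remove any earlier copy first, because a read-only file cannot be overwritten.
rm -f "$PW_FILE"

# printf '%s' writes no trailing newline; Sqoop uses the entire file content
# as the password, so a stray newline would make the login fail.
( umask 077; printf '%s' 'Password@123_' > "$PW_FILE" )

# Owner read-only.
chmod 400 "$PW_FILE"
ls -l "$PW_FILE"

# The export would then be started like this (file:// points at the local file system):
#   sqoop export --connect "jdbc:mysql://master:3306/sample?useUnicode=true&characterEncoding=utf-8" \
#     --username root --password-file "file://$PW_FILE" \
#     --table student --input-fields-terminated-by ',' --export-dir /user/test
```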

#Log in to the database and check the result

[hadoop@master ~]$ mysql -u root -pPassword@123_

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 85

Server version: 5.7.18 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use sample;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from student;

+--------+----------+

| number | name |

+--------+----------+

| 1 | zhangsan |

| 2 | lisi |

| 3 | wangwu |

| 4 | sss |

| 5 | ss2 |

| 6 | ss3 |

+--------+----------+

6 rows in set (0.00 sec)
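The export maps each comma-separated line under /user/test onto the two columns (number, name) of the student table, so a line with the wrong number of fields can make the job fail. A small pre-check sketch follows. The file contents are not shown in this walkthrough, so the sample lines are an assumption reconstructed from the table above, and check_fields is a made-up helper name.

```shell
# check_fields N: read lines on stdin and print those that do not have exactly
# N comma-separated fields; the exit status is non-zero if any such line exists.
# (Hypothetical helper name.)
check_fields() {
  awk -F',' -v n="$1" '
    NF != n { printf "line %d: %s\n", NR, $0; bad = 1 }
    END     { exit bad + 0 }
  '
}

# Assumed sample of the HDFS input, reconstructed from the table above.
sample='1,zhangsan
2,lisi
3,wangwu'

printf '%s\n' "$sample" | check_fields 2 && echo "all lines have 2 fields"

# On the cluster the same check could read the real data:
#   hdfs dfs -cat '/user/test/*' | check_fields 2
```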

Hive component verification: initialization

[hadoop@master conf]$ schematool -initSchema -dbType mysql

which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/local/src/jdk/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 2.0.0

Initialization script hive-schema-2.0.0.mysql.sql

Initialization script completed

schemaTool completed

Start Hive

[hadoop@master ~]$ hive

which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/local/src/jdk/bin:/usr/local/src/sqoop/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/src/hive/lib/hive-common-2.0.0.jar!/hive-log4j2.properties

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>