Tags: src, deployment, hadoop, master, usr, hbase, local, HBase

HBase Deployment: Using the ZooKeeper Bundled with HBase

Time synchronization

# Check whether the clocks on the three hosts agree; a difference of up to 5 seconds is tolerable

[root@master ~]# for i in master slave1 slave2;do ssh root@$i 'date';done

Thu Apr 6 17:26:38 CST 2023

Thu Apr 6 17:26:38 CST 2023

Thu Apr 6 17:26:38 CST 2023
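Reading three `date` lines works for a quick look, but comparing epoch seconds makes the 5-second tolerance checkable from a script. The following is a minimal bash sketch, assuming passwordless root SSH to each host (which the loop above already relies on); `max_skew` and `check_clock_skew` are hypothetical helper names, not part of Hadoop or HBase.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking clock skew across cluster nodes.
# Assumes passwordless root SSH to every host, as in the `date` loop above.

# max_skew EPOCH...: print the spread (largest minus smallest) of the given epoch seconds
max_skew() {
  local min=$1 max=$1 t
  for t in "$@"; do
    if (( t < min )); then min=$t; fi
    if (( t > max )); then max=$t; fi
  done
  echo $(( max - min ))
}

# check_clock_skew LIMIT HOST...: succeed if all hosts are within LIMIT seconds of each other.
# The SSH calls run one after another, so their latency is included in the measured skew;
# treat the result as an upper bound.
check_clock_skew() {
  local limit=$1; shift
  local host ts skew
  local stamps=()
  for host in "$@"; do
    ts=$(ssh "root@$host" 'date +%s') || return 2
    stamps+=("$ts")
  done
  skew=$(max_skew "${stamps[@]}")
  echo "clock skew: ${skew}s"
  (( skew <= limit ))
}
```

Usage: `check_clock_skew 5 master slave1 slave2 || echo "clocks out of sync"`.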

# To synchronize the clocks, install the ntpdate package

[root@master ~]# yum -y install ntpdate

# Synchronize with an NTP server

[root@master ~]# ntpdate time1.aliyun.com

# Note: do this on all three nodes
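Because the step has to be repeated on every node, it can be driven from master in one loop. A minimal sketch, assuming passwordless root SSH from master to every host and yum-based systems as in the commands above; `sync_all_clocks` is a hypothetical name. Note that `ntpdate` makes a one-time correction; keeping clocks aligned over time needs a running NTP service (for example ntpd or chronyd) or a periodic job.

```bash
#!/usr/bin/env bash
# Hypothetical helper: install ntpdate and run a one-shot sync on each host.
# Assumes passwordless root SSH and yum, as in the commands above.

# sync_all_clocks NTP_SERVER HOST...
sync_all_clocks() {
  local server=$1; shift
  local host
  for host in "$@"; do
    echo "== $host"
    # Stop at the first host that fails so the problem is easy to spot
    ssh "root@$host" "yum -y install ntpdate && ntpdate $server" || return 1
  done
}
```

Usage: `sync_all_clocks time1.aliyun.com master slave1 slave2`.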

Extract the installation package and rename the directory

[root@master ~]# ls

apache-hive-2.0.0-bin.tar.gz mysql

hadoop-2.7.1.tar.gz mysql-5.7.18.zip

hbase-1.2.1-bin.tar.gz mysql-connector-java-5.1.46.jar

jdk-8u152-linux-x64.tar.gz zookeeper-3.4.8.tar.gz

[root@master ~]# tar xf hbase-1.2.1-bin.tar.gz -C /usr/local/src

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

hadoop hbase-1.2.1 hive jdk zookeeper

[root@master src]# mv hbase-1.2.1 hbase

[root@master src]# ls

hadoop hbase hive jdk zookeeper

[root@master ~]# cd /usr/local/src/hbase/conf/

[root@master conf]# ls

hadoop-metrics2-hbase.properties hbase-env.sh hbase-site.xml regionservers

hbase-env.cmd hbase-policy.xml log4j.properties

Configure the HBase environment variables

# Create the hbase.sh file

[root@master ~]# vi /etc/profile.d/hbase.sh

# Add the following content

export HBASE_HOME=/usr/local/src/hbase

export PATH=${HBASE_HOME}/bin:$PATH
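The same file can be written non-interactively, which is handy when the step is repeated. A sketch, assuming the install path /usr/local/src/hbase used throughout; `write_hbase_profile` is a hypothetical helper. The `\$` escapes keep `${HBASE_HOME}` and `$PATH` unexpanded in the file so they are resolved at login time.

```bash
#!/usr/bin/env bash
# Hypothetical helper that writes the profile.d script shown above.

# write_hbase_profile FILE HBASE_HOME
write_hbase_profile() {
  local file=$1 home=$2
  # Unquoted heredoc: $home is expanded now; \${HBASE_HOME} and \$PATH are written literally
  cat > "$file" <<EOF
export HBASE_HOME=$home
export PATH=\${HBASE_HOME}/bin:\$PATH
EOF
}
```

Usage (as root): `write_hbase_profile /etc/profile.d/hbase.sh /usr/local/src/hbase`.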

# In /usr/local/src/hbase/conf/, change the following in hbase-env.sh (the two PermSize lines are commented out because they are only needed on JDK 7)

export JAVA_HOME=/usr/local/src/jdk

export HBASE_CLASSPATH=/usr/local/src/hadoop/etc/hadoop

export HBASE_MANAGES_ZK=true

#export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

#export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"
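These edits can also be applied with `sed` instead of `vi`. A minimal sketch, assuming GNU sed (for `-i`) and values without `|` characters; `set_env_var` and `comment_out_permsize` are hypothetical helper names. Pass `false` instead of `true` for HBASE_MANAGES_ZK when an external ZooKeeper is used.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for editing conf/hbase-env.sh non-interactively.
# Assumes GNU sed (in-place editing with -i) and values that contain no '|'.

# set_env_var FILE NAME VALUE: turn an existing (possibly commented-out) "export NAME=..."
# line into "export NAME=VALUE", or append one if none exists
set_env_var() {
  local file=$1 name=$2 value=$3
  if grep -Eq "^[#[:space:]]*export ${name}=" "$file"; then
    sed -i -E "s|^[#[:space:]]*export ${name}=.*|export ${name}=${value}|" "$file"
  else
    echo "export ${name}=${value}" >> "$file"
  fi
}

# comment_out_permsize FILE: prefix the JDK 7-only PermSize option lines with '#'
# (already commented lines no longer start with "export", so reruns change nothing)
comment_out_permsize() {
  sed -i -E 's/^(export HBASE_(MASTER|REGIONSERVER)_OPTS=.*PermSize.*)$/#\1/' "$1"
}
```

Usage: with `conf=/usr/local/src/hbase/conf/hbase-env.sh`, run `set_env_var "$conf" JAVA_HOME /usr/local/src/jdk`, `set_env_var "$conf" HBASE_CLASSPATH /usr/local/src/hadoop/etc/hadoop`, `set_env_var "$conf" HBASE_MANAGES_ZK true` and `comment_out_permsize "$conf"`.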

Configure hbase-site.xml on the master node

[root@master conf]# vi hbase-site.xml

# Add the following inside <configuration></configuration>

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.master.info.port</name>

<value>60010</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>120000</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,slave1,slave2</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/usr/local/src/hbase/tmp</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

</configuration>
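Generating the file from a script avoids typos in the tag nesting. A minimal sketch; `emit_site_xml` is a hypothetical helper that takes name/value pairs and prints a complete hbase-site.xml. It does not escape XML special characters, so values must not contain `<`, `>` or `&`. The host and port in `hbase.rootdir` are assumed to match `fs.defaultFS` in Hadoop's core-site.xml (here hdfs://master:9000).

```bash
#!/usr/bin/env bash
# Hypothetical generator for hbase-site.xml.
# Values are inserted verbatim (no XML escaping), so avoid <, > and & in them.

# emit_site_xml NAME VALUE [NAME VALUE ...]
emit_site_xml() {
  if (( $# % 2 != 0 )); then
    echo "emit_site_xml: expected NAME VALUE pairs" >&2
    return 1
  fi
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  while (( $# > 0 )); do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
    shift 2
  done
  echo '</configuration>'
}
```

Usage: `emit_site_xml hbase.rootdir hdfs://master:9000/hbase hbase.master.info.port 60010 hbase.zookeeper.property.clientPort 2181 zookeeper.session.timeout 120000 hbase.zookeeper.quorum master,slave1,slave2 hbase.tmp.dir /usr/local/src/hbase/tmp hbase.cluster.distributed true > /usr/local/src/hbase/conf/hbase-site.xml`.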

Edit the regionservers file on the master node

[root@master conf]# vi regionservers

# Delete localhost and write one slave hostname per line

slave1

slave2

Create the hbase.tmp.dir directory on the master node

[root@master hbase]# mkdir /usr/local/src/hbase/tmp

[root@master hbase]# ls

bin CHANGES.txt conf docs hbase-webapps LEGAL lib LICENSE.txt NOTICE.txt README.txt tmp

Copy the HBase installation from master to slave1 and slave2

[root@master ~]# scp -r /usr/local/src/hbase/ root@slave1:/usr/local/src/

[root@master ~]# scp -r /usr/local/src/hbase/ root@slave2:/usr/local/src/
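Instead of one `scp` per slave, the host list can be read from the regionservers file edited above. A sketch, assuming passwordless root SSH, the same parent directory on every host, and that regionservers lists every node that needs a copy; `distribute_hbase` is a hypothetical name. The host list is read on file descriptor 3 so that commands inside the loop cannot consume it from standard input.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the HBase directory to every host in conf/regionservers.
# Assumes passwordless root SSH and the same parent directory on every host.

# distribute_hbase HBASE_DIR
distribute_hbase() {
  local dir=${1%/} host parent
  parent=$(dirname "$dir")
  # Read hosts on fd 3; the extra test also handles a last line without a newline
  while read -r host <&3 || [[ -n $host ]]; do
    # Skip blank lines and comments
    [[ -z $host || $host == \#* ]] && continue
    echo "copying $dir to $host:$parent/"
    scp -r "$dir" "root@$host:$parent/" || return 1
  done 3< "$dir/conf/regionservers"
}
```

Usage: `distribute_hbase /usr/local/src/hbase/`.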

Change the ownership of the HBase directory on all nodes

#master

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

[root@master ~]# ll /usr/local/src/

total 4

drwxr-xr-x. 12 hadoop hadoop 183 Mar 15 16:11 hadoop

drwxr-xr-x 8 hadoop hadoop 171 Apr 6 20:43 hbase

drwxr-xr-x 9 hadoop hadoop 170 Mar 25 18:14 hive

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

drwxr-xr-x 12 hadoop hadoop 4096 Mar 29 09:56 zookeeper

#slave1

[root@slave1 ~]# chown -R hadoop.hadoop /usr/local/src/

[root@slave1 ~]# ll /usr/local/src/

total 4

drwxr-xr-x 12 hadoop hadoop 183 Mar 15 16:14 hadoop

drwxr-xr-x 8 hadoop hadoop 171 Apr 6 20:48 hbase

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

drwxr-xr-x 12 hadoop hadoop 4096 Mar 29 09:58 zookeeper

#slave2

[root@slave2 ~]# chown -R hadoop.hadoop /usr/local/src/

[root@slave2 ~]# ll /usr/local/src/

total 4

drwxr-xr-x 12 hadoop hadoop 183 Mar 15 16:14 hadoop

drwxr-xr-x 8 hadoop hadoop 171 Apr 6 20:48 hbase

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

drwxr-xr-x 12 hadoop hadoop 4096 Mar 29 09:58 zookeeper

Copy the hbase.sh environment script from master to the slave nodes

[root@master ~]# scp /etc/profile.d/hbase.sh root@slave1:/etc/profile.d/

hbase.sh 100% 75 67.7KB/s 00:00

[root@master ~]# scp /etc/profile.d/hbase.sh root@slave2:/etc/profile.d/

hbase.sh 100% 75 75.3KB/s 00:00

# Source the script, then check the environment on all three hosts

[root@master ~]# source /etc/profile.d/hbase.sh

[root@master ~]# echo $PATH

/usr/local/src/hbase/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/src/hive/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/src/zookeeper/bin:/usr/local/src/jdk/bin:/root/bin

[root@slave1 ~]# source /etc/profile.d/hbase.sh

[root@slave1 ~]# echo $PATH

/usr/local/src/hbase/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/src/zookeeper/bin:/usr/local/src/jdk/bin:/root/bin

[root@slave2 ~]# source /etc/profile.d/hbase.sh

[root@slave2 ~]# echo $PATH

/usr/local/src/hbase/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/src/zookeeper/bin:/usr/local/src/jdk/bin:/root/bin
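Printing `$PATH` only proves the variable was set in the current shell. Asking each node which `hbase` binary a fresh login shell resolves is a stricter check. A sketch, assuming passwordless root SSH; `check_hbase_on_path` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical check: does a fresh login shell on each host find HBase's bin/hbase?
# Assumes passwordless root SSH. `bash -lc` starts a login shell, so /etc/profile.d is read.

# check_hbase_on_path HOST...
check_hbase_on_path() {
  local host out rc=0
  for host in "$@"; do
    out=$(ssh "root@$host" 'bash -lc "command -v hbase"')
    if [[ $out == */hbase/bin/hbase ]]; then
      echo "$host: OK ($out)"
    else
      echo "$host: hbase not found on PATH"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_hbase_on_path master slave1 slave2`.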

Switch to the hadoop user

[root@master ~]# su - hadoop

Last login: Wed Mar 29 10:20:38 CST 2023 on pts/0

[hadoop@master ~]$

[root@slave1 ~]# su - hadoop

Last login: Wed Mar 29 10:20:41 CST 2023 on pts/0

[hadoop@slave1 ~]$

[root@slave2 ~]# su - hadoop

Last login: Wed Mar 29 10:20:48 CST 2023 on pts/0

[hadoop@slave2 ~]$

Start HBase

# Start Hadoop first, then HBase

# Start Hadoop

[hadoop@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out

192.168.88.30: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out

192.168.88.20: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out

192.168.88.30: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out

192.168.88.20: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out

[hadoop@master ~]$ jps

1497 NameNode

1851 ResourceManager

1692 SecondaryNameNode

2111 Jps

[hadoop@slave1 ~]$ jps

1367 NodeManager

1256 DataNode

1487 Jps

[hadoop@slave2 ~]$ jps

1428 NodeManager

1317 DataNode

1549 Jps

# Start HBase

[hadoop@master ~]$ start-hbase.sh

slave2: starting zookeeper, logging to /usr/local/src/hbase/logs/hbase-hadoop-zookeeper-slave2.out

master: starting zookeeper, logging to /usr/local/src/hbase/logs/hbase-hadoop-zookeeper-master.out

slave1: starting zookeeper, logging to /usr/local/src/hbase/logs/hbase-hadoop-zookeeper-slave1.out

starting master, logging to /usr/local/src/hbase/logs/hbase-hadoop-master-master.out

slave2: starting regionserver, logging to /usr/local/src/hbase/logs/hbase-hadoop-regionserver-slave2.out

slave1: starting regionserver, logging to /usr/local/src/hbase/logs/hbase-hadoop-regionserver-slave1.out

[hadoop@master ~]$ jps

2519 Jps

1497 NameNode

2393 HMaster

1851 ResourceManager

1692 SecondaryNameNode

2335 HQuorumPeer

[hadoop@slave1 ~]$ jps

1600 HRegionServer

1367 NodeManager

1256 DataNode

1533 HQuorumPeer

1757 Jps

[hadoop@slave2 ~]$ jps

1428 NodeManager

1317 DataNode

1848 Jps

1594 HQuorumPeer

1661 HRegionServer
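Comparing `jps` listings by eye across three terminals is error-prone. The sketch below checks each node for the daemons expected in this bundled-ZooKeeper layout (HQuorumPeer on all three nodes, HMaster on master, HRegionServer on the slaves). It assumes passwordless SSH for the hadoop user, which start-all.sh already relies on; `missing_daemons` and `check_node` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for verifying jps output on each node.
# Assumes passwordless SSH as the hadoop user.

# missing_daemons "JPS_OUTPUT" NAME...: print each expected daemon absent from the listing
missing_daemons() {
  local listing=$1; shift
  local name
  for name in "$@"; do
    # jps prints "PID Name"; match the second column exactly
    awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' <<< "$listing" || echo "$name"
  done
}

# check_node HOST NAME...: run jps on HOST (login shell, so the JDK is on PATH) and report
check_node() {
  local host=$1; shift
  local missing
  missing=$(missing_daemons "$(ssh "hadoop@$host" 'bash -lc jps')" "$@")
  if [[ -n $missing ]]; then
    # Unquoted $missing on purpose: joins the names onto one line
    echo "$host missing: $(echo $missing)"
    return 1
  fi
  echo "$host OK"
}
```

Usage: `check_node master NameNode SecondaryNameNode ResourceManager HMaster HQuorumPeer` and `check_node slave1 DataNode NodeManager HRegionServer HQuorumPeer`. With an external ZooKeeper the process is named QuorumPeerMain instead of HQuorumPeer.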

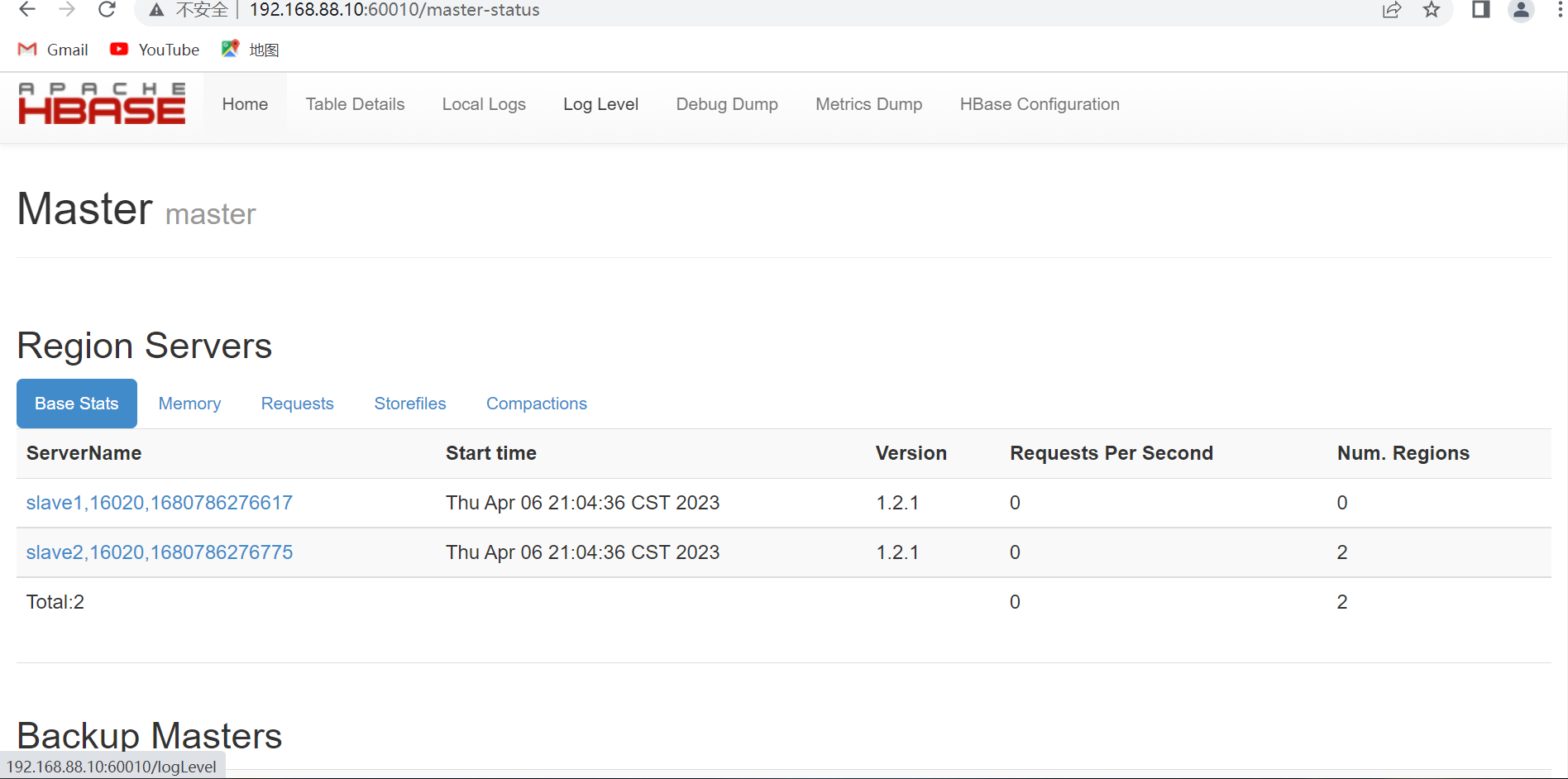

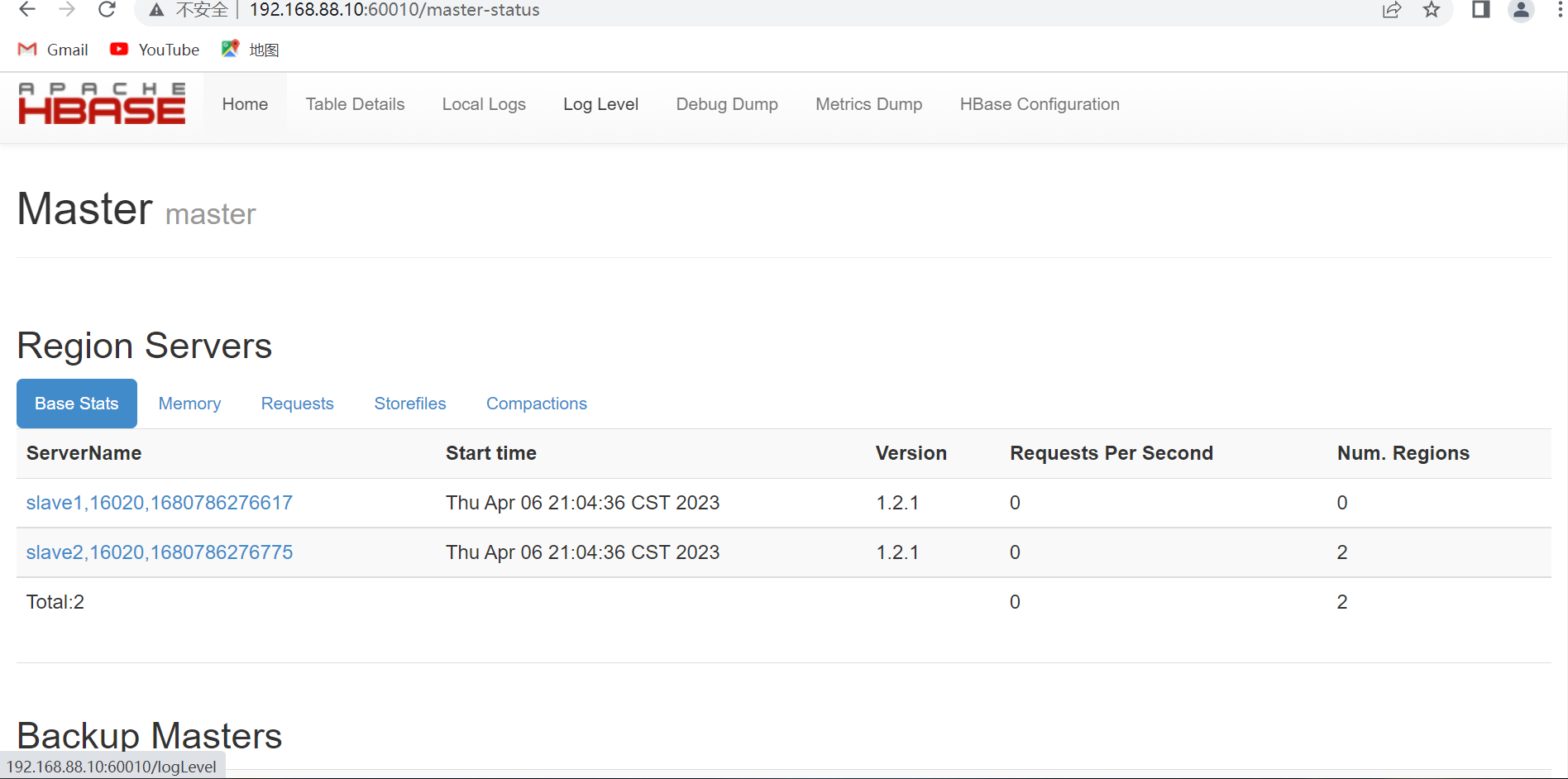

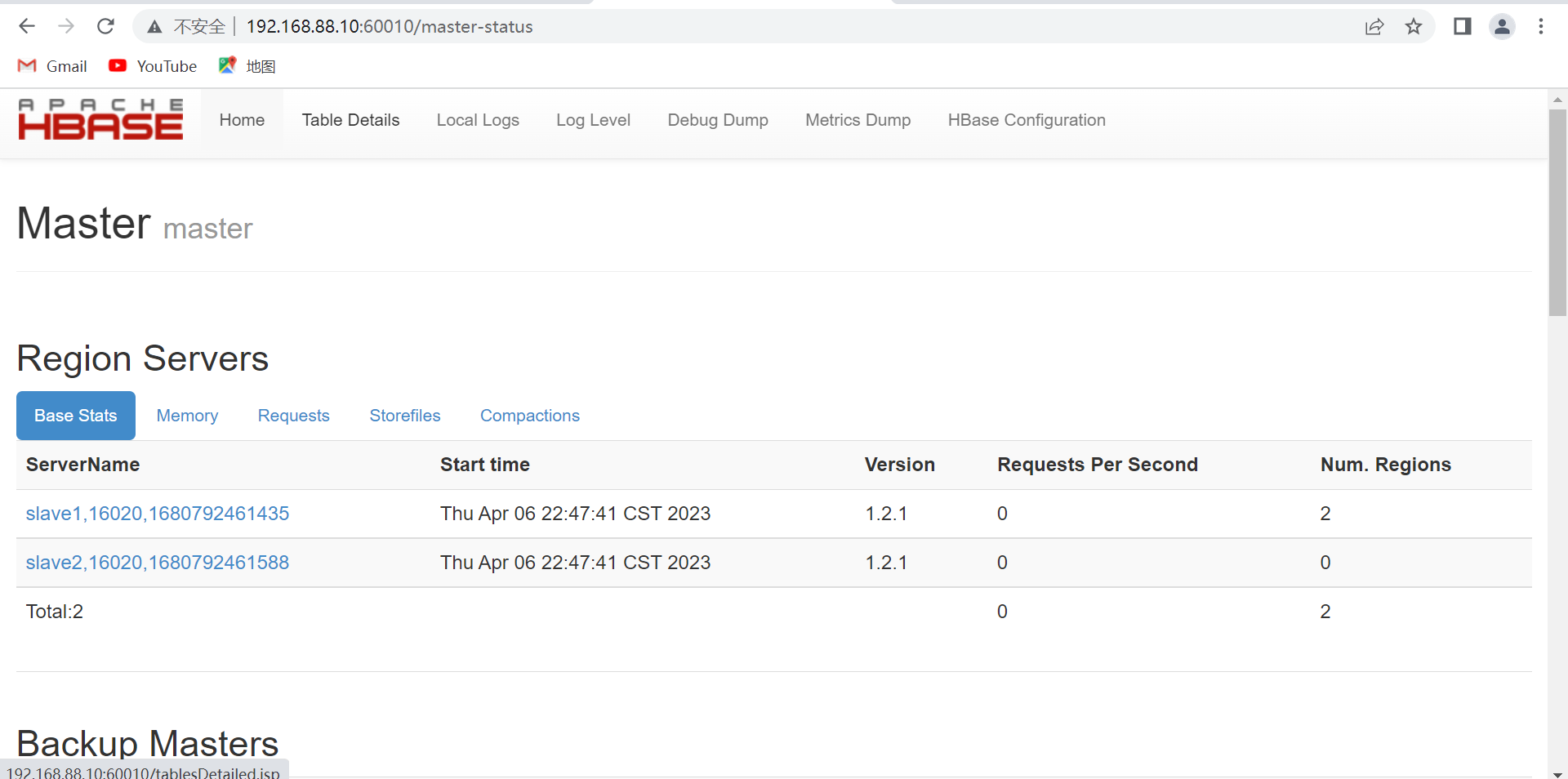

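Open master:60010 in a browser to reach the HBase Master web UI shown in the screenshots. (Port 60010 comes from hbase.master.info.port above; without that setting, HBase 1.x serves the UI on 16010.)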

HBase Deployment: Using a Separately Installed ZooKeeper

Time synchronization

# Install the ntpdate package

[root@master ~]# yum -y install ntpdate

# Synchronize with an NTP server

[root@master ~]# ntpdate time1.aliyun.com

# Do this on all three nodes

# Check whether the clocks on the three hosts agree; a difference of up to 5 seconds is tolerable

[root@master ~]# for i in master slave1 slave2;do ssh root@$i 'date';done

Thu Apr 6 22:13:47 CST 2023

Thu Apr 6 22:13:47 CST 2023

Thu Apr 6 22:13:47 CST 2023

Extract the installation package and rename the directory

[root@master ~]# ls

apache-hive-2.0.0-bin.tar.gz mysql

hadoop-2.7.1.tar.gz mysql-5.7.18.zip

hbase-1.2.1-bin.tar.gz mysql-connector-java-5.1.46.jar

jdk-8u152-linux-x64.tar.gz zookeeper-3.4.8.tar.gz

[root@master ~]# tar xf hbase-1.2.1-bin.tar.gz -C /usr/local/src

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

hadoop hbase-1.2.1 hive jdk zookeeper

[root@master src]# mv hbase-1.2.1 hbase

[root@master src]# ls

hadoop hbase hive jdk zookeeper

[root@master ~]# cd /usr/local/src/hbase/conf/

[root@master conf]# ls

hadoop-metrics2-hbase.properties hbase-env.sh hbase-site.xml regionservers

hbase-env.cmd hbase-policy.xml log4j.properties

Configure the HBase environment variables

# Create the hbase.sh file

[root@master ~]# vi /etc/profile.d/hbase.sh

# Add the following content

export HBASE_HOME=/usr/local/src/hbase

export PATH=${HBASE_HOME}/bin:$PATH

# In /usr/local/src/hbase/conf/, change the following in hbase-env.sh (the two PermSize lines are commented out because they are only needed on JDK 7)

export JAVA_HOME=/usr/local/src/jdk

export HBASE_CLASSPATH=/usr/local/src/hadoop/etc/hadoop

export HBASE_MANAGES_ZK=false # do not use the bundled ZooKeeper

#export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

#export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

Configure HBase

Configure hbase-site.xml on the master node

[root@master conf]# vi hbase-site.xml

# Add the following inside <configuration></configuration>

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master:9000/hbase</value>

</property>

<property>

<name>hbase.master.info.port</name>

<value>60010</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>120000</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master,slave1,slave2</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/usr/local/src/hbase/tmp</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

</configuration>
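With HBASE_MANAGES_ZK=false, HBase only connects to ZooKeeper as a client, so `hbase.zookeeper.quorum` should name the same hosts as the `server.N` entries in ZooKeeper's own zoo.cfg. A sketch of that consistency check, assuming zoo.cfg at /usr/local/src/zookeeper/conf/zoo.cfg (the path zkServer.sh reports) with entries of the form `server.1=master:2888:3888`; `zoo_cfg_hosts`, `quorum_hosts` and `check_quorum_matches` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical consistency check between zoo.cfg and hbase.zookeeper.quorum.
# Assumes zoo.cfg lists the ensemble as server.N=host:peerPort:electionPort lines.

# zoo_cfg_hosts ZOO_CFG: print the ensemble host names, sorted
zoo_cfg_hosts() {
  sed -nE 's/^server\.[0-9]+=([^:]+):.*/\1/p' "$1" | sort
}

# quorum_hosts "h1,h2,...": print the hosts of an hbase.zookeeper.quorum value, sorted
# (spaces and any :port suffix on an entry are dropped)
quorum_hosts() {
  echo "$1" | tr -d ' ' | tr ',' '\n' | sed 's/:.*//' | sort
}

# check_quorum_matches ZOO_CFG QUORUM: succeed if both name the same set of hosts
check_quorum_matches() {
  local a b
  a=$(zoo_cfg_hosts "$1")
  b=$(quorum_hosts "$2")
  if [[ $a == "$b" ]]; then
    echo "quorum matches zoo.cfg"
  else
    # Unquoted $a/$b on purpose: joins each list onto one line
    echo "mismatch: zoo.cfg has [$(echo $a)], hbase.zookeeper.quorum has [$(echo $b)]"
    return 1
  fi
}
```

Usage: `check_quorum_matches /usr/local/src/zookeeper/conf/zoo.cfg master,slave1,slave2`.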

Edit the regionservers file on the master node

[root@master conf]# vi regionservers

# Delete localhost and write one slave hostname per line

slave1

slave2

Create the hbase.tmp.dir directory on the master node

[root@master hbase]# mkdir /usr/local/src/hbase/tmp

[root@master hbase]# ls

bin CHANGES.txt conf docs hbase-webapps LEGAL lib LICENSE.txt NOTICE.txt README.txt tmp

Copy the HBase installation from master to slave1 and slave2

[root@master ~]# scp -r /usr/local/src/hbase/ root@slave1:/usr/local/src/

[root@master ~]# scp -r /usr/local/src/hbase/ root@slave2:/usr/local/src/

Change the ownership of the HBase directory on all nodes

#master

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

[root@master ~]# ll /usr/local/src/

total 4

drwxr-xr-x. 12 hadoop hadoop 183 Mar 15 16:11 hadoop

drwxr-xr-x 8 hadoop hadoop 171 Apr 6 22:43 hbase

drwxr-xr-x 9 hadoop hadoop 170 Mar 25 18:14 hive

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

drwxr-xr-x 12 hadoop hadoop 4096 Mar 29 09:56 zookeeper

#slave1

[root@slave1 ~]# chown -R hadoop.hadoop /usr/local/src/

[root@slave1 ~]# ll /usr/local/src/

total 4

drwxr-xr-x 12 hadoop hadoop 183 Mar 15 16:14 hadoop

drwxr-xr-x 8 hadoop hadoop 171 Apr 6 22:48 hbase

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

drwxr-xr-x 12 hadoop hadoop 4096 Mar 29 09:58 zookeeper

#slave2

[root@slave2 ~]# chown -R hadoop.hadoop /usr/local/src/

[root@slave2 ~]# ll /usr/local/src/

total 4

drwxr-xr-x 12 hadoop hadoop 183 Mar 15 16:14 hadoop

drwxr-xr-x 8 hadoop hadoop 171 Apr 6 22:48 hbase

drwxr-xr-x. 8 hadoop hadoop 255 Sep 14 2017 jdk

drwxr-xr-x 12 hadoop hadoop 4096 Mar 29 09:58 zookeeper

Copy the hbase.sh environment script from master to the slave nodes

[root@master ~]# scp /etc/profile.d/hbase.sh root@slave1:/etc/profile.d/

hbase.sh 100% 75 67.7KB/s 00:00

[root@master ~]# scp /etc/profile.d/hbase.sh root@slave2:/etc/profile.d/

hbase.sh 100% 75 75.3KB/s 00:00

# Source the script, then check the environment on all three hosts

[root@master ~]# source /etc/profile.d/hbase.sh

[root@master ~]# echo $PATH

/usr/local/src/hbase/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/src/hive/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/src/zookeeper/bin:/usr/local/src/jdk/bin:/root/bin

[root@slave1 ~]# source /etc/profile.d/hbase.sh

[root@slave1 ~]# echo $PATH

/usr/local/src/hbase/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/src/zookeeper/bin:/usr/local/src/jdk/bin:/root/bin

[root@slave2 ~]# source /etc/profile.d/hbase.sh

[root@slave2 ~]# echo $PATH

/usr/local/src/hbase/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/local/src/zookeeper/bin:/usr/local/src/jdk/bin:/root/bin

Switch to the hadoop user

[root@master ~]# su - hadoop

Last login: Wed Mar 29 10:20:38 CST 2023 on pts/0

[hadoop@master ~]$

[root@slave1 ~]# su - hadoop

Last login: Wed Mar 29 10:20:41 CST 2023 on pts/0

[hadoop@slave1 ~]$

[root@slave2 ~]# su - hadoop

Last login: Wed Mar 29 10:20:48 CST 2023 on pts/0

[hadoop@slave2 ~]$

Start the services

# Start Hadoop first, then ZooKeeper, and finally HBase

# Start Hadoop

[hadoop@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out

192.168.88.30: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out

192.168.88.20: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out

192.168.88.30: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out

192.168.88.20: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out

[hadoop@master ~]$ jps

1497 NameNode

1851 ResourceManager

1692 SecondaryNameNode

2111 Jps

[hadoop@slave1 ~]$ jps

1367 NodeManager

1256 DataNode

1487 Jps

[hadoop@slave2 ~]$ jps

1428 NodeManager

1317 DataNode

1549 Jps

# Start ZooKeeper on all three nodes

[hadoop@master ~]$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@master ~]$ jps

1473 NameNode

1827 ResourceManager

1640 SecondaryNameNode

2136 Jps

2109 QuorumPeerMain

[hadoop@slave1 ~]$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@slave1 ~]$ jps

1301 DataNode

1369 NodeManager

1582 Jps

1551 QuorumPeerMain

[hadoop@slave2 ~]$ zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@slave2 ~]$ jps

1296 DataNode

1571 Jps

1364 NodeManager

1546 QuorumPeerMain
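`STARTED` only means the process was launched, and `jps` likewise lists QuorumPeerMain before an ensemble has actually formed. `zkServer.sh status` reports each server's role, and a healthy three-node ensemble has exactly one leader. A sketch, assuming passwordless SSH for the hadoop user; `zk_modes` and `quorum_healthy` are hypothetical helpers, and a host whose status output has no `Mode:` line is reported as `down`.

```bash
#!/usr/bin/env bash
# Hypothetical ZooKeeper ensemble check based on `zkServer.sh status`.
# Assumes passwordless SSH as the hadoop user; a login shell puts zkServer.sh on PATH.

# zk_modes HOST...: print "HOST MODE" (leader, follower, standalone or down) per host
zk_modes() {
  local host mode
  for host in "$@"; do
    mode=$(ssh "hadoop@$host" 'bash -lc "zkServer.sh status"' 2>&1 | awk -F': ' '/^Mode:/ {print $2}')
    echo "$host ${mode:-down}"
  done
}

# quorum_healthy: read "HOST MODE" lines on stdin; succeed if there is exactly one
# leader and no host is down (a standalone server does not count as a leader)
quorum_healthy() {
  awk '$2 == "leader" { l++ } $2 == "down" { d++ } END { exit !(l == 1 && d == 0) }'
}
```

Usage: `zk_modes master slave1 slave2 | quorum_healthy && echo "ZooKeeper quorum OK"`.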

# Start HBase

[hadoop@master conf]$ start-hbase.sh

starting master, logging to /usr/local/src/hbase/logs/hbase-hadoop-master-master.out

slave1: starting regionserver, logging to /usr/local/src/hbase/logs/hbase-hadoop-regionserver-slave1.out

slave2: starting regionserver, logging to /usr/local/src/hbase/logs/hbase-hadoop-regionserver-slave2.out

[hadoop@master conf]$ jps

1473 NameNode

1827 ResourceManager

2691 Jps

1640 SecondaryNameNode

2474 HMaster

2109 QuorumPeerMain

[hadoop@slave1 conf]$ jps

1301 DataNode

1369 NodeManager

1771 Jps

1629 HRegionServer

1551 QuorumPeerMain

[hadoop@slave2 conf]$ jps

1296 DataNode

1762 Jps

1619 HRegionServer

1364 NodeManager

1546 QuorumPeerMain

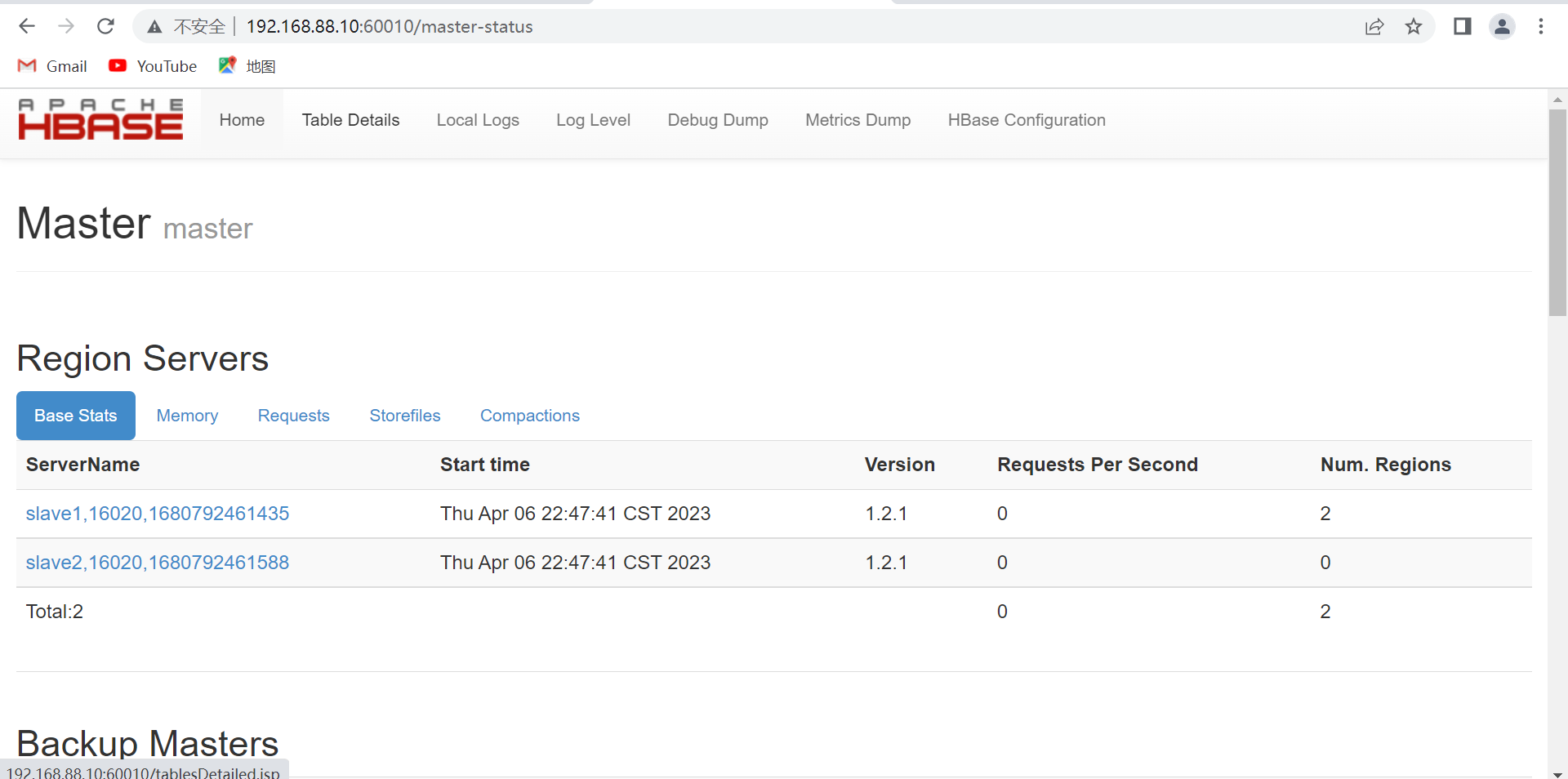

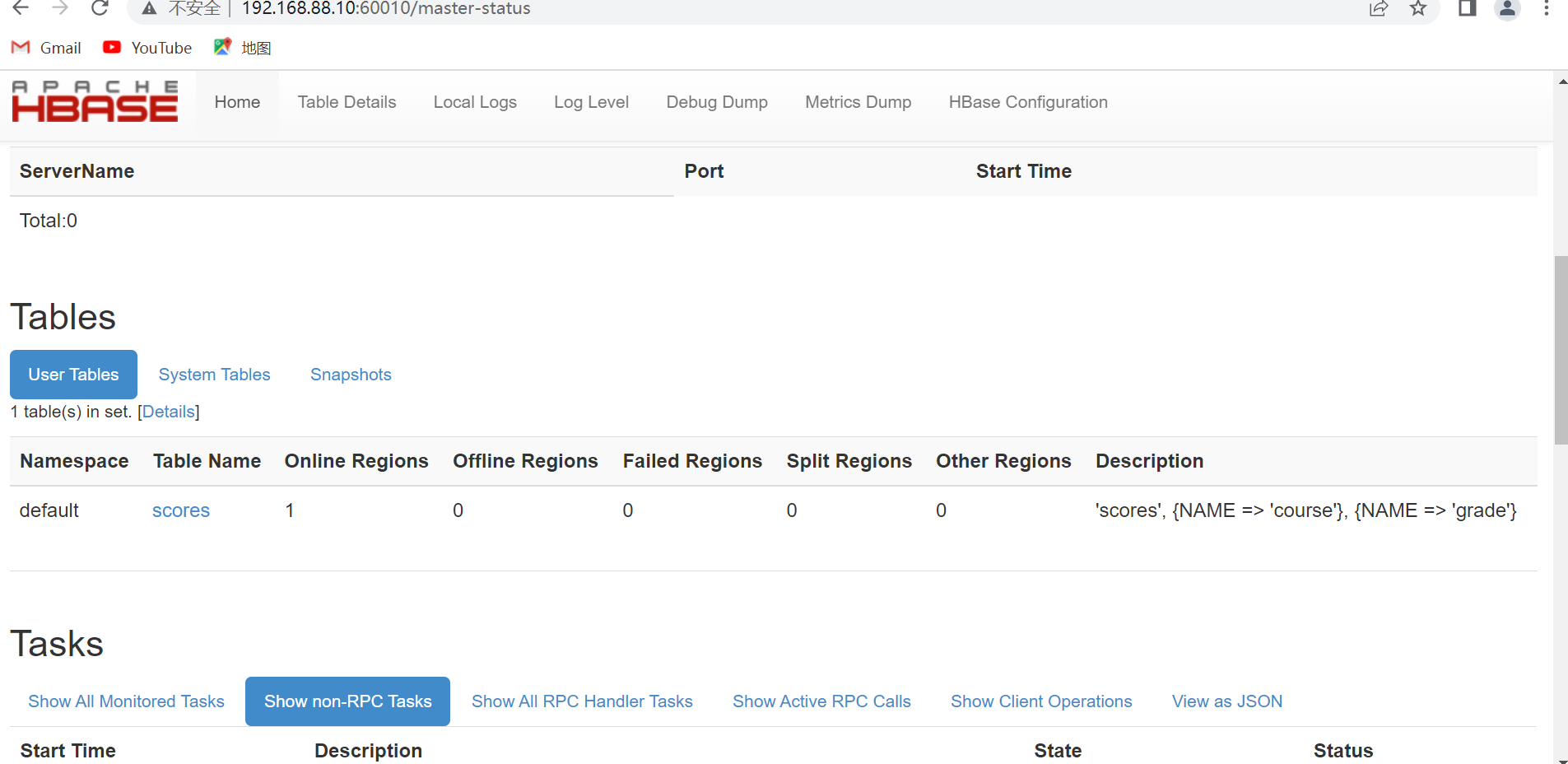

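Open master:60010 in a browser to reach the HBase Master web UI shown in the screenshots. (Port 60010 comes from hbase.master.info.port above; without that setting, HBase 1.x serves the UI on 16010.)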

Common HBase Shell Commands

Start the HBase shell

[hadoop@master ~]$ hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hbase/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.1, r8d8a7107dc4ccbf36a92f64675dc60392f85c015, Wed Mar 30 11:19:21 CDT 2016

hbase(main):001:0>

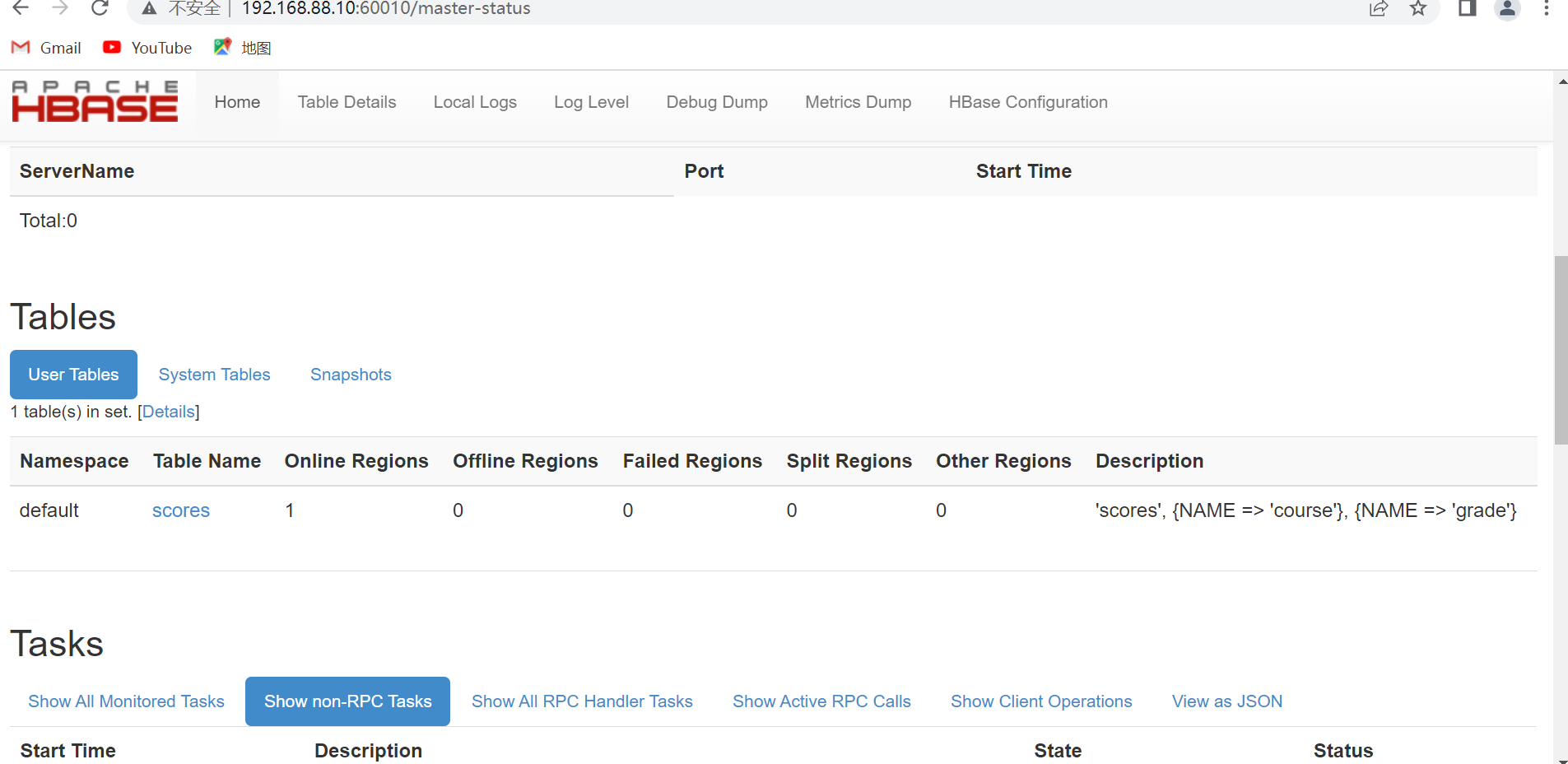

Create the table scores with two column families, grade and course

hbase(main):001:0> create 'scores','grade','course'

0 row(s) in 2.4730 seconds

=> Hbase::Table - scores

Check the cluster status

hbase(main):002:0> status

1 active master, 0 backup masters, 2 servers, 0 dead, 1.5000 average load
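The `status` summary can also be checked from a script by piping the command into `hbase shell`. A sketch; `status_ok` is a hypothetical helper that parses the one-line summary format shown above and succeeds when the number of live region servers equals the expected count and none are dead. The parsing assumes this exact wording, which may differ in other HBase versions.

```bash
#!/usr/bin/env bash
# Hypothetical parser for the one-line `status` summary of the HBase 1.2 shell, e.g.
#   1 active master, 0 backup masters, 2 servers, 0 dead, 1.5000 average load

# status_ok EXPECTED_SERVERS: read shell output on stdin; succeed if the summary reports
# EXPECTED_SERVERS live region servers and 0 dead ones
status_ok() {
  awk -v want="$1" '
    /active master/ {
      for (i = 1; i < NF; i++) {
        if ($(i + 1) ~ /^servers,?$/) live = $i
        if ($(i + 1) ~ /^dead,?$/) dead = $i
      }
      seen = 1
    }
    END { exit !(seen && live == want && dead == 0) }'
}
```

Usage: `echo status | hbase shell 2>/dev/null | status_ok 2 && echo "2 region servers up"`.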

Check the HBase version

hbase(main):003:0> version

1.2.1, r8d8a7107dc4ccbf36a92f64675dc60392f85c015, Wed Mar 30 11:19:21 CDT 2016

List the tables

hbase(main):004:0> list

TABLE

scores

1 row(s) in 0.0200 seconds

=> ["scores"]

Insert record 1: jie, grade:, 146cloud

hbase(main):005:0> put 'scores','jie','grade:','146cloud'

0 row(s) in 0.1690 seconds

Insert record 2: jie, course:math, 86

hbase(main):006:0> put 'scores','jie','course:math','86'

0 row(s) in 0.0250 seconds

Insert record 3: jie, course:cloud, 92

hbase(main):007:0> put 'scores','jie','course:cloud','92'

0 row(s) in 0.0120 seconds

Insert record 4: shi, grade:, 133soft

hbase(main):008:0> put 'scores','shi','grade:','133soft'

0 row(s) in 0.0110 seconds

Insert record 5: shi, course:math, 87

hbase(main):009:0> put 'scores','shi','course:math','87'

0 row(s) in 0.0080 seconds

Insert record 6: shi, course:cloud, 96

hbase(main):010:0> put 'scores','shi','course:cloud','96'

0 row(s) in 0.0100 seconds
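Typing six `put` commands is fine once; for repeatable test data the commands can be generated and piped into `hbase shell`. A sketch, assuming each input line is `ROW COLUMN VALUE` with no whitespace in ROW or COLUMN and no single quotes anywhere; `hbase_put_script` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical generator of HBase shell `put` commands.
# Input lines: ROW COLUMN VALUE (ROW and COLUMN without whitespace, no single quotes).

# hbase_put_script TABLE: read records on stdin, print one put command per record
hbase_put_script() {
  local table=$1 row col value
  while read -r row col value; do
    [[ -z $row ]] && continue
    # A quote would break the generated shell string, so refuse it
    if [[ $row$col$value == *"'"* ]]; then
      echo "single quotes not supported: $row $col $value" >&2
      return 1
    fi
    printf "put '%s','%s','%s','%s'\n" "$table" "$row" "$col" "$value"
  done
}
```

Usage: `printf '%s\n' 'jie grade: 146cloud' 'jie course:math 86' 'shi course:math 87' | hbase_put_script scores | hbase shell`.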

Read the row for jie

hbase(main):011:0> get 'scores','jie'

COLUMN CELL

course:cloud timestamp=1680787218638, value=92

course:math timestamp=1680787127230, value=86

grade: timestamp=1680787034649, value=146cloud

3 row(s) in 0.0500 seconds

Read jie's class (the grade column family)

hbase(main):012:0> get 'scores','jie','grade'

COLUMN CELL

grade: timestamp=1680787034649, value=146cloud

1 row(s) in 0.0100 seconds

Scan the whole table

hbase(main):013:0> scan 'scores'

ROW COLUMN+CELL

jie column=course:cloud, timestamp=1680787218638, value=92

jie column=course:math, timestamp=1680787127230, value=86

jie column=grade:, timestamp=1680787034649, value=146cloud

shi column=course:cloud, timestamp=1680787431479, value=96

shi column=course:math, timestamp=1680787369769, value=87

shi column=grade:, timestamp=1680787290357, value=133soft

2 row(s) in 0.0400 seconds

Scan the table by column family

hbase(main):014:0> scan 'scores',{COLUMN=>'course'}

ROW COLUMN+CELL

jie column=course:cloud, timestamp=1680787218638, value=92

jie column=course:math, timestamp=1680787127230, value=86

shi column=course:cloud, timestamp=1680787431479, value=96

shi column=course:math, timestamp=1680787369769, value=87

2 row(s) in 0.0220 seconds
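Shell output is meant for people, but it can still be filtered in a pipeline. A sketch; `scan_column` is a hypothetical awk filter that picks one column's cells out of `scan` output in the `ROW column=FAMILY:QUALIFIER, timestamp=..., value=...` layout shown above and prints `ROW VALUE` pairs. It assumes row keys without spaces.

```bash
#!/usr/bin/env bash
# Hypothetical filter over HBase shell `scan` output (layout as printed by HBase 1.2).
# Assumes row keys contain no whitespace.

# scan_column FAMILY:QUALIFIER: read scan output on stdin, print "ROW VALUE" for that column
scan_column() {
  awk -v col="column=$1," '
    $2 == col {
      v = $0
      sub(/.*value=/, "", v)   # keep everything after "value="
      print $1, v
    }'
}
```

Usage: `echo "scan 'scores',{COLUMN=>'course'}" | hbase shell 2>/dev/null | scan_column course:math`.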

Delete a specific cell (shi's grade)

hbase(main):015:0> delete 'scores','shi','grade'

0 row(s) in 0.0270 seconds

Run scan again after the delete

hbase(main):016:0> scan 'scores'

ROW COLUMN+CELL

jie column=course:cloud, timestamp=1680787218638, value=92

jie column=course:math, timestamp=1680787127230, value=86

jie column=grade:, timestamp=1680787034649, value=146cloud

shi column=course:cloud, timestamp=1680787431479, value=96

shi column=course:math, timestamp=1680787369769, value=87

2 row(s) in 0.0300 seconds

Add a new column family (age)

hbase(main):017:0> alter 'scores',NAME=>'age'

Updating all regions with the new schema...

0/1 regions updated.

1/1 regions updated.

Done.

0 row(s) in 2.9720 seconds

Describe the table schema

hbase(main):018:0> describe 'scores'

Table scores is ENABLED

scores

COLUMN FAMILIES DESCRIPTION

{NAME => 'age', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'course', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'grade', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

3 row(s) in 0.0240 seconds
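When scripting schema changes, it helps to confirm which column families exist before and after an `alter`. A sketch; `column_families` is a hypothetical filter that extracts the `NAME => '...'` entries from `describe` output like the listing above.

```bash
#!/usr/bin/env bash
# Hypothetical filter: list column family names from `describe` output on stdin.
# Relies on each family block starting with {NAME => 'family', as printed by HBase 1.2.
column_families() {
  grep -o "{NAME => '[^']*'" | sed -e "s/^{NAME => '//" -e "s/'\$//"
}
```

Usage: `echo "describe 'scores'" | hbase shell 2>/dev/null | column_families | grep -qx age && echo "family age exists"`.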

Delete a column family (age)

hbase(main):019:0> alter 'scores',NAME=>'age',METHOD=>'delete'

Updating all regions with the new schema...

1/1 regions updated.

Done.

0 row(s) in 2.1690 seconds

Drop the table (a table must be disabled before it can be dropped)

hbase(main):020:0> disable 'scores'

0 row(s) in 2.2660 seconds

hbase(main):021:0> drop 'scores'

0 row(s) in 1.2680 seconds

hbase(main):022:0> list

TABLE

0 row(s) in 0.0070 seconds

=> []

Exit the shell

hbase(main):023:0> quit

[hadoop@master ~]$

Stop all services

Stop HBase on the master node

[hadoop@master ~]$ stop-hbase.sh

stopping hbase...............

[hadoop@master ~]$ jps

1473 NameNode

1827 ResourceManager

1640 SecondaryNameNode

2109 QuorumPeerMain

3311 Jps

[hadoop@slave1 ~]$ jps

1301 DataNode

1369 NodeManager

1933 Jps

1551 QuorumPeerMain

[hadoop@slave2 ~]$ jps

1296 DataNode

1922 Jps

1364 NodeManager

1546 QuorumPeerMain

Stop ZooKeeper on all nodes

[hadoop@master ~]$ zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

[hadoop@master ~]$ jps

1473 NameNode

1827 ResourceManager

1640 SecondaryNameNode

3338 Jps

[hadoop@slave1 ~]$ zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

[hadoop@slave1 ~]$ jps

1301 DataNode

1369 NodeManager

1964 Jps

[hadoop@slave2 ~]$ zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

[hadoop@slave2 ~]$ jps

1296 DataNode

1953 Jps

1364 NodeManager

Stop Hadoop on the master node

[hadoop@master ~]$ stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [master]

master: stopping namenode

192.168.88.20: stopping datanode

192.168.88.30: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

192.168.88.20: stopping nodemanager

192.168.88.30: stopping nodemanager

no proxyserver to stop

[hadoop@master ~]$ jps

3771 Jps

[hadoop@slave1 ~]$ jps

2043 Jps

[hadoop@slave2 ~]$ jps

2031 Jps
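The start and stop sequences mirror each other: Hadoop, then ZooKeeper, then HBase on the way up, and the reverse on the way down, because HBase depends on both HDFS and ZooKeeper while it runs. A sketch of both sequences for the external-ZooKeeper layout, assuming passwordless SSH for the hadoop user; `remote`, `start_cluster` and `stop_cluster` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical start/stop helpers for the external-ZooKeeper layout.
# Assumes passwordless SSH as the hadoop user to every host.

# remote HOST CMD: run CMD as hadoop in a login shell, so /etc/profile.d puts the scripts on PATH
remote() {
  ssh "hadoop@$1" "bash -lc '$2'"
}

# start_cluster MASTER ZK_HOST...: Hadoop first, then ZooKeeper on each host, then HBase
start_cluster() {
  local master=$1 host; shift
  remote "$master" start-all.sh || return 1
  for host in "$@"; do
    remote "$host" 'zkServer.sh start' || return 1
  done
  remote "$master" start-hbase.sh
}

# stop_cluster MASTER ZK_HOST...: reverse order; HBase first, then ZooKeeper, then Hadoop
stop_cluster() {
  local master=$1 host; shift
  remote "$master" stop-hbase.sh || return 1
  for host in "$@"; do
    remote "$host" 'zkServer.sh stop' || return 1
  done
  remote "$master" stop-all.sh
}
```

Usage: `start_cluster master master slave1 slave2` and `stop_cluster master master slave1 slave2`.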


From: https://www.cnblogs.com/skyrainmom/p/17438944.html