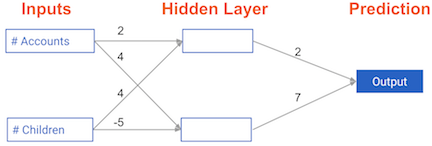

1. Which of the models in the diagrams has greater ability to account for interactions?

ans: Model 2. Each node adds to the model's ability to capture interactions, so the more nodes you have, the more interactions you can capture.

video

(Hidden Layer: node_0, node_1)

2. In this exercise, you'll write code to do forward propagation (prediction) for your first neural network:

```python
import numpy as np

# input_data and weights are pre-loaded by the exercise

# Calculate node 0 value: node_0_value
# Multiply the input data by node_0's weights and sum the products
node_0_value = (input_data * weights['node_0']).sum()

# Calculate node 1 value: node_1_value
# Multiply the input data by node_1's weights and sum the products
node_1_value = (input_data * weights['node_1']).sum()

# Put node values into array: hidden_layer_outputs
hidden_layer_outputs = np.array([node_0_value, node_1_value])

# Calculate output: output
output = (hidden_layer_outputs * weights['output']).sum()

# Print output
print(output)
```
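To run this forward pass outside the exercise environment, the sketch below supplies hypothetical values for `input_data` and `weights` (illustrative only, not necessarily the exercise's pre-loaded data). It also shows that the per-node `.sum()` calls are equivalent to a matrix-vector product, which is how the same computation is usually written once a layer has many nodes.

```python
import numpy as np

# Hypothetical example values (assumed for illustration only)
input_data = np.array([3, 5])
weights = {
    'node_0': np.array([2, 4]),
    'node_1': np.array([4, -5]),
    'output': np.array([2, 7]),
}

# Node-by-node forward propagation, as in the exercise
node_0_value = (input_data * weights['node_0']).sum()      # 3*2 + 5*4 = 26
node_1_value = (input_data * weights['node_1']).sum()      # 3*4 + 5*(-5) = -13
hidden_layer_outputs = np.array([node_0_value, node_1_value])
output = (hidden_layer_outputs * weights['output']).sum()  # 2*26 + 7*(-13) = -39

# Same computation in matrix form: each row holds one hidden node's weights
hidden_weights = np.vstack([weights['node_0'], weights['node_1']])  # shape (2, 2)
hidden_vec = hidden_weights @ input_data                            # [26, -13]
output_vec = weights['output'] @ hidden_vec

print(output, output_vec)  # -39 -39
```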

video

Linear vs. non-linear functions
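One way to see why non-linear activations matter: if every node passes its weighted sum through unchanged (a linear, identity activation), two stacked layers collapse into a single linear map, so the extra layer adds no expressive power. The sketch below checks this with small hypothetical weights; `linear_net` and `relu_net` are illustrative names, not course functions.

```python
import numpy as np

# Hypothetical weights: 2 inputs -> 2 hidden nodes -> 1 output
W_hidden = np.array([[1, -1],
                     [2,  1]])
w_output = np.array([1, 1])
x = np.array([1, 2])

def linear_net(v):
    # Identity activation: hidden values pass through unchanged
    return w_output @ (W_hidden @ v)

def relu_net(v):
    # ReLU activation applied to the hidden layer
    return w_output @ np.maximum(0, W_hidden @ v)

# The linear two-layer net equals ONE layer with weights w_output @ W_hidden
collapsed_weights = w_output @ W_hidden        # [3, 0]
print(linear_net(x), collapsed_weights @ x)    # 3 3

# A linear function satisfies f(-x) == -f(x); the ReLU net does not
print(linear_net(-x), -linear_net(x))          # -3 -3
print(relu_net(-x), -relu_net(x))              # 1 -4
```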

ReLU (Rectified Linear Activation)
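ReLU returns its input when the input is positive and 0 otherwise, i.e. relu(x) = max(0, x). The exercise below uses Python's built-in `max`, which handles one number at a time; as an alternative sketch (not the course's definition), NumPy's `np.maximum` applies the same rule element-wise to a whole layer. `relu_vec` is a hypothetical name.

```python
import numpy as np

def relu_vec(x):
    # Element-wise max(0, x): negative entries become 0, others pass through
    return np.maximum(0, x)

hidden_inputs = np.array([26, -13, 0, 2.5])
print(relu_vec(hidden_inputs).tolist())  # [26.0, 0.0, 0.0, 2.5]
```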

code: apply an activation function (tanh) to the hidden layer values
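A minimal sketch of the tanh variant, assuming the same hypothetical `input_data` and `weights` used for illustration earlier (not the exercise's data): `np.tanh` squashes each hidden node's weighted sum into the range (-1, 1) before the output layer is applied.

```python
import numpy as np

# Hypothetical example values (assumed for illustration only)
input_data = np.array([3, 5])
weights = {
    'node_0': np.array([2, 4]),
    'node_1': np.array([4, -5]),
    'output': np.array([2, 7]),
}

# Weighted sums entering each hidden node: 26 and -13
node_0_input = (input_data * weights['node_0']).sum()
node_1_input = (input_data * weights['node_1']).sum()

# Apply tanh: large positive inputs map close to 1, large negative close to -1
node_0_output = np.tanh(node_0_input)
node_1_output = np.tanh(node_1_input)

hidden_layer_outputs = np.array([node_0_output, node_1_output])
output = (hidden_layer_outputs * weights['output']).sum()
print(output)  # approximately 2*1 + 7*(-1) = -5
```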

3. Fill in the definition of the relu() function. Then apply relu() to node_0_input and node_1_input to calculate node_0_output and node_1_output.

```python
import numpy as np

# input_data and weights are pre-loaded by the exercise

def relu(input):
    # ReLU function: return the input if it is positive, otherwise 0
    '''Define your relu activation function here'''
    # Calculate the value for the output of the relu function: output
    output = max(0, input)

    # Return the value just calculated
    return output

# Calculate node 0 value: node_0_output
node_0_input = (input_data * weights['node_0']).sum()
# Apply ReLU to node_0_input to get node_0_output
node_0_output = relu(node_0_input)

# Calculate node 1 value: node_1_output
node_1_input = (input_data * weights['node_1']).sum()
# Apply ReLU to node_1_input to get node_1_output
node_1_output = relu(node_1_input)

# Put node values into array: hidden_layer_outputs
hidden_layer_outputs = np.array([node_0_output, node_1_output])

# Calculate model output (do not apply relu)
model_output = (hidden_layer_outputs * weights['output']).sum()

# Print model output
print(model_output)
```
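To see what ReLU changes, the sketch below runs the same network with hypothetical values (illustrative only) both with ReLU on the hidden layer and with no activation at all. ReLU clips node_1's negative input to 0, so that node stops contributing to the prediction.

```python
import numpy as np

def relu(input):
    # ReLU: return the input if positive, otherwise 0
    return max(0, input)

# Hypothetical example values (assumed for illustration only)
input_data = np.array([3, 5])
weights = {
    'node_0': np.array([2, 4]),
    'node_1': np.array([4, -5]),
    'output': np.array([2, 7]),
}

node_0_input = (input_data * weights['node_0']).sum()   # 26
node_1_input = (input_data * weights['node_1']).sum()   # -13

# With ReLU: node_1 is clipped from -13 to 0
relu_hidden = np.array([relu(node_0_input), relu(node_1_input)])
relu_output = (relu_hidden * weights['output']).sum()          # 2*26 + 7*0 = 52

# Without an activation (identity): the negative value passes through
identity_hidden = np.array([node_0_input, node_1_input])
identity_output = (identity_hidden * weights['output']).sum()  # 2*26 + 7*(-13) = -39

print(relu_output, identity_output)  # 52 -39
```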

4. You'll now define a function called predict_with_network() that generates predictions for multiple data observations, pre-loaded as input_data. As before, weights are also pre-loaded. In addition, the relu() function you defined in the previous exercise has been pre-loaded.

```python
import numpy as np

# input_data, weights and relu() are pre-loaded by the exercise

# Define predict_with_network()
def predict_with_network(input_data_row, weights):
    # Calculate node 0 value
    node_0_input = (input_data_row * weights['node_0']).sum()
    node_0_output = relu(node_0_input)

    # Calculate node 1 value
    node_1_input = (input_data_row * weights['node_1']).sum()
    node_1_output = relu(node_1_input)

    # Put node values into array: hidden_layer_outputs
    hidden_layer_outputs = np.array([node_0_output, node_1_output])

    # Calculate model output
    # Unlike the previous exercise, ReLU is also applied to the output
    input_to_final_layer = (hidden_layer_outputs * weights['output']).sum()
    model_output = relu(input_to_final_layer)

    # Return model output
    return model_output

# Create empty list to store prediction results
results = []
# Loop over each row of the input data (apply the network to multiple rows)
for input_data_row in input_data:
    # Append prediction to results
    results.append(predict_with_network(input_data_row, weights))

# Print results
print(results)
```
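The loop calls predict_with_network() once per row. As an alternative sketch with hypothetical weights and rows (not the exercise's pre-loaded data), the whole batch can go through the network with one matrix product per layer; `predict_batch` is a hypothetical helper, and the final assertion checks that it agrees with the row-by-row loop.

```python
import numpy as np

def relu(input):
    # Scalar ReLU, as defined in the previous exercise
    return max(0, input)

def predict_with_network(input_data_row, weights):
    # Row-by-row version from the exercise above
    node_0_output = relu((input_data_row * weights['node_0']).sum())
    node_1_output = relu((input_data_row * weights['node_1']).sum())
    hidden_layer_outputs = np.array([node_0_output, node_1_output])
    return relu((hidden_layer_outputs * weights['output']).sum())

def predict_batch(input_data, weights):
    # Hypothetical vectorized helper: one matrix product per layer
    hidden_weights = np.vstack([weights['node_0'], weights['node_1']])  # (2, n_features)
    hidden = np.maximum(0, input_data @ hidden_weights.T)              # (n_rows, 2)
    return np.maximum(0, hidden @ weights['output'])                   # (n_rows,)

# Hypothetical data: 4 rows with 2 features each
input_data = np.array([[3, 5], [1, -1], [0, 0], [8, 4]])
weights = {
    'node_0': np.array([2, 4]),
    'node_1': np.array([4, -5]),
    'output': np.array([2, 7]),
}

results = [predict_with_network(row, weights) for row in input_data]
batch_results = predict_batch(input_data, weights)

print(batch_results.tolist())  # [52, 63, 0, 148]
assert np.array_equal(np.array(results), batch_results)
```

The loop version is easier to read node by node; the matrix version scales better once layers have many nodes.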

video

Multiple hidden layers (the example applies the ReLU function)

Representation learning

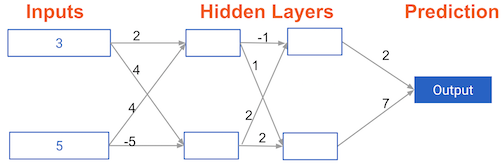

5. You now have a model with 2 hidden layers. The values for an input data point are shown inside the input nodes. The weights are shown on the edges/lines. What prediction would this model make on this data point?

Assume the activation function at each node is the identity function. That is, each node's output is the same as its input. So the value of the bottom node in the first hidden layer is -1, not 0 as it would be if the ReLU activation function were used.

ans: Output = 0

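6. Write forward propagation for a network with 2 hidden layers of two nodes each, applying ReLU at every hidden node. The nodes in the first hidden layer are node_0_0 and node_0_1, with weights pre-loaded as weights['node_0_0'] and weights['node_0_1']; the nodes in the second hidden layer are node_1_0 and node_1_1, with weights pre-loaded as weights['node_1_0'] and weights['node_1_1']. We then create a model output from the hidden nodes using weights pre-loaded as weights['output'].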

```python
import numpy as np

# input_data, weights and relu() are pre-loaded by the exercise

def predict_with_network(input_data):
    # Calculate node 0 in the first hidden layer
    node_0_0_input = (input_data * weights['node_0_0']).sum()
    node_0_0_output = relu(node_0_0_input)

    # Calculate node 1 in the first hidden layer
    node_0_1_input = (input_data * weights['node_0_1']).sum()
    node_0_1_output = relu(node_0_1_input)

    # Put node values into array: hidden_0_outputs
    hidden_0_outputs = np.array([node_0_0_output, node_0_1_output])

    # Calculate node 0 in the second hidden layer
    node_1_0_input = (hidden_0_outputs * weights['node_1_0']).sum()
    node_1_0_output = relu(node_1_0_input)

    # Calculate node 1 in the second hidden layer
    node_1_1_input = (hidden_0_outputs * weights['node_1_1']).sum()
    node_1_1_output = relu(node_1_1_input)

    # Put node values into array: hidden_1_outputs
    hidden_1_outputs = np.array([node_1_0_output, node_1_1_output])

    # Calculate model output: model_output
    # Do not apply ReLU to the output layer
    model_output = (hidden_1_outputs * weights['output']).sum()

    # Return model_output
    return model_output

output = predict_with_network(input_data)
print(output)
```
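Both hidden layers above repeat the same pattern: weighted sum, then ReLU. A hypothetical generalization (not the course's code) stores each hidden layer's weights as a matrix whose row i holds the incoming weights of node i, mirroring how weights['node_1_0'] holds one node's weights, and loops over the layers. Adding a layer then means adding one matrix. The weights below are illustrative only.

```python
import numpy as np

def forward(input_data, hidden_layers, output_weights):
    # Hypothetical helper: propagate through any number of hidden layers.
    # hidden_layers: list of 2-D arrays, row i = incoming weights of node i.
    values = input_data
    for layer_weights in hidden_layers:
        values = np.maximum(0, layer_weights @ values)  # weighted sums, then ReLU
    # Output layer: weighted sum without ReLU, as in the exercise
    return output_weights @ values

# Hypothetical example: 2 inputs -> 2 nodes -> 2 nodes -> 1 output
input_data = np.array([3, 5])
hidden_layers = [
    np.array([[2, 4], [4, -5]]),   # first hidden layer (node_0_0, node_0_1)
    np.array([[-1, 2], [1, 1]]),   # second hidden layer (node_1_0, node_1_1)
]
output_weights = np.array([2, 7])

# Layer 1: [26, -13] -> ReLU -> [26, 0]
# Layer 2: [-26, 26] -> ReLU -> [0, 26]
# Output:  2*0 + 7*26 = 182
print(forward(input_data, hidden_layers, output_weights))  # 182
```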

7. How are the weights that determine the features/interactions in neural networks created?

ans: The model training process sets them to optimize predictive accuracy.

8. Which layers of a model capture more complex or "higher level" interactions?

ans: The last layers capture the most complex interactions.

From: https://www.cnblogs.com/Eternal-Glory/p/16885927.html