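UWA Release | This installment of UWA Release is the fifteenth Unity version usage report. It covers May 2024 through November 2024, and the data comes from projects submitted for performance diagnostics on the UWA website (www.uwa4d.com). We hope it gives Unity developers a useful reference on industry trends.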

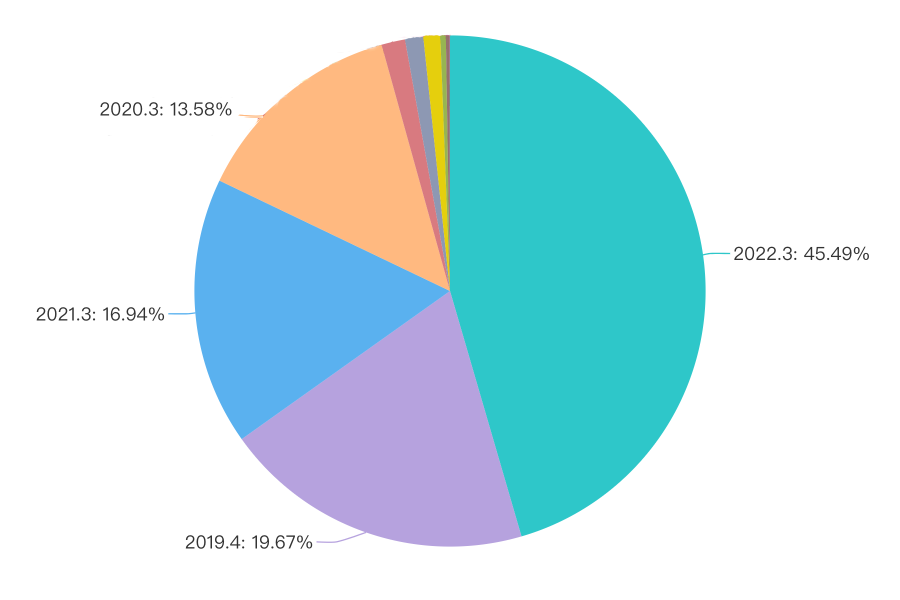

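Version distribution, May 2024 - November 2024

Based on the data for the past six months (Figure 1), 2022.3 has the highest adoption among development teams at 45.49%, followed by 2019.4, 2021.3, and 2020.3. Compared with the statistics for November 2023 through April 2024, usage of 2022.3 has risen markedly.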

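Unity version usage share and trend over the past six months

Looking at the usage trend over the past six months (Figure 2), 2022.3, 2019.4, and 2021.3 are the versions most used by Unity developers.

Below, we take a closer look at these three most-used versions.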

2022.3 version usage distribution

Within the 2022.3 series, the most widely used releases are, in order, 2022.3.12 (26.64%), 2022.3.18 (25.58%), 2022.3.28 (13.34%), 2022.3.20 (3.56%), 2022.3.13 (3.32%), 2022.3.17 (3.25%), 2022.3.2 (2.19%), and 2022.3.36 (2%).

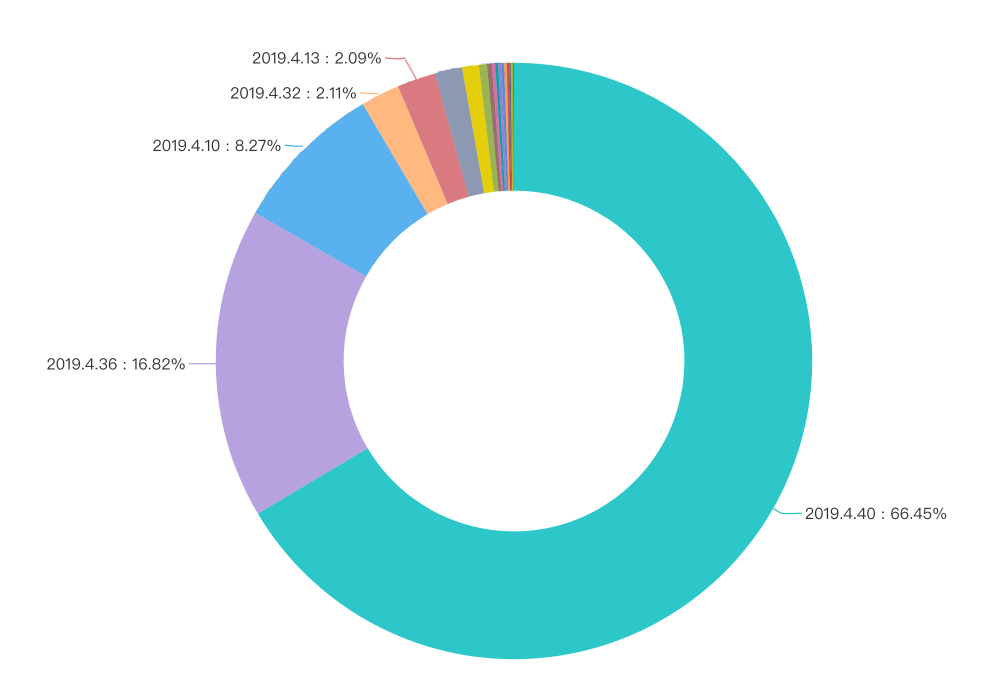

2019.4 version usage distribution

Within the 2019.4 series, the most widely used releases are, in order, 2019.4.40 (66.45%), 2019.4.36 (16.82%), 2019.4.10 (8.27%), 2019.4.32 (2.11%), and 2019.4.13 (2.09%).

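2021.3 version usage distribution

Within the 2021.3 series, the most widely used releases are, in order, 2021.3.9 (11.82%), 2021.3.12 (9.89%), 2021.3.26 (9.84%), 2021.3.17 (8.75%), 2021.3.14 (6.33%), 2021.3.32 (5.63%), and 2021.3.21 (3.31%).

We have also selected a question related to Unity versions from the UWA Q&A site for reference. You are welcome to add your own experience.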

Version Statistics

FAQ

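Q: After upgrading our project to 2022.3.28, we see a memory leak on real devices that did not occur before. Investigating with Memory Profiler shows that, apart from a slight increase in some normal assets and heap memory, the main growth is in Native-UnitySubsystemsObjects-, which leaks noticeably, but we cannot pinpoint the cause. Has anyone run into this problem? How can it be solved?

A: See this official discussion and reproduction test link: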

https://issuetracker.unity3d.com/issues/memory-leak-when-using-serializereference-in-il2cpp-build

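Based on what we saw in our project and on our own experiments, the trigger condition is even simpler than the one described in that post. ScriptableObject plus Addressable is not required: the leak occurs whenever any asset that carries the [SerializeReference] attribute is destroyed. It also looks like this problem very likely affects all projects on Unity 2022 and later, as well as projects on Tuanjie Engine (团结引擎), and it has not been fixed so far.

Teams usually adopt these Unity versions mainly to support HarmonyOS or mini-games, so rolling back to an earlier version is rarely an option. For now, the practical workaround is probably to avoid this attribute as much as possible. It may even be advisable to replace plugins that might involve it, such as DOTween.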
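To act on that advice, a first step is to find out where the attribute is used in your own scripts and in plugin source code. The following is a minimal sketch of such an audit, not something from the original answer: the helper name `find_serialize_reference` and the regular expression are assumptions made for illustration, the matching is a text heuristic rather than a real C# parser, and it cannot see usages inside precompiled DLLs.

```python
import re
from pathlib import Path

# Heuristic pattern (an assumption, not a C# parser): matches an attribute list
# on one line that names SerializeReference, for example [SerializeReference],
# [SerializeField, SerializeReference] or [field: SerializeReference].
SERIALIZE_REFERENCE = re.compile(
    r"\[[^\]\n]*\bSerializeReference(?:Attribute)?\b[^\]\n]*\]"
)


def find_serialize_reference(root):
    """Return (path, line_number, line_text) for each likely usage under root."""
    hits = []
    for path in sorted(Path(root).rglob("*.cs")):
        text = path.read_text(encoding="utf-8", errors="ignore")
        for number, line in enumerate(text.splitlines(), start=1):
            # Crude comment handling: drop everything after the first "//".
            code = line.split("//", 1)[0]
            if SERIALIZE_REFERENCE.search(code):
                hits.append((str(path), number, line.strip()))
    return hits
```

Calling `find_serialize_reference("Assets")` from the project root returns the candidate lines to review by hand. Block comments and string literals are not handled, so treat the result as a list of leads rather than a definitive report.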

If you have experience with the problem above, you are welcome to join the discussion in the community:

https://answer.uwa4d.com/question/670c8262682c7e5cd61bf95c

Visit the UWA Q&A community (answer.uwa4d.com), where we share our latest findings.

From: https://www.cnblogs.com/uwatech/p/18567201