
Introduction to RBD Block Storage

Ceph Block Device

* Thin-provisioned (space is allocated on demand as data is written, so usage grows gradually)

* Images up to 16 exabytes (maximum size of a single image)

* Configurable striping

* In-memory caching

* Snapshots

* Copy-on-write cloning (clones created from snapshots)

* Kernel driver support

* KVM/libvirt support

* Back-end for cloud solutions

* Incremental backup

* Disaster recovery (multisite asynchronous replication)

About Pools

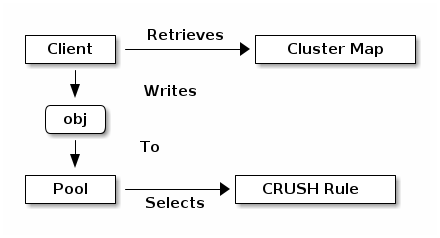

The Ceph storage system supports the notion of ‘Pools’, which are logical partitions for storing objects.

Ceph Clients retrieve a Cluster Map from a Ceph Monitor, and write objects to pools. The pool’s size or number of replicas, the CRUSH rule and the number of placement groups determine how Ceph will place the data.

Pools set at least the following parameters:

- Ownership/Access to Objects

- The Number of Placement Groups, and

- The CRUSH Rule to Use.

See Set Pool Values for details.

Creating Pools

Create a pool

# List the existing pools

$ ceph osd lspools

# Create a pool (running the command without arguments prints its usage)

$ ceph osd pool create

Invalid command: missing required parameter pool(<poolname>)

osd pool create <poolname> <int[0-]> {<int[0-]>} {replicated|erasure} {<erasure_code_profile>} {<rule>} {<int>} {<int>} {<int[0-]>} {<int[0-]>} {<float[0.0-1.0]>} : create pool

Error EINVAL: invalid command

$ ceph osd pool create ceph-demo 64 64

pool 'ceph-demo' created

# List the pools again

$ ceph osd lspools

1 ceph-demo

View pool information

# View the pool's pg_num

$ ceph osd pool get ceph-demo pg_num

pg_num: 64

# View the pool's pgp_num

$ ceph osd pool get ceph-demo pgp_num

pgp_num: 64

# View the pool's size (number of replicas)

$ ceph osd pool get ceph-demo size

size: 3

# View the pool's crush_rule

$ ceph osd pool get ceph-demo crush_rule

crush_rule: replicated_rule

# Show the help output

$ ceph osd pool get -h

General usage:

==============

usage: ceph [-h] [-c CEPHCONF] [-i INPUT_FILE] [-o OUTPUT_FILE]

[--setuser SETUSER] [--setgroup SETGROUP] [--id CLIENT_ID]

[--name CLIENT_NAME] [--cluster CLUSTER]

[--admin-daemon ADMIN_SOCKET] [-s] [-w] [--watch-debug]

[--watch-info] [--watch-sec] [--watch-warn] [--watch-error]

[--watch-channel {cluster,audit,*}] [--version] [--verbose]

[--concise] [-f {json,json-pretty,xml,xml-pretty,plain}]

[--connect-timeout CLUSTER_TIMEOUT] [--block] [--period PERIOD]

Ceph administration tool

optional arguments:

-h, --help request mon help

-c CEPHCONF, --conf CEPHCONF

ceph configuration file

-i INPUT_FILE, --in-file INPUT_FILE

input file, or "-" for stdin

-o OUTPUT_FILE, --out-file OUTPUT_FILE

output file, or "-" for stdout

--setuser SETUSER set user file permission

--setgroup SETGROUP set group file permission

--id CLIENT_ID, --user CLIENT_ID

client id for authentication

--name CLIENT_NAME, -n CLIENT_NAME

client name for authentication

--cluster CLUSTER cluster name

--admin-daemon ADMIN_SOCKET

submit admin-socket commands ("help" for help

-s, --status show cluster status

-w, --watch watch live cluster changes

--watch-debug watch debug events

--watch-info watch info events

--watch-sec watch security events

--watch-warn watch warn events

--watch-error watch error events

--watch-channel {cluster,audit,*}

which log channel to follow when using -w/--watch. One

of ['cluster', 'audit', '*']

--version, -v display version

--verbose make verbose

--concise make less verbose

-f {json,json-pretty,xml,xml-pretty,plain}, --format {json,json-pretty,xml,xml-pretty,plain}

--connect-timeout CLUSTER_TIMEOUT

set a timeout for connecting to the cluster

--block block until completion (scrub and deep-scrub only)

--period PERIOD, -p PERIOD

polling period, default 1.0 second (for polling

commands only)

Local commands:

===============

ping <mon.id> Send simple presence/life test to a mon

<mon.id> may be 'mon.*' for all mons

daemon {type.id|path} <cmd>

Same as --admin-daemon, but auto-find admin socket

daemonperf {type.id | path} [stat-pats] [priority] [<interval>] [<count>]

daemonperf {type.id | path} list|ls [stat-pats] [priority]

Get selected perf stats from daemon/admin socket

Optional shell-glob comma-delim match string stat-pats

Optional selection priority (can abbreviate name):

critical, interesting, useful, noninteresting, debug

List shows a table of all available stats

Run <count> times (default forever),

once per <interval> seconds (default 1)

Monitor commands:

=================

osd pool get <poolname> size|min_size|pg_num|pgp_num|crush_rule|hashpspool| get pool parameter <var>

nodelete|nopgchange|nosizechange|write_fadvise_dontneed|noscrub|nodeep-scrub|hit_

set_type|hit_set_period|hit_set_count|hit_set_fpp|use_gmt_hitset|target_max_

objects|target_max_bytes|cache_target_dirty_ratio|cache_target_dirty_high_ratio|

cache_target_full_ratio|cache_min_flush_age|cache_min_evict_age|erasure_code_

profile|min_read_recency_for_promote|all|min_write_recency_for_promote|fast_read|

hit_set_grade_decay_rate|hit_set_search_last_n|scrub_min_interval|scrub_max_

interval|deep_scrub_interval|recovery_priority|recovery_op_priority|scrub_

priority|compression_mode|compression_algorithm|compression_required_ratio|

compression_max_blob_size|compression_min_blob_size|csum_type|csum_min_block|csum_

max_block|allow_ec_overwrites|fingerprint_algorithm|pg_autoscale_mode|pg_

autoscale_bias|pg_num_min|target_size_bytes|target_size_ratio

osd pool get-quota <poolname> obtain object or byte limits for pool

Modify the pool's default settings

# Change the pool's size (number of replicas)

$ ceph osd pool set ceph-demo size 2

set pool 1 size to 2

$ ceph osd pool get ceph-demo size

size: 2

# Change the pool's pg_num and pgp_num

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 6d)

mgr: node0(active, since 6d), standbys: node1, node2

osd: 3 osds: 3 up (since 6d), 3 in (since 6d)

task status:

data:

pools: 1 pools, 64 pgs # this value changes once pg_num is raised

objects: 0 objects, 0 B

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs: 64 active+clean

$ ceph osd pool set ceph-demo pg_num 128

set pool 1 pg_num to 128

$ ceph osd pool set ceph-demo pgp_num 128

set pool 1 pgp_num to 128

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 6d)

mgr: node0(active, since 6d), standbys: node1, node2

osd: 3 osds: 3 up (since 6d), 3 in (since 6d)

data:

pools: 1 pools, 128 pgs # this value has changed

objects: 0 objects, 0 B

usage: 3.0 GiB used, 147 GiB / 150 GiB avail

pgs: 128 active+clean
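The jump from 64 to 128 PGs matches a commonly cited rule of thumb from older Ceph guidance: take (number of OSDs × 100) / replica count and round up to a power of two. The sketch below only does that arithmetic. `suggest_pg_num` is a hypothetical helper, not a Ceph command, and on releases that expose `pg_autoscale_mode` (it appears in the `osd pool get` parameter list above) the autoscaler may be a better guide than this estimate.

```shell
#!/bin/sh
# Rule-of-thumb PG estimate: (OSDs * 100) / replicas, rounded up to a power of two.
# suggest_pg_num is a hypothetical helper for illustration only.
suggest_pg_num() {
    osds=$1
    replicas=$2
    target=$(( osds * 100 / replicas ))
    pg=1
    # Double until we reach or pass the target.
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggest_pg_num 3 3   # 3 OSDs, size 3: target 100
suggest_pg_num 3 2   # 3 OSDs, size 2: target 150
```

For the three-OSD cluster in these notes, the first call yields 128, which is the value set with `ceph osd pool set ceph-demo pg_num 128`.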

Creating and Mapping RBD Images

Create an RBD image

# List the RBD images in the pool

$ rbd -p ceph-demo ls

# Show help for rbd create

$ rbd help create

usage: rbd create [--pool <pool>] [--namespace <namespace>] [--image <image>]

[--image-format <image-format>] [--new-format]

[--order <order>] [--object-size <object-size>]

[--image-feature <image-feature>] [--image-shared]

[--stripe-unit <stripe-unit>]

[--stripe-count <stripe-count>] [--data-pool <data-pool>]

[--journal-splay-width <journal-splay-width>]

[--journal-object-size <journal-object-size>]

[--journal-pool <journal-pool>]

[--thick-provision] --size <size> [--no-progress]

<image-spec>

Create an empty image.

Positional arguments

<image-spec> image specification

(example: [<pool-name>/[<namespace>/]]<image-name>)

Optional arguments

-p [ --pool ] arg pool name

--namespace arg namespace name

--image arg image name

--image-format arg image format [1 (deprecated) or 2]

--new-format use image format 2

(deprecated)

--order arg object order [12 <= order <= 25]

--object-size arg object size in B/K/M [4K <= object size <= 32M]

--image-feature arg image features

[layering(+), exclusive-lock(+*), object-map(+*),

deep-flatten(+-), journaling(*)]

--image-shared shared image

--stripe-unit arg stripe unit in B/K/M

--stripe-count arg stripe count

--data-pool arg data pool

--journal-splay-width arg number of active journal objects

--journal-object-size arg size of journal objects [4K <= size <= 64M]

--journal-pool arg pool for journal objects

--thick-provision fully allocate storage and zero image

-s [ --size ] arg image size (in M/G/T) [default: M]

--no-progress disable progress output

Image Features:

(*) supports enabling/disabling on existing images

(-) supports disabling-only on existing images

(+) enabled by default for new images if features not specified

# Two ways to create an RBD image

$ rbd create -p ceph-demo --image rdb-demo.img --size 10G

$ rbd create ceph-demo/rdb-demo1.img --size 10G

# List the newly created RBD images

$ rbd -p ceph-demo ls

rdb-demo.img

rdb-demo1.img

View RBD image information

$ rbd info ceph-demo/rdb-demo.img

rbd image 'rdb-demo.img':

size 10 GiB in 2560 objects # the image is 10 GiB, split into 2560 objects

order 22 (4 MiB objects) # each object is 4 MiB

snapshot_count: 0

id: 11a95e44ddd2 # id

block_name_prefix: rbd_data.11a95e44ddd2 # prefix of the names of the image's data objects

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten # advanced features; the kernel client may not support all of them, which can make mapping fail, so keep only layering and disable the rest

op_features:

flags:

create_timestamp: Wed Oct 19 19:36:17 2022

access_timestamp: Wed Oct 19 19:36:17 2022

modify_timestamp: Wed Oct 19 19:36:17 2022
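The `size` and `order` fields are related by simple arithmetic: an order of 22 means each object is 2^22 bytes (4 MiB), so a 10 GiB image spans 10 GiB / 4 MiB objects. A minimal sketch of that calculation follows; `rbd_object_count` is a hypothetical helper name, not part of the `rbd` CLI.

```shell
#!/bin/sh
# Number of objects backing an image of a given size (in GiB) and object order.
# rbd_object_count is a hypothetical helper for illustration only.
rbd_object_count() {
    size_gib=$1
    order=$2
    size_bytes=$(( size_gib * 1024 * 1024 * 1024 ))
    object_bytes=$(( 1 << order ))          # order 22 -> 4194304 bytes
    # Round up so a partial trailing object is still counted.
    echo $(( (size_bytes + object_bytes - 1) / object_bytes ))
}

rbd_object_count 10 22   # the 10 GiB image shown above
rbd_object_count 20 22   # the same image at 20 GiB
```

Because the image is thin-provisioned, this is the maximum number of objects; only objects that have been written actually exist in the pool.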

Delete an RBD image

$ rbd -p ceph-demo ls

rdb-demo.img

rdb-demo1.img

$ rbd rm -p ceph-demo --image rdb-demo1.img

Removing image: 100% complete...done.

$ rbd -p ceph-demo ls

rdb-demo.img

Map and mount an RBD image

- Mapping the image directly fails because the kernel does not support some of its features

$ rbd map ceph-demo/rdb-demo.img

rbd: sysfs write failed

RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable ceph-demo/rdb-demo.img object-map fast-diff deep-flatten".

In some cases useful info is found in syslog - try "dmesg | tail".

rbd: map failed: (6) No such device or address

$ rbd info ceph-demo/rdb-demo.img

rbd image 'rdb-demo.img':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11a95e44ddd2

block_name_prefix: rbd_data.11a95e44ddd2

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Wed Oct 19 19:36:17 2022

access_timestamp: Wed Oct 19 19:36:17 2022

modify_timestamp: Wed Oct 19 19:36:17 2022

- Disable the unsupported features

# Find the feature subcommands in the help output

$ rbd -h | grep fea

feature disable Disable the specified image feature.

feature enable Enable the specified image feature.

# Disable the features

$ rbd feature disable ceph-demo/rdb-demo.img deep-flatten

$ rbd feature disable ceph-demo/rdb-demo.img fast-diff

$ rbd feature disable ceph-demo/rdb-demo.img object-map

rbd: failed to update image features: 2022-10-19 19:49:35.576 7f6e43cf9c80 -1 librbd::Operations: one or more requested features are already disabled

(22) Invalid argument

$ rbd feature disable ceph-demo/rdb-demo.img exclusive-lock

# View the image information after disabling the features

$ rbd info ceph-demo/rdb-demo.img

rbd image 'rdb-demo.img':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11a95e44ddd2

block_name_prefix: rbd_data.11a95e44ddd2

format: 2

features: layering

op_features:

flags:

create_timestamp: Wed Oct 19 19:36:17 2022

access_timestamp: Wed Oct 19 19:36:17 2022

modify_timestamp: Wed Oct 19 19:36:17 2022
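The list of features to disable can be derived from the `features:` line of `rbd info` instead of being typed by hand. The sketch below is plain string handling and does not call `rbd`; `features_to_disable` is a hypothetical helper. It assumes `rbd info` lists prerequisite features before the features that depend on them (as in the output above, where exclusive-lock precedes object-map and fast-diff), so it reverses the order to put dependents first.

```shell
#!/bin/sh
# Print every feature except the one to keep, in reverse listing order.
# features_to_disable is a hypothetical helper for illustration only.
#   $1: feature list as printed by `rbd info`, comma separated
#   $2: the single feature to keep
features_to_disable() {
    result=""
    for f in $(echo "$1" | tr ',' ' '); do
        [ "$f" = "$2" ] && continue
        # Prepend, so later (dependent) features come out first.
        result="$f${result:+ $result}"
    done
    echo "$result"
}

features_to_disable "layering, exclusive-lock, object-map, fast-diff, deep-flatten" layering
```

The printed names could then be passed to `rbd feature disable ceph-demo/rdb-demo.img`, which is the same order used in the commands above.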

- Map the RBD image

# Map the RBD image to a block device

$ rbd map ceph-demo/rdb-demo.img

/dev/rbd0

# List the mapped block devices

$ rbd device list

id pool namespace image snap device

0 ceph-demo rdb-demo.img - /dev/rbd0

# Format the block device

$ mkfs.ext4 /dev/rbd0

mke2fs 1.42.9 (28-Dec-2013)

Discarding device blocks: done

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=1024 blocks, Stripe width=1024 blocks

655360 inodes, 2621440 blocks

131072 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2151677952

80 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

# Mount the block device

$ mount /dev/rbd0 /mnt/

# Check the mount point and the df output

$ ls /mnt/

lost+found

$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 898M 0 898M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 18M 893M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 2.4G 35G 7% /

/dev/sda1 1014M 151M 864M 15% /boot

tmpfs 910M 52K 910M 1% /var/lib/ceph/osd/ceph-0

tmpfs 182M 0 182M 0% /run/user/0

/dev/rbd0 9.8G 37M 9.2G 1% /mnt

# Write a test file so that the data can be verified after the online resize later

[root@node0 mnt]# !echo

echo test > test

[root@node0 mnt]# ls

lost+found test

Expanding RBD Block Storage

# Check the image size; it is currently 10 GiB

$ rbd info ceph-demo/rdb-demo.img

rbd image 'rdb-demo.img':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11a95e44ddd2

block_name_prefix: rbd_data.11a95e44ddd2

format: 2

features: layering

op_features:

flags:

create_timestamp: Wed Oct 19 19:36:17 2022

access_timestamp: Wed Oct 19 19:36:17 2022

modify_timestamp: Wed Oct 19 19:36:17 2022

# Show help for rbd resize

$ rbd help resize

usage: rbd resize [--pool <pool>] [--namespace <namespace>]

[--image <image>] --size <size> [--allow-shrink]

[--no-progress]

<image-spec>

Resize (expand or shrink) image.

Positional arguments

<image-spec> image specification

(example: [<pool-name>/[<namespace>/]]<image-name>)

Optional arguments

-p [ --pool ] arg pool name

--namespace arg namespace name

--image arg image name

-s [ --size ] arg image size (in M/G/T) [default: M]

--allow-shrink permit shrinking

--no-progress disable progress output

# Expand the image

$ rbd resize ceph-demo/rdb-demo.img --size 20G

Resizing image: 100% complete...done.

# Check the image again to confirm that it has grown to 20 GiB

$ rbd info ceph-demo/rdb-demo.img

rbd image 'rdb-demo.img':

size 20 GiB in 5120 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11a95e44ddd2

block_name_prefix: rbd_data.11a95e44ddd2

format: 2

features: layering

op_features:

flags:

create_timestamp: Wed Oct 19 19:36:17 2022

access_timestamp: Wed Oct 19 19:36:17 2022

modify_timestamp: Wed Oct 19 19:36:17 2022

# Check whether the filesystem has grown (not yet: /dev/rbd0 still shows 9.8G)

$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 898M 0 898M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 18M 893M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 2.4G 35G 7% /

/dev/sda1 1014M 151M 864M 15% /boot

tmpfs 910M 52K 910M 1% /var/lib/ceph/osd/ceph-0

tmpfs 182M 0 182M 0% /run/user/0

/dev/rbd0 9.8G 37M 9.2G 1% /mnt

# Grow the filesystem

$ resize2fs /dev/rbd0

resize2fs 1.42.9 (28-Dec-2013)

Filesystem at /dev/rbd0 is mounted on /mnt; on-line resizing required

old_desc_blocks = 2, new_desc_blocks = 3

The filesystem on /dev/rbd0 is now 5242880 blocks long.

# Check whether the filesystem has grown

$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 898M 0 898M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 18M 893M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 2.4G 35G 7% /

/dev/sda1 1014M 151M 864M 15% /boot

tmpfs 910M 52K 910M 1% /var/lib/ceph/osd/ceph-0

tmpfs 182M 0 182M 0% /run/user/0

/dev/rbd0 20G 44M 19G 1% /mnt
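The block counts printed by `mkfs.ext4` and `resize2fs` can be checked against the image size: with the 4096-byte block size reported by `mkfs.ext4`, 2621440 blocks is 10 GiB and 5242880 blocks is 20 GiB. A small sketch of that check, where `blocks_to_gib` is a hypothetical helper name:

```shell
#!/bin/sh
# Convert a filesystem block count and block size (bytes) to whole GiB.
# blocks_to_gib is a hypothetical helper for illustration only.
blocks_to_gib() {
    blocks=$1
    block_size=$2
    echo $(( blocks * block_size / 1024 / 1024 / 1024 ))
}

blocks_to_gib 2621440 4096   # block count reported by mkfs.ext4
blocks_to_gib 5242880 4096   # block count reported by resize2fs
```

`df -h` reports slightly less (9.8G, then 20G with 19G available) because of filesystem metadata and the reserved blocks.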

# Verify that the original file data is intact after the resize

$ cd /mnt/

$ ls

lost+found test

$ cat test

test

Ceph Data Write Path

# View the RBD image information

$ rbd info ceph-demo/rdb-demo.img

rbd image 'rdb-demo.img':

size 20 GiB in 5120 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11a95e44ddd2

block_name_prefix: rbd_data.11a95e44ddd2

format: 2

features: layering

op_features:

flags:

create_timestamp: Wed Oct 19 19:36:17 2022

access_timestamp: Wed Oct 19 19:36:17 2022

modify_timestamp: Wed Oct 19 19:36:17 2022

# List the objects that belong to the RBD image

$ rados -p ceph-demo ls | grep rbd_data.11a95e44ddd2

rbd_data.11a95e44ddd2.0000000000000e03

rbd_data.11a95e44ddd2.0000000000000800

rbd_data.11a95e44ddd2.0000000000000427

rbd_data.11a95e44ddd2.0000000000000e00

rbd_data.11a95e44ddd2.0000000000000434

rbd_data.11a95e44ddd2.0000000000000a20

rbd_data.11a95e44ddd2.0000000000000021

......

$ rados -p ceph-demo stat rbd_data.11a95e44ddd2.0000000000000e03

ceph-demo/rbd_data.11a95e44ddd2.0000000000000e03 mtime 2022-10-19 20:03:39.000000, size 4194304
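The 16-digit suffix of each data object name is the object's index within the image, written in hexadecimal. Multiplying the index by the object size (2^order bytes) gives the offset in the image that the object covers. The sketch below does this conversion; `rbd_object_offset_mib` is a hypothetical helper, not an `rbd` or `rados` subcommand.

```shell
#!/bin/sh
# Offset (in MiB) within the image at which a given data object starts.
# rbd_object_offset_mib is a hypothetical helper for illustration only.
#   $1: object name, e.g. rbd_data.<id>.<16 hex digits>
#   $2: object order from `rbd info` (22 means 4 MiB objects)
rbd_object_offset_mib() {
    name=$1
    order=$2
    suffix=${name##*.}              # text after the last dot
    index=$(( 0x$suffix ))          # hexadecimal object index
    echo $(( (index << order) / 1024 / 1024 ))
}

rbd_object_offset_mib rbd_data.11a95e44ddd2.0000000000000e03 22
rbd_object_offset_mib rbd_data.11a95e44ddd2.0000000000000021 22
```

Object `...0e03` is index 3587, so it starts 14348 MiB into the image. That offset lies beyond the original 10 GiB, which is consistent with the object having been written after the resize.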

# Show the PG and the OSDs where the object is stored

$ ceph osd map ceph-demo rbd_data.11a95e44ddd2.0000000000000e03

osdmap e27 pool 'ceph-demo' (1) object 'rbd_data.11a95e44ddd2.0000000000000e03' -> pg 1.aefd8300 (1.0) -> up ([1,2], p1) acting ([1,2], p1)

$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.14639 root default

-3 0.04880 host node0

0 hdd 0.04880 osd.0 up 1.00000 1.00000

-5 0.04880 host node1

1 hdd 0.04880 osd.1 up 1.00000 1.00000

-7 0.04880 host node2

2 hdd 0.04880 osd.2 up 1.00000 1.00000
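In the `ceph osd map` output, `pg 1.aefd8300 (1.0)` shows the hash of the object name followed by the placement group it falls into: pool 1, PG 0. When pg_num is a power of two, as with the 128 PGs here, the PG number is the hash masked with pg_num - 1. The sketch below reproduces only that special case; `pg_for_hash` is a hypothetical helper, and Ceph's own mapping also handles pg_num values that are not powers of two, which this does not attempt. CRUSH then maps the PG to the OSD set `[1,2]`, with osd.1 as primary, which corresponds to node1 and node2 in the tree above.

```shell
#!/bin/sh
# PG number (printed in hex, as Ceph prints it) for an object-name hash.
# Valid only when pg_num is a power of two.
# pg_for_hash is a hypothetical helper for illustration only.
#   $1: hash in hex without the pool prefix, e.g. aefd8300
#   $2: pg_num of the pool
pg_for_hash() {
    hash=$(( 0x$1 ))
    pg_num=$2
    printf '%x\n' $(( hash & (pg_num - 1) ))
}

pg_for_hash aefd8300 128   # the object mapped above
```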

Troubleshooting Ceph RBD Warnings

# Check the Ceph cluster status

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_WARN

application not enabled on 1 pool(s)

services:

mon: 3 daemons, quorum node0,node1,node2 (age 6d)

mgr: node0(active, since 6d), standbys: node1, node2

osd: 3 osds: 3 up (since 6d), 3 in (since 6d)

data:

pools: 1 pools, 128 pgs

objects: 103 objects, 305 MiB

usage: 3.6 GiB used, 146 GiB / 150 GiB avail

pgs: 128 active+clean

# Get the cluster health details

$ ceph health detail

HEALTH_WARN application not enabled on 1 pool(s)

POOL_APP_NOT_ENABLED application not enabled on 1 pool(s)

application not enabled on pool 'ceph-demo'

use 'ceph osd pool application enable <pool-name> <app-name>', where <app-name> is 'cephfs', 'rbd', 'rgw', or freeform for custom applications.

# View the application currently set on the pool

$ ceph osd pool application get ceph-demo

{}

# Set the application of ceph-demo to rbd; tagging the pool with its type makes it easier to manage

$ ceph osd pool application enable ceph-demo rbd

enabled application 'rbd' on pool 'ceph-demo'

$ ceph osd pool application get ceph-demo

{

"rbd": {}

}

# Check the Ceph cluster status again

$ ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 6d)

mgr: node0(active, since 6d), standbys: node1, node2

osd: 3 osds: 3 up (since 6d), 3 in (since 6d)

data:

pools: 1 pools, 128 pgs

objects: 103 objects, 305 MiB

usage: 3.6 GiB used, 146 GiB / 150 GiB avail

pgs: 128 active+clean