- Format the HDFS distributed file system

hadoop namenode -format

Start Hadoop

start-all.sh

Stop Hadoop

stop-all.sh

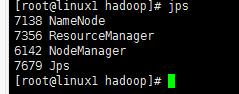

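The jps command lists all of the running Hadoop daemon processes.

Check the cluster with the hdfs dfsadmin -report command; it is healthy only if the DataNode status appears in the output.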
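The jps check can also be scripted. The following is a minimal sketch, not part of Hadoop: the class name `DaemonCheck` is hypothetical, and the expected daemon list is an assumption for a single-node Hadoop 2.x setup started with start-all.sh, so adjust it to match your own cluster.

```java
import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: reports which expected daemons are absent from jps output.
class DaemonCheck {

    // Assumption: daemon set of a single-node Hadoop 2.x installation.
    static final List<String> EXPECTED = Arrays.asList(
            "NameNode", "DataNode", "SecondaryNameNode",
            "ResourceManager", "NodeManager");

    // jps prints one "<pid> <name>" pair per line.
    static List<String> missing(String jpsOutput) {
        List<String> running = new ArrayList<>();
        for (String line : jpsOutput.split("\\R")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length == 2) {
                running.add(parts[1]);
            }
        }
        List<String> missing = new ArrayList<>(EXPECTED);
        missing.removeAll(running);
        return missing;
    }

    public static void main(String[] args) throws Exception {
        // Requires the JDK's jps tool on the PATH.
        Process p = new ProcessBuilder("jps").redirectErrorStream(true).start();
        StringBuilder out = new StringBuilder();
        try (BufferedReader r = new BufferedReader(
                new InputStreamReader(p.getInputStream()))) {
            String line;
            while ((line = r.readLine()) != null) {
                out.append(line).append('\n');
            }
        }
        p.waitFor();
        System.out.println("Missing daemons: " + missing(out.toString()));
    }
}
```

An empty list means every daemon in the assumed set is running. It does not replace the hdfs dfsadmin -report check, which also confirms that the DataNode has registered with the NameNode.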

You can also confirm that the cluster started successfully through the Hadoop NameNode and JobTracker web interfaces, at the following addresses:

http://192.168.96.128:8088/cluster

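NameNode: http://192.168.96.128:50070/dfshealth.html#tab-overview

JobTracker: http://localhost:50030/ (this is the Hadoop 1.x address; on Hadoop 2.x the JobTracker is replaced by the YARN ResourceManager, whose web UI is the port 8088 address above)

At this point Hadoop has only been deployed successfully on Linux.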

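Running HDFS from Eclipse

Many blog posts say you must download the plugin hadoop-eclipse-plugin-2.6.0.jar. I downloaded it and put it in the plugins folder of the Eclipse installation, but the little elephant icon never appeared. It turns out that wordCount runs without the plugin. Whether the plugin works depends on the version, although the versions do not have to match exactly.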

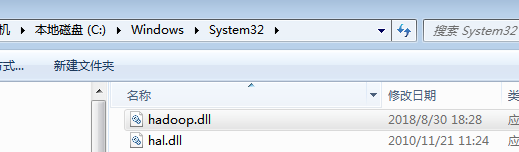

1. Download hadoop.dll and winutils.exe

https://github.com/steveloughran/winutils/blob/master/hadoop-2.8.3/bin/hadoop.dll

Put winutils.exe in hadoop-2.8.4\bin

Put hadoop.dll in C:\Windows\System32

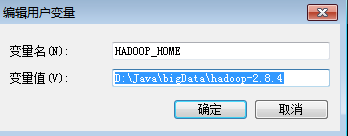
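Before running a job from Eclipse, it can help to confirm that winutils.exe is where Hadoop will look for it. The sketch below is only an illustration: the class `WinutilsCheck` is a hypothetical name, and it assumes that the `hadoop.home.dir` system property or the `HADOOP_HOME` environment variable (neither is mentioned above) points at the hadoop-2.8.4 folder.

```java
import java.io.File;

// Hypothetical helper: checks for <hadoop home>\bin\winutils.exe.
class WinutilsCheck {

    // Builds the path where winutils.exe is expected.
    static File winutilsPath(String hadoopHome) {
        return new File(new File(hadoopHome, "bin"), "winutils.exe");
    }

    // True only if a Hadoop home is given and the file exists there.
    static boolean isInstalled(String hadoopHome) {
        return hadoopHome != null && winutilsPath(hadoopHome).isFile();
    }

    public static void main(String[] args) {
        // Assumption: the system property takes precedence over the environment variable.
        String home = System.getProperty("hadoop.home.dir",
                System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
        } else if (isInstalled(home)) {
            System.out.println("Found " + winutilsPath(home));
        } else {
            System.out.println("Missing " + winutilsPath(home));
        }
    }
}
```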

- Environment variables

Accessing HDFS fails with a permission error:

Permission denied: user=administrator, access=WRITE,

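Configure an environment variable

Set it to the Hadoop user on the server. When login.login runs, it calls Hadoop's HadoopLoginModule, which first reads the HADOOP_USER_NAME system environment variable, then the Java system property of the same name, and, if neither is set, takes the user from NTUserPrincipal.

Once the environment is configured, restart Eclipse.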
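The lookup order described in this section can be sketched in plain Java. This is an illustration only, not Hadoop's actual code: the class `HadoopUserLookup` and its `resolve` method are hypothetical names, and the last fallback is simply the OS user name passed in, standing in for the NTUserPrincipal lookup.

```java
import java.util.Map;
import java.util.Properties;

// Hypothetical helper that mirrors the lookup order described in the text;
// it is not part of the Hadoop API.
class HadoopUserLookup {

    static final String KEY = "HADOOP_USER_NAME";

    // 1) environment variable, 2) Java system property, 3) OS login user.
    static String resolve(Map<String, String> env, Properties props, String osUser) {
        String fromEnv = env.get(KEY);
        if (fromEnv != null && !fromEnv.isEmpty()) {
            return fromEnv;
        }
        String fromProp = props.getProperty(KEY);
        if (fromProp != null && !fromProp.isEmpty()) {
            return fromProp;
        }
        return osUser;
    }

    public static void main(String[] args) {
        String user = resolve(System.getenv(), System.getProperties(),
                System.getProperty("user.name"));
        System.out.println("HDFS requests would be made as: " + user);
    }
}
```

Because the Java system property is the second place consulted, setting HADOOP_USER_NAME with `System.setProperty` at the top of `main`, before any HDFS call, should work as an alternative to the environment variable. The user name to use depends on your server.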

- Maven configuration

```xml
<?xml version="1.0"?>
<project xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://maven.apache.org/POM/4.0.0">
  <modelVersion>4.0.0</modelVersion>
  <groupId>HadoopJar</groupId>
  <artifactId>Hadoop</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <packaging>jar</packaging>
  <name>Hadoop</name>
  <url>http://maven.apache.org</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <hadoop.version>2.8.4</hadoop.version>
  </properties>

  <dependencies>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-common</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-core -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-mapreduce-client-core</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-hdfs</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-common -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-mapreduce-client-common</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-jobclient -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>jdk.tools</groupId>
      <artifactId>jdk.tools</artifactId>
      <version>1.8</version>
      <scope>system</scope>
      <systemPath>D:\Java\jdk1.8.0_101\lib/tools.jar</systemPath>
    </dependency>
  </dependencies>

  <build>
    <finalName>Hadoop</finalName>
    <plugins>
      <plugin>
        <artifactId>maven-compiler-plugin</artifactId>
        <configuration>
          <source>1.8</source>
          <target>1.8</target>
          <encoding>UTF-8</encoding>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-resources-plugin</artifactId>
        <configuration>
          <encoding>UTF-8</encoding>
        </configuration>
      </plugin>
    </plugins>
  </build>
</project>
```

+ wordCount Demo

+ Configuration files

Replace the host name with the IP address.

hadoop.tmp.dir: /usr/local/hadoop-2.8.4/tmp (A base for other temporary directories.)

fs.defaultFS: hdfs://192.168.96.128:9000

io.file.buffer.size: 4096

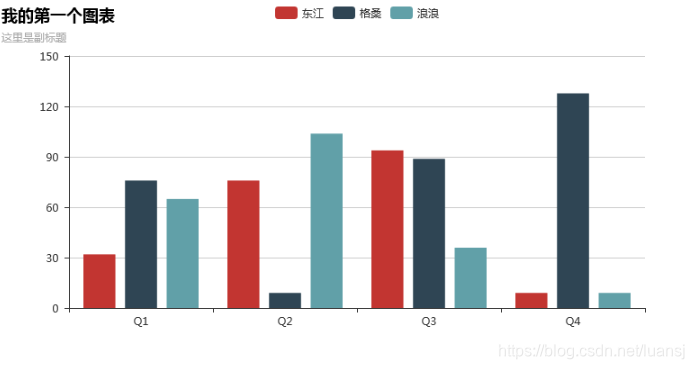
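In a Hadoop configuration file such as core-site.xml, settings like these are written as `<property>` entries with a `<name>` and a `<value>`. The sketch below embeds such a fragment, reconstructed from the three values listed above, and reads it back with the JDK's XML parser to show the structure. The class `CoreSiteReader` is a hypothetical name, and a real Hadoop client would normally load the file through `org.apache.hadoop.conf.Configuration` instead.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: parses <property> entries from a Hadoop-style XML config.
class CoreSiteReader {

    // Fragment reconstructed from the values in the text above.
    static final String CORE_SITE =
            "<configuration>"
          + "<property><name>hadoop.tmp.dir</name>"
          + "<value>/usr/local/hadoop-2.8.4/tmp</value></property>"
          + "<property><name>fs.defaultFS</name>"
          + "<value>hdfs://192.168.96.128:9000</value></property>"
          + "<property><name>io.file.buffer.size</name>"
          + "<value>4096</value></property>"
          + "</configuration>";

    // Returns name -> value pairs in document order.
    static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(
                            xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> out = new LinkedHashMap<>();
            NodeList props = doc.getElementsByTagName("property");
            for (int i = 0; i < props.getLength(); i++) {
                Element p = (Element) props.item(i);
                String name = p.getElementsByTagName("name")
                        .item(0).getTextContent().trim();
                String value = p.getElementsByTagName("value")
                        .item(0).getTextContent().trim();
                out.put(name, value);
            }
            return out;
        } catch (Exception e) {
            throw new IllegalArgumentException("Invalid configuration XML", e);
        }
    }

    public static void main(String[] args) {
        parse(CORE_SITE).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```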

+ Run results

hdfs dfs -mkdir /hi
