Introduction

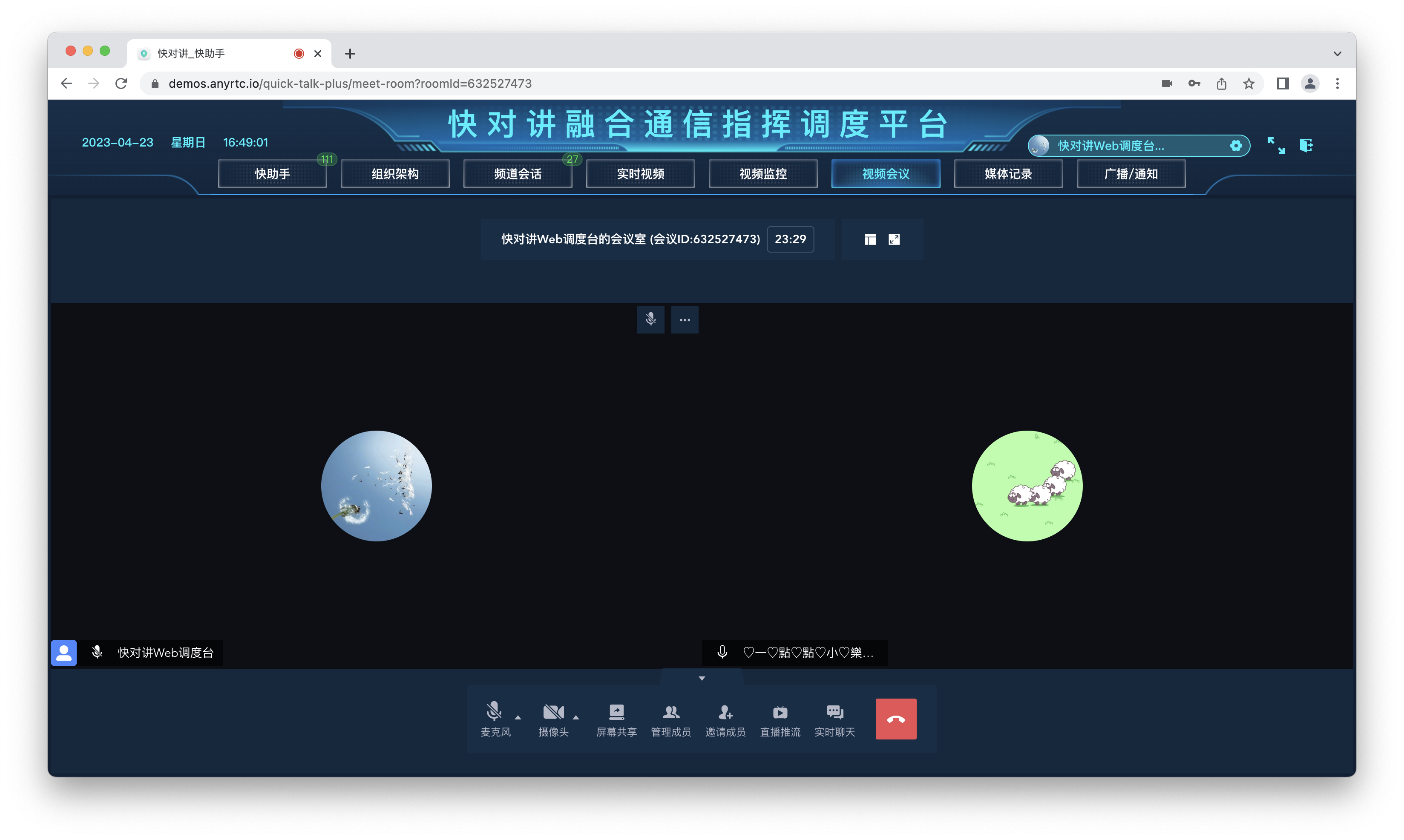

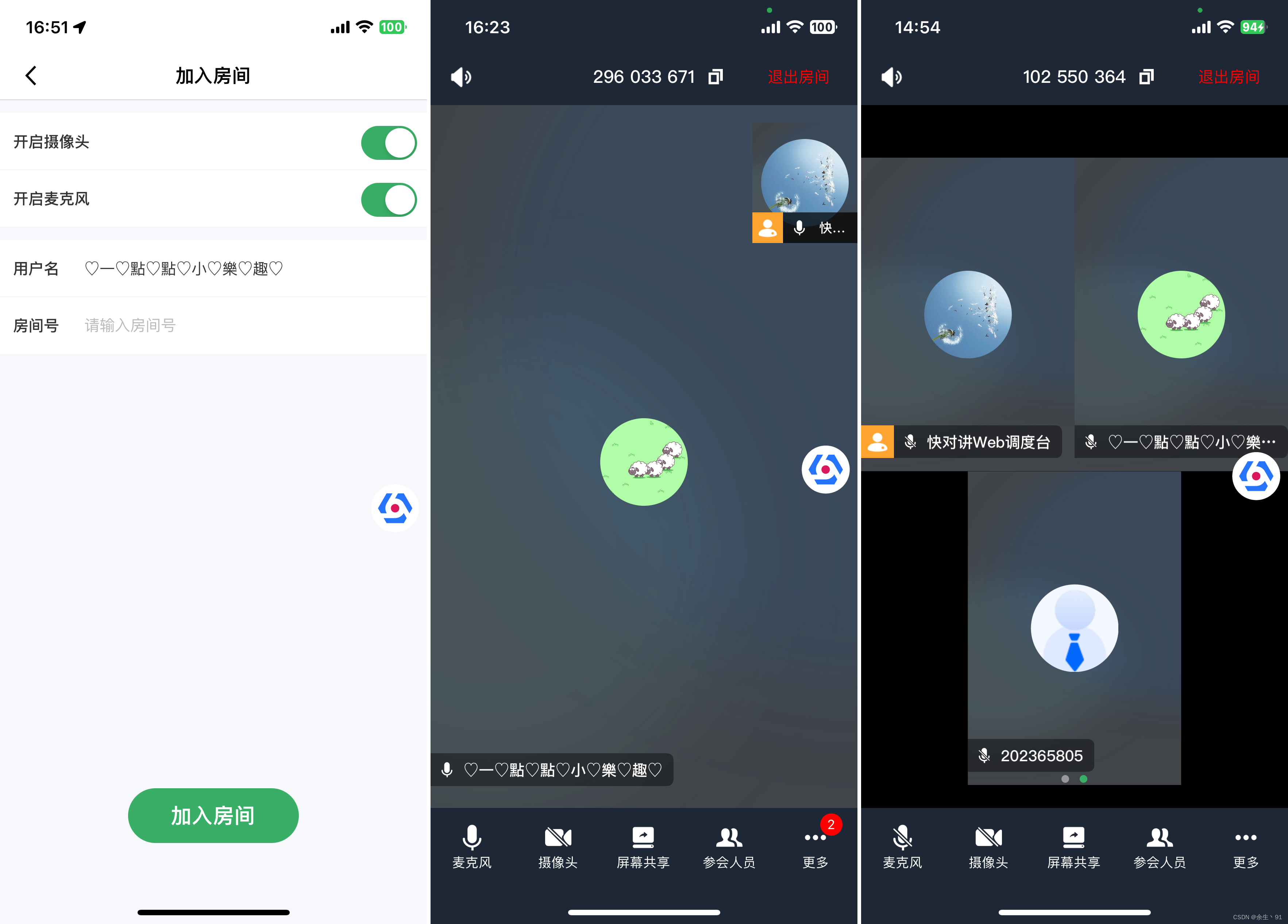

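快对讲 2.0 is a major upgrade that adds a multi-party audio/video conferencing module to make communication more efficient. The meeting module covers meeting control, member management, chat, screen sharing, audio/video settings, and AI noise suppression. It supports iOS, Android, and Web clients, which makes remote collaboration easier.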

Feature Walkthrough

Meeting Features

Basic Meeting

Preview

Partial Implementation

NS_ASSUME_NONNULL_BEGIN

@class ARUIRoomKit;

@protocol ARUIRoomKitDelegate <NSObject>

@optional

/// Callback for the join-meeting event

/// - Parameter code: error code

- (void)onEnterRoom:(ARUIConferenceErrCode)code;

/// Callback for the leave-meeting event

/// - Parameter code: error code

- (void)onExitRoom:(ARUIConferenceErrCode)code;

@end

@interface ARUIRoomKit : NSObject

/// Sets the delegate that receives meeting events

/// - Parameter delegate: the delegate

+ (void)setDelegate:(id<ARUIRoomKitDelegate> _Nullable)delegate;

/// Creates a meeting room

/// - Parameter info: room information

+ (void)createRoom:(ARUIRoomInfo *_Nonnull)info;

/// Joins a meeting room

/// - Parameter info: room information

+ (void)enterRoom:(ARUIRoomInfo *_Nonnull)info;

/// Ends the meeting

+ (void)exitRoom;

@end

@interface ARTCMeeting : NSObject

+ (ARTCMeeting *)shareInstance;

/// Starts the meeting

/// - Parameter roomInfo: meeting information

- (void)startMeeing:(ARUIRoomInfo *)roomInfo

NS_SWIFT_NAME(startMeeing(roomInfo:));

/// Leaves the meeting

- (void)leaveRoom

NS_SWIFT_NAME(leaveRoom());

/// Registers an ARTCMeetingDelegate callback

/// @param delegate the delegate instance

- (void)addDelegate:(id<ARTCMeetingDelegate>)delegate

NS_SWIFT_NAME(addDelegate(delegate:));

/// Starts rendering a remote user's video

/// @param userId remote user ID

/// @param view view to render into

- (void)startRemoteView:(NSString *)userId view:(UIView *)view

NS_SWIFT_NAME(startRemoteView(userId:view:));

/// Stops rendering a remote user's video

/// @param userId remote user ID

- (void)stopRemoteView:(NSString *)userId

NS_SWIFT_NAME(stopRemoteView(userId:));

/// Opens the camera

- (void)openCamera:(BOOL)frontCamera view:(UIView *)view

NS_SWIFT_NAME(openCamera(frontCamera:view:));

/// Switches between the cameras

- (void)switchCamera

NS_SWIFT_NAME(switchCamera());

/// Mutes or unmutes the local video stream

/// - Parameter mute: YES to stop sending, NO to resume

- (void)muteLocalVideo:(BOOL)mute

NS_SWIFT_NAME(muteLocalVideo(mute:));

/// Mutes or unmutes the local audio stream

/// - Parameter mute: YES to stop sending, NO to resume

- (void)muteLocalAudio:(BOOL)mute

NS_SWIFT_NAME(muteLocalAudio(mute:));

/// Toggles speakerphone (hands-free) mode

- (void)setHandsFree:(BOOL)isHandsFree

NS_SWIFT_NAME(setHandsFree(isHandsFree:));

/// Stops or resumes receiving the specified user's video stream

/// - Parameters:

/// - userID: user ID

/// - mute: YES to stop receiving, NO to resume

- (void)muteRemoteVideoStream:(NSString *)userID mute:(BOOL)mute

NS_SWIFT_NAME(muteRemoteVideoStream(userID:mute:));

/// Stops or resumes receiving the specified user's audio stream

/// - Parameters:

/// - userID: user ID

/// - mute: YES to stop receiving, NO to resume

- (void)muteRemoteAudioStream:(NSString *)userID mute:(BOOL)mute;

/// Local preview mirroring

/// - Parameter mirror: YES to enable mirroring, NO to disable it

- (void)setLocalRenderMirrorMode:(BOOL)mirror

NS_SWIFT_NAME(setLocalRenderMirrorMode(mirror:));

/// Speaker volume indication

/// - Parameter enable: YES to enable, NO to disable

- (void)enableAudioVolumeIndication:(BOOL)enable

NS_SWIFT_NAME(enableAudioVolumeIndication(enable:));

/// Adjusts the recording volume

/// - Parameter volume: recording volume, in the range [0, 400]

- (void)adjustRecordingSignalVolume:(NSInteger)volume

NS_SWIFT_NAME(adjustRecordingSignalVolume(volume:));

/// Adjusts the local playback volume of all remote users

/// - Parameter volume: playback volume, in the range [0, 400]

- (void)adjustPlaybackSignalVolume:(NSInteger)volume

NS_SWIFT_NAME(adjustPlaybackSignalVolume(volume:));

/// Applies the video encoder configuration

- (void)updateVideoEncoderParam

NS_SWIFT_NAME(updateVideoEncoderParam());

/// AI noise suppression

/// - Parameter isOpen: YES to enable, NO to disable

- (void)setAudioAiNoise:(BOOL)isOpen

NS_SWIFT_NAME(setAudioAiNoise(isOpen:));

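/// Handles the host's disable-video signaling message

/// - Parameter mute: YES to disable video, NO to restore it

/// - Parameter isAll: YES for all participants, NO for a single participant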

- (void)receiveMuteVideo:(BOOL)mute all:(BOOL)isAll

NS_SWIFT_NAME(receiveMuteVideo(mute:all:));

/// Syncs a change in a user's video-disabled state

/// - Parameters:

/// - uid: user ID

/// - mute: YES to disable, NO to restore

- (void)syncVidState:(NSString *)uid mute:(BOOL)mute

NS_SWIFT_NAME(syncVidState(uid:mute:));

/// Syncs a change in the chat-mute state

/// - Parameter mute: YES to disable, NO to restore

- (void)syncChatState:(BOOL)mute

NS_SWIFT_NAME(syncChatState(mute:));

@end

Meeting Control Logic

Preview

Partial Implementation

@implementation ARTCMeeting

+ (ARTCMeeting *)shareInstance {

static dispatch_once_t onceToken;

static ARTCMeeting * g_sharedInstance = nil;

dispatch_once(&onceToken, ^{

g_sharedInstance = [[ARTCMeeting alloc] init];

});

return g_sharedInstance;

}

- (ARtcEngineKit *)rtcEngine {

if (!_rtcEngine) {

_rtcEngine = [ARtcEngineKit sharedEngineWithAppId:[ARUILogin getSdkAppID] delegate:self];

[_rtcEngine setEnableSpeakerphone: YES];

[_rtcEngine enableDualStreamMode:YES];

}

return _rtcEngine;

}

- (void)addObserver {

/// Observe background/foreground transitions

[NSNotificationCenter.defaultCenter addObserver:self selector:@selector(enterBackground:) name:UIApplicationDidEnterBackgroundNotification object:nil];

[NSNotificationCenter.defaultCenter addObserver:self selector:@selector(becomeActive:) name:UIApplicationWillEnterForegroundNotification object:nil];

}

- (void)removeObserver {

/// Remove the observers

[NSNotificationCenter.defaultCenter removeObserver:self name:UIApplicationDidEnterBackgroundNotification object:nil];

[NSNotificationCenter.defaultCenter removeObserver:self name:UIApplicationWillEnterForegroundNotification object:nil];

}

- (void)enterBackground:(NSNotification *)notification {

/// Entering the background: act only if local video is currently on and the room has not disabled video for everyone

if(!self.roomInfo.vidMuteState && !self.roomInfo.confVidMuteState) {

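/// Remember that the app, not the user, turned the video off so that becomeActive: can restore it

self.autoMuteLocalVideo = YES;

[self muteLocalVideo:YES];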

}

}

- (void)becomeActive:(NSNotification *)notification {

if(self.autoMuteLocalVideo && !self.roomInfo.confVidMuteState) {

/// Entering the foreground: local video was turned off automatically and the room has not disabled video for everyone

self.autoMuteLocalVideo = NO;

[self muteLocalVideo:NO];

}

}
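The background/foreground handling above amounts to a small state machine. The Swift sketch below models it in isolation. `VideoMuteState` and its property names are hypothetical stand-ins for `roomInfo.vidMuteState`, `roomInfo.confVidMuteState`, and `autoMuteLocalVideo`. It assumes `autoMuteLocalVideo` records that the background handler, rather than the user, turned the video off.

```swift
/// Hypothetical model of the mute flags read by the handlers above (not an SDK type).
struct VideoMuteState {
    var localMuted = false   // stands in for roomInfo.vidMuteState
    var roomMuted = false    // stands in for roomInfo.confVidMuteState
    var autoMuted = false    // stands in for autoMuteLocalVideo

    /// Mirrors enterBackground: pause video only if it is on and the host has not disabled it.
    mutating func didEnterBackground() {
        guard !localMuted, !roomMuted else { return }
        localMuted = true
        autoMuted = true
    }

    /// Mirrors becomeActive: resume only video that was paused automatically.
    mutating func willEnterForeground() {
        guard autoMuted else { return }
        autoMuted = false
        // If the host disabled video for everyone in the meantime, stay muted.
        if !roomMuted { localMuted = false }
    }
}
```

In this sketch a user who turned the camera off before backgrounding stays muted after returning, because only the automatic mute is undone. Clearing the flag even when the room is muted is a design choice of the sketch, not something the listing confirms.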

//MARK: - Public

- (void)startMeeing:(ARUIRoomInfo *)roomInfo {

self.roomInfo = roomInfo;

self.curRoomID = roomInfo.confId;

[self enableAudioVolumeIndication:YES];

UIApplication.sharedApplication.idleTimerDisabled = YES;

[self joinRoom];

}

- (void)addDelegate:(id<ARTCMeetingDelegate>)delegate {

[self.listenerArray addObject:delegate];

}

- (void)startRemoteView:(NSString *)userID view:(UIView *)view {

/// Start rendering the remote user's video

if (userID.length != 0) {

ARtcVideoCanvas *canvas = [[ARtcVideoCanvas alloc] init];

canvas.uid = userID;

canvas.view = view;

[self.rtcEngine setupRemoteVideo:canvas];

}

[self.rtcEngine setRemoteRenderMode:userID renderMode:ARVideoRenderModeFit mirrorMode:ARVideoMirrorModeAuto];

}

- (void)stopRemoteView:(NSString *)userID {

/// Stop rendering the remote user's video

ARtcVideoCanvas *canvas = [[ARtcVideoCanvas alloc] init];

canvas.uid = userID;

canvas.view = nil;

[self.rtcEngine setupRemoteVideo:canvas];

}

- (void)openCamera:(BOOL)frontCamera view:(UIView *)view {

/// Open the camera

[self.rtcEngine enableVideo];

ARtcVideoCanvas *canvas = [[ARtcVideoCanvas alloc] init];

canvas.uid = [ARUILogin getUserID];

canvas.view = view;

[self.rtcEngine setupLocalVideo:canvas];

[self.rtcEngine startPreview];

}

- (void)switchCamera {

/// Switch between the cameras

[self.rtcEngine switchCamera];

self.isReal = !self.isReal;

}

- (void)muteLocalVideo:(BOOL)mute {

/// Mute or unmute the local video stream

self.roomInfo.vidMuteState = mute;

[self.rtcEngine muteLocalVideoStream:mute];

}

- (void)muteLocalAudio:(BOOL)mute {

/// Mute or unmute the local audio stream

self.roomInfo.audMuteState = mute;

[self.rtcEngine muteLocalAudioStream:mute];

}

- (void)setHandsFree:(BOOL)isHandsFree {

/// Toggle speakerphone (hands-free) mode

[self.rtcEngine setEnableSpeakerphone:isHandsFree];

self.isHandsFreeOn = isHandsFree;

NSLog(@"setHandsFree == %d", isHandsFree);

}

- (void)muteRemoteVideoStream:(NSString *)userID mute:(BOOL)mute {

/// Stop or resume receiving the specified user's video stream

[self.rtcEngine muteRemoteVideoStream:userID mute:mute];

}

- (void)muteRemoteAudioStream:(NSString *)userID mute:(BOOL)mute {

/// Stop or resume receiving the specified user's audio stream

[self.rtcEngine muteRemoteAudioStream:userID mute:mute];

}

- (void)setLocalRenderMirrorMode:(BOOL)mirror {

/// Local preview mirroring

self.videoConfig.mirror = mirror;

[self.rtcEngine setLocalRenderMode:ARVideoRenderModeHidden mirrorMode:mirror ? ARVideoMirrorModeAuto : ARVideoMirrorModeDisabled];

}

- (void)enableAudioVolumeIndication:(BOOL)enable {

/// Enable or disable speaker volume indication

self.audioConfig.volumePrompt = enable;

[self.rtcEngine enableAudioVolumeIndication:(enable ? 2000 : 0) smooth:3 report_vad: YES];

}

- (void)adjustRecordingSignalVolume:(NSInteger)volume {

/// Adjust the recording volume

self.audioConfig.captureVolume = volume;

[self.rtcEngine adjustRecordingSignalVolume:volume];

}

- (void)adjustPlaybackSignalVolume:(NSInteger)volume {

/// Adjust the local playback volume of all remote users

self.audioConfig.playVolume = volume;

[self.rtcEngine adjustPlaybackSignalVolume:volume];

}

- (void)modifyUserName:(NSString *)uid nickName:(NSString *)name {

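/// Notify every delegate registered through addDelegate:

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onModifyUserName:uid:)]) {

[delegate onModifyUserName:name uid:uid];

}

}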

}

- (void)transferMaster:(NSString *)masterId {

NSString *oldMasterId = self.roomInfo.ownerId;

self.roomInfo.ownerId = masterId;

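/// Notify every delegate registered through addDelegate:

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onTransferMaster:old:)]) {

[delegate onTransferMaster:masterId old:oldMasterId];

}

}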

}

- (void)setAudioAiNoise:(BOOL)isOpen {

/// AI noise suppression

self.audioConfig.noise = isOpen;

NSDictionary *dic = [[NSDictionary alloc] initWithObjectsAndKeys:@"SetAudioAiNoise", @"Cmd",[NSNumber numberWithInt:isOpen ? 1 : 0], @"Enable",nil];

[self.rtcEngine setParameters:[ARUICoreUtil fromDicToJsonStr:dic]];

}
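`setAudioAiNoise:` passes the engine a small JSON command through `setParameters:`. As a rough illustration of that payload, the Swift sketch below builds the same string with Foundation. The key names `Cmd` and `Enable` come from the listing above. The helper `aiNoiseParameters(enabled:)` is hypothetical and only stands in for `ARUICoreUtil fromDicToJsonStr:`, whose implementation is not shown.

```swift
import Foundation

/// Hypothetical helper: serializes the AI noise command used in the listing above.
/// Returns nil if serialization fails.
func aiNoiseParameters(enabled: Bool) -> String? {
    let payload: [String: Any] = [
        "Cmd": "SetAudioAiNoise",
        "Enable": enabled ? 1 : 0   // the listing sends 1 or 0, not a JSON boolean
    ]
    guard let data = try? JSONSerialization.data(withJSONObject: payload, options: [.sortedKeys]) else {
        return nil
    }
    return String(data: data, encoding: .utf8)
}
```

Sorting the keys keeps the output stable across runs, which helps when the string is logged or compared in tests.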

//MARK: - Private

- (void)joinRoom {

NSString *rtcToken = nil;

if (ARCoreConfig.defaultConfig.needToken) {

rtcToken = ARCoreConfig.defaultConfig.rtcConfig.token;

if (rtcToken.length == 0) {

ARLog(@"ARTCMeeting - rtc JoinChannel Token is Null");

//return;

}

}

@weakify(self);

[self.rtcEngine joinChannelByToken:rtcToken channelId:self.roomInfo.confId uid:[ARUILogin getUserID] joinSuccess:^(NSString * _Nonnull channel, NSString * _Nonnull uid, NSInteger elapsed) {

ARLog(@"ARTCMeeting - joinChannel Success %@ %@", channel, uid);

@strongify(self)

if (!self) return;

[self startTimer];

[self addObserver];

[self createMeetingChannel];

}];

}

- (void)startTimer {

/// Periodically upload the user's status

@weakify(self);

self.timerName = [ARTCGCDTimer timerTask:^{

@strongify(self)

if (!self) return;

[self uploadUserStatus];

} start:0 interval:5 repeats:YES async:NO];

}

//MARK: - ARtcEngineDelegate

- (void)rtcEngine:(ARtcEngineKit *)engine didOccurError:(ARErrorCode)errorCode {

/// Callback: an error occurred

}

- (void)rtcEngine:(ARtcEngineKit *)engine didOccurWarning:(ARWarningCode)warningCode {

/// Callback: a warning occurred

if(warningCode == ARWarningCodeLookupChannelTimeout) {

[self dealWithException];

}

}

- (void)rtcEngine:(ARtcEngineKit *)engine connectionChangedToState:(ARConnectionStateType)state reason:(ARConnectionChangedReason)reason {

/// Callback: the network connection state changed

}

- (void)rtcEngine:(ARtcEngineKit *)engine tokenPrivilegeWillExpire:(NSString *)token {

/// Callback: the token is about to expire (30 s)

}

- (void)rtcEngineRequestToken:(ARtcEngineKit *)engine {

/// Callback: the token has expired (24 h)

[self dealWithException];

}

- (void)rtcEngine:(ARtcEngineKit *)engine didJoinedOfUid:(NSString *)uid elapsed:(NSInteger)elapsed {

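/// Callback: a remote user (or host) joined the channel

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onUserEnter:)]) {

[delegate onUserEnter:uid];

}

}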

}

- (void)rtcEngine:(ARtcEngineKit *)engine didOfflineOfUid:(NSString *)uid reason:(ARUserOfflineReason)reason {

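/// Callback: a remote user (communication profile) or host (live-broadcast profile) left the current channel

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onUserLeave:)]) {

[delegate onUserLeave:uid];

}

}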

}

- (void)rtcEngine:(ARtcEngineKit *)engine remoteVideoStateChangedOfUid:(NSString *)uid state:(ARVideoRemoteState)state reason:(ARVideoRemoteStateReason)reason elapsed:(NSInteger)elapsed {

/// Callback: a remote user's video state changed

if (reason == ARVideoRemoteStateReasonRemoteMuted || reason == ARVideoRemoteStateReasonRemoteUnmuted) {

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onUserVideoAvailable:available:)]) {

[delegate onUserVideoAvailable:uid available:(reason == ARVideoRemoteStateReasonRemoteMuted) ? NO : YES];

}

}

}

}

- (void)rtcEngine:(ARtcEngineKit *)engine remoteAudioStateChangedOfUid:(NSString *)uid state:(ARAudioRemoteState)state reason:(ARAudioRemoteStateReason)reason elapsed:(NSInteger)elapsed {

/// Callback: a remote user's audio state changed

if (reason == ARAudioRemoteReasonRemoteMuted || reason == ARAudioRemoteReasonRemoteUnmuted) {

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onUserAudioAvailable:available:)]) {

[delegate onUserAudioAvailable:uid available:(reason == ARAudioRemoteReasonRemoteMuted) ? NO : YES];

}

}

}

}

- (void)rtcEngine:(ARtcEngineKit *)engine networkQuality:(NSString *)uid txQuality:(ARNetworkQuality)txQuality rxQuality:(ARNetworkQuality)rxQuality {

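/// Callback: last-mile uplink/downlink network quality report for each user in the call

/// Collapse the uplink quality into the level passed to delegates (0 when none of the cases below match)

NSUInteger quality = 0;

if (txQuality == ARNetworkQualityPoor || txQuality == ARNetworkQualityBad) {

quality = 1;

} else if (txQuality == ARNetworkQualityExcellent || txQuality == ARNetworkQualityGood) {

quality = 2;

}

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onUserNetworkQualityChanged:quality:)]) {

[delegate onUserNetworkQualityChanged:uid quality:quality];

}

}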

}
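The network-quality callback above reduces the SDK's quality values to three levels before notifying delegates. A minimal Swift sketch of that mapping follows. `LinkQuality` is a hypothetical enum that mirrors only the shape of `ARNetworkQuality`, and the interpretation of the levels as "unknown / weak / good" is an assumption, since the listing only shows the numbers.

```swift
/// Hypothetical mirror of the SDK's quality enum (not the real ARNetworkQuality type).
enum LinkQuality {
    case unknown, excellent, good, poor, bad, veryBad, down
}

/// Collapses the uplink quality into the three levels used in the listing:
/// 2 for excellent/good, 1 for poor/bad, 0 for everything else.
func signalLevel(for txQuality: LinkQuality) -> Int {
    switch txQuality {
    case .excellent, .good:
        return 2
    case .poor, .bad:
        return 1
    default:
        // As in the listing, values outside the two groups fall back to 0.
        return 0
    }
}
```

Only the uplink (`txQuality`) value is considered, matching the listing. A variant that takes the worse of uplink and downlink would be a different design.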

- (void)rtcEngine:(ARtcEngineKit *)engine reportAudioVolumeIndicationOfSpeakers:(NSArray<ARtcAudioVolumeInfo *> *)speakers totalVolume:(NSInteger)totalVolume {

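/// Callback: reports who is speaking in the channel, each speaker's volume, and whether the local user is speaking

for (ARtcAudioVolumeInfo *volumeInfo in speakers) {

/// Ignore speakers at or below the volume threshold

if (volumeInfo.volume <= 20) continue;

for (id<ARTCMeetingDelegate> delegate in self.listenerArray) {

if([delegate respondsToSelector:@selector(onUserVoiceVolumeChanged:volume:)]) {

/// A uid of "0" denotes the local user

[delegate onUserVoiceVolumeChanged:([volumeInfo.uid isEqualToString:@"0"] ? [ARUILogin getUserID] : volumeInfo.uid) volume:volumeInfo.volume];

}

}

}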

}
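The volume-indication callback filters out quiet speakers and rewrites the uid `"0"` to the local user's ID. The Swift sketch below isolates that step. `VolumeInfo` is a hypothetical stand-in for `ARtcAudioVolumeInfo`, and the threshold of 20 is taken from the listing.

```swift
/// Hypothetical stand-in for ARtcAudioVolumeInfo.
struct VolumeInfo {
    let uid: String
    let volume: Int
}

/// Returns the speakers louder than `threshold`, replacing the uid "0"
/// (used by the callback for the local user) with `localUserId`.
func activeSpeakers(_ speakers: [VolumeInfo],
                    localUserId: String,
                    threshold: Int = 20) -> [(userId: String, volume: Int)] {
    return speakers
        .filter { $0.volume > threshold }
        .map { (userId: $0.uid == "0" ? localUserId : $0.uid, volume: $0.volume) }
}
```

Each returned pair corresponds to one `onUserVoiceVolumeChanged:volume:` notification in the Objective-C code.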

Audio and Video Settings

Preview

Partial Implementation

- (void)setupData {

_data = [NSMutableArray array];

ARUIMeetVideoConfiguration *videoConfig = ARUIRTCMeeting.videoConfig;

ARUIRoomVideoModel *videoModel = self.dimensionsTable[videoConfig.dimensionsIndex];

ARCommonTextCellData *resolutionStatus = [ARCommonTextCellData new];

resolutionStatus.key = @"分辨率"; // "Resolution"

resolutionStatus.value = videoModel.resolutionName;

resolutionStatus.showAccessory = YES;

ARCommonTextCellData *rateStatus = [ARCommonTextCellData new];

rateStatus.key = @"帧率"; // "Frame rate"

rateStatus.value = [NSString stringWithFormat:@"%@", self.frameRateTable[videoConfig.frameRateIndex]];

rateStatus.showAccessory = YES;

ARCommonSwitchCellData *mirrorStatus = [ARCommonSwitchCellData new];

mirrorStatus.title = @"本地镜像"; // "Local mirror"

mirrorStatus.cswitchSelector = @selector(onSwitchMirrorStatus:);

if(ARUIRTCMeeting.isReal) {

mirrorStatus.on = NO;

mirrorStatus.enable = NO;

} else {

mirrorStatus.on = videoConfig.mirror;

}

[_data addObject:@[resolutionStatus, rateStatus,mirrorStatus]];

ARUIMeetAudioConfiguration *audioConfig = ARUIRTCMeeting.audioConfig;

ARCommonSwitchCellData *volumeStatus = [ARCommonSwitchCellData new];

volumeStatus.title = @"音量提示"; // "Volume indication"

volumeStatus.cswitchSelector = @selector(onAudioStatus:);

volumeStatus.on = audioConfig.volumePrompt;

ARCommonSwitchCellData *noiseStatus = [ARCommonSwitchCellData new];

noiseStatus.title = @"AI 降噪"; // "AI noise suppression"

noiseStatus.cswitchSelector = @selector(onNoiseStatus:);

noiseStatus.on = audioConfig.noise;

ARCommonSliderCellData *recordStatus = [ARCommonSliderCellData new];

recordStatus.key = @"采集音量"; // "Capture volume"

recordStatus.currentVolume = audioConfig.captureVolume;

recordStatus.csliderSelector = @selector(onRecordStatus:);

recordStatus.maxVolume = 200;

ARCommonSliderCellData *playStatus = [ARCommonSliderCellData new];

playStatus.key = @"播放音量"; // "Playback volume"

playStatus.currentVolume = audioConfig.playVolume;

playStatus.csliderSelector = @selector(onPlayStatus:);

playStatus.maxVolume = 200;

[_data addObject:@[volumeStatus, noiseStatus, recordStatus, playStatus]];

}

- (void)resolutionDidClick {

UIWindow *window = UIApplication.sharedApplication.windows.firstObject;

if(window) {

@weakify(self);

ARUIRoomResolutionAlert *alert = [[ARUIRoomResolutionAlert alloc] initWithStyle:ARUIRoomAlertStyleResolution dataSource:self.dimensionsTable selected:^(NSUInteger index) {

@strongify(self)

if (!self) return;

ARUIRoomVideoModel *videoModel = self.dimensionsTable[index];

[ARTCMeeting.shareInstance videoConfig].dimensionsIndex = index;

[ARTCMeeting.shareInstance videoConfig].videoModel = videoModel;

[self updateVideoEncoderParam];

ARCommonTextCellData *cellData = self.data.firstObject[0];

cellData.value = videoModel.resolutionName;

[self.tableView reloadData];

}];

[window addSubview:alert];

[alert mas_makeConstraints:^(MASConstraintMaker *make) {

make.edges.equalTo(window);

}];

[window layoutIfNeeded];

[alert show];

}

}

- (void)fpsDidClick {

UIWindow *window = UIApplication.sharedApplication.windows.firstObject;

if(window) {

@weakify(self);

ARUIRoomResolutionAlert *alert = [[ARUIRoomResolutionAlert alloc] initWithStyle:ARUIRoomAlertStyleFrameRate dataSource:self.frameRateTable selected:^(NSUInteger index) {

@strongify(self)

if (!self) return;

[ARTCMeeting.shareInstance videoConfig].frameRateIndex = index;

[self updateVideoEncoderParam];

ARCommonTextCellData *cellData = self.data.firstObject[1];

cellData.value = [NSString stringWithFormat:@"%@", self.frameRateTable[index]];

[self.tableView reloadData];

}];

[window addSubview:alert];

[alert mas_makeConstraints:^(MASConstraintMaker *make) {

make.edges.equalTo(window);

}];

[window layoutIfNeeded];

[alert show];

}

}

- (void)updateVideoEncoderParam {

[ARTCMeeting.shareInstance updateVideoEncoderParam];

}

- (void)onSwitchMirrorStatus:(ARCommonSwitchCell *)cell {

/// Local mirror

[ARUIRTCMeeting setLocalRenderMirrorMode:cell.switcher.isOn];

}

- (void)onAudioStatus:(ARCommonSwitchCell *)cell {

/// Volume indication

[ARUIRTCMeeting enableAudioVolumeIndication:cell.switcher.isOn];

}

- (void)onNoiseStatus:(ARCommonSwitchCell *)cell {

/// AI noise suppression

[ARUIRTCMeeting setAudioAiNoise:cell.switcher.isOn];

}

- (void)onRecordStatus:(ARCommonSliderCell *)cell {

/// Capture (recording) volume

int value = cell.slider.value;

[ARUIRTCMeeting adjustRecordingSignalVolume:value];

}

- (void)onPlayStatus:(ARCommonSliderCell *)cell {

/// Playback volume

int value = cell.slider.value;

[ARUIRTCMeeting adjustPlaybackSignalVolume:value];

}
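The settings screen stores only the indices `dimensionsIndex` and `frameRateIndex` and looks the values up in `dimensionsTable` and `frameRateTable`. The body of `updateVideoEncoderParam` is not shown, so the Swift sketch below only illustrates one way to resolve those indices safely. `Resolution`, `EncoderSelection`, and `resolveEncoder` are hypothetical names, and the fallback to the first table entry is an assumption.

```swift
/// Hypothetical resolution entry, loosely modeled on ARUIRoomVideoModel.
struct Resolution: Equatable {
    let name: String
    let width: Int
    let height: Int
}

/// Hypothetical result type: the values an encoder configuration would be built from.
struct EncoderSelection: Equatable {
    let resolution: Resolution
    let frameRate: Int
}

/// Resolves the stored indices against the option tables.
/// Out-of-range indices fall back to the first entry; empty tables yield nil.
func resolveEncoder(dimensionsIndex: Int,
                    frameRateIndex: Int,
                    resolutions: [Resolution],
                    frameRates: [Int]) -> EncoderSelection? {
    guard let firstResolution = resolutions.first, let firstRate = frameRates.first else {
        return nil
    }
    let resolution = resolutions.indices.contains(dimensionsIndex)
        ? resolutions[dimensionsIndex] : firstResolution
    let frameRate = frameRates.indices.contains(frameRateIndex)
        ? frameRates[frameRateIndex] : firstRate
    return EncoderSelection(resolution: resolution, frameRate: frameRate)
}
```

Guarding the lookups matters when the tables change between app versions while a stale index is still persisted.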

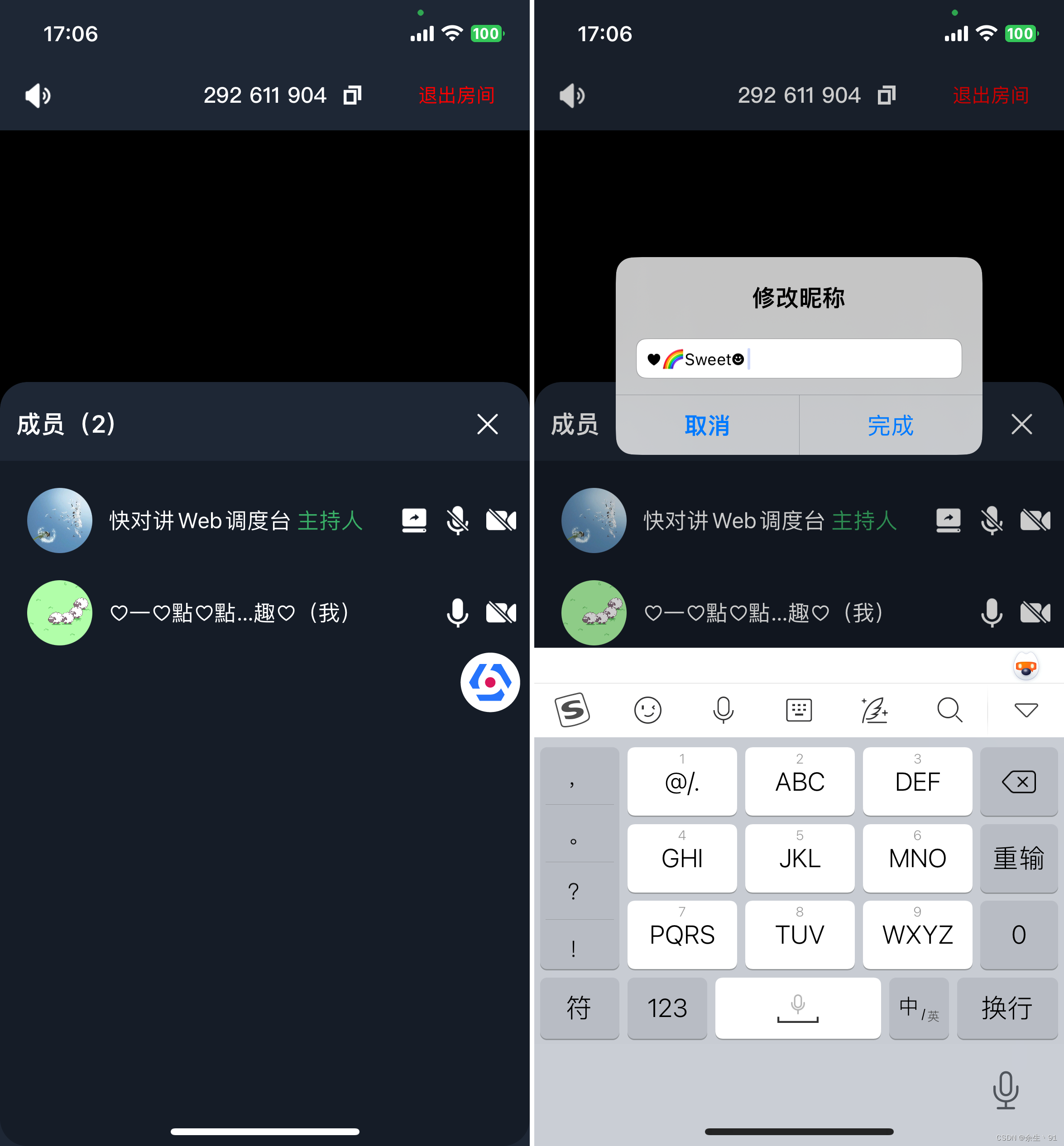

Member Management

Preview

Partial Implementation

//MARK: - ARTCMeetingDelegate

- (void)onUserEnter:(NSString *)uid {

if(![self.attendeeMap.allKeys containsObject:uid]) {

ARUIAttendeeModel * attendeeModel = [[ARUIAttendeeModel alloc] init];

if([uid isEqualToString:ARUIRTCMeeting.roomInfo.ownerId]) {

attendeeModel.isOwner = YES; // room owner

}

attendeeModel.userId = uid;

attendeeModel.userName = uid;

if([attendeeModel.userId isEqualToString:[ARUIRTCMeeting roomInfo].ownerId]) {

[self.attendeeList insertObject:attendeeModel atIndex:0];

} else {

[self.attendeeList addObject:attendeeModel];

}

[self.attendeeMap setValue:attendeeModel forKey:uid];

[self reloadData];

[self updateMemberCount];

}

}

- (void)onUserLeave:(NSString *)uid {

[self.attendeeMap removeObjectForKey:uid];

for (ARUIAttendeeModel *attendeeModel in self.attendeeList) {

if([attendeeModel.userId isEqualToString:uid]) {

[self.attendeeList removeObject:attendeeModel];

break;

}

}

[self reloadData];

[self updateMemberCount];

}

- (void)onUserVideoAvailable:(NSString *)uid available:(BOOL)available {

if([self.attendeeMap.allKeys containsObject:uid]) {

ARUIAttendeeModel *attendModel = [self.attendeeMap objectForKey:uid];

attendModel.hasVideoStream = available;

[self reloadData];

}

}

- (void)onUserAudioAvailable:(NSString *)uid available:(BOOL)available {

if([self.attendeeMap.allKeys containsObject:uid]) {

ARUIAttendeeModel *attendModel = [self.attendeeMap objectForKey:uid];

attendModel.hasAudioStream = available;

[self reloadData];

}

}

- (void)onUserInfoChanged:(NSString *)uid memberInfo:(ARUIMemberInfo *)info {

ARLog(@"ARTCMeeting - onUserInfoChanged %@", uid);

if([self.attendeeMap.allKeys containsObject:uid]) {

ARUIAttendeeModel *attendModel = [self.attendeeMap objectForKey:uid];

attendModel.userName = info.nickName;

attendModel.avatarUrl = info.faceUrl;

[self reloadData];

}

}

- (void)onModifyUserName:(NSString *)name uid:(NSString *)uid {

ARLog(@"ARTCMeeting - onModifyUserName %@ %@", uid, name);

if([self.attendeeMap.allKeys containsObject:uid]) {

ARUIAttendeeModel *attendModel = [self.attendeeMap objectForKey:uid];

attendModel.userName = name;

[self reloadData];

}

}
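The delegate methods above keep two structures in step: an ordered list for display (owner first) and a map for lookup by user ID. The Swift sketch below shows the same bookkeeping in a self-contained form. `Attendee` and `AttendeeStore` are hypothetical types, not part of the SDK or the project.

```swift
/// Hypothetical attendee record, loosely modeled on ARUIAttendeeModel.
struct Attendee {
    let userId: String
    var userName: String
    var isOwner = false
}

/// Keeps attendees in display order (owner first) with dictionary lookup by user ID.
struct AttendeeStore {
    private(set) var ordered: [String] = []      // user IDs in display order
    private var byId: [String: Attendee] = [:]

    var count: Int { return ordered.count }

    /// Mirrors onUserEnter: duplicates are ignored and the owner goes to the front.
    mutating func add(userId: String, ownerId: String) {
        guard byId[userId] == nil else { return }
        let isOwner = (userId == ownerId)
        byId[userId] = Attendee(userId: userId, userName: userId, isOwner: isOwner)
        if isOwner {
            ordered.insert(userId, at: 0)
        } else {
            ordered.append(userId)
        }
    }

    /// Mirrors onUserLeave: removes the user from both structures.
    mutating func remove(userId: String) {
        guard byId.removeValue(forKey: userId) != nil else { return }
        ordered.removeAll { $0 == userId }
    }

    /// Mirrors onModifyUserName: a no-op for unknown users.
    mutating func rename(userId: String, to name: String) {
        byId[userId]?.userName = name
    }

    func attendee(_ userId: String) -> Attendee? {
        return byId[userId]
    }
}
```

Looking users up with `byId[userId]` avoids the linear scan that `allKeys containsObject:` performs in the Objective-C version.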

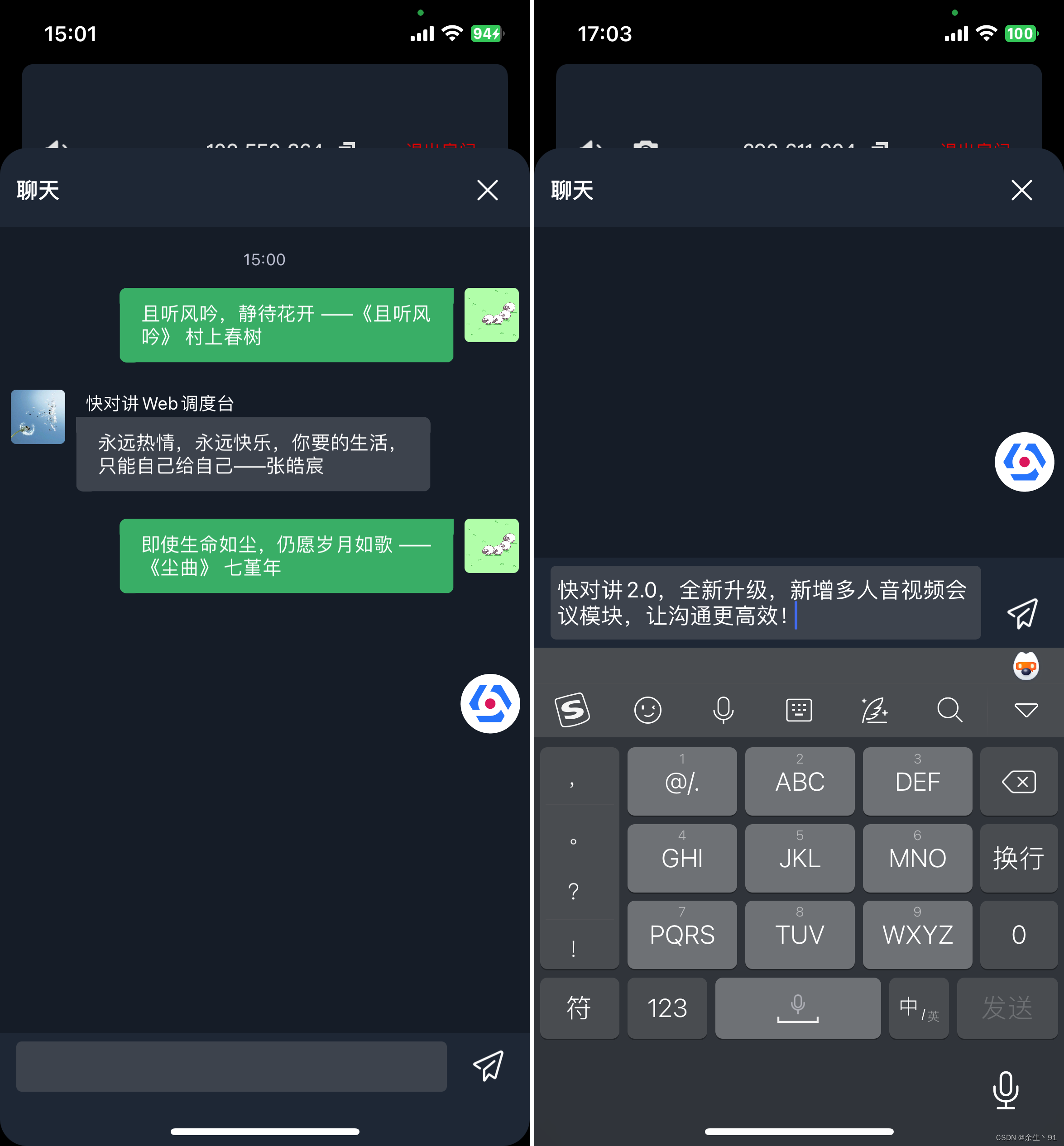

Chat

Preview

Partial Implementation

- (void)createMeetingChannel {

self.rtmChannel = [[ARtmManager.defaultManager getRtmKit] createChannelWithId:self.curRoomID delegate:self];

[self.rtmChannel joinWithCompletion:^(ARtmJoinChannelErrorCode errorCode) {

NSLog(@"ARTCMeeting - Join RTM Channel code = %ld", (long)errorCode);

}];

}

- (void)leaveMeetingChannel {

if(self.rtmChannel) {

[self.rtmChannel leaveWithCompletion:nil];

[ARtmManager.defaultManager releaseChannel:self.curRoomID];

self.rtmChannel = nil;

}

}

- (void)sendUIMsg:(ARUIChatMessageCellData *)cellData success:(void (^)(void))success failed:(void (^)(void))failed {

ARUIChatSystemMessageCellData *dateMsg = [self getSystemMsgFromDate:cellData.barrageModel.sendTime];

if (dateMsg != nil) {

/// Add a system message

self.msgForDate = cellData.barrageModel;

[self.uiMsgs addObject:dateMsg];

if(self.dataSource && [self.dataSource respondsToSelector:@selector(dataProviderDataSourceChange:index:)]) {

[self.dataSource dataProviderDataSourceChange:ARUIChatDataSourceChangeTypeInsert index:self.uiMsgs.count - 1];

}

}

cellData.status = ARUIChatMsgStatusSending;

[self.uiMsgs addObject:cellData];

if(self.dataSource && [self.dataSource respondsToSelector:@selector(dataProviderDataSourceChange:index:)]) {

[self.dataSource dataProviderDataSourceChange:ARUIChatDataSourceChangeTypeInsert index:self.uiMsgs.count - 1];

}

NSDictionary *dic = [cellData.barrageModel mj_keyValues];

ARtmMessage *message = [[ARtmMessage alloc] initWithText:[ARUICoreUtil fromDicToJsonStr:dic]];

ARtmSendMessageOptions *messageOptions = [[ARtmSendMessageOptions alloc] init];

@weakify(self);

[self.rtmChannel sendMessage:message sendMessageOptions:messageOptions completion:^(ARtmSendChannelMessageErrorCode errorCode) {

@strongify(self)

if (!self) return;

if(errorCode == ARtmSendChannelMessageErrorOk) {

if(success) {

success();

}

} else {

if(failed) {

failed();

}

}

}];

}

- (ARUIChatSystemMessageCellData * _Nullable)getSystemMsgFromDate:(NSInteger)time {

// Add a system timestamp message

NSInteger temp = time/1000;

NSInteger lastTime = self.msgForDate.sendTime/1000;

if (self.msgForDate == nil || labs(temp - lastTime) > kMaxDateMessageDelay) {

ARUIChatSystemMessageCellData *cellData = [[ARUIChatSystemMessageCellData alloc] initWithDirection:ARUIChatMsgDirectionOutgoing];

cellData.content = [NSDate timeStringWithTimeInterval:(NSTimeInterval)temp];

return cellData;

}

return nil;

}
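`getSystemMsgFromDate:` decides whether a timestamp row should precede a chat message by comparing send times in whole seconds. The Swift sketch below restates that rule. The value of `kMaxDateMessageDelay` is not shown in the listing, so the default of 300 seconds is an assumption, and `needsDateSeparator` is a hypothetical name.

```swift
/// Decides whether a timestamp separator should precede a message.
/// Send times are in milliseconds, as in the listing; `maxGapSeconds` stands in
/// for kMaxDateMessageDelay (300 is an assumed value, not taken from the source).
func needsDateSeparator(previousSendTimeMs: Int?,
                        sendTimeMs: Int,
                        maxGapSeconds: Int = 300) -> Bool {
    // The first message in a conversation always gets a separator.
    guard let previous = previousSendTimeMs else { return true }
    // Compare in whole seconds, like the Objective-C code (time / 1000).
    return abs(sendTimeMs / 1000 - previous / 1000) > maxGapSeconds
}
```

The comparison is strict, so two messages exactly `maxGapSeconds` apart share one separator.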

Screen Sharing

Preview

Partial Implementation

SampleHandler

class SampleHandler: ARUIBaseSampleHandler {

override func processSampleBuffer(_ sampleBuffer: CMSampleBuffer, with sampleBufferType: RPSampleBufferType) {

switch sampleBufferType {

case RPSampleBufferType.video:

// Handle video sample buffer

guard let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }

let timestamp = CMSampleBufferGetPresentationTimeStamp(sampleBuffer)

let videoFrame = ARVideoFrame()

videoFrame.format = 12

videoFrame.time = timestamp

videoFrame.textureBuf = pixelBuffer

videoFrame.rotation = Int32(getRotateByBuffer(sampleBuffer))

rtcEngine?.pushExternalVideoFrame(videoFrame)

case RPSampleBufferType.audioApp:

// Handle audio sample buffer for app audio

if audioAppState {

rtcEngine?.pushExternalAudioFrameSampleBuffer(sampleBuffer, type: .app)

}

case RPSampleBufferType.audioMic:

// Handle audio sample buffer for mic audio

rtcEngine?.pushExternalAudioFrameSampleBuffer(sampleBuffer, type: .mic)

break

@unknown default:

// Handle other sample buffer types

fatalError("Unknown type of sample buffer")

}

}

}

extension SampleHandler {

func initialization(dic: NSDictionary) {

guard let appId = dic.object(forKey: "appId") as? String, let chanId = dic.object(forKey: "channelId") as? String, let screenUid = dic.object(forKey: "uid") as? String else {

finishBroadcast("parameter error")

return

}

rtcEngine = ARtcEngineKit.sharedEngine(withAppId: appId, delegate: self)

rtcEngine?.setChannelProfile(.liveBroadcasting)

rtcEngine?.setClientRole(.broadcaster)

rtcEngine?.enableVideo()

/// Configure the external video source

rtcEngine?.setExternalVideoSource(true, useTexture: true, pushMode: true)

/// Enable pushing external audio frames

rtcEngine?.enableExternalAudioSource(withSampleRate: 48000, channelsPerFrame: 2)

rtcEngine?.muteAllRemoteAudioStreams(true)

rtcEngine?.muteAllRemoteVideoStreams(true)

rtcEngine?.enableDualStreamMode(true)

debugPrint("joinChannel chanId = \(chanId)")

// The token may be absent when token authentication is off, so avoid a force cast

let token = dic.object(forKey: "token") as? String

rtcEngine?.joinChannel(byToken: token, channelId: chanId, uid: screenUid, joinSuccess: { [weak self] _, _, _ in

guard let self = self else { return }

/// Screen sharing is now in progress

})

}

}
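The `initialization(dic:)` method above pulls its settings out of an untyped dictionary. One way to make that step easier to test is to parse the dictionary into a value type first, as in the Swift sketch below. The key names `appId`, `channelId`, `uid`, and `token` come from the listing. `BroadcastParameters` is a hypothetical type. Treating the token as optional is an assumption based on the Objective-C join path, which passes a possibly-nil token.

```swift
/// Hypothetical value type for the settings handed to the broadcast extension.
struct BroadcastParameters {
    let appId: String
    let channelId: String
    let uid: String
    let token: String?   // assumed optional: absent when token authentication is off

    /// Fails when any required key is missing, not a string, or empty.
    init?(_ dic: [String: Any]) {
        guard let appId = dic["appId"] as? String, !appId.isEmpty,
              let channelId = dic["channelId"] as? String, !channelId.isEmpty,
              let uid = dic["uid"] as? String, !uid.isEmpty else {
            return nil
        }
        self.appId = appId
        self.channelId = channelId
        self.uid = uid
        self.token = dic["token"] as? String
    }
}
```

With this in place, the extension could end the broadcast with a parameter error when the initializer returns nil and pass the parsed fields to the engine otherwise.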

Base Libraries for the iOS Meeting Module

platform :ios, '11.0'

use_frameworks!

target 'ARUITalking' do

# anyRTC audio/video SDK

pod 'ARtcKit_iOS', '~> 4.3.0.4'

# anyRTC real-time messaging SDK

pod 'ARtmKit_iOS', '~> 1.1.0.1'

end

anyRTC provides a quick SDK integration path, and you can tailor the integration to your business requirements.