Yolov8 源码解析(十三)

comments: true

description: Dive into our guide on YOLOv8's integration with Kaggle. Find out what Kaggle is, its key features, and how to train a YOLOv8 model using the integration.

keywords: What is Kaggle, What is Kaggle Used For, YOLOv8, Kaggle Machine Learning, Model Training, GPU, TPU, cloud computing

A Guide on Using Kaggle to Train Your YOLOv8 Models

If you are learning about AI and working on small projects, you might not have access to powerful computing resources yet, and high-end hardware can be pretty expensive. Fortunately, Kaggle, a platform owned by Google, offers a great solution. Kaggle provides a free, cloud-based environment where you can access GPU resources, handle large datasets, and collaborate with a diverse community of data scientists and machine learning enthusiasts.

Kaggle is a great choice for training and experimenting with Ultralytics YOLOv8 models. Kaggle Notebooks make using popular machine-learning libraries and frameworks in your projects easy. Let's explore Kaggle's main features and learn how you can train YOLOv8 models on this platform!

What is Kaggle?

Kaggle is a platform that brings together data scientists from around the world to collaborate, learn, and compete in solving real-world data science problems. Launched in 2010 by Anthony Goldbloom and Jeremy Howard and acquired by Google in 2017. Kaggle enables users to connect, discover and share datasets, use GPU-powered notebooks, and participate in data science competitions. The platform is designed to help both seasoned professionals and eager learners achieve their goals by offering robust tools and resources.

With more than 10 million users as of 2022, Kaggle provides a rich environment for developing and experimenting with machine learning models. You don't need to worry about your local machine's specs or setup; you can dive right in with just a Kaggle account and a web browser.

Training YOLOv8 Using Kaggle

Training YOLOv8 models on Kaggle is simple and efficient, thanks to the platform's access to powerful GPUs.

To get started, access the Kaggle YOLOv8 Notebook. Kaggle's environment comes with pre-installed libraries like TensorFlow and PyTorch, making the setup process hassle-free.

Once you sign in to your Kaggle account, you can click on the option to copy and edit the code, select a GPU under the accelerator settings, and run the notebook's cells to begin training your model. For a detailed understanding of the model training process and best practices, refer to our YOLOv8 Model Training guide.

On the official YOLOv8 Kaggle notebook page, if you click on the three dots in the upper right-hand corner, you'll notice more options will pop up.

These options include:

- View Versions: Browse through different versions of the notebook to see changes over time and revert to previous versions if needed.

- Copy API Command: Get an API command to programmatically interact with the notebook, which is useful for automation and integration into workflows.

- Open in Google Notebooks: Open the notebook in Google's hosted notebook environment.

- Open in Colab: Launch the notebook in Google Colab for further editing and execution.

- Follow Comments: Subscribe to the comments section to get updates and engage with the community.

- Download Code: Download the entire notebook as a Jupyter (.ipynb) file for offline use or version control in your local environment.

- Add to Collection: Save the notebook to a collection within your Kaggle account for easy access and organization.

- Bookmark: Bookmark the notebook for quick access in the future.

- Embed Notebook: Get an embed link to include the notebook in blogs, websites, or documentation.

Common Issues While Working with Kaggle

When working with Kaggle, you might come across some common issues. Here are some points to help you navigate the platform smoothly:

- Access to GPUs: In your Kaggle notebooks, you can activate a GPU at any time, with usage allowed for up to 30 hours per week. Kaggle provides the Nvidia Tesla P100 GPU with 16GB of memory and also offers the option of using a Nvidia GPU T4 x2. Powerful hardware accelerates your machine-learning tasks, making model training and inference much faster.

- Kaggle Kernels: Kaggle Kernels are free Jupyter notebook servers that can integrate GPUs, allowing you to perform machine learning operations on cloud computers. You don't have to rely on your own computer's CPU, avoiding overload and freeing up your local resources.

- Kaggle Datasets: Kaggle datasets are free to download. However, it's important to check the license for each dataset to understand any usage restrictions. Some datasets may have limitations on academic publications or commercial use. You can download datasets directly to your Kaggle notebook or anywhere else via the Kaggle API.

- Saving and Committing Notebooks: To save and commit a notebook on Kaggle, click "Save Version." This saves the current state of your notebook. Once the background kernel finishes generating the output files, you can access them from the Output tab on the main notebook page.

- Collaboration: Kaggle supports collaboration, but multiple users cannot edit a notebook simultaneously. Collaboration on Kaggle is asynchronous, meaning users can share and work on the same notebook at different times.

- Reverting to a Previous Version: If you need to revert to a previous version of your notebook, open the notebook and click on the three vertical dots in the top right corner to select "View Versions." Find the version you want to revert to, click on the "..." menu next to it, and select "Revert to Version." After the notebook reverts, click "Save Version" to commit the changes.

Key Features of Kaggle

Next, let's understand the features Kaggle offers that make it an excellent platform for data science and machine learning enthusiasts. Here are some of the key highlights:

- Datasets: Kaggle hosts a massive collection of datasets on various topics. You can easily search and use these datasets in your projects, which is particularly handy for training and testing your YOLOv8 models.

- Competitions: Known for its exciting competitions, Kaggle allows data scientists and machine learning enthusiasts to solve real-world problems. Competing helps you improve your skills, learn new techniques, and gain recognition in the community.

- Free Access to TPUs: Kaggle provides free access to powerful TPUs, which are essential for training complex machine learning models. This means you can speed up processing and boost the performance of your YOLOv8 projects without incurring extra costs.

- Integration with Github: Kaggle allows you to easily connect your GitHub repository to upload notebooks and save your work. This integration makes it convenient to manage and access your files.

- Community and Discussions: Kaggle boasts a strong community of data scientists and machine learning practitioners. The discussion forums and shared notebooks are fantastic resources for learning and troubleshooting. You can easily find help, share your knowledge, and collaborate with others.

Why Should You Use Kaggle for Your YOLOv8 Projects?

There are multiple platforms for training and evaluating machine learning models, so what makes Kaggle stand out? Let's dive into the benefits of using Kaggle for your machine-learning projects:

- Public Notebooks: You can make your Kaggle notebooks public, allowing other users to view, vote, fork, and discuss your work. Kaggle promotes collaboration, feedback, and the sharing of ideas, helping you improve your YOLOv8 models.

- Comprehensive History of Notebook Commits: Kaggle creates a detailed history of your notebook commits. This allows you to review and track changes over time, making it easier to understand the evolution of your project and revert to previous versions if needed.

- Console Access: Kaggle provides a console, giving you more control over your environment. This feature allows you to perform various tasks directly from the command line, enhancing your workflow and productivity.

- Resource Availability: Each notebook editing session on Kaggle is provided with significant resources: 12 hours of execution time for CPU and GPU sessions, 9 hours of execution time for TPU sessions, and 20 gigabytes of auto-saved disk space.

- Notebook Scheduling: Kaggle allows you to schedule your notebooks to run at specific times. You can automate repetitive tasks without manual intervention, such as training your model at regular intervals.

Keep Learning about Kaggle

If you want to learn more about Kaggle, here are some helpful resources to guide you:

- Kaggle Learn: Discover a variety of free, interactive tutorials on Kaggle Learn. These courses cover essential data science topics and provide hands-on experience to help you master new skills.

- Getting Started with Kaggle: This comprehensive guide walks you through the basics of using Kaggle, from joining competitions to creating your first notebook. It's a great starting point for newcomers.

- Kaggle Medium Page: Explore tutorials, updates, and community contributions on Kaggle's Medium page. It's an excellent source for staying up-to-date with the latest trends and gaining deeper insights into data science.

Summary

We've seen how Kaggle can boost your YOLOv8 projects by providing free access to powerful GPUs, making model training and evaluation efficient. Kaggle's platform is user-friendly, with pre-installed libraries for quick setup.

For more details, visit Kaggle's documentation.

Interested in more YOLOv8 integrations? Check out the Ultralytics integration guide to explore additional tools and capabilities for your machine learning projects.

FAQ

How do I train a YOLOv8 model on Kaggle?

Training a YOLOv8 model on Kaggle is straightforward. First, access the Kaggle YOLOv8 Notebook. Sign in to your Kaggle account, copy and edit the notebook, and select a GPU under the accelerator settings. Run the notebook cells to start training. For more detailed steps, refer to our YOLOv8 Model Training guide.

What are the benefits of using Kaggle for YOLOv8 model training?

Kaggle offers several advantages for training YOLOv8 models:

- Free GPU Access: Utilize powerful GPUs like Nvidia Tesla P100 or T4 x2 for up to 30 hours per week.

- Pre-installed Libraries: Libraries like TensorFlow and PyTorch are pre-installed, simplifying the setup.

- Community Collaboration: Engage with a vast community of data scientists and machine learning enthusiasts.

- Version Control: Easily manage different versions of your notebooks and revert to previous versions if needed.

For more details, visit our Ultralytics integration guide.

What common issues might I encounter when using Kaggle for YOLOv8, and how can I resolve them?

Common issues include:

- Access to GPUs: Ensure you activate a GPU in your notebook settings. Kaggle allows up to 30 hours of GPU usage per week.

- Dataset Licenses: Check the license of each dataset to understand usage restrictions.

- Saving and Committing Notebooks: Click "Save Version" to save your notebook's state and access output files from the Output tab.

- Collaboration: Kaggle supports asynchronous collaboration; multiple users cannot edit a notebook simultaneously.

For more troubleshooting tips, see our Common Issues guide.

Why should I choose Kaggle over other platforms like Google Colab for training YOLOv8 models?

Kaggle offers unique features that make it an excellent choice:

- Public Notebooks: Share your work with the community for feedback and collaboration.

- Free Access to TPUs: Speed up training with powerful TPUs without extra costs.

- Comprehensive History: Track changes over time with a detailed history of notebook commits.

- Resource Availability: Significant resources are provided for each notebook session, including 12 hours of execution time for CPU and GPU sessions.

For a comparison with Google Colab, refer to our Google Colab guide.

How can I revert to a previous version of my Kaggle notebook?

To revert to a previous version:

- Open the notebook and click on the three vertical dots in the top right corner.

- Select "View Versions."

- Find the version you want to revert to, click on the "..." menu next to it, and select "Revert to Version."

- Click "Save Version" to commit the changes.

comments: true

description: Learn how to set up and use MLflow logging with Ultralytics YOLO for enhanced experiment tracking, model reproducibility, and performance improvements.

keywords: MLflow, Ultralytics YOLO, machine learning, experiment tracking, metrics logging, parameter logging, artifact logging

MLflow Integration for Ultralytics YOLO

Introduction

Experiment logging is a crucial aspect of machine learning workflows that enables tracking of various metrics, parameters, and artifacts. It helps to enhance model reproducibility, debug issues, and improve model performance. Ultralytics YOLO, known for its real-time object detection capabilities, now offers integration with MLflow, an open-source platform for complete machine learning lifecycle management.

This documentation page is a comprehensive guide to setting up and utilizing the MLflow logging capabilities for your Ultralytics YOLO project.

What is MLflow?

MLflow is an open-source platform developed by Databricks for managing the end-to-end machine learning lifecycle. It includes tools for tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow is designed to work with any machine learning library and programming language.

Features

- Metrics Logging: Logs metrics at the end of each epoch and at the end of the training.

- Parameter Logging: Logs all the parameters used in the training.

- Artifacts Logging: Logs model artifacts, including weights and configuration files, at the end of the training.

Setup and Prerequisites

Ensure MLflow is installed. If not, install it using pip:

pip install mlflow

Make sure that MLflow logging is enabled in Ultralytics settings. Usually, this is controlled by the settings mflow key. See the settings page for more info.

!!! Example "Update Ultralytics MLflow Settings"

=== "Python"

Within the Python environment, call the `update` method on the `settings` object to change your settings:

```py

from ultralytics import settings

# Update a setting

settings.update({"mlflow": True})

# Reset settings to default values

settings.reset()

```

=== "CLI"

If you prefer using the command-line interface, the following commands will allow you to modify your settings:

```py

# Update a setting

yolo settings runs_dir='/path/to/runs'

# Reset settings to default values

yolo settings reset

```

How to Use

Commands

-

Set a Project Name: You can set the project name via an environment variable:

export MLFLOW_EXPERIMENT_NAME=<your_experiment_name>Or use the

project=<project>argument when training a YOLO model, i.e.yolo train project=my_project. -

Set a Run Name: Similar to setting a project name, you can set the run name via an environment variable:

export MLFLOW_RUN=<your_run_name>Or use the

name=<name>argument when training a YOLO model, i.e.yolo train project=my_project name=my_name. -

Start Local MLflow Server: To start tracking, use:

mlflow server --backend-store-uri runs/mlflow'This will start a local server at http://127.0.0.1:5000 by default and save all mlflow logs to the 'runs/mlflow' directory. To specify a different URI, set the

MLFLOW_TRACKING_URIenvironment variable. -

Kill MLflow Server Instances: To stop all running MLflow instances, run:

ps aux | grep 'mlflow' | grep -v 'grep' | awk '{print $2}' | xargs kill -9

Logging

The logging is taken care of by the on_pretrain_routine_end, on_fit_epoch_end, and on_train_end callback functions. These functions are automatically called during the respective stages of the training process, and they handle the logging of parameters, metrics, and artifacts.

Examples

-

Logging Custom Metrics: You can add custom metrics to be logged by modifying the

trainer.metricsdictionary beforeon_fit_epoch_endis called. -

View Experiment: To view your logs, navigate to your MLflow server (usually http://127.0.0.1:5000) and select your experiment and run.

-

View Run: Runs are individual models inside an experiment. Click on a Run and see the Run details, including uploaded artifacts and model weights.

Disabling MLflow

To turn off MLflow logging:

yolo settings mlflow=False

Conclusion

MLflow logging integration with Ultralytics YOLO offers a streamlined way to keep track of your machine learning experiments. It empowers you to monitor performance metrics and manage artifacts effectively, thus aiding in robust model development and deployment. For further details please visit the MLflow official documentation.

FAQ

How do I set up MLflow logging with Ultralytics YOLO?

To set up MLflow logging with Ultralytics YOLO, you first need to ensure MLflow is installed. You can install it using pip:

pip install mlflow

Next, enable MLflow logging in Ultralytics settings. This can be controlled using the mlflow key. For more information, see the settings guide.

!!! Example "Update Ultralytics MLflow Settings"

=== "Python"

```py

from ultralytics import settings

# Update a setting

settings.update({"mlflow": True})

# Reset settings to default values

settings.reset()

```

=== "CLI"

```py

# Update a setting

yolo settings runs_dir='/path/to/runs'

# Reset settings to default values

yolo settings reset

```

Finally, start a local MLflow server for tracking:

mlflow server --backend-store-uri runs/mlflow

What metrics and parameters can I log using MLflow with Ultralytics YOLO?

Ultralytics YOLO with MLflow supports logging various metrics, parameters, and artifacts throughout the training process:

- Metrics Logging: Tracks metrics at the end of each epoch and upon training completion.

- Parameter Logging: Logs all parameters used in the training process.

- Artifacts Logging: Saves model artifacts like weights and configuration files after training.

For more detailed information, visit the Ultralytics YOLO tracking documentation.

Can I disable MLflow logging once it is enabled?

Yes, you can disable MLflow logging for Ultralytics YOLO by updating the settings. Here's how you can do it using the CLI:

yolo settings mlflow=False

For further customization and resetting settings, refer to the settings guide.

How can I start and stop an MLflow server for Ultralytics YOLO tracking?

To start an MLflow server for tracking your experiments in Ultralytics YOLO, use the following command:

mlflow server --backend-store-uri runs/mlflow

This command starts a local server at http://127.0.0.1:5000 by default. If you need to stop running MLflow server instances, use the following bash command:

ps aux | grep 'mlflow' | grep -v 'grep' | awk '{print $2}' | xargs kill -9

Refer to the commands section for more command options.

What are the benefits of integrating MLflow with Ultralytics YOLO for experiment tracking?

Integrating MLflow with Ultralytics YOLO offers several benefits for managing your machine learning experiments:

- Enhanced Experiment Tracking: Easily track and compare different runs and their outcomes.

- Improved Model Reproducibility: Ensure that your experiments are reproducible by logging all parameters and artifacts.

- Performance Monitoring: Visualize performance metrics over time to make data-driven decisions for model improvements.

For an in-depth look at setting up and leveraging MLflow with Ultralytics YOLO, explore the MLflow Integration for Ultralytics YOLO documentation.

comments: true

description: Optimize YOLOv8 models for mobile and embedded devices by exporting to NCNN format. Enhance performance in resource-constrained environments.

keywords: Ultralytics, YOLOv8, NCNN, model export, machine learning, deployment, mobile, embedded systems, deep learning, AI models

How to Export to NCNN from YOLOv8 for Smooth Deployment

Deploying computer vision models on devices with limited computational power, such as mobile or embedded systems, can be tricky. You need to make sure you use a format optimized for optimal performance. This makes sure that even devices with limited processing power can handle advanced computer vision tasks well.

The export to NCNN format feature allows you to optimize your Ultralytics YOLOv8 models for lightweight device-based applications. In this guide, we'll walk you through how to convert your models to the NCNN format, making it easier for your models to perform well on various mobile and embedded devices.

Why should you export to NCNN?

The NCNN framework, developed by Tencent, is a high-performance neural network inference computing framework optimized specifically for mobile platforms, including mobile phones, embedded devices, and IoT devices. NCNN is compatible with a wide range of platforms, including Linux, Android, iOS, and macOS.

NCNN is known for its fast processing speed on mobile CPUs and enables rapid deployment of deep learning models to mobile platforms. This makes it easier to build smart apps, putting the power of AI right at your fingertips.

Key Features of NCNN Models

NCNN models offer a wide range of key features that enable on-device machine learning by helping developers run their models on mobile, embedded, and edge devices:

-

Efficient and High-Performance: NCNN models are made to be efficient and lightweight, optimized for running on mobile and embedded devices like Raspberry Pi with limited resources. They can also achieve high performance with high accuracy on various computer vision-based tasks.

-

Quantization: NCNN models often support quantization which is a technique that reduces the precision of the model's weights and activations. This leads to further improvements in performance and reduces memory footprint.

-

Compatibility: NCNN models are compatible with popular deep learning frameworks like TensorFlow, Caffe, and ONNX. This compatibility allows developers to use existing models and workflows easily.

-

Easy to Use: NCNN models are designed for easy integration into various applications, thanks to their compatibility with popular deep learning frameworks. Additionally, NCNN offers user-friendly tools for converting models between different formats, ensuring smooth interoperability across the development landscape.

Deployment Options with NCNN

Before we look at the code for exporting YOLOv8 models to the NCNN format, let's understand how NCNN models are normally used.

NCNN models, designed for efficiency and performance, are compatible with a variety of deployment platforms:

-

Mobile Deployment: Specifically optimized for Android and iOS, allowing for seamless integration into mobile applications for efficient on-device inference.

-

Embedded Systems and IoT Devices: If you find that running inference on a Raspberry Pi with the Ultralytics Guide isn't fast enough, switching to an NCNN exported model could help speed things up. NCNN is great for devices like Raspberry Pi and NVIDIA Jetson, especially in situations where you need quick processing right on the device.

-

Desktop and Server Deployment: Capable of being deployed in desktop and server environments across Linux, Windows, and macOS, supporting development, training, and evaluation with higher computational capacities.

Export to NCNN: Converting Your YOLOv8 Model

You can expand model compatibility and deployment flexibility by converting YOLOv8 models to NCNN format.

Installation

To install the required packages, run:

!!! Tip "Installation"

=== "CLI"

```py

# Install the required package for YOLOv8

pip install ultralytics

```

For detailed instructions and best practices related to the installation process, check our Ultralytics Installation guide. While installing the required packages for YOLOv8, if you encounter any difficulties, consult our Common Issues guide for solutions and tips.

Usage

Before diving into the usage instructions, it's important to note that while all Ultralytics YOLOv8 models are available for exporting, you can ensure that the model you select supports export functionality here.

!!! Example "Usage"

=== "Python"

```py

from ultralytics import YOLO

# Load the YOLOv8 model

model = YOLO("yolov8n.pt")

# Export the model to NCNN format

model.export(format="ncnn") # creates '/yolov8n_ncnn_model'

# Load the exported NCNN model

ncnn_model = YOLO("./yolov8n_ncnn_model")

# Run inference

results = ncnn_model("https://ultralytics.com/images/bus.jpg")

```

=== "CLI"

```py

# Export a YOLOv8n PyTorch model to NCNN format

yolo export model=yolov8n.pt format=ncnn # creates '/yolov8n_ncnn_model'

# Run inference with the exported model

yolo predict model='./yolov8n_ncnn_model' source='https://ultralytics.com/images/bus.jpg'

```

For more details about supported export options, visit the Ultralytics documentation page on deployment options.

Deploying Exported YOLOv8 NCNN Models

After successfully exporting your Ultralytics YOLOv8 models to NCNN format, you can now deploy them. The primary and recommended first step for running a NCNN model is to utilize the YOLO("./model_ncnn_model") method, as outlined in the previous usage code snippet. However, for in-depth instructions on deploying your NCNN models in various other settings, take a look at the following resources:

-

Android: This blog explains how to use NCNN models for performing tasks like object detection through Android applications.

-

macOS: Understand how to use NCNN models for performing tasks through macOS.

-

Linux: Explore this page to learn how to deploy NCNN models on limited resource devices like Raspberry Pi and other similar devices.

-

Windows x64 using VS2017: Explore this blog to learn how to deploy NCNN models on windows x64 using Visual Studio Community 2017.

Summary

In this guide, we've gone over exporting Ultralytics YOLOv8 models to the NCNN format. This conversion step is crucial for improving the efficiency and speed of YOLOv8 models, making them more effective and suitable for limited-resource computing environments.

For detailed instructions on usage, please refer to the official NCNN documentation.

Also, if you're interested in exploring other integration options for Ultralytics YOLOv8, be sure to visit our integration guide page for further insights and information.

FAQ

How do I export Ultralytics YOLOv8 models to NCNN format?

To export your Ultralytics YOLOv8 model to NCNN format, follow these steps:

-

Python: Use the

exportfunction from the YOLO class.from ultralytics import YOLO # Load the YOLOv8 model model = YOLO("yolov8n.pt") # Export to NCNN format model.export(format="ncnn") # creates '/yolov8n_ncnn_model' -

CLI: Use the

yolocommand with theexportargument.yolo export model=yolov8n.pt format=ncnn # creates '/yolov8n_ncnn_model'

For detailed export options, check the Export page in the documentation.

What are the advantages of exporting YOLOv8 models to NCNN?

Exporting your Ultralytics YOLOv8 models to NCNN offers several benefits:

- Efficiency: NCNN models are optimized for mobile and embedded devices, ensuring high performance even with limited computational resources.

- Quantization: NCNN supports techniques like quantization that improve model speed and reduce memory usage.

- Broad Compatibility: You can deploy NCNN models on multiple platforms, including Android, iOS, Linux, and macOS.

For more details, see the Export to NCNN section in the documentation.

Why should I use NCNN for my mobile AI applications?

NCNN, developed by Tencent, is specifically optimized for mobile platforms. Key reasons to use NCNN include:

- High Performance: Designed for efficient and fast processing on mobile CPUs.

- Cross-Platform: Compatible with popular frameworks such as TensorFlow and ONNX, making it easier to convert and deploy models across different platforms.

- Community Support: Active community support ensures continual improvements and updates.

To understand more, visit the NCNN overview in the documentation.

What platforms are supported for NCNN model deployment?

NCNN is versatile and supports various platforms:

- Mobile: Android, iOS.

- Embedded Systems and IoT Devices: Devices like Raspberry Pi and NVIDIA Jetson.

- Desktop and Servers: Linux, Windows, and macOS.

If running models on a Raspberry Pi isn't fast enough, converting to the NCNN format could speed things up as detailed in our Raspberry Pi Guide.

How can I deploy Ultralytics YOLOv8 NCNN models on Android?

To deploy your YOLOv8 models on Android:

- Build for Android: Follow the NCNN Build for Android guide.

- Integrate with Your App: Use the NCNN Android SDK to integrate the exported model into your application for efficient on-device inference.

For step-by-step instructions, refer to our guide on Deploying YOLOv8 NCNN Models.

For more advanced guides and use cases, visit the Ultralytics documentation page.

comments: true

description: Enhance YOLOv8 performance using Neural Magic's DeepSparse Engine. Learn how to deploy and benchmark YOLOv8 models on CPUs for efficient object detection.

keywords: YOLOv8, DeepSparse, Neural Magic, model optimization, object detection, inference speed, CPU performance, sparsity, pruning, quantization

Optimizing YOLOv8 Inferences with Neural Magic's DeepSparse Engine

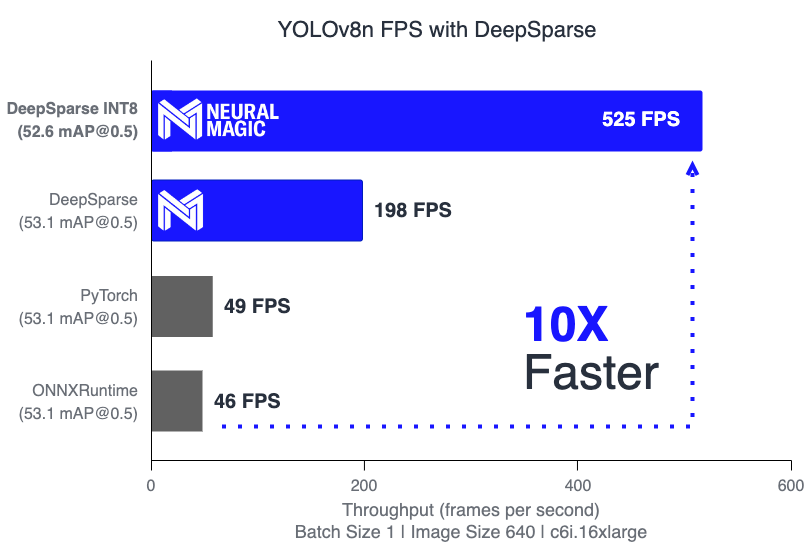

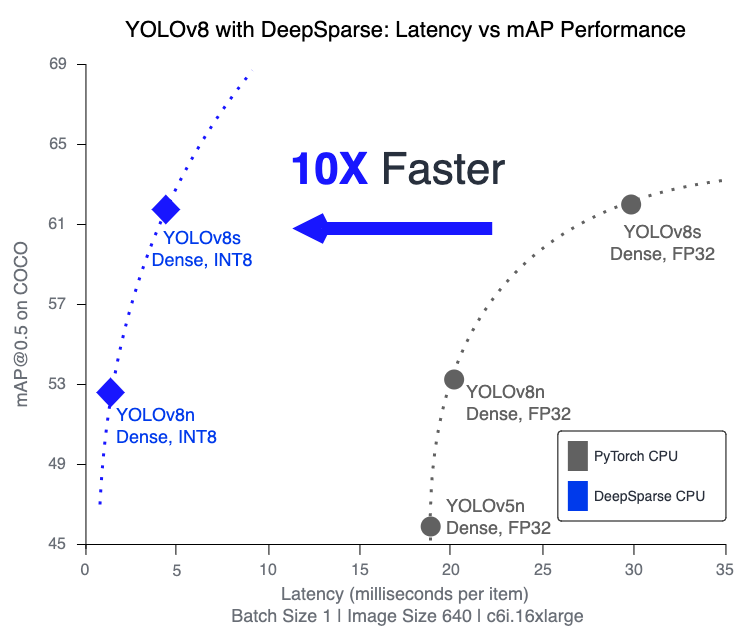

When deploying object detection models like Ultralytics YOLOv8 on various hardware, you can bump into unique issues like optimization. This is where YOLOv8's integration with Neural Magic's DeepSparse Engine steps in. It transforms the way YOLOv8 models are executed and enables GPU-level performance directly on CPUs.

This guide shows you how to deploy YOLOv8 using Neural Magic's DeepSparse, how to run inferences, and also how to benchmark performance to ensure it is optimized.

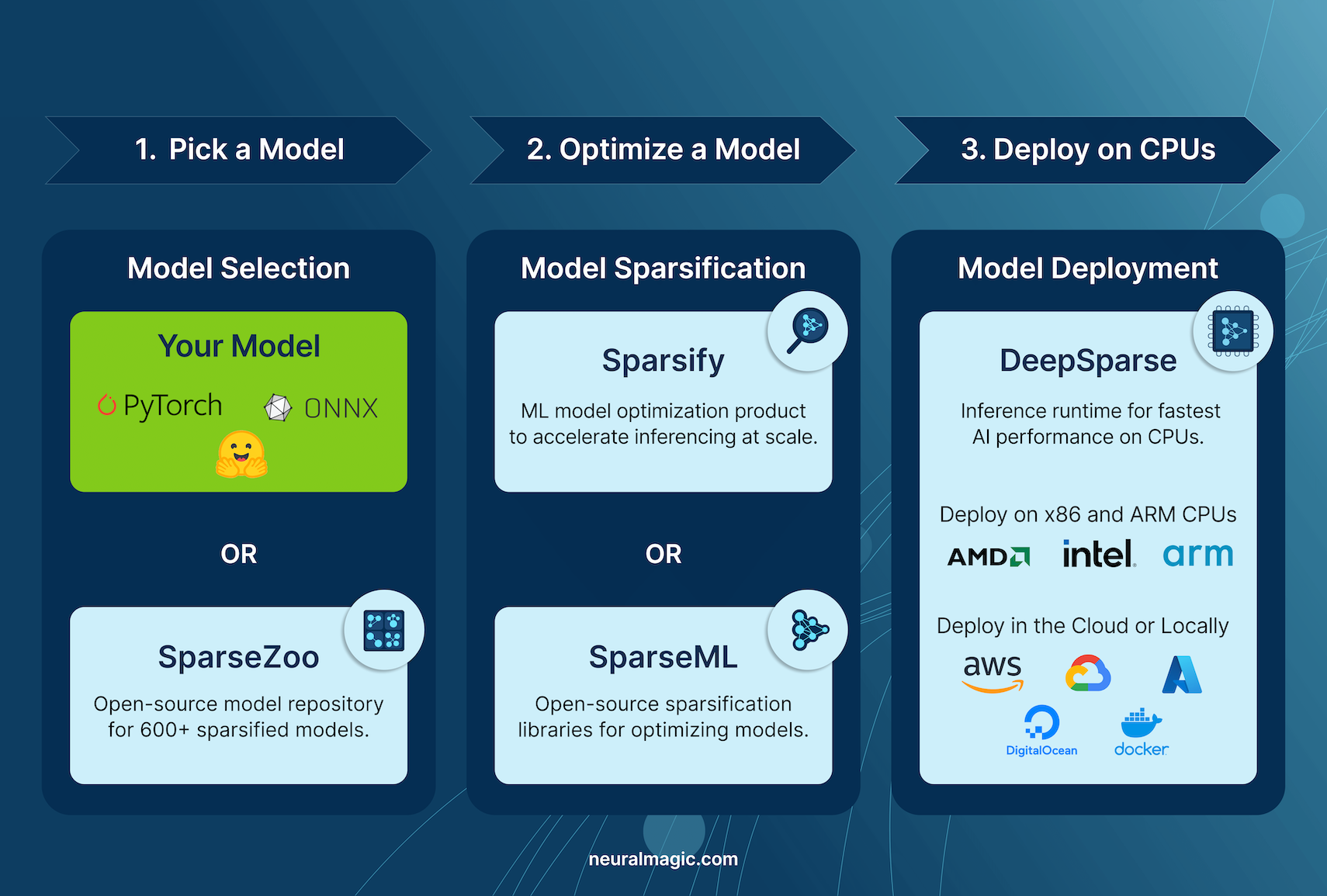

Neural Magic's DeepSparse

Neural Magic's DeepSparse is an inference run-time designed to optimize the execution of neural networks on CPUs. It applies advanced techniques like sparsity, pruning, and quantization to dramatically reduce computational demands while maintaining accuracy. DeepSparse offers an agile solution for efficient and scalable neural network execution across various devices.

Benefits of Integrating Neural Magic's DeepSparse with YOLOv8

Before diving into how to deploy YOLOV8 using DeepSparse, let's understand the benefits of using DeepSparse. Some key advantages include:

- Enhanced Inference Speed: Achieves up to 525 FPS (on YOLOv8n), significantly speeding up YOLOv8's inference capabilities compared to traditional methods.

- Optimized Model Efficiency: Uses pruning and quantization to enhance YOLOv8's efficiency, reducing model size and computational requirements while maintaining accuracy.

-

High Performance on Standard CPUs: Delivers GPU-like performance on CPUs, providing a more accessible and cost-effective option for various applications.

-

Streamlined Integration and Deployment: Offers user-friendly tools for easy integration of YOLOv8 into applications, including image and video annotation features.

-

Support for Various Model Types: Compatible with both standard and sparsity-optimized YOLOv8 models, adding deployment flexibility.

-

Cost-Effective and Scalable Solution: Reduces operational expenses and offers scalable deployment of advanced object detection models.

How Does Neural Magic's DeepSparse Technology Works?

Neural Magic's Deep Sparse technology is inspired by the human brain's efficiency in neural network computation. It adopts two key principles from the brain as follows:

-

Sparsity: The process of sparsification involves pruning redundant information from deep learning networks, leading to smaller and faster models without compromising accuracy. This technique reduces the network's size and computational needs significantly.

-

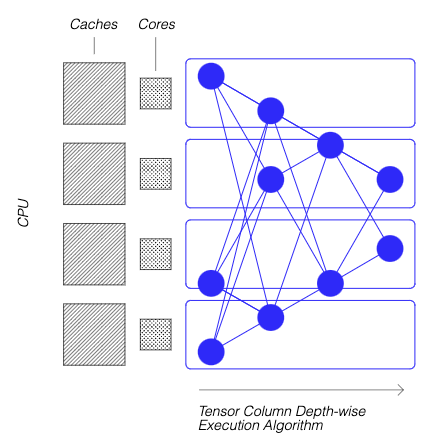

Locality of Reference: DeepSparse uses a unique execution method, breaking the network into Tensor Columns. These columns are executed depth-wise, fitting entirely within the CPU's cache. This approach mimics the brain's efficiency, minimizing data movement and maximizing the CPU's cache use.

For more details on how Neural Magic's DeepSparse technology work, check out their blog post.

Creating A Sparse Version of YOLOv8 Trained on a Custom Dataset

SparseZoo, an open-source model repository by Neural Magic, offers a collection of pre-sparsified YOLOv8 model checkpoints. With SparseML, seamlessly integrated with Ultralytics, users can effortlessly fine-tune these sparse checkpoints on their specific datasets using a straightforward command-line interface.

Checkout Neural Magic's SparseML YOLOv8 documentation for more details.

Usage: Deploying YOLOV8 using DeepSparse

Deploying YOLOv8 with Neural Magic's DeepSparse involves a few straightforward steps. Before diving into the usage instructions, be sure to check out the range of YOLOv8 models offered by Ultralytics. This will help you choose the most appropriate model for your project requirements. Here's how you can get started.

Step 1: Installation

To install the required packages, run:

!!! Tip "Installation"

=== "CLI"

```py

# Install the required packages

pip install deepsparse[yolov8]

```

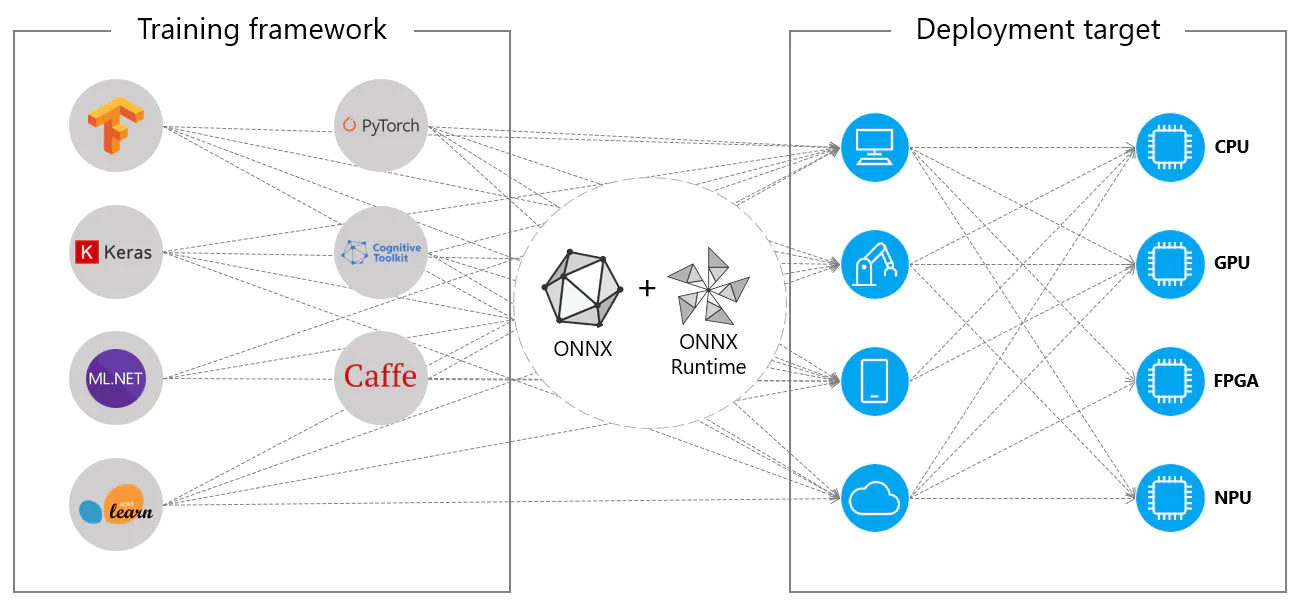

Step 2: Exporting YOLOv8 to ONNX Format

DeepSparse Engine requires YOLOv8 models in ONNX format. Exporting your model to this format is essential for compatibility with DeepSparse. Use the following command to export YOLOv8 models:

!!! Tip "Model Export"

=== "CLI"

```py

# Export YOLOv8 model to ONNX format

yolo task=detect mode=export model=yolov8n.pt format=onnx opset=13

```

This command will save the yolov8n.onnx model to your disk.

Step 3: Deploying and Running Inferences

With your YOLOv8 model in ONNX format, you can deploy and run inferences using DeepSparse. This can be done easily with their intuitive Python API:

!!! Tip "Deploying and Running Inferences"

=== "Python"

```py

from deepsparse import Pipeline

# Specify the path to your YOLOv8 ONNX model

model_path = "path/to/yolov8n.onnx"

# Set up the DeepSparse Pipeline

yolo_pipeline = Pipeline.create(task="yolov8", model_path=model_path)

# Run the model on your images

images = ["path/to/image.jpg"]

pipeline_outputs = yolo_pipeline(images=images)

```

Step 4: Benchmarking Performance

It's important to check that your YOLOv8 model is performing optimally on DeepSparse. You can benchmark your model's performance to analyze throughput and latency:

!!! Tip "Benchmarking"

=== "CLI"

```py

# Benchmark performance

deepsparse.benchmark model_path="path/to/yolov8n.onnx" --scenario=sync --input_shapes="[1,3,640,640]"

```

Step 5: Additional Features

DeepSparse provides additional features for practical integration of YOLOv8 in applications, such as image annotation and dataset evaluation.

!!! Tip "Additional Features"

=== "CLI"

```py

# For image annotation

deepsparse.yolov8.annotate --source "path/to/image.jpg" --model_filepath "path/to/yolov8n.onnx"

# For evaluating model performance on a dataset

deepsparse.yolov8.eval --model_path "path/to/yolov8n.onnx"

```

Running the annotate command processes your specified image, detecting objects, and saving the annotated image with bounding boxes and classifications. The annotated image will be stored in an annotation-results folder. This helps provide a visual representation of the model's detection capabilities.

After running the eval command, you will receive detailed output metrics such as precision, recall, and mAP (mean Average Precision). This provides a comprehensive view of your model's performance on the dataset. This functionality is particularly useful for fine-tuning and optimizing your YOLOv8 models for specific use cases, ensuring high accuracy and efficiency.

Summary

This guide explored integrating Ultralytics' YOLOv8 with Neural Magic's DeepSparse Engine. It highlighted how this integration enhances YOLOv8's performance on CPU platforms, offering GPU-level efficiency and advanced neural network sparsity techniques.

For more detailed information and advanced usage, visit Neural Magic's DeepSparse documentation. Also, check out Neural Magic's documentation on the integration with YOLOv8 here and watch a great session on it here.

Additionally, for a broader understanding of various YOLOv8 integrations, visit the Ultralytics integration guide page, where you can discover a range of other exciting integration possibilities.

FAQ

What is Neural Magic's DeepSparse Engine and how does it optimize YOLOv8 performance?

Neural Magic's DeepSparse Engine is an inference runtime designed to optimize the execution of neural networks on CPUs through advanced techniques such as sparsity, pruning, and quantization. By integrating DeepSparse with YOLOv8, you can achieve GPU-like performance on standard CPUs, significantly enhancing inference speed, model efficiency, and overall performance while maintaining accuracy. For more details, check out the Neural Magic's DeepSparse section.

How can I install the needed packages to deploy YOLOv8 using Neural Magic's DeepSparse?

Installing the required packages for deploying YOLOv8 with Neural Magic's DeepSparse is straightforward. You can easily install them using the CLI. Here's the command you need to run:

pip install deepsparse[yolov8]

Once installed, follow the steps provided in the Installation section to set up your environment and start using DeepSparse with YOLOv8.

How do I convert YOLOv8 models to ONNX format for use with DeepSparse?

To convert YOLOv8 models to the ONNX format, which is required for compatibility with DeepSparse, you can use the following CLI command:

yolo task=detect mode=export model=yolov8n.pt format=onnx opset=13

This command will export your YOLOv8 model (yolov8n.pt) to a format (yolov8n.onnx) that can be utilized by the DeepSparse Engine. More information about model export can be found in the Model Export section.

How do I benchmark YOLOv8 performance on the DeepSparse Engine?

Benchmarking YOLOv8 performance on DeepSparse helps you analyze throughput and latency to ensure your model is optimized. You can use the following CLI command to run a benchmark:

deepsparse.benchmark model_path="path/to/yolov8n.onnx" --scenario=sync --input_shapes="[1,3,640,640]"

This command will provide you with vital performance metrics. For more details, see the Benchmarking Performance section.

Why should I use Neural Magic's DeepSparse with YOLOv8 for object detection tasks?

Integrating Neural Magic's DeepSparse with YOLOv8 offers several benefits:

- Enhanced Inference Speed: Achieves up to 525 FPS, significantly speeding up YOLOv8's capabilities.

- Optimized Model Efficiency: Uses sparsity, pruning, and quantization techniques to reduce model size and computational needs while maintaining accuracy.

- High Performance on Standard CPUs: Offers GPU-like performance on cost-effective CPU hardware.

- Streamlined Integration: User-friendly tools for easy deployment and integration.

- Flexibility: Supports both standard and sparsity-optimized YOLOv8 models.

- Cost-Effective: Reduces operational expenses through efficient resource utilization.

For a deeper dive into these advantages, visit the Benefits of Integrating Neural Magic's DeepSparse with YOLOv8 section.

comments: true

description: Learn how to export YOLOv8 models to ONNX format for flexible deployment across various platforms with enhanced performance.

keywords: YOLOv8, ONNX, model export, Ultralytics, ONNX Runtime, machine learning, model deployment, computer vision, deep learning

ONNX Export for YOLOv8 Models

Often, when deploying computer vision models, you'll need a model format that's both flexible and compatible with multiple platforms.

Exporting Ultralytics YOLOv8 models to ONNX format streamlines deployment and ensures optimal performance across various environments. This guide will show you how to easily convert your YOLOv8 models to ONNX and enhance their scalability and effectiveness in real-world applications.

ONNX and ONNX Runtime

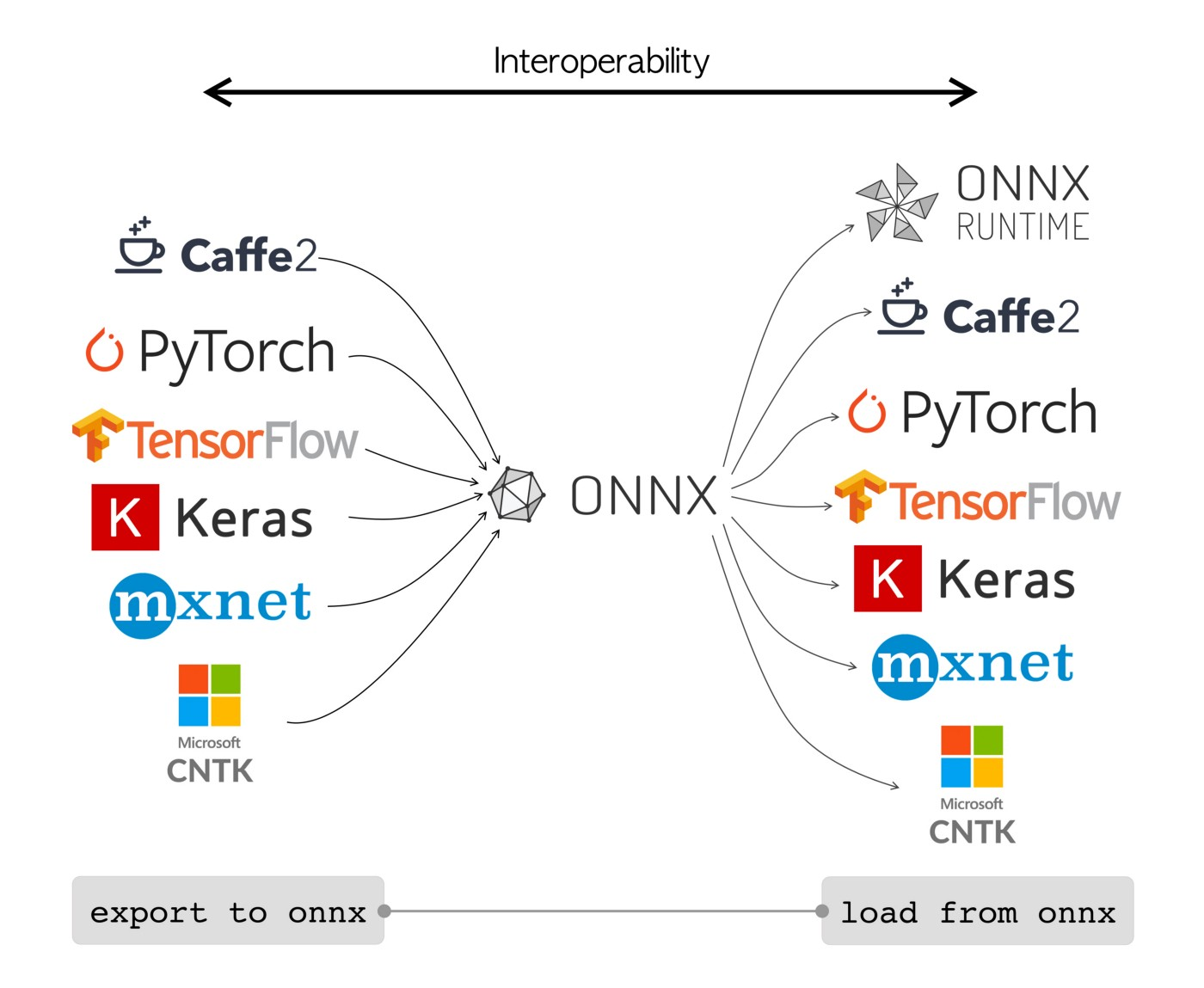

ONNX, which stands for Open Neural Network Exchange, is a community project that Facebook and Microsoft initially developed. The ongoing development of ONNX is a collaborative effort supported by various organizations like IBM, Amazon (through AWS), and Google. The project aims to create an open file format designed to represent machine learning models in a way that allows them to be used across different AI frameworks and hardware.

ONNX models can be used to transition between different frameworks seamlessly. For instance, a deep learning model trained in PyTorch can be exported to ONNX format and then easily imported into TensorFlow.

Alternatively, ONNX models can be used with ONNX Runtime. ONNX Runtime is a versatile cross-platform accelerator for machine learning models that is compatible with frameworks like PyTorch, TensorFlow, TFLite, scikit-learn, etc.

ONNX Runtime optimizes the execution of ONNX models by leveraging hardware-specific capabilities. This optimization allows the models to run efficiently and with high performance on various hardware platforms, including CPUs, GPUs, and specialized accelerators.

Whether used independently or in tandem with ONNX Runtime, ONNX provides a flexible solution for machine learning model deployment and compatibility.

Key Features of ONNX Models

The ability of ONNX to handle various formats can be attributed to the following key features:

-

Common Model Representation: ONNX defines a common set of operators (like convolutions, layers, etc.) and a standard data format. When a model is converted to ONNX format, its architecture and weights are translated into this common representation. This uniformity ensures that the model can be understood by any framework that supports ONNX.

-

Versioning and Backward Compatibility: ONNX maintains a versioning system for its operators. This ensures that even as the standard evolves, models created in older versions remain usable. Backward compatibility is a crucial feature that prevents models from becoming obsolete quickly.

-

Graph-based Model Representation: ONNX represents models as computational graphs. This graph-based structure is a universal way of representing machine learning models, where nodes represent operations or computations, and edges represent the tensors flowing between them. This format is easily adaptable to various frameworks which also represent models as graphs.

-

Tools and Ecosystem: There is a rich ecosystem of tools around ONNX that assist in model conversion, visualization, and optimization. These tools make it easier for developers to work with ONNX models and to convert models between different frameworks seamlessly.

Common Usage of ONNX

Before we jump into how to export YOLOv8 models to the ONNX format, let's take a look at where ONNX models are usually used.

CPU Deployment

ONNX models are often deployed on CPUs due to their compatibility with ONNX Runtime. This runtime is optimized for CPU execution. It significantly improves inference speed and makes real-time CPU deployments feasible.

Supported Deployment Options

While ONNX models are commonly used on CPUs, they can also be deployed on the following platforms:

-

GPU Acceleration: ONNX fully supports GPU acceleration, particularly NVIDIA CUDA. This enables efficient execution on NVIDIA GPUs for tasks that demand high computational power.

-

Edge and Mobile Devices: ONNX extends to edge and mobile devices, perfect for on-device and real-time inference scenarios. It's lightweight and compatible with edge hardware.

-

Web Browsers: ONNX can run directly in web browsers, powering interactive and dynamic web-based AI applications.

Exporting YOLOv8 Models to ONNX

You can expand model compatibility and deployment flexibility by converting YOLOv8 models to ONNX format.

Installation

To install the required package, run:

!!! Tip "Installation"

=== "CLI"

```py

# Install the required package for YOLOv8

pip install ultralytics

```

For detailed instructions and best practices related to the installation process, check our YOLOv8 Installation guide. While installing the required packages for YOLOv8, if you encounter any difficulties, consult our Common Issues guide for solutions and tips.

Usage

Before diving into the usage instructions, be sure to check out the range of YOLOv8 models offered by Ultralytics. This will help you choose the most appropriate model for your project requirements.

!!! Example "Usage"

=== "Python"

```py

from ultralytics import YOLO

# Load the YOLOv8 model

model = YOLO("yolov8n.pt")

# Export the model to ONNX format

model.export(format="onnx") # creates 'yolov8n.onnx'

# Load the exported ONNX model

onnx_model = YOLO("yolov8n.onnx")

# Run inference

results = onnx_model("https://ultralytics.com/images/bus.jpg")

```

=== "CLI"

```py

# Export a YOLOv8n PyTorch model to ONNX format

yolo export model=yolov8n.pt format=onnx # creates 'yolov8n.onnx'

# Run inference with the exported model

yolo predict model=yolov8n.onnx source='https://ultralytics.com/images/bus.jpg'

```

For more details about the export process, visit the Ultralytics documentation page on exporting.

Deploying Exported YOLOv8 ONNX Models

Once you've successfully exported your Ultralytics YOLOv8 models to ONNX format, the next step is deploying these models in various environments. For detailed instructions on deploying your ONNX models, take a look at the following resources:

-

ONNX Runtime Python API Documentation: This guide provides essential information for loading and running ONNX models using ONNX Runtime.

-

Deploying on Edge Devices: Check out this docs page for different examples of deploying ONNX models on edge.

-

ONNX Tutorials on GitHub: A collection of comprehensive tutorials that cover various aspects of using and implementing ONNX models in different scenarios.

Summary

In this guide, you've learned how to export Ultralytics YOLOv8 models to ONNX format to increase their interoperability and performance across various platforms. You were also introduced to the ONNX Runtime and ONNX deployment options.

For further details on usage, visit the ONNX official documentation.

Also, if you'd like to know more about other Ultralytics YOLOv8 integrations, visit our integration guide page. You'll find plenty of useful resources and insights there.

FAQ

How do I export YOLOv8 models to ONNX format using Ultralytics?

To export your YOLOv8 models to ONNX format using Ultralytics, follow these steps:

!!! Example "Usage"

=== "Python"

```py

from ultralytics import YOLO

# Load the YOLOv8 model

model = YOLO("yolov8n.pt")

# Export the model to ONNX format

model.export(format="onnx") # creates 'yolov8n.onnx'

# Load the exported ONNX model

onnx_model = YOLO("yolov8n.onnx")

# Run inference

results = onnx_model("https://ultralytics.com/images/bus.jpg")

```

=== "CLI"

```py

# Export a YOLOv8n PyTorch model to ONNX format

yolo export model=yolov8n.pt format=onnx # creates 'yolov8n.onnx'

# Run inference with the exported model

yolo predict model=yolov8n.onnx source='https://ultralytics.com/images/bus.jpg'

```

For more details, visit the export documentation.

What are the advantages of using ONNX Runtime for deploying YOLOv8 models?

Using ONNX Runtime for deploying YOLOv8 models offers several advantages:

- Cross-platform compatibility: ONNX Runtime supports various platforms, such as Windows, macOS, and Linux, ensuring your models run smoothly across different environments.

- Hardware acceleration: ONNX Runtime can leverage hardware-specific optimizations for CPUs, GPUs, and dedicated accelerators, providing high-performance inference.

- Framework interoperability: Models trained in popular frameworks like PyTorch or TensorFlow can be easily converted to ONNX format and run using ONNX Runtime.

Learn more by checking the ONNX Runtime documentation.

What deployment options are available for YOLOv8 models exported to ONNX?

YOLOv8 models exported to ONNX can be deployed on various platforms including:

- CPUs: Utilizing ONNX Runtime for optimized CPU inference.

- GPUs: Leveraging NVIDIA CUDA for high-performance GPU acceleration.

- Edge devices: Running lightweight models on edge and mobile devices for real-time, on-device inference.

- Web browsers: Executing models directly within web browsers for interactive web-based applications.

For more information, explore our guide on model deployment options.

Why should I use ONNX format for Ultralytics YOLOv8 models?

Using ONNX format for Ultralytics YOLOv8 models provides numerous benefits:

- Interoperability: ONNX allows models to be transferred between different machine learning frameworks seamlessly.

- Performance Optimization: ONNX Runtime can enhance model performance by utilizing hardware-specific optimizations.

- Flexibility: ONNX supports various deployment environments, enabling you to use the same model on different platforms without modification.

Refer to the comprehensive guide on exporting YOLOv8 models to ONNX.

How can I troubleshoot issues when exporting YOLOv8 models to ONNX?

When exporting YOLOv8 models to ONNX, you might encounter common issues such as mismatched dependencies or unsupported operations. To troubleshoot these problems:

- Verify that you have the correct version of required dependencies installed.

- Check the official ONNX documentation for supported operators and features.

- Review the error messages for clues and consult the Ultralytics Common Issues guide.

If issues persist, contact Ultralytics support for further assistance.

comments: true

description: Learn to export YOLOv8 models to OpenVINO format for up to 3x CPU speedup and hardware acceleration on Intel GPU and NPU.

keywords: YOLOv8, OpenVINO, model export, Intel, AI inference, CPU speedup, GPU acceleration, NPU, deep learning

Intel OpenVINO Export

In this guide, we cover exporting YOLOv8 models to the OpenVINO format, which can provide up to 3x CPU speedup, as well as accelerating YOLO inference on Intel GPU and NPU hardware.

OpenVINO, short for Open Visual Inference & Neural Network Optimization toolkit, is a comprehensive toolkit for optimizing and deploying AI inference models. Even though the name contains Visual, OpenVINO also supports various additional tasks including language, audio, time series, etc.

Watch: How To Export and Optimize an Ultralytics YOLOv8 Model for Inference with OpenVINO.

Usage Examples

Export a YOLOv8n model to OpenVINO format and run inference with the exported model.

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a YOLOv8n PyTorch model

model = YOLO("yolov8n.pt")

# Export the model

model.export(format="openvino") # creates 'yolov8n_openvino_model/'

# Load the exported OpenVINO model

ov_model = YOLO("yolov8n_openvino_model/")

# Run inference

results = ov_model("https://ultralytics.com/images/bus.jpg")

```

=== "CLI"

```py

# Export a YOLOv8n PyTorch model to OpenVINO format

yolo export model=yolov8n.pt format=openvino # creates 'yolov8n_openvino_model/'

# Run inference with the exported model

yolo predict model=yolov8n_openvino_model source='https://ultralytics.com/images/bus.jpg'

```

Arguments

| Key | Value | Description |

|---|---|---|

format |

'openvino' |

format to export to |

imgsz |

640 |

image size as scalar or (h, w) list, i.e. (640, 480) |

half |

False |

FP16 quantization |

int8 |

False |

INT8 quantization |

batch |

1 |

batch size for inference |

Benefits of OpenVINO

- Performance: OpenVINO delivers high-performance inference by utilizing the power of Intel CPUs, integrated and discrete GPUs, and FPGAs.

- Support for Heterogeneous Execution: OpenVINO provides an API to write once and deploy on any supported Intel hardware (CPU, GPU, FPGA, VPU, etc.).

- Model Optimizer: OpenVINO provides a Model Optimizer that imports, converts, and optimizes models from popular deep learning frameworks such as PyTorch, TensorFlow, TensorFlow Lite, Keras, ONNX, PaddlePaddle, and Caffe.

- Ease of Use: The toolkit comes with more than 80 tutorial notebooks (including YOLOv8 optimization) teaching different aspects of the toolkit.

OpenVINO Export Structure

When you export a model to OpenVINO format, it results in a directory containing the following:

- XML file: Describes the network topology.

- BIN file: Contains the weights and biases binary data.

- Mapping file: Holds mapping of original model output tensors to OpenVINO tensor names.

You can use these files to run inference with the OpenVINO Inference Engine.

Using OpenVINO Export in Deployment

Once you have the OpenVINO files, you can use the OpenVINO Runtime to run the model. The Runtime provides a unified API to inference across all supported Intel hardware. It also provides advanced capabilities like load balancing across Intel hardware and asynchronous execution. For more information on running the inference, refer to the Inference with OpenVINO Runtime Guide.

Remember, you'll need the XML and BIN files as well as any application-specific settings like input size, scale factor for normalization, etc., to correctly set up and use the model with the Runtime.

In your deployment application, you would typically do the following steps:

- Initialize OpenVINO by creating

core = Core(). - Load the model using the

core.read_model()method. - Compile the model using the

core.compile_model()function. - Prepare the input (image, text, audio, etc.).

- Run inference using

compiled_model(input_data).

For more detailed steps and code snippets, refer to the OpenVINO documentation or API tutorial.

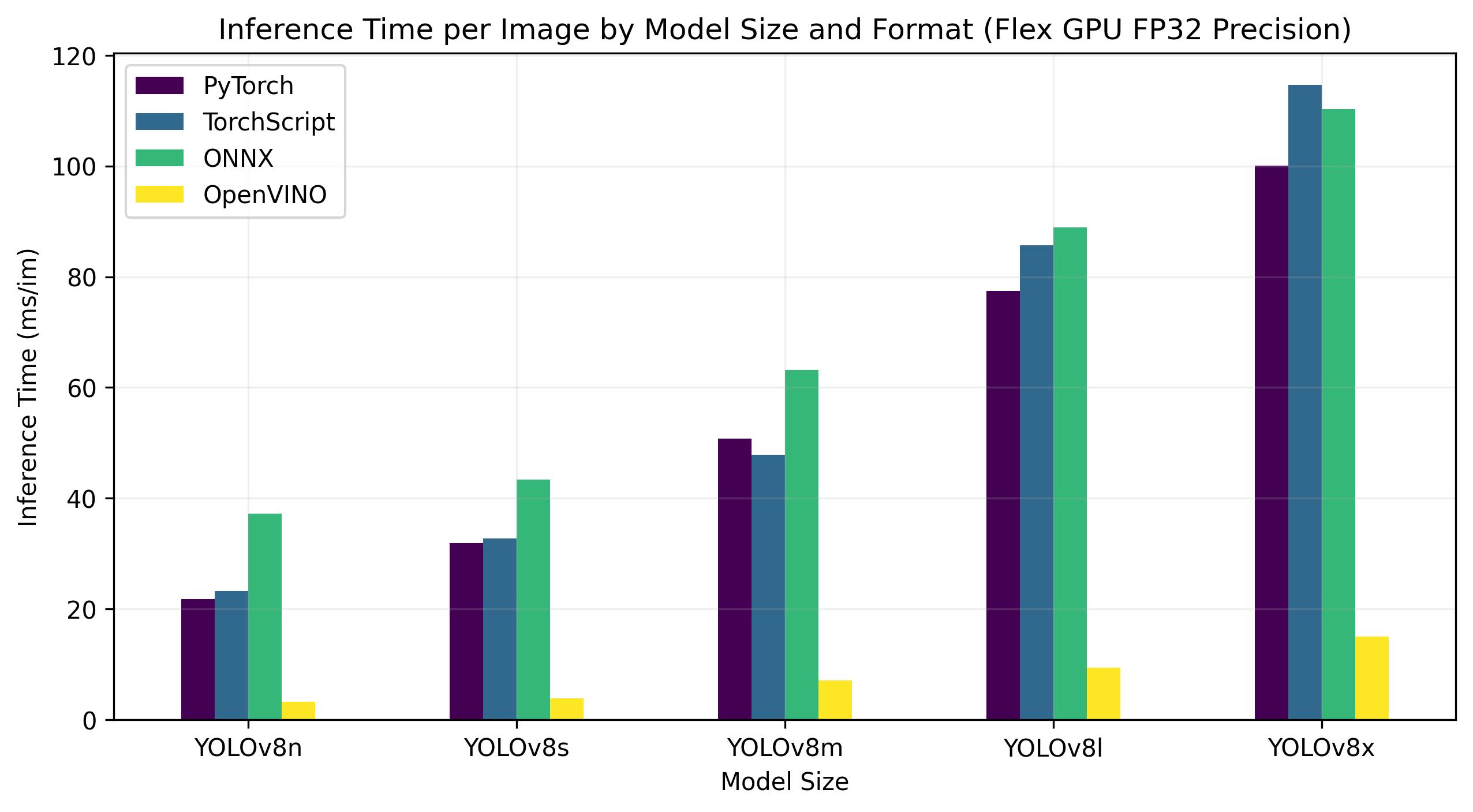

OpenVINO YOLOv8 Benchmarks

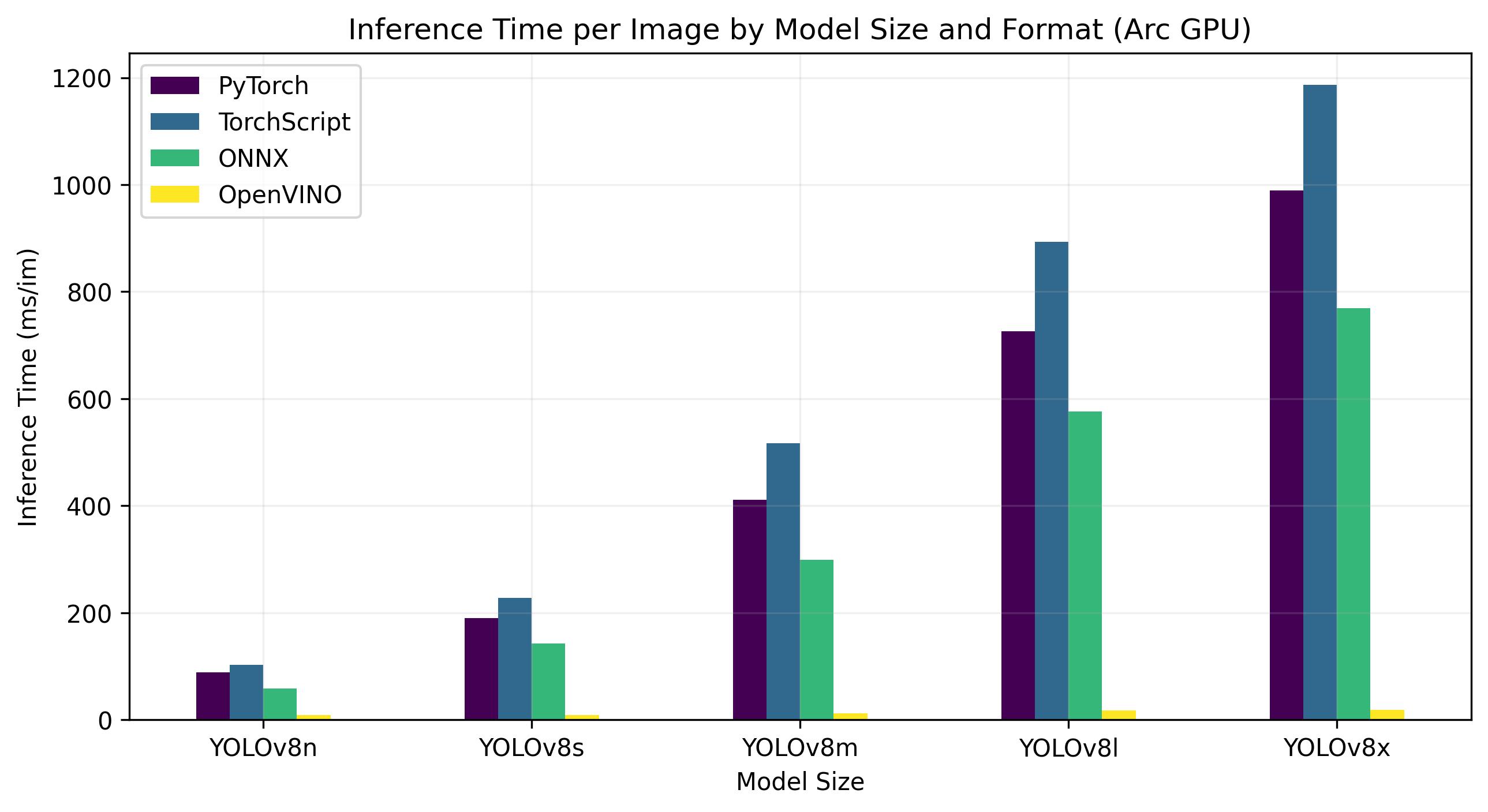

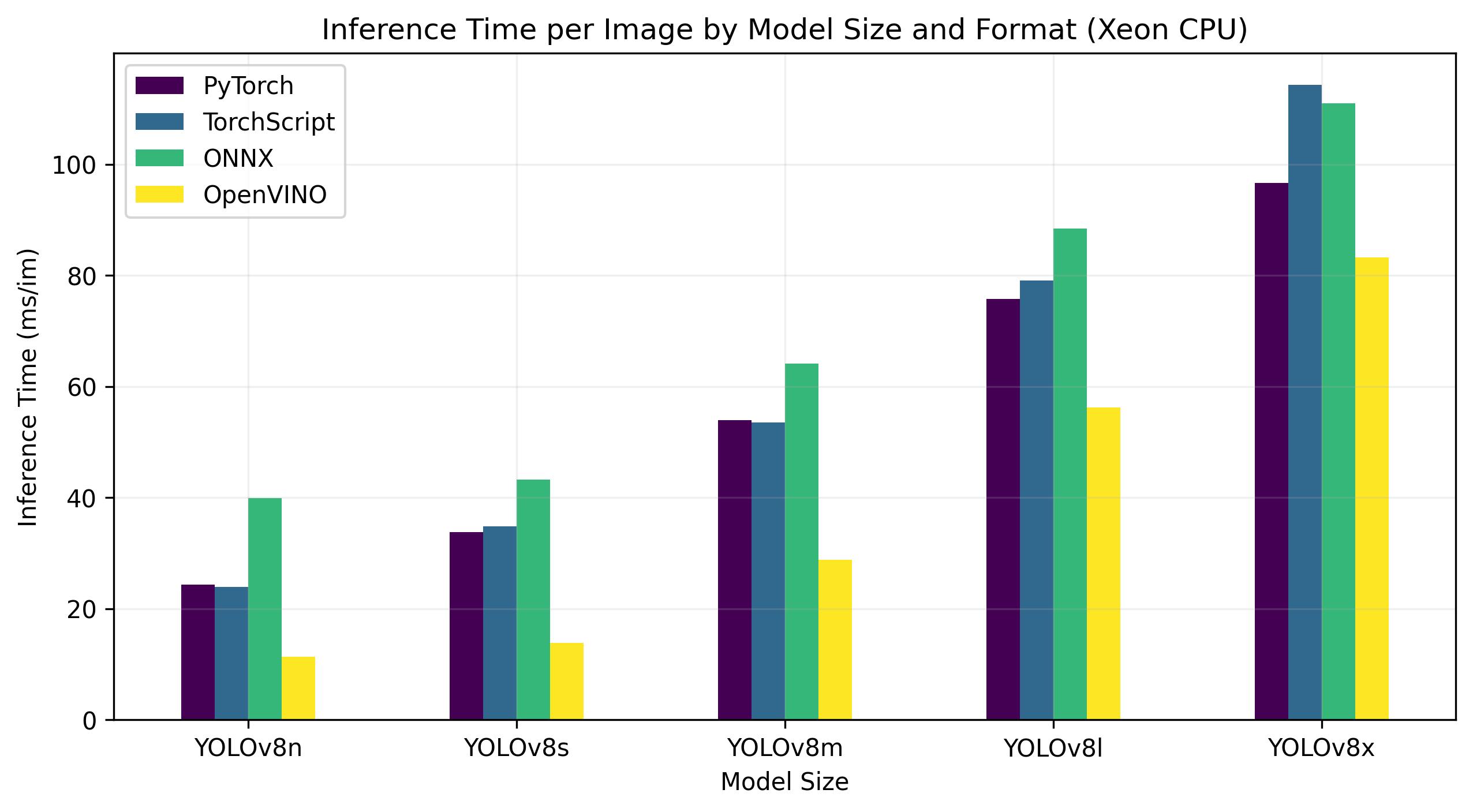

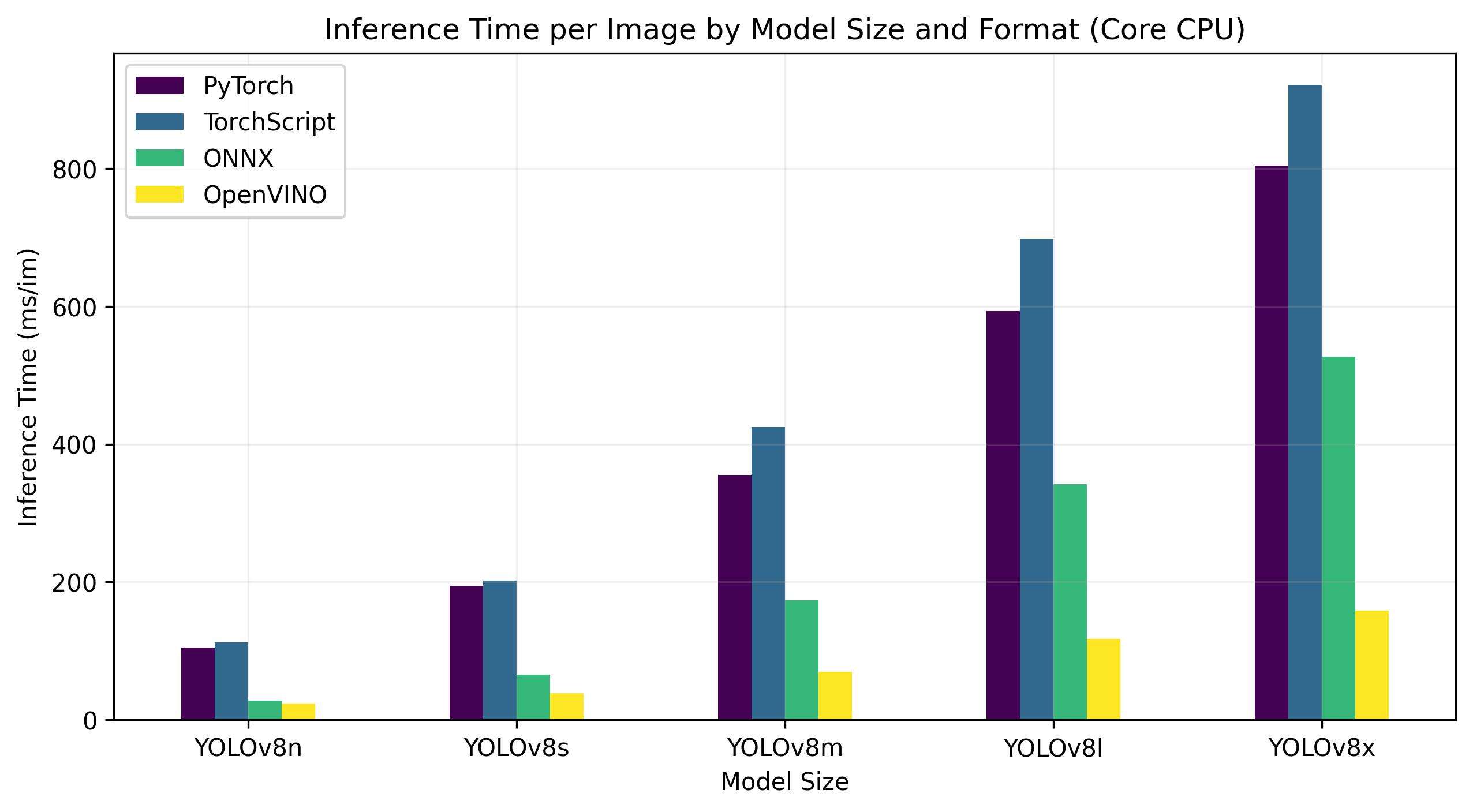

YOLOv8 benchmarks below were run by the Ultralytics team on 4 different model formats measuring speed and accuracy: PyTorch, TorchScript, ONNX and OpenVINO. Benchmarks were run on Intel Flex and Arc GPUs, and on Intel Xeon CPUs at FP32 precision (with the half=False argument).

!!! Note

The benchmarking results below are for reference and might vary based on the exact hardware and software configuration of a system, as well as the current workload of the system at the time the benchmarks are run.

All benchmarks run with `openvino` Python package version [2023.0.1](https://pypi.org/project/openvino/2023.0.1/).

Intel Flex GPU

The Intel® Data Center GPU Flex Series is a versatile and robust solution designed for the intelligent visual cloud. This GPU supports a wide array of workloads including media streaming, cloud gaming, AI visual inference, and virtual desktop Infrastructure workloads. It stands out for its open architecture and built-in support for the AV1 encode, providing a standards-based software stack for high-performance, cross-architecture applications. The Flex Series GPU is optimized for density and quality, offering high reliability, availability, and scalability.

Benchmarks below run on Intel® Data Center GPU Flex 170 at FP32 precision.

| Model | Format | Status | Size (MB) | mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|---|

| YOLOv8n | PyTorch | ✅ | 6.2 | 0.3709 | 21.79 |

| YOLOv8n | TorchScript | ✅ | 12.4 | 0.3704 | 23.24 |

| YOLOv8n | ONNX | ✅ | 12.2 | 0.3704 | 37.22 |

| YOLOv8n | OpenVINO | ✅ | 12.3 | 0.3703 | 3.29 |

| YOLOv8s | PyTorch | ✅ | 21.5 | 0.4471 | 31.89 |

| YOLOv8s | TorchScript | ✅ | 42.9 | 0.4472 | 32.71 |

| YOLOv8s | ONNX | ✅ | 42.8 | 0.4472 | 43.42 |

| YOLOv8s | OpenVINO | ✅ | 42.9 | 0.4470 | 3.92 |

| YOLOv8m | PyTorch | ✅ | 49.7 | 0.5013 | 50.75 |

| YOLOv8m | TorchScript | ✅ | 99.2 | 0.4999 | 47.90 |

| YOLOv8m | ONNX | ✅ | 99.0 | 0.4999 | 63.16 |

| YOLOv8m | OpenVINO | ✅ | 49.8 | 0.4997 | 7.11 |

| YOLOv8l | PyTorch | ✅ | 83.7 | 0.5293 | 77.45 |

| YOLOv8l | TorchScript | ✅ | 167.2 | 0.5268 | 85.71 |

| YOLOv8l | ONNX | ✅ | 166.8 | 0.5268 | 88.94 |

| YOLOv8l | OpenVINO | ✅ | 167.0 | 0.5264 | 9.37 |

| YOLOv8x | PyTorch | ✅ | 130.5 | 0.5404 | 100.09 |

| YOLOv8x | TorchScript | ✅ | 260.7 | 0.5371 | 114.64 |

| YOLOv8x | ONNX | ✅ | 260.4 | 0.5371 | 110.32 |

| YOLOv8x | OpenVINO | ✅ | 260.6 | 0.5367 | 15.02 |

This table represents the benchmark results for five different models (YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, YOLOv8x) across four different formats (PyTorch, TorchScript, ONNX, OpenVINO), giving us the status, size, mAP50-95(B) metric, and inference time for each combination.

Intel Arc GPU

Intel® Arc™ represents Intel's foray into the dedicated GPU market. The Arc™ series, designed to compete with leading GPU manufacturers like AMD and Nvidia, caters to both the laptop and desktop markets. The series includes mobile versions for compact devices like laptops, and larger, more powerful versions for desktop computers.

The Arc™ series is divided into three categories: Arc™ 3, Arc™ 5, and Arc™ 7, with each number indicating the performance level. Each category includes several models, and the 'M' in the GPU model name signifies a mobile, integrated variant.

Early reviews have praised the Arc™ series, particularly the integrated A770M GPU, for its impressive graphics performance. The availability of the Arc™ series varies by region, and additional models are expected to be released soon. Intel® Arc™ GPUs offer high-performance solutions for a range of computing needs, from gaming to content creation.

Benchmarks below run on Intel® Arc 770 GPU at FP32 precision.

| Model | Format | Status | Size (MB) | metrics/mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|---|

| YOLOv8n | PyTorch | ✅ | 6.2 | 0.3709 | 88.79 |

| YOLOv8n | TorchScript | ✅ | 12.4 | 0.3704 | 102.66 |

| YOLOv8n | ONNX | ✅ | 12.2 | 0.3704 | 57.98 |

| YOLOv8n | OpenVINO | ✅ | 12.3 | 0.3703 | 8.52 |

| YOLOv8s | PyTorch | ✅ | 21.5 | 0.4471 | 189.83 |

| YOLOv8s | TorchScript | ✅ | 42.9 | 0.4472 | 227.58 |

| YOLOv8s | ONNX | ✅ | 42.7 | 0.4472 | 142.03 |

| YOLOv8s | OpenVINO | ✅ | 42.9 | 0.4469 | 9.19 |

| YOLOv8m | PyTorch | ✅ | 49.7 | 0.5013 | 411.64 |

| YOLOv8m | TorchScript | ✅ | 99.2 | 0.4999 | 517.12 |

| YOLOv8m | ONNX | ✅ | 98.9 | 0.4999 | 298.68 |

| YOLOv8m | OpenVINO | ✅ | 99.1 | 0.4996 | 12.55 |

| YOLOv8l | PyTorch | ✅ | 83.7 | 0.5293 | 725.73 |

| YOLOv8l | TorchScript | ✅ | 167.1 | 0.5268 | 892.83 |

| YOLOv8l | ONNX | ✅ | 166.8 | 0.5268 | 576.11 |

| YOLOv8l | OpenVINO | ✅ | 167.0 | 0.5262 | 17.62 |

| YOLOv8x | PyTorch | ✅ | 130.5 | 0.5404 | 988.92 |

| YOLOv8x | TorchScript | ✅ | 260.7 | 0.5371 | 1186.42 |

| YOLOv8x | ONNX | ✅ | 260.4 | 0.5371 | 768.90 |

| YOLOv8x | OpenVINO | ✅ | 260.6 | 0.5367 | 19 |

Intel Xeon CPU

The Intel® Xeon® CPU is a high-performance, server-grade processor designed for complex and demanding workloads. From high-end cloud computing and virtualization to artificial intelligence and machine learning applications, Xeon® CPUs provide the power, reliability, and flexibility required for today's data centers.

Notably, Xeon® CPUs deliver high compute density and scalability, making them ideal for both small businesses and large enterprises. By choosing Intel® Xeon® CPUs, organizations can confidently handle their most demanding computing tasks and foster innovation while maintaining cost-effectiveness and operational efficiency.

Benchmarks below run on 4th Gen Intel® Xeon® Scalable CPU at FP32 precision.

| Model | Format | Status | Size (MB) | metrics/mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|---|

| YOLOv8n | PyTorch | ✅ | 6.2 | 0.3709 | 24.36 |

| YOLOv8n | TorchScript | ✅ | 12.4 | 0.3704 | 23.93 |

| YOLOv8n | ONNX | ✅ | 12.2 | 0.3704 | 39.86 |

| YOLOv8n | OpenVINO | ✅ | 12.3 | 0.3704 | 11.34 |

| YOLOv8s | PyTorch | ✅ | 21.5 | 0.4471 | 33.77 |

| YOLOv8s | TorchScript | ✅ | 42.9 | 0.4472 | 34.84 |

| YOLOv8s | ONNX | ✅ | 42.8 | 0.4472 | 43.23 |

| YOLOv8s | OpenVINO | ✅ | 42.9 | 0.4471 | 13.86 |

| YOLOv8m | PyTorch | ✅ | 49.7 | 0.5013 | 53.91 |

| YOLOv8m | TorchScript | ✅ | 99.2 | 0.4999 | 53.51 |

| YOLOv8m | ONNX | ✅ | 99.0 | 0.4999 | 64.16 |

| YOLOv8m | OpenVINO | ✅ | 99.1 | 0.4996 | 28.79 |

| YOLOv8l | PyTorch | ✅ | 83.7 | 0.5293 | 75.78 |

| YOLOv8l | TorchScript | ✅ | 167.2 | 0.5268 | 79.13 |

| YOLOv8l | ONNX | ✅ | 166.8 | 0.5268 | 88.45 |

| YOLOv8l | OpenVINO | ✅ | 167.0 | 0.5263 | 56.23 |

| YOLOv8x | PyTorch | ✅ | 130.5 | 0.5404 | 96.60 |

| YOLOv8x | TorchScript | ✅ | 260.7 | 0.5371 | 114.28 |

| YOLOv8x | ONNX | ✅ | 260.4 | 0.5371 | 111.02 |

| YOLOv8x | OpenVINO | ✅ | 260.6 | 0.5371 | 83.28 |

Intel Core CPU

The Intel® Core® series is a range of high-performance processors by Intel. The lineup includes Core i3 (entry-level), Core i5 (mid-range), Core i7 (high-end), and Core i9 (extreme performance). Each series caters to different computing needs and budgets, from everyday tasks to demanding professional workloads. With each new generation, improvements are made to performance, energy efficiency, and features.

Benchmarks below run on 13th Gen Intel® Core® i7-13700H CPU at FP32 precision.

| Model | Format | Status | Size (MB) | metrics/mAP50-95(B) | Inference time (ms/im) |

|---|---|---|---|---|---|

| YOLOv8n | PyTorch | ✅ | 6.2 | 0.4478 | 104.61 |

| YOLOv8n | TorchScript | ✅ | 12.4 | 0.4525 | 112.39 |

| YOLOv8n | ONNX | ✅ | 12.2 | 0.4525 | 28.02 |

| YOLOv8n | OpenVINO | ✅ | 12.3 | 0.4504 | 23.53 |

| YOLOv8s | PyTorch | ✅ | 21.5 | 0.5885 | 194.83 |

| YOLOv8s | TorchScript | ✅ | 43.0 | 0.5962 | 202.01 |

| YOLOv8s | ONNX | ✅ | 42.8 | 0.5962 | 65.74 |

| YOLOv8s | OpenVINO | ✅ | 42.9 | 0.5966 | 38.66 |

| YOLOv8m | PyTorch | ✅ | 49.7 | 0.6101 | 355.23 |

| YOLOv8m | TorchScript | ✅ | 99.2 | 0.6120 | 424.78 |

| YOLOv8m | ONNX | ✅ | 99.0 | 0.6120 | 173.39 |

| YOLOv8m | OpenVINO | ✅ | 99.1 | 0.6091 | 69.80 |

| YOLOv8l | PyTorch | ✅ | 83.7 | 0.6591 | 593.00 |

| YOLOv8l | TorchScript | ✅ | 167.2 | 0.6580 | 697.54 |

| YOLOv8l | ONNX | ✅ | 166.8 | 0.6580 | 342.15 |

| YOLOv8l | OpenVINO | ✅ | 167.0 | 0.0708 | 117.69 |

| YOLOv8x | PyTorch | ✅ | 130.5 | 0.6651 | 804.65 |

| YOLOv8x | TorchScript | ✅ | 260.8 | 0.6650 | 921.46 |

| YOLOv8x | ONNX | ✅ | 260.4 | 0.6650 | 526.66 |

| YOLOv8x | OpenVINO | ✅ | 260.6 | 0.6619 | 158.73 |

Reproduce Our Results

To reproduce the Ultralytics benchmarks above on all export formats run this code:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a YOLOv8n PyTorch model

model = YOLO("yolov8n.pt")

# Benchmark YOLOv8n speed and accuracy on the COCO8 dataset for all export formats

results = model.benchmarks(data="coco8.yaml")

```

=== "CLI"

```py

# Benchmark YOLOv8n speed and accuracy on the COCO8 dataset for all export formats

yolo benchmark model=yolov8n.pt data=coco8.yaml

```

Note that benchmarking results might vary based on the exact hardware and software configuration of a system, as well as the current workload of the system at the time the benchmarks are run. For the most reliable results use a dataset with a large number of images, i.e. `data='coco128.yaml' (128 val images), or `data='coco.yaml'` (5000 val images).

Conclusion

The benchmarking results clearly demonstrate the benefits of exporting the YOLOv8 model to the OpenVINO format. Across different models and hardware platforms, the OpenVINO format consistently outperforms other formats in terms of inference speed while maintaining comparable accuracy.

For the Intel® Data Center GPU Flex Series, the OpenVINO format was able to deliver inference speeds almost 10 times faster than the original PyTorch format. On the Xeon CPU, the OpenVINO format was twice as fast as the PyTorch format. The accuracy of the models remained nearly identical across the different formats.

The benchmarks underline the effectiveness of OpenVINO as a tool for deploying deep learning models. By converting models to the OpenVINO format, developers can achieve significant performance improvements, making it easier to deploy these models in real-world applications.

For more detailed information and instructions on using OpenVINO, refer to the official OpenVINO documentation.

FAQ

How do I export YOLOv8 models to OpenVINO format?

Exporting YOLOv8 models to the OpenVINO format can significantly enhance CPU speed and enable GPU and NPU accelerations on Intel hardware. To export, you can use either Python or CLI as shown below:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a YOLOv8n PyTorch model

model = YOLO("yolov8n.pt")

# Export the model

model.export(format="openvino") # creates 'yolov8n_openvino_model/'

```

=== "CLI"

```py

# Export a YOLOv8n PyTorch model to OpenVINO format

yolo export model=yolov8n.pt format=openvino # creates 'yolov8n_openvino_model/'

```

For more information, refer to the export formats documentation.

What are the benefits of using OpenVINO with YOLOv8 models?

Using Intel's OpenVINO toolkit with YOLOv8 models offers several benefits:

- Performance: Achieve up to 3x speedup on CPU inference and leverage Intel GPUs and NPUs for acceleration.

- Model Optimizer: Convert, optimize, and execute models from popular frameworks like PyTorch, TensorFlow, and ONNX.

- Ease of Use: Over 80 tutorial notebooks are available to help users get started, including ones for YOLOv8.

- Heterogeneous Execution: Deploy models on various Intel hardware with a unified API.

For detailed performance comparisons, visit our benchmarks section.

How can I run inference using a YOLOv8 model exported to OpenVINO?

After exporting a YOLOv8 model to OpenVINO format, you can run inference using Python or CLI:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load the exported OpenVINO model

ov_model = YOLO("yolov8n_openvino_model/")

# Run inference

results = ov_model("https://ultralytics.com/images/bus.jpg")

```

=== "CLI"

```py

# Run inference with the exported model

yolo predict model=yolov8n_openvino_model source='https://ultralytics.com/images/bus.jpg'

```

Refer to our predict mode documentation for more details.

Why should I choose Ultralytics YOLOv8 over other models for OpenVINO export?

Ultralytics YOLOv8 is optimized for real-time object detection with high accuracy and speed. Specifically, when combined with OpenVINO, YOLOv8 provides:

- Up to 3x speedup on Intel CPUs

- Seamless deployment on Intel GPUs and NPUs

- Consistent and comparable accuracy across various export formats

For in-depth performance analysis, check our detailed YOLOv8 benchmarks on different hardware.

Can I benchmark YOLOv8 models on different formats such as PyTorch, ONNX, and OpenVINO?

Yes, you can benchmark YOLOv8 models in various formats including PyTorch, TorchScript, ONNX, and OpenVINO. Use the following code snippet to run benchmarks on your chosen dataset:

!!! Example

=== "Python"

```py

from ultralytics import YOLO

# Load a YOLOv8n PyTorch model

model = YOLO("yolov8n.pt")

# Benchmark YOLOv8n speed and accuracy on the COCO8 dataset for all export formats

results = model.benchmarks(data="coco8.yaml")

```

=== "CLI"

```py

# Benchmark YOLOv8n speed and accuracy on the COCO8 dataset for all export formats

yolo benchmark model=yolov8n.pt data=coco8.yaml

```

For detailed benchmark results, refer to our benchmarks section and export formats documentation.

comments: true

description: Learn how to export YOLOv8 models to PaddlePaddle format for enhanced performance, flexibility, and deployment across various platforms and devices.

keywords: YOLOv8, PaddlePaddle, export models, computer vision, deep learning, model deployment, performance optimization

How to Export to PaddlePaddle Format from YOLOv8 Models

Bridging the gap between developing and deploying computer vision models in real-world scenarios with varying conditions can be difficult. PaddlePaddle makes this process easier with its focus on flexibility, performance, and its capability for parallel processing in distributed environments. This means you can use your YOLOv8 computer vision models on a wide variety of devices and platforms, from smartphones to cloud-based servers.

The ability to export to PaddlePaddle model format allows you to optimize your Ultralytics YOLOv8 models for use within the PaddlePaddle framework. PaddlePaddle is known for facilitating industrial deployments and is a good choice for deploying computer vision applications in real-world settings across various domains.

Why should you export to PaddlePaddle?

Developed by Baidu, PaddlePaddle (PArallel Distributed Deep LEarning) is China's first open-source deep learning platform. Unlike some frameworks built mainly for research, PaddlePaddle prioritizes ease of use and smooth integration across industries.

It offers tools and resources similar to popular frameworks like TensorFlow and PyTorch, making it accessible for developers of all experience levels. From farming and factories to service businesses, PaddlePaddle's large developer community of over 4.77 million is helping create and deploy AI applications.

By exporting your Ultralytics YOLOv8 models to PaddlePaddle format, you can tap into PaddlePaddle's strengths in performance optimization. PaddlePaddle prioritizes efficient model execution and reduced memory usage. As a result, your YOLOv8 models can potentially achieve even better performance, delivering top-notch results in practical scenarios.

Key Features of PaddlePaddle Models

PaddlePaddle models offer a range of key features that contribute to their flexibility, performance, and scalability across diverse deployment scenarios:

-

Dynamic-to-Static Graph: PaddlePaddle supports dynamic-to-static compilation, where models can be translated into a static computational graph. This enables optimizations that reduce runtime overhead and boost inference performance.

-

Operator Fusion: PaddlePaddle, like TensorRT, uses operator fusion to streamline computation and reduce overhead. The framework minimizes memory transfers and computational steps by merging compatible operations, resulting in faster inference.

-

Quantization: PaddlePaddle supports quantization techniques, including post-training quantization and quantization-aware training. These techniques allow for the use of lower-precision data representations, effectively boosting performance and reducing model size.

Deployment Options in PaddlePaddle

Before diving into the code for exporting YOLOv8 models to PaddlePaddle, let's take a look at the different deployment scenarios in which PaddlePaddle models excel.

PaddlePaddle provides a range of options, each offering a distinct balance of ease of use, flexibility, and performance:

-

Paddle Serving: This framework simplifies the deployment of PaddlePaddle models as high-performance RESTful APIs. Paddle Serving is ideal for production environments, providing features like model versioning, online A/B testing, and scalability for handling large volumes of requests.

-

Paddle Inference API: The Paddle Inference API gives you low-level control over model execution. This option is well-suited for scenarios where you need to integrate the model tightly within a custom application or optimize performance for specific hardware.

-

Paddle Lite: Paddle Lite is designed for deployment on mobile and embedded devices where resources are limited. It optimizes models for smaller sizes and faster inference on ARM CPUs, GPUs, and other specialized hardware.

-

Paddle.js: Paddle.js enables you to deploy PaddlePaddle models directly within web browsers. Paddle.js can either load a pre-trained model or transform a model from paddle-hub with model transforming tools provided by Paddle.js. It can run in browsers that support WebGL/WebGPU/WebAssembly.

Export to PaddlePaddle: Converting Your YOLOv8 Model

Converting YOLOv8 models to the PaddlePaddle format can improve execution flexibility and optimize performance for various deployment scenarios.

Installation

To install the required package, run:

!!! Tip "Installation"

=== "CLI"

```py

# Install the required package for YOLOv8

pip install ultralytics

```

For detailed instructions and best practices related to the installation process, check our Ultralytics Installation guide. While installing the required packages for YOLOv8, if you encounter any difficulties, consult our Common Issues guide for solutions and tips.

Usage

Before diving into the usage instructions, it's important to note that while all Ultralytics YOLOv8 models are available for exporting, you can ensure that the model you select supports export functionality here.

!!! Example "Usage"

=== "Python"

```py

from ultralytics import YOLO

# Load the YOLOv8 model

model = YOLO("yolov8n.pt")

# Export the model to PaddlePaddle format

model.export(format="paddle") # creates '/yolov8n_paddle_model'

# Load the exported PaddlePaddle model

paddle_model = YOLO("./yolov8n_paddle_model")

# Run inference

results = paddle_model("https://ultralytics.com/images/bus.jpg")

```

=== "CLI"

```py

# Export a YOLOv8n PyTorch model to PaddlePaddle format

yolo export model=yolov8n.pt format=paddle # creates '/yolov8n_paddle_model'

# Run inference with the exported model

yolo predict model='./yolov8n_paddle_model' source='https://ultralytics.com/images/bus.jpg'

```

For more details about supported export options, visit the Ultralytics documentation page on deployment options.

Deploying Exported YOLOv8 PaddlePaddle Models

After successfully exporting your Ultralytics YOLOv8 models to PaddlePaddle format, you can now deploy them. The primary and recommended first step for running a PaddlePaddle model is to use the YOLO("./model_paddle_model") method, as outlined in the previous usage code snippet.

However, for in-depth instructions on deploying your PaddlePaddle models in various other settings, take a look at the following resources:

-

Paddle Serving: Learn how to deploy your PaddlePaddle models as performant services using Paddle Serving.

-

Paddle Lite: Explore how to optimize and deploy models on mobile and embedded devices using Paddle Lite.

-

Paddle.js: Discover how to run PaddlePaddle models in web browsers for client-side AI using Paddle.js.

Summary

In this guide, we explored the process of exporting Ultralytics YOLOv8 models to the PaddlePaddle format. By following these steps, you can leverage PaddlePaddle's strengths in diverse deployment scenarios, optimizing your models for different hardware and software environments.

For further details on usage, visit the PaddlePaddle official documentation

Want to explore more ways to integrate your Ultralytics YOLOv8 models? Our integration guide page explores various options, equipping you with valuable resources and insights.

FAQ

How do I export Ultralytics YOLOv8 models to PaddlePaddle format?

Exporting Ultralytics YOLOv8 models to PaddlePaddle format is straightforward. You can use the export method of the YOLO class to perform this exportation. Here is an example using Python:

!!! Example "Usage"

=== "Python"

```py

from ultralytics import YOLO

# Load the YOLOv8 model

model = YOLO("yolov8n.pt")

# Export the model to PaddlePaddle format

model.export(format="paddle") # creates '/yolov8n_paddle_model'

# Load the exported PaddlePaddle model

paddle_model = YOLO("./yolov8n_paddle_model")

# Run inference

results = paddle_model("https://ultralytics.com/images/bus.jpg")

```

=== "CLI"

```py