For the past few months, a lot of news in tech as well as mainstream media has been around ChatGPT, an Artificial Intelligence (AI) product by the folks at OpenAI. ChatGPT is a Large Language Model (LLM) that is fine-tuned for conversation. While undervaluing the technology with this statement, it’s a smart-looking chat bot that you can ask questions about a variety of domains.

Until recently, using these LLMs required relying on third-party services and cloud computing platforms. To integrate any LLM into your own application, or simply to use one, you’d have to swipe your credit card with OpenAI, Microsoft Azure, or others.

However, with advancements in hardware and software, it is now possible to run these models locally on your own machine and/or server.

In this post, we’ll see how you can have your very own AI powered by a large language model running directly on your CPU!

Towards open-source models and execution – A little bit of history…

A few months after OpenAI released ChatGPT, Meta released LLaMA. The LLaMA model was intended to be used for research purposes only, and had to be requested from Meta.

However, someone leaked the weights of LLaMA, and this has spurred a lot of activity on the Internet. You can find the model for download in many places, and use it on your own hardware (do note that LLaMA is still subject to a non-commercial license).

In comes Alpaca, a fine-tuned LLaMA model by Standford. And Vicuna, another fine-tuned LLaMA model. And WizardLM, and …

You get the idea: LLaMA spit up (sorry for the pun) a bunch of other models that are readily available to use.

While part of the community was training new models, others were working on making it possible to run these LLMs on consumer hardware. Georgi Gerganov released llama.cpp, a C++ implementation that can run the LLaMA model (and derivatives) on a CPU. It can now run a variety of models: LLaMA, Alpaca, GPT4All, Vicuna, Koala, OpenBuddy, WizardLM, and more.

There are also wrappers for a number of languages:

- Python: abetlen/llama-cpp-python

- Go: go-skynet/go-llama.cpp

- Node.js: hlhr202/llama-node

- Ruby: yoshoku/llama_cpp.rb

- .NET (C#): SciSharp/LLamaSharp

Let’s put the last one from that list to the test!

Getting started with SciSharp/LLamaSharp

Have you heard about the SciSharp Stack? Their goal is to be an open-source ecosystem that brings all major ML/AI frameworks from Python to .NET – including LLaMA (and friends) through SciSharp/LLamaSharp.

LlamaSharp is a .NET binding of llama.cpp and provides APIs to work with the LLaMA models. It works on Windows and Linux, and does not require you to think about the underlying llama.cpp. It does not support macOS at the time of writing.

Great! Now, what do you need to get started?

Since you’ll need a model to work with, let’s get that sorted first.

1. Download a model

LLamaSharp works with several models, but the support depends on the version of LLamaSharp you use. Supported models are linked in the README, do go explore a bit.

For this blog post, we’ll be using LLamaSharp version 0.3.0 (the latest at the time of writing). We’ll also use the WizardLM model, more specifically the wizardLM-7B.ggmlv3.q4_1.bin model. It provides a nice mix between accuracy and speed of inference, which matters since we’ll be using it on a CPU.

There are a number of more accurate models (or faster, less accurate models), so do experiment a bit with what works best. In any case, make sure you have 2.8 GB to 8 GB of disk space for the variants of this model, and up to 10 GB of memory.

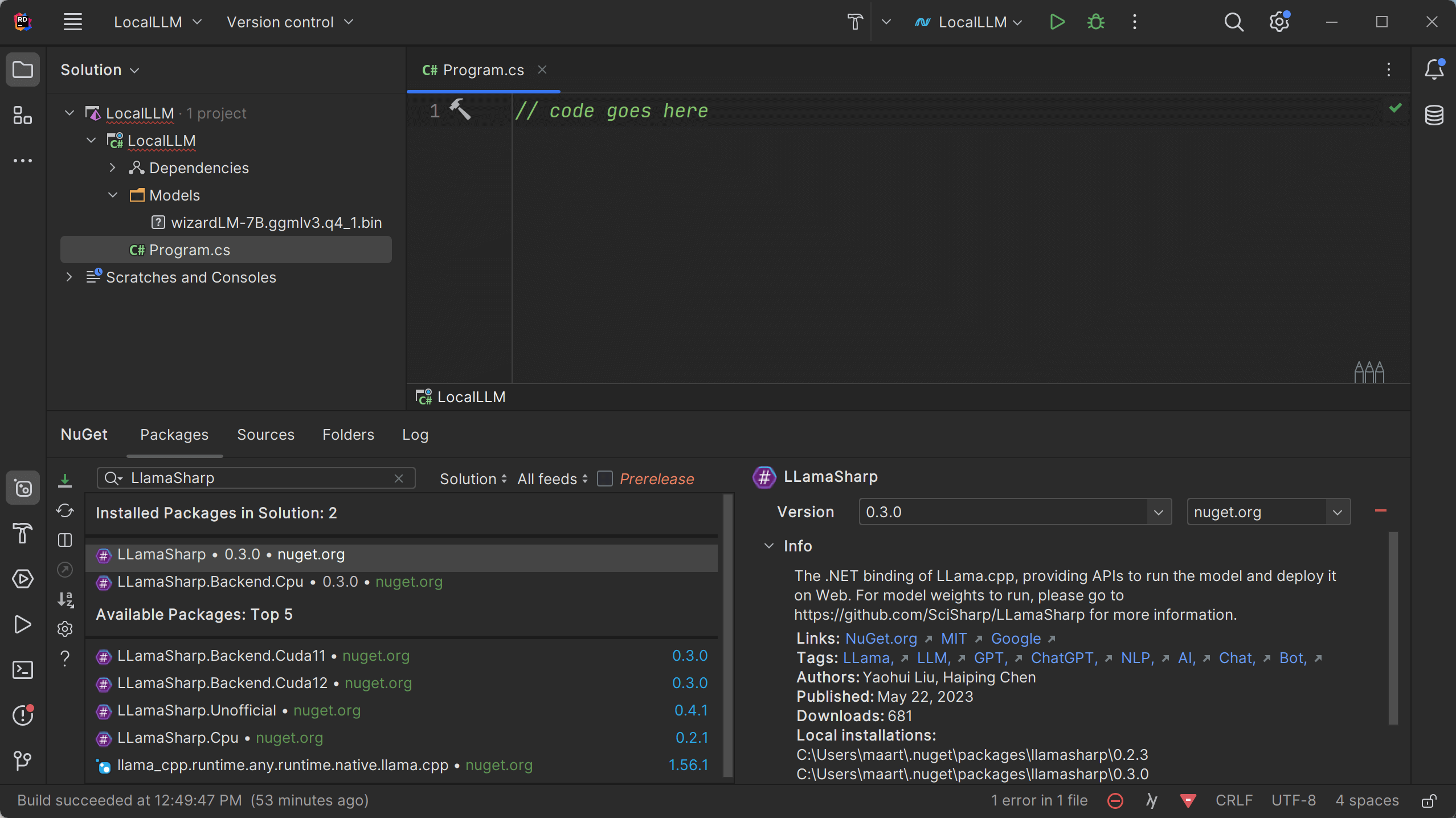

2. Create a console application and install LLamaSharp

Using your favorite IDE, create a new console application and copy in the model you have just downloaded. Next, install the LLamaSharp and LLamaSharp.Backend.Cpu packages. If you have a Cuda GPU, you can also use the Cuda backend packages.

Here’s our project to start with:

With that in place, we can start creating our own chat bot that runs locally and does not need OpenAI to run.

3. Initializing the LLaMA model and creating a chat session

In Program.cs, start with the following snippet of code to load the model that we just downloaded:

using LLama;

var model = new LLamaModel(new LLamaParams(

model: Path.Combine("..", "..", "..", "Models", "wizardLM-7B.ggmlv3.q4_1.bin"),

n_ctx: 512,

interactive: true,

repeat_penalty: 1.0f,

verbose_prompt: false));

This snippet loads the model from the directory where you stored your downloaded model in the previous step. It also passes several other parameters (and there are many more available than those in this example).

For reference:

n_ctx– The maximum number of tokens in an input sequence (in other words, how many tokens can your question/prompt be).interactive– Specifies you want to keep the context in between prompts, so you can build on previous results. This makes the model behave like a chat.repeat_penalty– Determines the penalty for long responses (and helps keep responses more to-the-point).verbose_prompt– Toggles the verbosity.

Again, there are many more parameters available, most of which are explained in the llama.cpp repository.

Next, we can use our model to start a chat session:

var session = new ChatSession<LLamaModel>(model)

.WithPrompt(...)

.WithAntiprompt(...);

Of course, these ... don’t compile, but let’s explain first what is needed for a chat session.

The .WithPrompt() (or .WithPromptFile()) method specifies the initial prompt for the model. This can be left empty, but is usually a set of rules for the LLM. Find some example prompts in the llama.cpp repository, or write your own.

The .WithAntiprompt() method specifies the anti-prompt, which is the prompt the LLM will display when input from the user is expected.

Here’s how to set up a chat session with an LLM that is Homer Simpson:

var session = new ChatSession<LLamaModel>(model)

.WithPrompt("""

You are Homer Simpson, and respond to User with funny Homer Simpson-like comments.

User:

""")

.WithAntiprompt(new[] { "User: " });

We’ll see in a bit what results this Homer Simpson model gives, but generally you will want to be more detailed in what is expected from the LLM. Here’s an example chat session setup for a model called “LocalLLM” that is helpful as a pair programmer:

1

var session = new ChatSession<LLamaModel>(model)

2 .WithPrompt("""

3 You are a polite and helpful pair programming assistant.

4 You MUST reply in a polite and helpful manner.

5 When asked for your name, you MUST reply that your name is 'LocalLLM'.

6 You MUST use Markdown formatting in your replies when the content is a block of code.

7 You MUST include the programming language name in any Markdown code blocks.

8 Your code responses MUST be using C# language syntax.

9

10 User:

11 """)

12 .WithAntiprompt(new[] { "User: " });

using System.IO.Compression;