Single-machine deployment

Note: this article is based on etcd v3.5.5.

Single-instance etcd

ETCD_VER=v3.5.5

# choose either URL

GOOGLE_URL=https://storage.googleapis.com/etcd

GITHUB_URL=https://github.com/etcd-io/etcd/releases/download

DOWNLOAD_URL=${GOOGLE_URL}

rm -f /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz

rm -rf /tmp/etcd-download-test && mkdir -p /tmp/etcd-download-test

curl -L ${DOWNLOAD_URL}/${ETCD_VER}/etcd-${ETCD_VER}-linux-amd64.tar.gz -o /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz

tar xzvf /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz -C /tmp/etcd-download-test --strip-components=1

rm -f /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz

cp /tmp/etcd-download-test/etcd /usr/bin/

cp /tmp/etcd-download-test/etcdctl /usr/bin/

# Appending & runs etcd in the background

etcd &

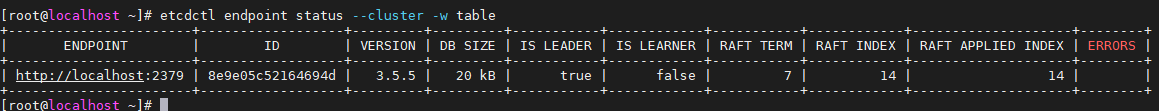

Check the etcd status:

etcdctl endpoint status --cluster -w table

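Deploying etcd with systemd

Starting etcd directly from the command line makes it awkward to operate and maintain. Installing it as a system service managed by systemd gives you process supervision (for example, restart on failure) and start on boot.

Prepare the configuration directory and the data directory: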

mkdir -p /etc/etcd

mkdir -p /data/etcd

Create the systemd unit file:

vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Create the configuration file:

vi /etc/etcd/etcd.conf

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/data/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.40.128:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.40.128:2379,http://127.0.0.1:2379"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.40.128:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.40.128:2379"

ETCD_INITIAL_CLUSTER="etcd01=http://192.168.40.128:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
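
The configuration file above repeats the same IP address in several places, so it can be convenient to render it from a single variable. The following is a minimal sketch, not part of the original procedure: `HOST_IP` and `CONF_FILE` are illustrative names, and listening on 127.0.0.1 as well is an assumption made so that a local etcdctl works without `--endpoints`.

```shell
#!/usr/bin/env bash
# Sketch: render etcd.conf from one variable instead of editing each URL by hand.
# HOST_IP and CONF_FILE are hypothetical names chosen for this example.
HOST_IP="${HOST_IP:-192.168.40.128}"    # this machine's IP
CONF_FILE="${CONF_FILE:-./etcd.conf}"   # copy to /etc/etcd/etcd.conf once reviewed

# Unquoted heredoc delimiter: ${HOST_IP} is expanded, the double quotes stay literal.
cat > "$CONF_FILE" <<EOF
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/data/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://${HOST_IP}:2380"
ETCD_LISTEN_CLIENT_URLS="http://${HOST_IP}:2379,http://127.0.0.1:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${HOST_IP}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://${HOST_IP}:2379"
ETCD_INITIAL_CLUSTER="etcd01=http://${HOST_IP}:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

echo "wrote ${CONF_FILE}"
```

Run it with, for example, `HOST_IP=10.0.0.5 bash render-conf.sh` (the script name is arbitrary), review the result, and then copy it to /etc/etcd/etcd.conf.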

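Reload the systemd configuration so that the new etcd.service unit is picked up (the settings in /etc/etcd/etcd.conf are read when the service starts):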

systemctl daemon-reload

Start the service and enable it at boot:

systemctl start etcd

systemctl enable etcd

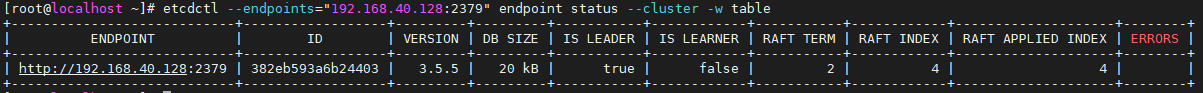

Check the cluster status:

etcdctl --endpoints="192.168.40.128:2379" endpoint status --cluster -w table

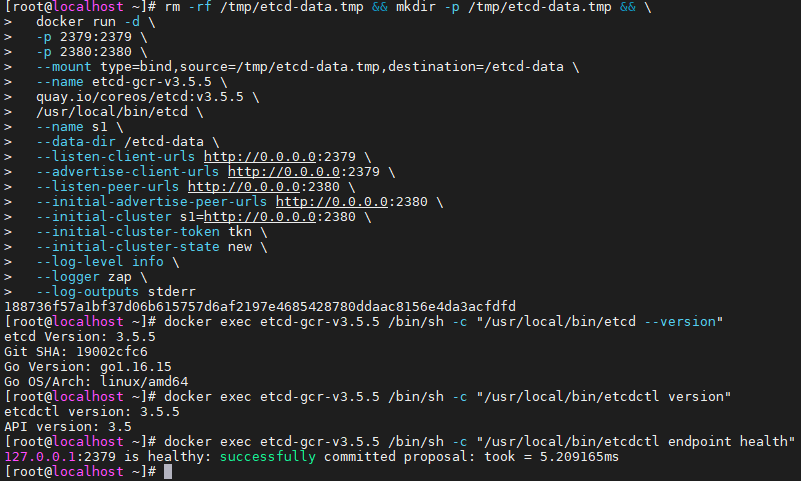
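
Right after `systemctl start etcd` the member may need a moment before it answers requests. As a hedged sketch, the helper below polls `etcdctl endpoint health` until it succeeds or a timeout expires; `wait_for_etcd` is a hypothetical name introduced here, not something shipped with etcd.

```shell
#!/usr/bin/env bash
# Sketch: block until an etcd endpoint reports healthy, or give up after a timeout.
# wait_for_etcd is a hypothetical helper name used only in this example.
wait_for_etcd() {
  local endpoint="$1" timeout="${2:-30}" waited=0
  # "etcdctl endpoint health" exits non-zero while the endpoint is unreachable.
  until etcdctl --endpoints="$endpoint" endpoint health >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "etcd at ${endpoint} not healthy after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "etcd at ${endpoint} is healthy"
}

# Example call (commented out so that sourcing this file has no side effects):
# wait_for_etcd 192.168.40.128:2379 30
```

The timeout counts retries rather than wall-clock time, so a slow connection attempt can make the total wait longer than the number suggests.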

Deploying with Docker

rm -rf /tmp/etcd-data.tmp && mkdir -p /tmp/etcd-data.tmp && \

docker run -d \

-p 2379:2379 \

-p 2380:2380 \

--mount type=bind,source=/tmp/etcd-data.tmp,destination=/etcd-data \

--name etcd-gcr-v3.5.5 \

quay.io/coreos/etcd:v3.5.5 \

/usr/local/bin/etcd \

--name s1 \

--data-dir /etcd-data \

--listen-client-urls http://0.0.0.0:2379 \

--advertise-client-urls http://0.0.0.0:2379 \

--listen-peer-urls http://0.0.0.0:2380 \

--initial-advertise-peer-urls http://0.0.0.0:2380 \

--initial-cluster s1=http://0.0.0.0:2380 \

--initial-cluster-token tkn \

--initial-cluster-state new \

--log-level info \

--logger zap \

--log-outputs stderr

docker exec etcd-gcr-v3.5.5 /bin/sh -c "/usr/local/bin/etcd --version"

docker exec etcd-gcr-v3.5.5 /bin/sh -c "/usr/local/bin/etcdctl version"

docker exec etcd-gcr-v3.5.5 /bin/sh -c "/usr/local/bin/etcdctl endpoint health"

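Result:

High-availability deployment

Static deployment with TLS

Prepare three Linux machines with the following IPs:

172.16.8.112, 172.16.8.113, 172.16.8.114

On all three etcd machines, create the /etc/etcd directory to hold the etcd configuration:

mkdir -p /etc/etcd

Configuration file (remember to change the IPs on each machine; initial-cluster must list the addresses of every etcd member in the cluster):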

vi /etc/etcd/etcd.yaml

name: 'etcd3' # each machine can use its own hostname; must be unique within the cluster

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://172.16.8.114:2380' # this machine's IP plus port 2380, used for traffic between cluster members

listen-client-urls: 'https://172.16.8.114:2379,https://127.0.0.1:2379' # change to this machine's IP

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://172.16.8.114:2380' # this machine's IP

advertise-client-urls: 'https://172.16.8.114:2379' # this machine's IP

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'etcd1=https://172.16.8.112:2380,etcd2=https://172.16.8.113:2380,etcd3=https://172.16.8.114:2380' # lists every member of the cluster

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/etcd/etcd.pem'

  key-file: '/etc/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/etcd/ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/etcd/etcd.pem'

  key-file: '/etc/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/etcd/ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false
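
Only a handful of keys in this file differ between the three machines. As a rough sketch, the script below derives those keys, and the shared initial-cluster string, from one node list. The `NODES` array and the `node_overrides` helper are hypothetical names for this example, and the loopback client listener is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: print the node-specific etcd.yaml keys for each of the three machines.
# NODES and node_overrides are hypothetical names used only in this example.
NODES=("etcd1=172.16.8.112" "etcd2=172.16.8.113" "etcd3=172.16.8.114")

# Build the initial-cluster value, which is identical on every node.
initial_cluster=""
for entry in "${NODES[@]}"; do
  name="${entry%%=*}"   # text before the first '='
  ip="${entry#*=}"      # text after the first '='
  initial_cluster+="${initial_cluster:+,}${name}=https://${ip}:2380"
done

# Print the keys that must be adapted on a single node.
node_overrides() {
  local name="$1" ip="$2"
  cat <<EOF
name: '${name}'
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,https://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
initial-cluster: '${initial_cluster}'
EOF
}

for entry in "${NODES[@]}"; do
  echo "# --- ${entry%%=*} ---"
  node_overrides "${entry%%=*}" "${entry#*=}"
done
```

The remaining keys (timeouts, TLS file paths, logging) are the same on all nodes and can be copied unchanged.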

Register etcd as a systemd service on all three machines:

vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Service

Documentation=https://etcd.io/docs/v3.5/op-guide/clustering/

After=network.target

[Service]

Type=notify

ExecStart=/usr/bin/etcd --config-file=/etc/etcd/etcd.yaml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

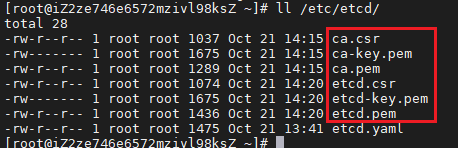

Generate the etcd certificates:

Download cfssl:

# Download the core tools

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl-certinfo_1.5.0_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64

# Grant execute permission

chmod +x cfssl*

# Strip the version and platform suffix from each file name

for name in cfssl*; do mv "$name" "${name%_1.5.0_linux_amd64}"; done

# Move the binaries into /usr/bin

mv cfssl* /usr/bin

etcd-ca-csr.json:

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "etcd"

}

],

"ca": {

"expiry": "87600h"

}

}

# Generate the etcd root CA certificate

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ca -

etcd-cluster-csr.json:

{

"CN": "etcd-cluster",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"172.16.8.112",

"172.16.8.113",

"172.16.8.114"

],

"names": [

{

"C": "CN",

"L": "beijing",

"O": "etcd",

"ST": "beijing",

"OU": "System"

}

]

}

Create ca-config.json:

vi /etc/ca-config.json

{

"signing": {

"default": {

"expiry": "43800h"

},

"profiles": {

"etcd": {

"expiry": "43800h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

Issue the etcd certificate:

cfssl gencert \

-ca=/etc/etcd/ca.pem \

-ca-key=/etc/etcd/ca-key.pem \

-config=/etc/ca-config.json \

-profile=etcd \

etcd-cluster-csr.json | cfssljson -bare /etc/etcd/etcd
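
Before distributing the certificate it is worth confirming that it covers every node IP listed under hosts in etcd-cluster-csr.json. The sketch below uses the cfssl-certinfo binary downloaded earlier, which to my understanding prints the certificate as JSON including its subject alternative names. `check_sans` is a hypothetical helper, and matching on the quoted IP string is a simplification.

```shell
#!/usr/bin/env bash
# Sketch: verify that a certificate lists every expected host or IP.
# check_sans is a hypothetical helper name used only in this example.
check_sans() {
  local cert="$1"; shift
  local info host missing=0
  # Dump the certificate as JSON; fail early if the file cannot be read.
  info="$(cfssl-certinfo -cert "$cert" 2>/dev/null)" || {
    echo "cannot read ${cert} with cfssl-certinfo" >&2
    return 2
  }
  for host in "$@"; do
    # -F treats the dots in the IP literally instead of as regex wildcards.
    if ! grep -qF "\"${host}\"" <<<"$info"; then
      echo "missing SAN: ${host}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example call (commented out so that sourcing this file has no side effects):
# check_sans /etc/etcd/etcd.pem 127.0.0.1 172.16.8.112 172.16.8.113 172.16.8.114
```

A return code of 0 means every listed address was found, 1 means at least one is missing, and 2 means the certificate could not be read.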

Copy the generated certificate files to the other machines (steps omitted).

# Reload systemd, then enable and start etcd
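
One possible way to do the copy is a small scp loop. This is only a sketch under several assumptions that the original does not state: the certificates were generated on 172.16.8.112, root SSH access to the other two machines is available, and /etc/etcd already exists there. The script prints the commands by default and only runs them when `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: copy the CA certificate and the etcd certificate/key to the other nodes.
# PEERS, CERT_FILES, DRY_RUN and copy_certs are hypothetical names for this example.
PEERS=(172.16.8.113 172.16.8.114)
# ca-key.pem is left out on purpose: etcd.yaml above does not reference it.
CERT_FILES=(/etc/etcd/ca.pem /etc/etcd/etcd.pem /etc/etcd/etcd-key.pem)
DRY_RUN="${DRY_RUN:-1}"

copy_certs() {
  local peer
  for peer in "${PEERS[@]}"; do
    if [ "$DRY_RUN" = "1" ]; then
      # Show what would be executed without touching the network.
      echo "scp ${CERT_FILES[*]} root@${peer}:/etc/etcd/"
    else
      scp "${CERT_FILES[@]}" "root@${peer}:/etc/etcd/"
    fi
  done
}

copy_certs
```

Keeping the CA private key on a single machine limits who can issue new certificates for the cluster.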

systemctl daemon-reload

systemctl enable --now etcd

# If startup fails, troubleshoot with journalctl -u <service name>

journalctl -u etcd

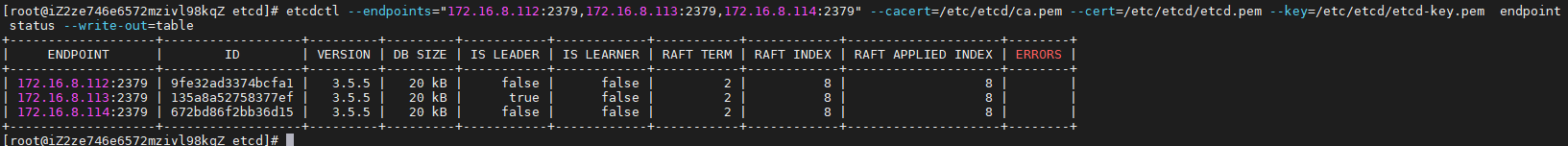

Check the etcd cluster status:

etcdctl --endpoints="172.16.8.112:2379,172.16.8.113:2379,172.16.8.114:2379" --cacert=/etc/etcd/ca.pem --cert=/etc/etcd/etcd.pem --key=/etc/etcd/etcd-key.pem endpoint status --write-out=table

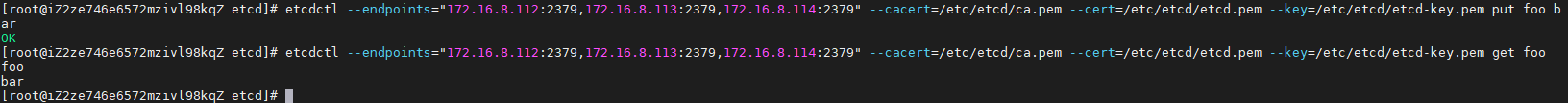

Test writing and reading data:

etcdctl --endpoints="172.16.8.112:2379,172.16.8.113:2379,172.16.8.114:2379" --cacert=/etc/etcd/ca.pem --cert=/etc/etcd/etcd.pem --key=/etc/etcd/etcd-key.pem put foo bar

etcdctl --endpoints="172.16.8.112:2379,172.16.8.113:2379,172.16.8.114:2379" --cacert=/etc/etcd/ca.pem --cert=/etc/etcd/etcd.pem --key=/etc/etcd/etcd-key.pem get foo

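The cluster is up and working.

Dynamic discovery deployment based on etcd

In many scenarios we cannot know the addresses of the cluster members in advance, for example when nodes are created dynamically through a cloud provider or when the network uses DHCP. The static deployment described above cannot be used in that case, and so-called service discovery is needed instead. Put simply, etcd discovery uses an existing etcd cluster to bootstrap a new etcd cluster.

Service discovery comes in two modes:

- etcd discovery

- DNS discovery

Only the first is covered below.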

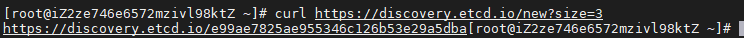

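The etcd project provides a publicly reachable, etcd-backed discovery endpoint at https://discovery.etcd.io. Use the following command to create a new discovery URL:

curl 'https://discovery.etcd.io/new?size=3'

The command returns a token in the form of a URL. The size parameter is the size of the cluster to create, that is, the number of member nodes.

Response: https://discovery.etcd.io/e99ae7825ae955346c126b53e29a5dba

Pass this token to etcd when the service starts.

Now start etcd:

# Run one command on each of the three machines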

etcd --name etcd1 --initial-advertise-peer-urls http://172.16.8.114:2380 \

--listen-peer-urls http://172.16.8.114:2380 \

--listen-client-urls http://172.16.8.114:2379,http://127.0.0.1:2379 \

--advertise-client-urls http://172.16.8.114:2379 \

--discovery https://discovery.etcd.io/e99ae7825ae955346c126b53e29a5dba

etcd --name etcd2 --initial-advertise-peer-urls http://172.16.8.113:2380 \

--listen-peer-urls http://172.16.8.113:2380 \

--listen-client-urls http://172.16.8.113:2379,http://127.0.0.1:2379 \

--advertise-client-urls http://172.16.8.113:2379 \

--discovery https://discovery.etcd.io/e99ae7825ae955346c126b53e29a5dba

etcd --name etcd3 --initial-advertise-peer-urls http://172.16.8.112:2380 \

--listen-peer-urls http://172.16.8.112:2380 \

--listen-client-urls http://172.16.8.112:2379,http://127.0.0.1:2379 \

--advertise-client-urls http://172.16.8.112:2379 \

--discovery https://discovery.etcd.io/e99ae7825ae955346c126b53e29a5dba

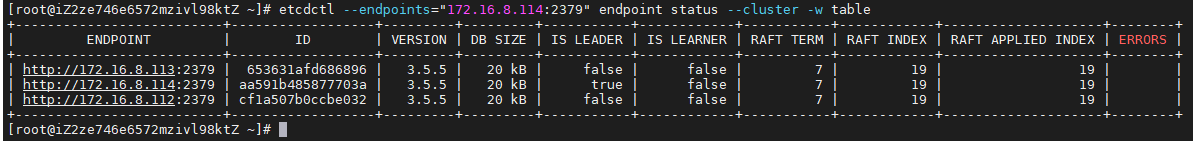
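
The three commands above differ only in the member name and the IP address. As an illustrative sketch, a small function can assemble the command line for any member. `etcd_discovery_cmd` and `DISCOVERY_URL` are hypothetical names, and the function only prints the command so it can be reviewed before running.

```shell
#!/usr/bin/env bash
# Sketch: build the etcd command line for one member of a discovery-based cluster.
# DISCOVERY_URL and etcd_discovery_cmd are hypothetical names for this example.
# A fresh token could be captured with:
#   DISCOVERY_URL="$(curl -fsS 'https://discovery.etcd.io/new?size=3')"
DISCOVERY_URL="${DISCOVERY_URL:-https://discovery.etcd.io/e99ae7825ae955346c126b53e29a5dba}"

etcd_discovery_cmd() {
  local name="$1" ip="$2"
  local cmd=(
    etcd --name "$name"
    --initial-advertise-peer-urls "http://${ip}:2380"
    --listen-peer-urls "http://${ip}:2380"
    --listen-client-urls "http://${ip}:2379,http://127.0.0.1:2379"
    --advertise-client-urls "http://${ip}:2379"
    --discovery "$DISCOVERY_URL"
  )
  # Print instead of executing, so the command can be inspected first.
  echo "${cmd[*]}"
}

# Same name-to-IP mapping as the commands above.
etcd_discovery_cmd etcd1 172.16.8.114
etcd_discovery_cmd etcd2 172.16.8.113
etcd_discovery_cmd etcd3 172.16.8.112
```

Every member must receive the same discovery URL; a URL created with size=3 is meant for exactly one three-member cluster.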

Once all three members have started, check the cluster status:

etcdctl --endpoints="172.16.8.114:2379" endpoint status --cluster -w table

Deploying an etcd cluster on Kubernetes

Use Helm to set up a cluster quickly

helm repo add bitnami https://charts.bitnami.com/bitnami

helm pull bitnami/etcd --version 8.5.7

# Unpack

tar zxf etcd-8.5.7.tgz

cd etcd

Edit the configuration file:

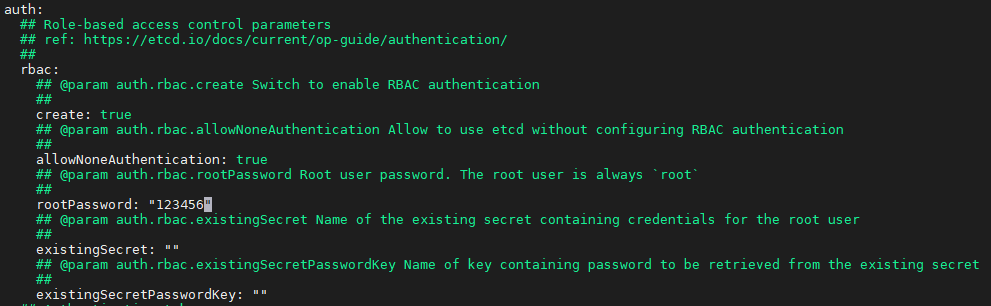

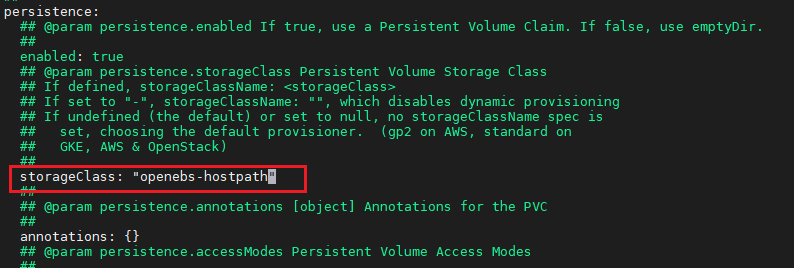

vi values.yaml

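Set the root password

Set the StorageClass (depends on your environment; alternatively, set enabled to false)

Set the replica count:

For the remaining options, see the documentation: etcd 8.5.7 · bitnami/bitnami (artifacthub.io)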

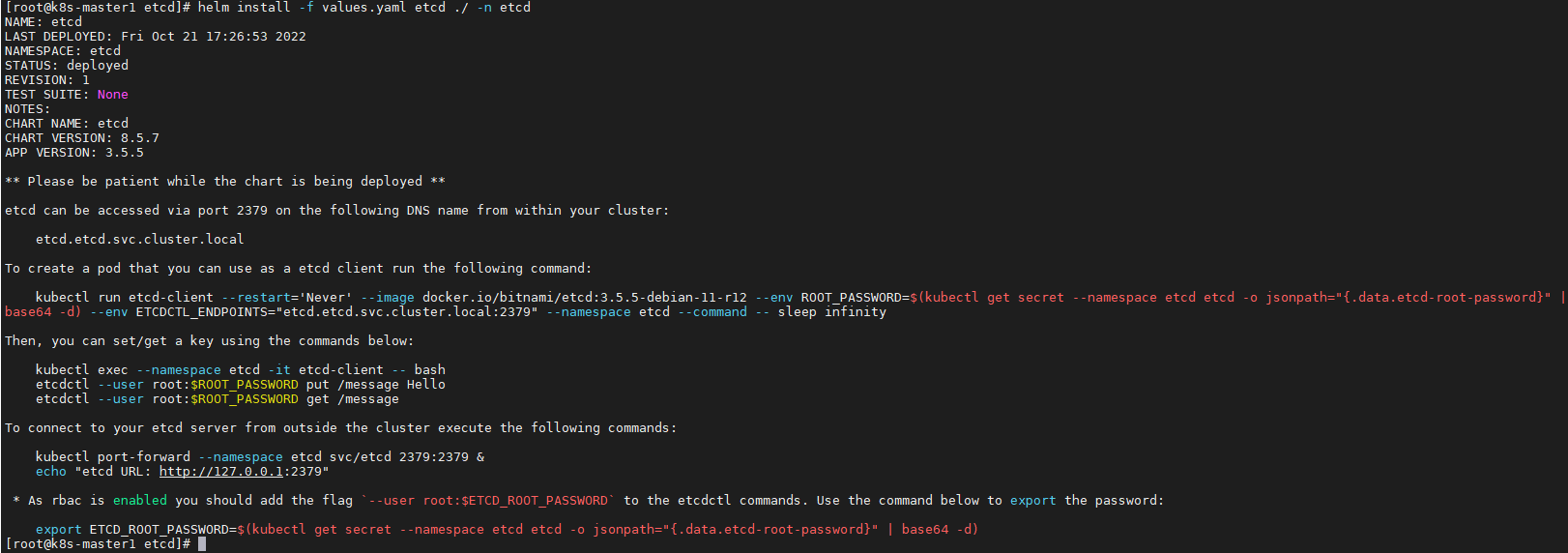
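
Instead of editing values.yaml in place, the three settings mentioned above can be kept in a small override file. The sketch below is an assumption-heavy illustration: the key names (`replicaCount`, `auth.rbac.rootPassword`, `persistence.enabled`, `persistence.storageClass`) are what I believe the bitnami/etcd chart uses and should be checked against the chart's own values.yaml. The password and StorageClass name are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: write a values override file for the bitnami/etcd chart.
# OVERRIDES is a hypothetical variable; the key names are assumptions to be
# verified against the values.yaml shipped with the chart version in use.
OVERRIDES="${OVERRIDES:-./etcd-overrides.yaml}"

# Quoted heredoc delimiter: the content is written literally, nothing is expanded.
cat > "$OVERRIDES" <<'EOF'
replicaCount: 3
auth:
  rbac:
    rootPassword: "change-me"
persistence:
  enabled: true
  storageClass: "my-storage-class"
EOF

echo "wrote ${OVERRIDES}"
# It can then be passed after the chart defaults, for example:
#   helm install -f values.yaml -f etcd-overrides.yaml etcd ./ -n etcd
```

When several `-f` files are given, Helm applies them in order, so values in the later file take precedence over the chart defaults.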

Start the service:

kubectl create ns etcd

helm install -f values.yaml etcd ./ -n etcd

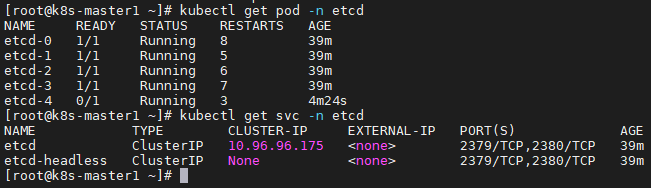

Check the pods and services: