Introduction to the CephFS File System

Ceph File System

- POSIX-compliant semantics

- Separates metadata from data (file data and metadata are stored in separate pools)

- Dynamic rebalancing (dynamic redistribution, self-healing)

- Subdirectory snapshots

- Configurable striping

- Kernel driver support (mounting through the kernel client)

- FUSE support (mounting in user space)

- NFS/CIFS deployable (can be shared out over NFS/CIFS)

- Use with Hadoop (can replace HDFS)

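CephFS Component Architecture

The Ceph File System has two main components.

Clients

A CephFS client performs I/O on behalf of applications that use CephFS, for example ceph-fuse for FUSE clients and kcephfs for kernel clients. The client sends metadata requests to the active Metadata Server. In return, it learns the file metadata and can safely begin caching both metadata and file data.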

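Metadata Server (MDS)

The MDS does the following:

- Provides metadata to CephFS clients.

- Manages metadata related to files stored in the Ceph File System.

- Coordinates access to the shared Red Hat Ceph Storage cluster.

- Caches hot metadata to reduce requests to the backing metadata pool store.

- Manages the CephFS clients' caches to maintain cache coherence.

- Replicates hot metadata between active MDS daemons.

- Coalesces metadata mutations into a compact journal and regularly flushes it to the backing metadata pool.

CephFS requires at least one Metadata Server daemon (ceph-mds) to run.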

The following diagram shows the component layers of the Ceph File System.

+-----------------------+ +------------------------+

| CephFS Kernel Object | | CephFS FUSE |

+-----------------------+ +------------------------+

+---------------------------------------------------+

| CephFS Library (libcephfs) |

+---------------------------------------------------+

+---------------------------------------------------+

| Ceph Storage Protocol (librados) |

+---------------------------------------------------+

+---------------+ +---------------+ +---------------+

| OSDs | | MDSs | | Monitors |

+---------------+ +---------------+ +---------------+

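The bottom layer represents the underlying core storage cluster components:

- Ceph OSDs (ceph-osd), where the Ceph File System data and metadata are stored.

- Ceph Metadata Servers (ceph-mds), which manage Ceph File System metadata.

- Ceph Monitors (ceph-mon), which manage the master copy of the cluster map.

The Ceph Storage Protocol layer represents the native librados library, which is used to interact with the core storage cluster.

The CephFS library layer includes the libcephfs library, which works on top of librados and represents the Ceph File System.

The top layer represents the two types of Ceph clients that can access the Ceph File System.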

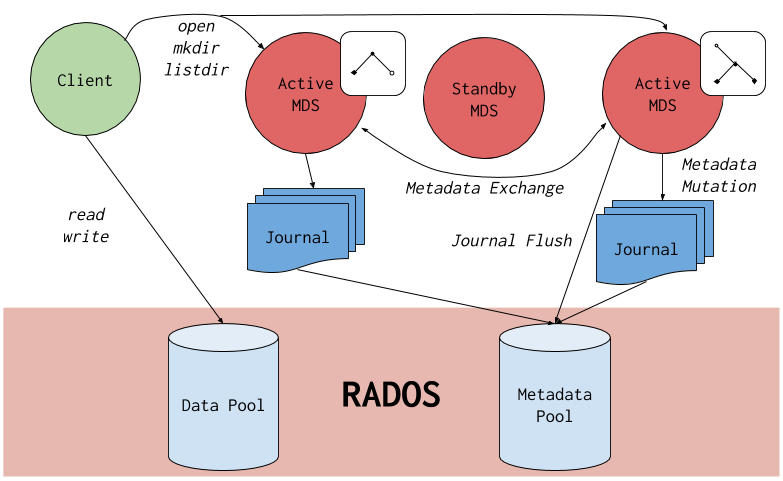

下图显示了 Ceph 文件系统组件如何相互交互的更多详细信息。

Installing and Deploying CephFS

Installing the MDS Cluster

# Check the current cluster status

[root@node0 ceph-deploy]# ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 5h)

mgr: node0(active, since 5h), standbys: node1, node2

osd: 3 osds: 3 up (since 5h), 3 in (since 7d)

rgw: 1 daemon active (node0)

task status:

data:

pools: 7 pools, 320 pgs

objects: 336 objects, 305 MiB

usage: 3.6 GiB used, 146 GiB / 150 GiB avail

pgs: 320 active+clean

# Add node0 as an MDS

[root@node0 ceph-deploy]# ceph-deploy mds create node0

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create node0

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fa0282bf6c8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mds at 0x7fa0282f9f50>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] mds : [('node0', 'node0')]

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts node0:node0

[node0][DEBUG ] connected to host: node0

[node0][DEBUG ] detect platform information from remote host

[node0][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mds][DEBUG ] remote host will use systemd

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to node0

[node0][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node0][WARNIN] mds keyring does not exist yet, creating one

[node0][DEBUG ] create a keyring file

[node0][DEBUG ] create path if it doesn't exist

[node0][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node0 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-node0/keyring

[node0][INFO ] Running command: systemctl enable ceph-mds@node0

[node0][WARNIN] Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node0.service to /usr/lib/systemd/system/ceph-mds@.service.

[node0][INFO ] Running command: systemctl start ceph-mds@node0

[node0][INFO ] Running command: systemctl enable ceph.target

# Check the cluster status again

[root@node0 ceph-deploy]# ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 5h)

mgr: node0(active, since 5h), standbys: node1, node2

mds: 1 up:standby # one MDS daemon is now present, in standby state

osd: 3 osds: 3 up (since 5h), 3 in (since 7d)

rgw: 1 daemon active (node0)

task status:

data:

pools: 7 pools, 320 pgs

objects: 336 objects, 305 MiB

usage: 3.6 GiB used, 146 GiB / 150 GiB avail

pgs: 320 active+clean

# Add node1 and node2 as MDS daemons

[root@node0 ceph-deploy]# ceph-deploy mds create node1 node2
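One way to confirm that a daemon deployed by ceph-deploy is actually running is to query its systemd unit, whose name (ceph-mds@node0) appears in the log above. The following is a minimal sketch meant to be run on the MDS host itself; the function names mds_unit_active and report_mds_unit are made up for illustration.

```shell
# Hypothetical helper: succeeds when the ceph-mds systemd unit for the
# given MDS id (the host name, as in the ceph-deploy log) is active.
mds_unit_active() {
    local mds_id="$1"
    systemctl is-active --quiet "ceph-mds@${mds_id}"
}

# Print a one-line status for an MDS id without aborting on failure.
report_mds_unit() {
    local mds_id="$1"
    if mds_unit_active "$mds_id"; then
        echo "ceph-mds@${mds_id}: active"
    else
        echo "ceph-mds@${mds_id}: not active"
    fi
}
```

For example, `report_mds_unit node0` on node0. Checking node1 and node2 this way would require running the function on those hosts.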

Viewing MDS Cluster Information

[root@node0 ceph-deploy]# ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 5h)

mgr: node0(active, since 5h), standbys: node1, node2

mds: 3 up:standby

osd: 3 osds: 3 up (since 5h), 3 in (since 7d)

rgw: 1 daemon active (node0)

task status:

data:

pools: 7 pools, 320 pgs

objects: 336 objects, 305 MiB

usage: 3.6 GiB used, 146 GiB / 150 GiB avail

pgs: 320 active+clean

[root@node0 ceph-deploy]# ceph mds stat

3 up:standby # no file system exists yet, so all three daemons are standby and none is active
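When scripting the deployment, it can be handy to read the standby count out of the one-line summary rather than by eye. A minimal sketch, assuming the summary keeps the "N up:standby" wording shown above; mds_standby_count is a hypothetical name.

```shell
# Hypothetical helper: print how many MDS daemons are in up:standby,
# based on the one-line summary printed by `ceph mds stat`.
mds_standby_count() {
    local summary count
    summary="$(ceph mds stat)"
    # Extract the number that precedes "up:standby"; empty if absent.
    count="$(printf '%s\n' "$summary" | grep -oE '[0-9]+ up:standby' | awk '{print $1}')"
    echo "${count:-0}"
}
```

Against the output above, `mds_standby_count` would print 3.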

Creating the CephFS File System

Creating a CephFS file system requires two pools:

- one to hold file data

- one to hold metadata

Creating the Pools

# Create the metadata pool

[root@node0 ceph-deploy]# ceph osd pool create cephfs_metadata 16 16

pool 'cephfs_metadata' created

# Create the data pool

[root@node0 ceph-deploy]# ceph osd pool create cephfs_data 16 16

pool 'cephfs_data' created

# List the pools

[root@node0 ceph-deploy]# ceph osd lspools

1 ceph-demo

2 .rgw.root

3 default.rgw.control

4 default.rgw.meta

5 default.rgw.log

6 default.rgw.buckets.index

7 default.rgw.buckets.data

8 cephfs_metadata

9 cephfs_data
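Re-running `ceph osd pool create` in a script is easier to reason about if the pool is created only when it is missing. A minimal sketch of that check, using `ceph osd pool ls` for the lookup; ensure_pool is a hypothetical helper name, and the single PG argument is reused for both pg_num and pgp_num as in the commands above.

```shell
# Hypothetical helper: create a pool only if it is not already listed.
# Arguments: pool name, placement-group count (used for pg_num and pgp_num).
ensure_pool() {
    local name="$1" pgs="$2"
    # -x matches the whole line, -F treats names such as ".rgw.root" literally.
    if ceph osd pool ls | grep -qxF -- "$name"; then
        echo "pool '$name' already exists"
    else
        ceph osd pool create "$name" "$pgs" "$pgs"
    fi
}
```

Usage would look like `ensure_pool cephfs_metadata 16` followed by `ensure_pool cephfs_data 16`.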

Creating the File System

# Show the relevant command help

[root@node0 ceph-deploy]# ceph -h | grep fs

# Create the CephFS file system

[root@node0 ceph-deploy]# ceph fs new cephfs-demo cephfs_metadata cephfs_data

new fs with metadata pool 8 and data pool 9

# List the file system that was created

[root@node0 ceph-deploy]# ceph fs ls

name: cephfs-demo, metadata pool: cephfs_metadata, data pools: [cephfs_data ]
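The `ceph fs ls` line above can also serve as a guard before calling `ceph fs new` a second time. A minimal sketch, assuming the "name: <fs>," prefix shown in the output; fs_exists is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds if `ceph fs ls` reports a file system
# with the given name.
fs_exists() {
    local fs_name="$1"
    # The trailing comma keeps "cephfs" from matching "cephfs-demo".
    ceph fs ls | grep -qF -- "name: ${fs_name},"
}
```

For example: `fs_exists cephfs-demo || ceph fs new cephfs-demo cephfs_metadata cephfs_data`.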

# Check the cluster status

[root@node0 ceph-deploy]# ceph -s

cluster:

id: 97702c43-6cc2-4ef8-bdb5-855cfa90a260

health: HEALTH_OK

services:

mon: 3 daemons, quorum node0,node1,node2 (age 5h)

mgr: node0(active, since 5h), standbys: node1, node2

mds: cephfs-demo:1 {0=node2=up:active} 2 up:standby # once the file system exists, one daemon becomes active automatically

osd: 3 osds: 3 up (since 5h), 3 in (since 7d)

rgw: 1 daemon active (node0)

task status:

data:

pools: 9 pools, 352 pgs

objects: 358 objects, 305 MiB

usage: 3.6 GiB used, 146 GiB / 150 GiB avail

pgs: 352 active+clean
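The change in the data section, from 7 pools and 320 PGs to 9 pools and 352 PGs, is just the two new pools with 16 PGs each. A small worked check, with the numbers copied from the outputs above:

```shell
# Values copied from the `ceph -s` output before the pools were created.
pools_before=7
pgs_before=320

pools_after=$pools_before
pgs_after=$pgs_before
# One iteration per new pool: cephfs_metadata (16 PGs), cephfs_data (16 PGs).
for pgs in 16 16; do
    pools_after=$((pools_after + 1))
    pgs_after=$((pgs_after + pgs))
done

echo "expected: ${pools_after} pools, ${pgs_after} pgs"   # expected: 9 pools, 352 pgs
```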

CephFS Kernel Mount

Mounting with the kernel client

# Create the mount point

[root@node0 ceph-deploy]# mkdir /cephfs

# Find out which package provides mount.ceph

[root@node0 cephfs]# which mount.ceph

/usr/sbin/mount.ceph

[root@node0 ceph-deploy]# rpm -qf /usr/sbin/mount.ceph

ceph-common-14.2.22-0.el7.x86_64

# Command format: mount -t ceph {device-string}={path-to-mounted} {mount-point} -o {key-value-args} {other-args}

# Mount the CephFS file system on the host (this example passes the source as {mon-addr}:{port}:{path})

[root@node0 ceph-deploy]# mount.ceph 192.168.100.130:6789:/ /cephfs -o name=admin

# Check that the mount succeeded

[root@node0 ceph-deploy]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 898M 0 898M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 29M 881M 4% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 2.4G 35G 7% /

/dev/sda1 1014M 151M 864M 15% /boot

tmpfs 910M 52K 910M 1% /var/lib/ceph/osd/ceph-0

/dev/rbd0 20G 44M 19G 1% /mnt

tmpfs 182M 0 182M 0% /run/user/0

192.168.100.130:6789:/ 47G 0 47G 0% /cephfs # mounted; the reported size depends on the capacity of the Ceph cluster
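The mount above names a single monitor. The kernel client also accepts a comma-separated list of monitors before the path, which avoids depending on one monitor being reachable at mount time. A minimal sketch that builds such a source string; cephfs_mount_source is a hypothetical helper, and the extra monitor addresses are the ones used with ceph-fuse elsewhere in this article.

```shell
# Hypothetical helper: join monitor addresses into a kernel-client mount
# source such as "a:6789,b:6789:/".
# Arguments: path inside CephFS, then one or more monitor addresses.
cephfs_mount_source() {
    local path="$1"
    shift
    # With IFS set to a comma, "$*" joins the remaining arguments with commas.
    local IFS=,
    echo "$*:${path}"
}
```

A possible use: `mount -t ceph "$(cephfs_mount_source / 192.168.100.130:6789 192.168.100.131:6789 192.168.100.132:6789)" /cephfs -o name=admin`.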

Checking Ceph storage usage

[root@node0 ceph-deploy]# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 150 GiB 146 GiB 633 MiB 3.6 GiB 2.41

TOTAL 150 GiB 146 GiB 633 MiB 3.6 GiB 2.41

POOLS:

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

ceph-demo 1 128 300 MiB 103 603 MiB 0.42 69 GiB

.rgw.root 2 32 1.2 KiB 4 768 KiB 0 46 GiB

default.rgw.control 3 32 0 B 8 0 B 0 46 GiB

default.rgw.meta 4 32 2.0 KiB 10 1.7 MiB 0 46 GiB

default.rgw.log 5 32 0 B 207 0 B 0 46 GiB

default.rgw.buckets.index 6 32 0 B 3 0 B 0 46 GiB

default.rgw.buckets.data 7 32 465 B 1 192 KiB 0 46 GiB

cephfs_metadata 8 16 2.6 KiB 22 1.5 MiB 0 46 GiB

cephfs_data 9 16 0 B 0 0 B 0 46 GiB
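The MAX AVAIL column can be sanity-checked with a rough rule of thumb: raw available space, scaled by the full ratio, divided by the replica count. The sketch below is only an approximation. The full ratio of 0.95 and the replica counts (3 for most pools, 2 for ceph-demo) are assumptions not shown in this article, and Ceph's own calculation also takes per-OSD fullness and CRUSH weights into account. The name estimate_max_avail is hypothetical.

```shell
# Rough estimate only: usable ~= raw available * full ratio / replica count.
# Arguments: raw available GiB, replica count, optional full ratio
# (defaults to 0.95, which is an ASSUMPTION, not a value from this article).
estimate_max_avail() {
    local raw_avail_gib="$1" replicas="$2" full_ratio="${3:-0.95}"
    awk -v a="$raw_avail_gib" -v r="$replicas" -v f="$full_ratio" \
        'BEGIN { printf "%.0f\n", a * f / r }'
}

estimate_max_avail 146 3   # prints 46, in line with the 46 GiB rows
estimate_max_avail 146 2   # prints 69, in line with the ceph-demo row
```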

File write test

[root@node0 ceph-deploy]# cd /cephfs/

[root@node0 cephfs]# echo evescn> test

[root@node0 cephfs]# cat test

evescn

The ceph kernel module is loaded automatically

[root@node0 cephfs]# lsmod | grep ceph

ceph 363016 1

libceph 306750 2 rbd,ceph

dns_resolver 13140 1 libceph

libcrc32c 12644 2 xfs,libceph
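The same check can be scripted by matching the first column of the lsmod output exactly, so that "ceph" does not also match "libceph". A minimal sketch; kernel_module_loaded is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds if lsmod lists a module whose name is
# exactly the given string.
kernel_module_loaded() {
    local module="$1"
    lsmod | awk -v m="$module" '$1 == m { found = 1 } END { exit !found }'
}
```

For example: `kernel_module_loaded ceph && echo "ceph module is loaded"`.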

Mounting CephFS in User Space with ceph-fuse

Installing the ceph-fuse client

[root@node0 cephfs]# cd /data/ceph-deploy/

[root@node0 ceph-deploy]# yum install --nogpgcheck -y ceph-fuse

Viewing the ceph-fuse command help

[root@node0 ceph-deploy]# ceph-fuse -h

usage: ceph-fuse [-n client.username] [-m mon-ip-addr:mon-port] <mount point> [OPTIONS]

--client_mountpoint/-r <sub_directory>

use sub_directory as the mounted root, rather than the full Ceph tree.

usage: ceph-fuse mountpoint [options]

general options:

-o opt,[opt...] mount options

-h --help print help

-V --version print version

FUSE options:

-d -o debug enable debug output (implies -f)

-f foreground operation

-s disable multi-threaded operation

--conf/-c FILE read configuration from the given configuration file

--id ID set ID portion of my name

--name/-n TYPE.ID set name

--cluster NAME set cluster name (default: ceph)

--setuser USER set uid to user or uid (and gid to user's gid)

--setgroup GROUP set gid to group or gid

--version show version and quit

Mounting the file system

[root@node0 ceph-deploy]# ceph-fuse -n client.admin -m 192.168.100.130:6789,192.168.100.131:6789,192.168.100.132:6789 /ceph-user/

ceph-fuse[48947]: starting ceph client2022-10-21 18:02:21.652 7f514cce8f80 -1 init, newargv = 0x559fad17ba10 newargc=9

ceph-fuse[48947]: starting fuse

Checking the mounts

[root@node0 ceph-deploy]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 898M 0 898M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 29M 881M 4% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/mapper/centos-root 37G 2.5G 35G 7% /

/dev/sda1 1014M 151M 864M 15% /boot

tmpfs 910M 52K 910M 1% /var/lib/ceph/osd/ceph-0

/dev/rbd0 20G 44M 19G 1% /mnt

tmpfs 182M 0 182M 0% /run/user/0

192.168.100.130:6789:/ 47G 0 47G 0% /cephfs

ceph-fuse 47G 0 47G 0% /ceph-user

[root@node0 ceph-deploy]# df -T

Filesystem Type 1K-blocks Used Available Use% Mounted on

devtmpfs devtmpfs 919528 0 919528 0% /dev

tmpfs tmpfs 931512 0 931512 0% /dev/shm

tmpfs tmpfs 931512 29596 901916 4% /run

tmpfs tmpfs 931512 0 931512 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 38770180 2553536 36216644 7% /

/dev/sda1 xfs 1038336 153608 884728 15% /boot

tmpfs tmpfs 931512 52 931460 1% /var/lib/ceph/osd/ceph-0

/dev/rbd0 ext4 20511312 45040 19485208 1% /mnt

tmpfs tmpfs 186304 0 186304 0% /run/user/0

192.168.100.130:6789:/ ceph 48513024 0 48513024 0% /cephfs

ceph-fuse fuse.ceph-fuse 48513024 0 48513024 0% /ceph-user

# The Type column shows fuse.ceph-fuse for the user-space mount
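The 1K-blocks figure in the `df -T` output can be converted to GiB to see how it relates to the other views of the same capacity. A small worked conversion; kib_to_gib is a hypothetical helper name.

```shell
# Hypothetical helper: convert a size in KiB (df's 1K-blocks) to GiB,
# printed with two decimal places.
kib_to_gib() {
    awk -v kib="$1" 'BEGIN { printf "%.2f\n", kib / 1024 / 1024 }'
}

kib_to_gib 48513024   # prints 46.27
```

That is about 46.27 GiB, consistent with the 46 GiB MAX AVAIL reported by `ceph df`; `df -h` shows 47G, most likely because it rounds up to the next whole unit.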

Verifying that the kernel mount and the user-space mount show the same files

[root@node0 ceph-deploy]# cd /ceph-user/

[root@node0 ceph-user]# ls

test

[root@node0 ceph-user]# cat test

evescn

[root@node0 ceph-user]# ls /cephfs/

test

[root@node0 ceph-user]# cp /etc/fstab ./

[root@node0 ceph-user]# ls

fstab test

[root@node0 ceph-user]# ls /cephfs/

fstab test
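The manual check above can be turned into a small script that writes a file through one mount point and compares it byte for byte through the other. A minimal sketch; verify_shared_view and the temporary file name are made up for illustration, and the check assumes both paths are writable by the current user.

```shell
# Hypothetical helper: write a file through one mount point and confirm the
# same bytes are readable through the other. Arguments: two mount points.
verify_shared_view() {
    local write_dir="$1" read_dir="$2"
    local name="sync-check.$$"
    local status=1
    echo "written via ${write_dir}" > "${write_dir}/${name}" || return 1
    # cmp -s is silent and fails if the files differ or one is missing.
    if cmp -s "${write_dir}/${name}" "${read_dir}/${name}"; then
        status=0
    fi
    rm -f "${write_dir}/${name}"
    return "$status"
}
```

For example: `verify_shared_view /ceph-user /cephfs && echo "both mounts see the same data"`.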

References

https://access.redhat.com/documentation/en-us/red_hat_ceph_storage/4/html-single/file_system_guide/index#deploying-ceph-file-systems