
http://bingerambo.com/posts/2021/05/kubeedge%E4%BB%8B%E7%BB%8D%E5%92%8C%E8%AE%BE%E8%AE%A1%E5%8E%9F%E7%90%86/#edgecore

KubeEdge: Introduction and Design Principles

Binge, filed under K8S, 2021-05-26

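KubeEdge architecture and components. KubeEdge provides a containerized edge computing platform that is inherently scalable. Thanks to its modular, optimized design it is lightweight (small footprint and low runtime memory) and can be deployed on low-resource devices. Edge nodes may also differ in hardware architecture and configuration. For device connectivity it supports multiple protocols and uses standard MQTT-based communication, which makes it easy to scale an edge cluster with new nodes and devices.

Introduction

KubeEdge is an open source system for extending native containerized application orchestration to hosts at the edge. It is built on Kubernetes and provides core infrastructure support for networking, application deployment, and metadata synchronization between cloud and edge. KubeEdge is licensed under Apache 2.0 and is free for personal or commercial use. Contributors are welcome!

The goal is an open platform for edge computing that extends native containerized application orchestration to edge hosts, built on Kubernetes, with infrastructure support for networking, application deployment, and metadata synchronization between cloud and edge.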

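Features

- Fully open - Edge Core and Cloud Core are both open source.

- Offline mode - the edge keeps running even when disconnected from the cloud.

- Kubernetes-based - node, cluster, application, and device management.

- Extensible - containerized, microservices.

- Resource optimized - runs where resources are scarce and makes efficient use of resources on the edge cloud.

- Cross-platform - platform agnostic; works in private, public, and hybrid clouds.

- Data and analytics - supports data management and a data analytics pipeline engine.

- Heterogeneous - supports x86 and ARM.

- Simplified development - SDK-based development for adding devices, deploying applications, and so on.

- Easy maintenance - upgrade, rollback, monitoring, alerting, and so on.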

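Advantages

- Edge computing: with business logic running at the edge, large volumes of data can be secured and processed locally, where they are produced. This reduces the bandwidth needed and consumed between edge and cloud, which improves responsiveness, lowers cost, and protects customers' data privacy.

- Simplified development: developers can write regular HTTP- or MQTT-based applications, containerize them, and run them at the edge or in the cloud, whichever is more appropriate.

- Kubernetes-native support: with KubeEdge, users can orchestrate applications on edge nodes, manage devices, and monitor application and device status, just as with a traditional Kubernetes cluster in the cloud.

- Abundant applications: existing complex machine learning, image recognition, event processing, and other high-level applications can easily be deployed to the edge.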

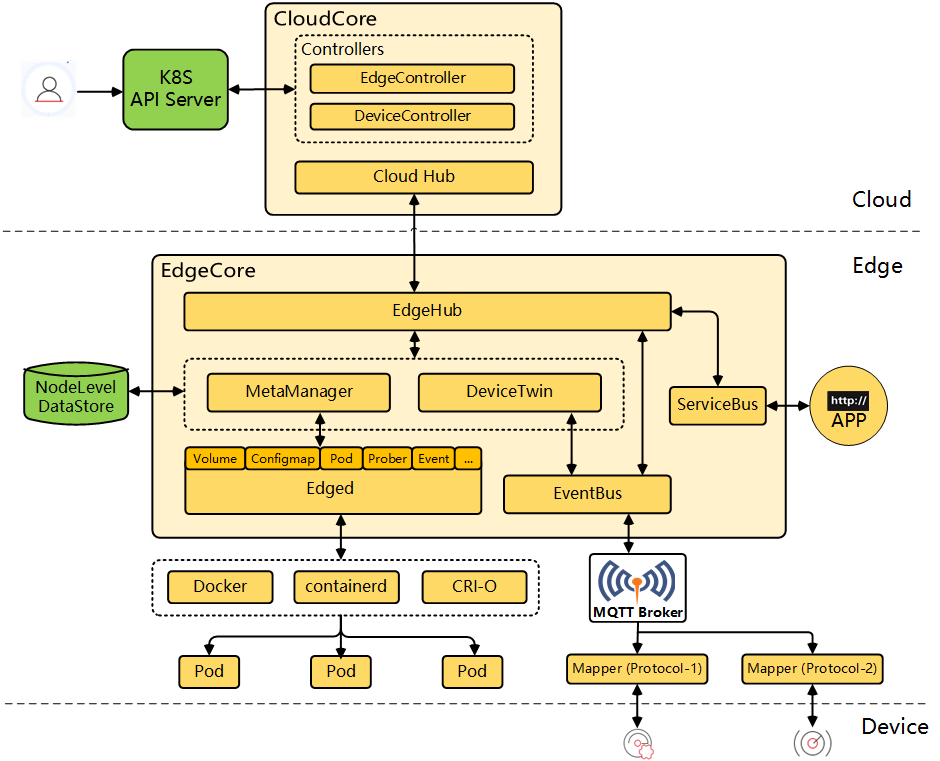

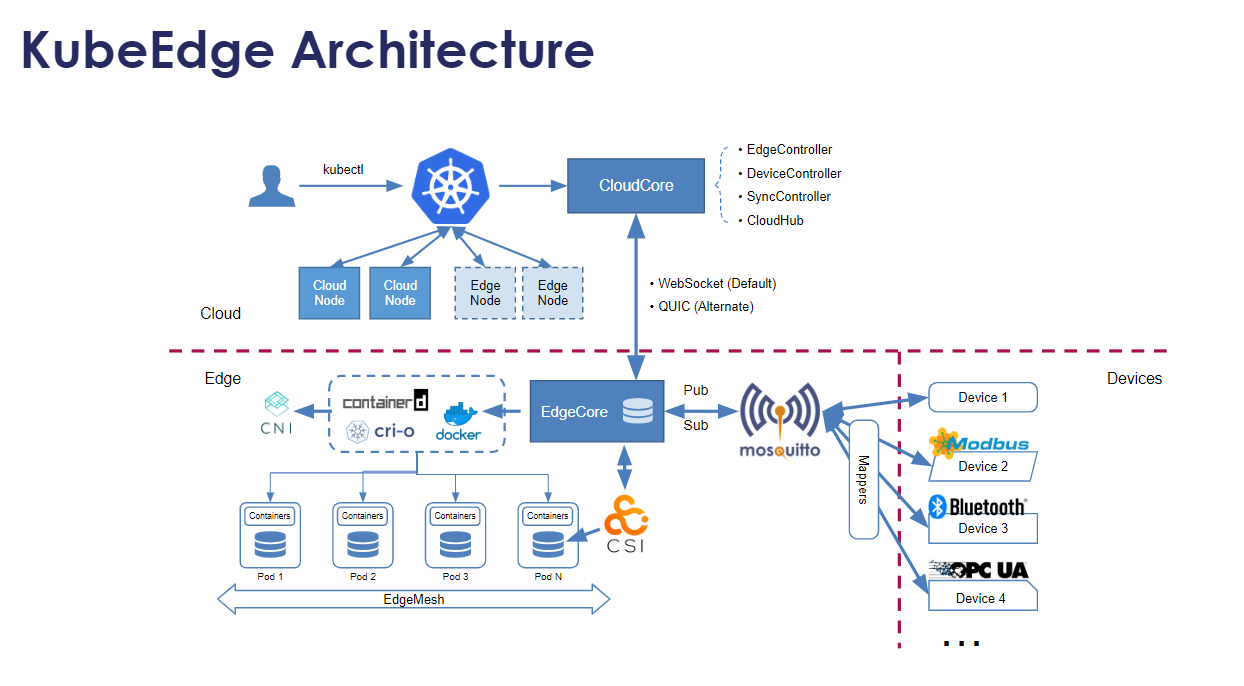

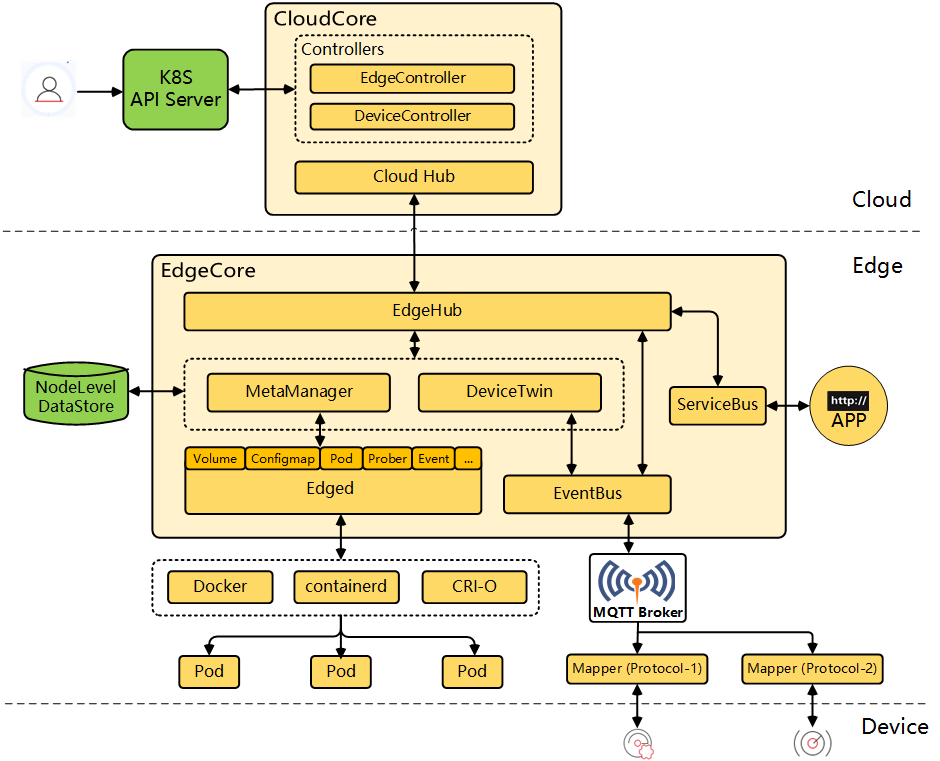

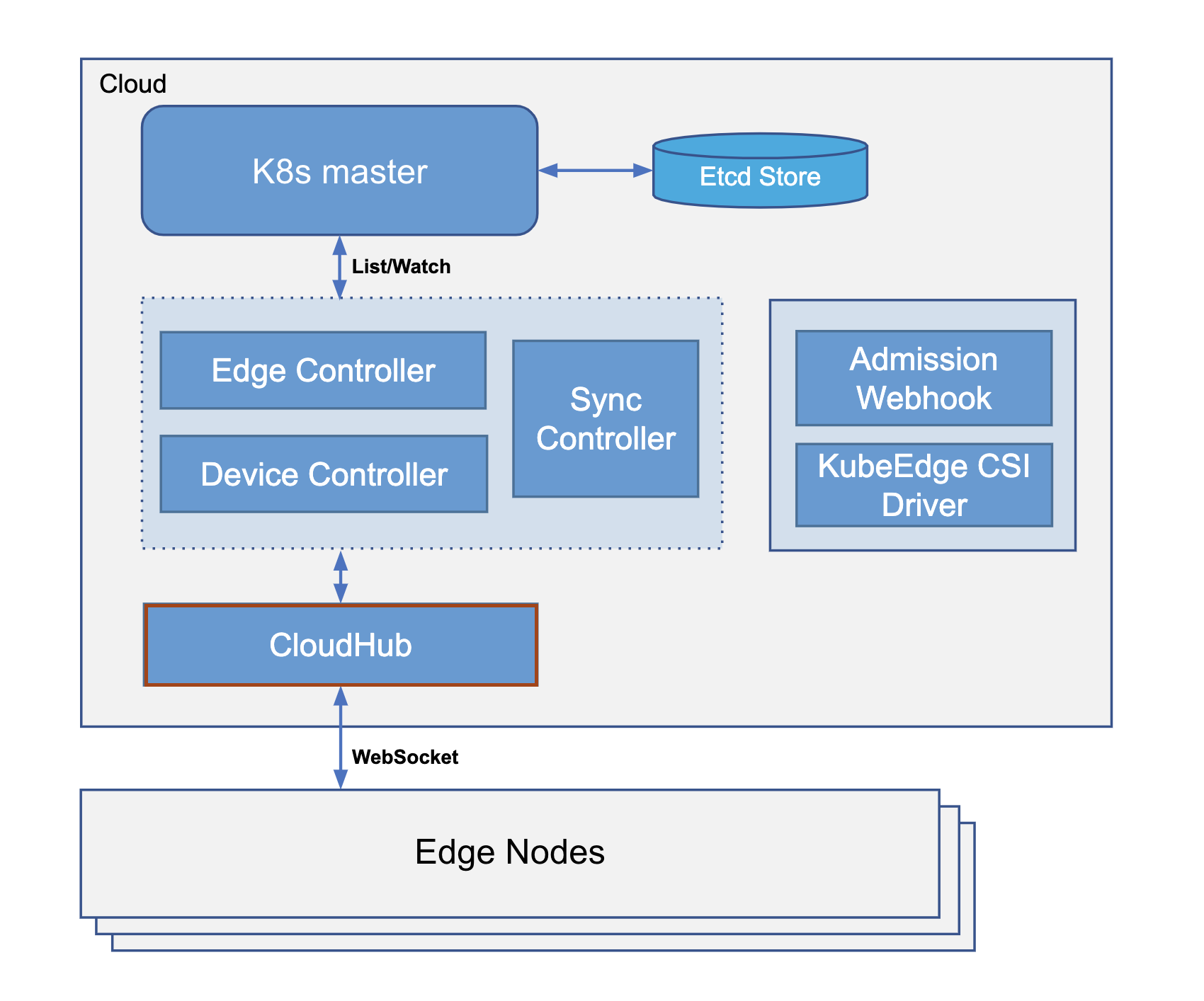

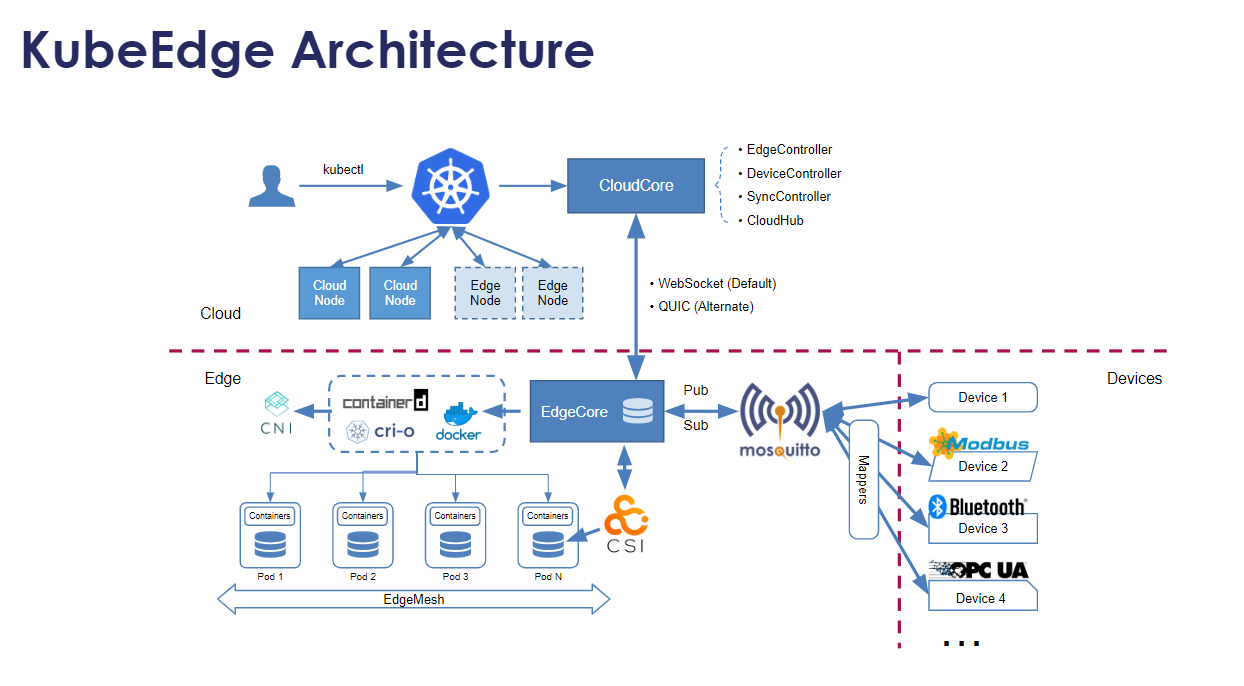

Architecture

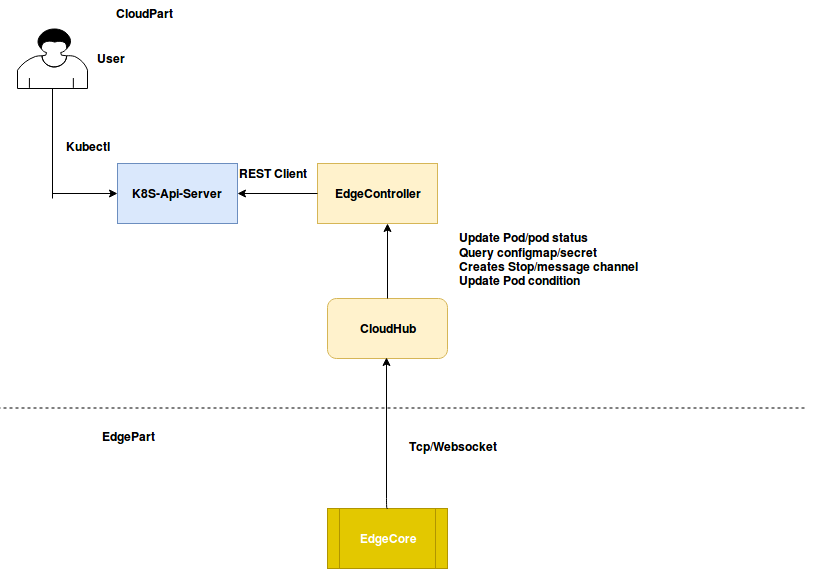

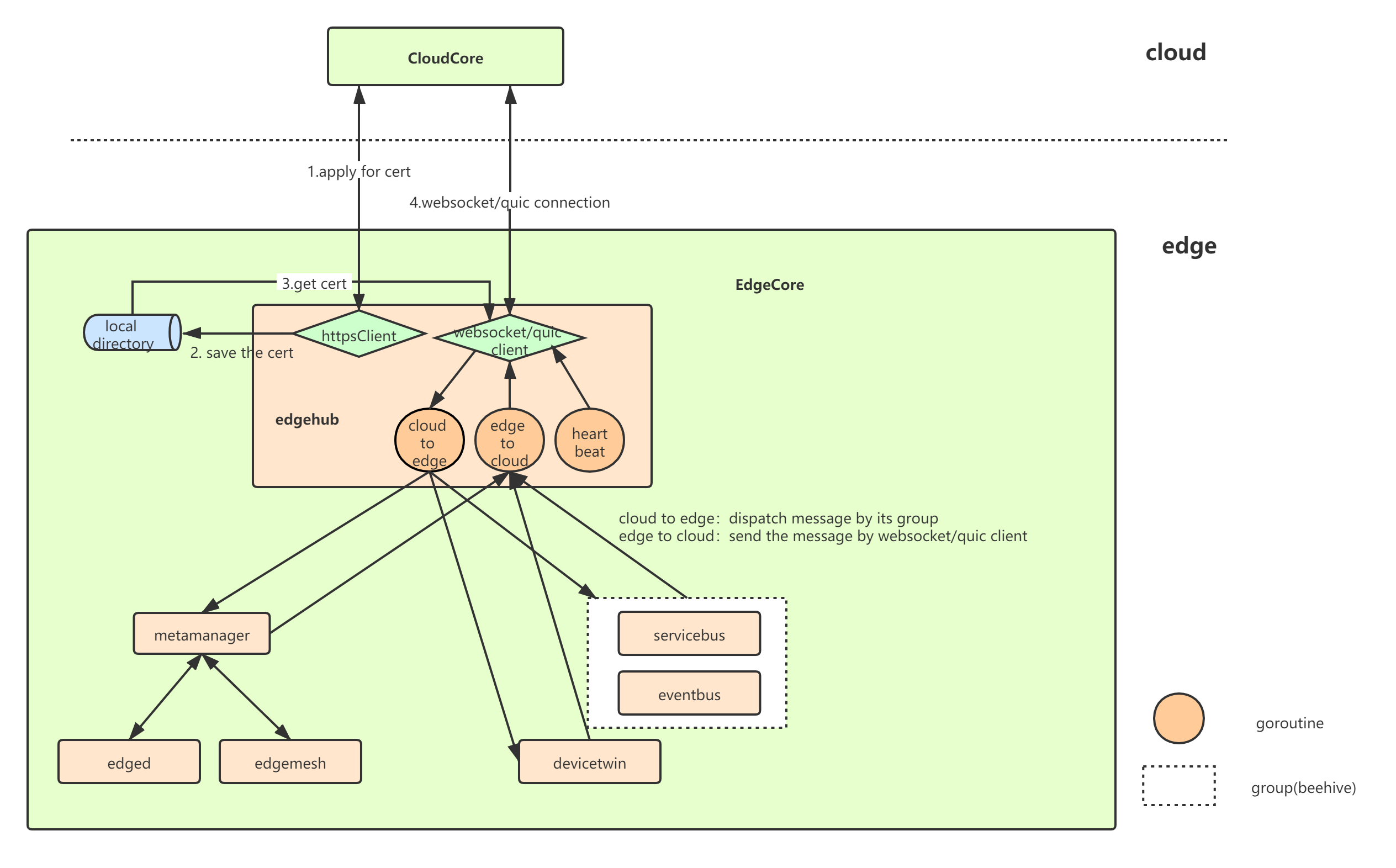

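KubeEdge consists of two executables, cloudcore and edgecore, with the following modules.

cloudcore:

- CloudHub: the communication interface module in the cloud. A WebSocket server that watches for changes on the cloud side, caches messages, and sends them to EdgeHub.

- EdgeController: manages edge nodes. An extended Kubernetes controller that manages edge node and pod metadata so that data can be targeted at a specific edge node.

- DeviceController: handles device management. An extended Kubernetes controller that manages devices so that device metadata and status can be synchronized between edge and cloud.

edgecore: six main modules

- Edged: an agent that runs on edge nodes and manages containerized applications.

- EdgeHub: a WebSocket client on the edge that interacts with cloud services for edge computing (such as the EdgeController in the KubeEdge architecture). This includes syncing cloud-side resource updates to the edge and reporting edge-side host and device status changes to the cloud.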

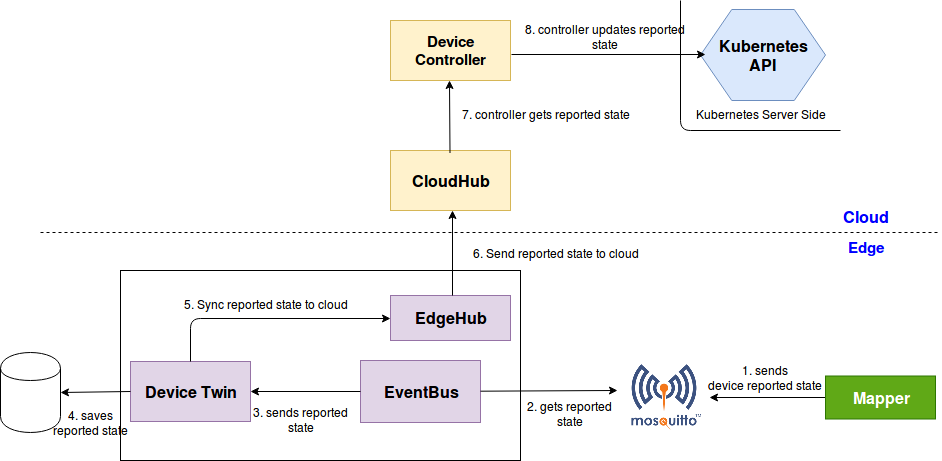

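- EventBus: handles internal edge communication over MQTT. An MQTT client that interacts with MQTT servers (such as mosquitto) and offers publish and subscribe capabilities to other components.

- DeviceTwin: stores device status and syncs it to the cloud. It also provides query interfaces for applications.

- MetaManager: the message processor between edged and edgehub. It also stores metadata in, and retrieves it from, a lightweight database (SQLite).

- ServiceBus: receives service requests from the cloud and interacts with edge applications over HTTP.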

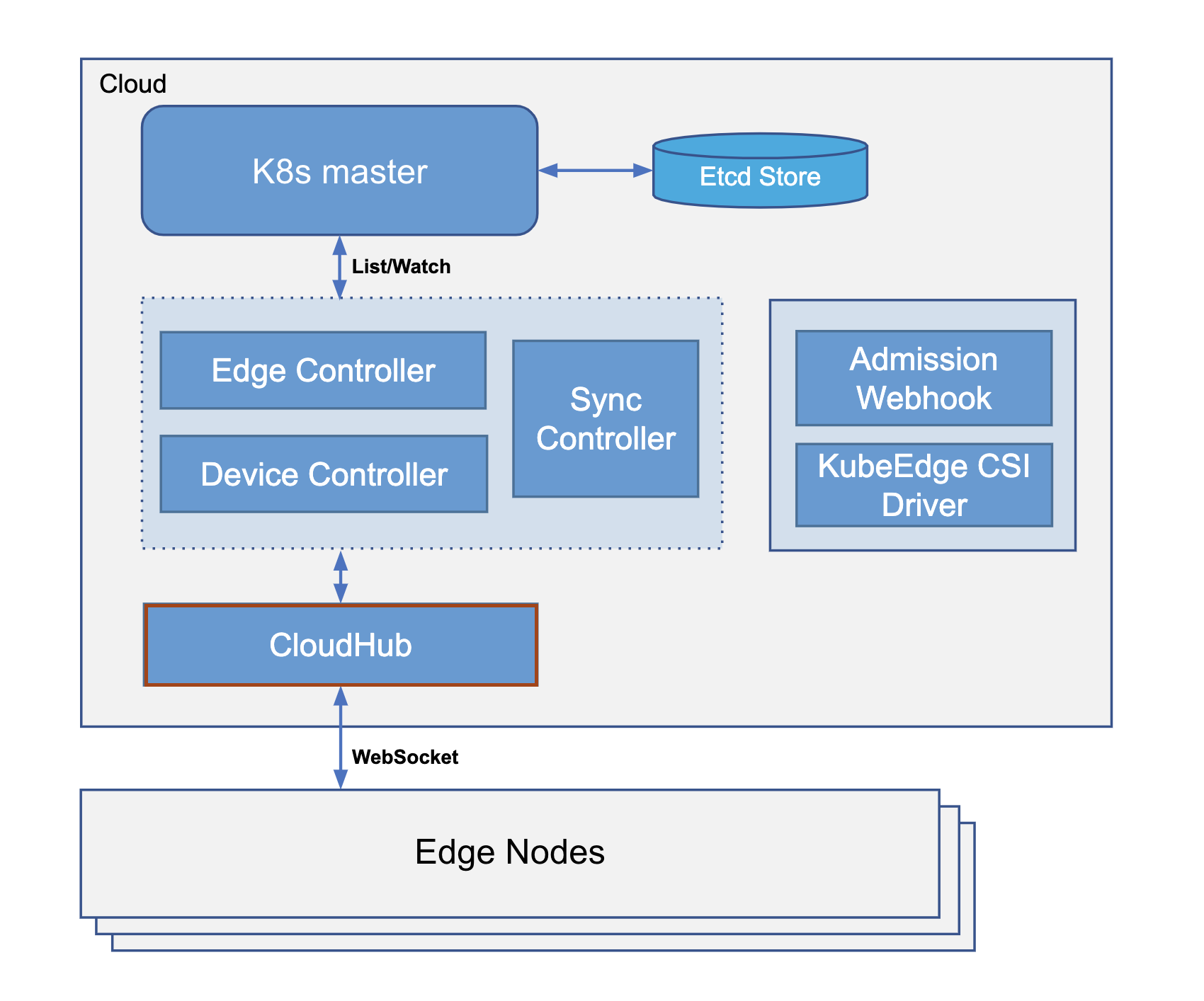

Cloudcore

CloudHub

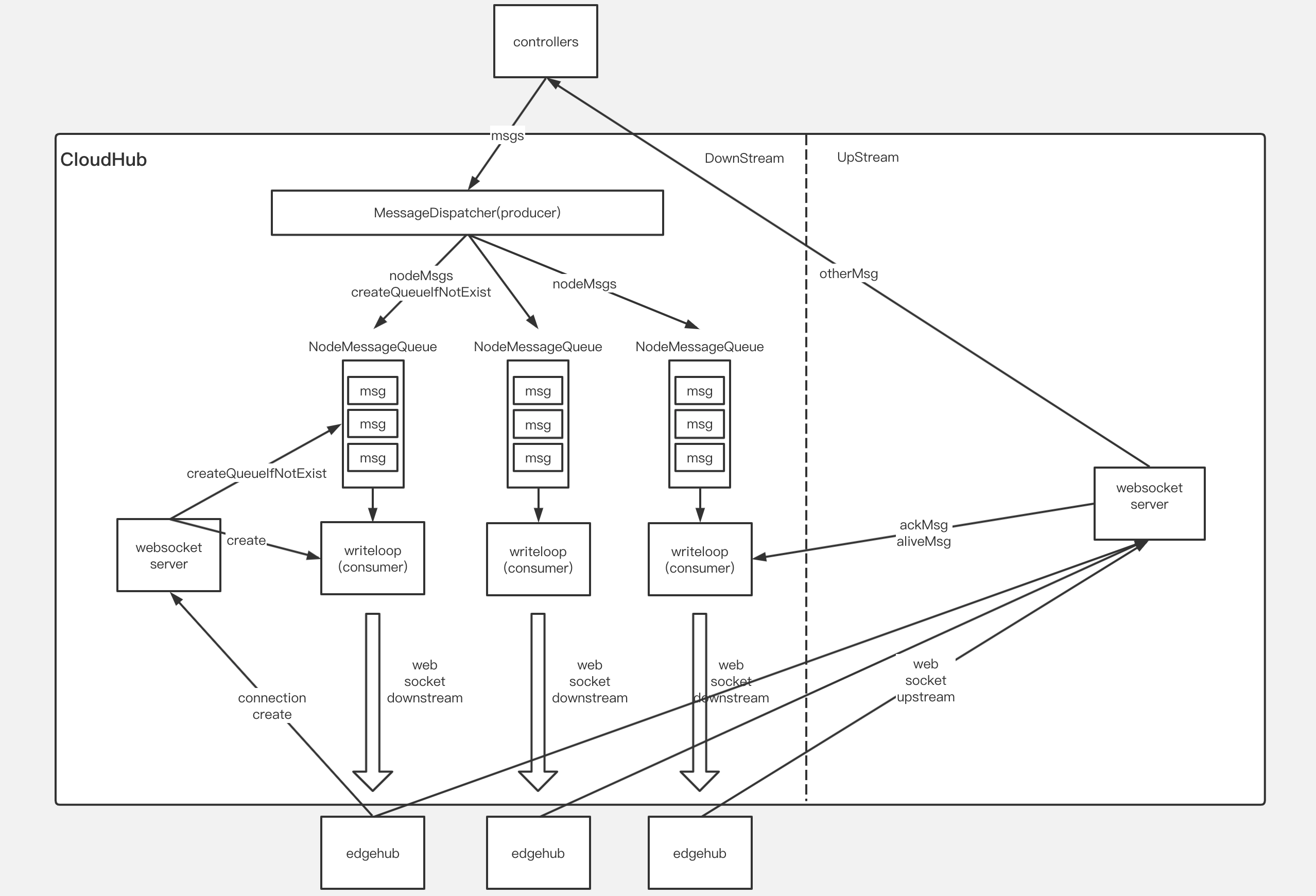

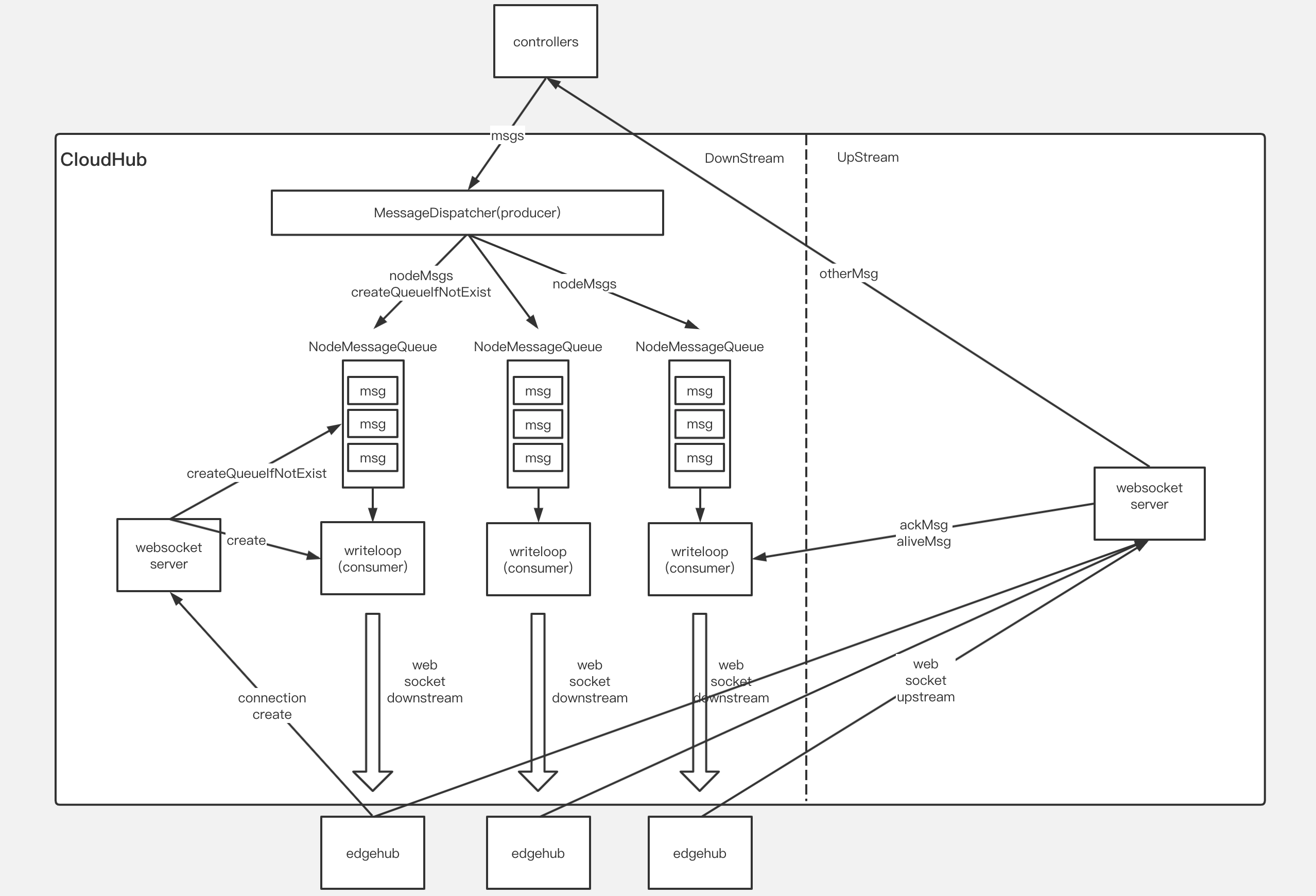

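CloudHub is a module of cloudcore and the mediator between the controllers and the edge side. It dispatches downstream messages (which wrap Kubernetes resource events such as pod updates), and it receives upstream messages from edge nodes and forwards them to the controllers. Downstream delivery is hardened at the application layer to cope with weak cloud-edge networks.

Connections to the edge (through the EdgeHub module) use either WebSocket or QUIC. Inside cloudcore, CloudHub talks directly to the controllers. Every request a controller sends to CloudHub is stored in channelq, together with the channel used to store the event objects for that edge node.

CloudHub has the following key internal structures:

- MessageDispatcher: the dispatch center for downstream messages and the producer for the downstream message queues; implemented in the DispatchMessage function.

- NodeMessageQueue: each edge node has its own dedicated message queue, and together they form a queue pool keyed by Node + UID; implemented by the ChannelMessageQueue struct.

- WriteLoop: writes messages to the underlying connection; it is the consumer of the message queues above.

- Connection server: accepts connections from edge nodes over WebSocket or QUIC.

- Http server: provides certificate services for edge nodes, such as certificate issuance and rotation.

The cloud-edge message format is structured as follows:

- Header: provided by the beehive framework through the NewMessage function; mainly ID, ParentID, and TimeStamp.

- Body: contains the message source, message group, message resource, and the operation on that resource.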
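The message layout above can be sketched in Go as follows. This is a simplified stand-in rather than the beehive types themselves: the `Route` type, the `WithRoute` helper, the ID counter, and the example source and resource strings are all assumptions made for illustration.

```go
package main

import (
	"fmt"
	"time"
)

// Header mirrors the header fields listed above.
type Header struct {
	ID        string // unique ID of this message
	ParentID  string // ID of the message being answered; empty for a new message
	Timestamp int64  // creation time, Unix milliseconds
}

// Route mirrors the body fields listed above (hypothetical type name).
type Route struct {
	Source    string // module that produced the message
	Group     string // module group the message is addressed to
	Resource  string // resource the message is about
	Operation string // operation on that resource
}

// Message is a simplified stand-in for a cloud-edge message.
type Message struct {
	Header  Header
	Route   Route
	Content interface{}
}

var counter int // naive ID source; good enough for a single-goroutine sketch

// NewMessage creates a message; parentID links a response to its request.
func NewMessage(parentID string) *Message {
	counter++
	return &Message{Header: Header{
		ID:        fmt.Sprintf("msg-%d", counter),
		ParentID:  parentID,
		Timestamp: time.Now().UnixNano() / int64(time.Millisecond),
	}}
}

// WithRoute fills in the routing fields and returns the message for chaining.
func (m *Message) WithRoute(source, group, resource, operation string) *Message {
	m.Route = Route{Source: source, Group: group, Resource: resource, Operation: operation}
	return m
}

func main() {
	req := NewMessage("").WithRoute("edgecontroller", "resource", "default/pod/testpod", "update")
	resp := NewMessage(req.Header.ID).WithRoute("edged", "resource", "default/pod/testpod", "response")
	fmt.Println(req.Header.ID, req.Route.Operation)
	fmt.Println(resp.Header.ParentID == req.Header.ID)
}
```

Linking a response to its request through ParentID is the same idea the ACK message relies on later in this article.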

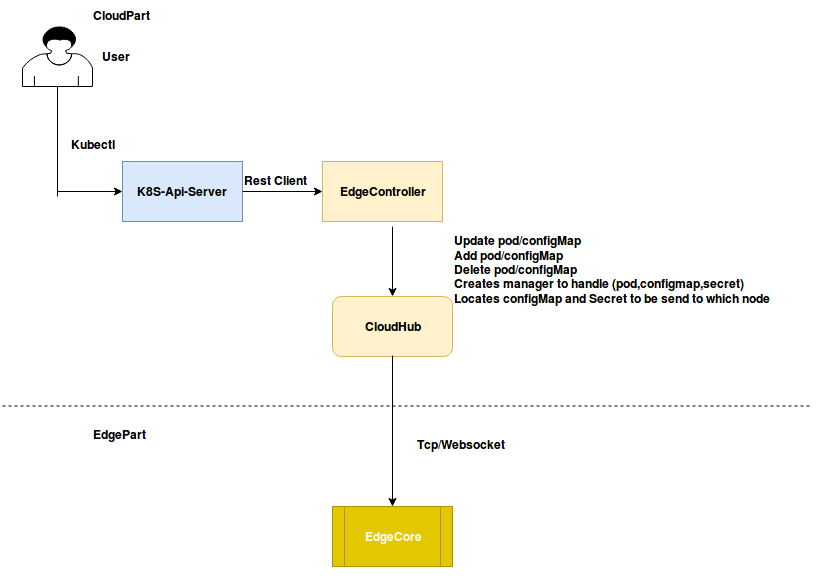

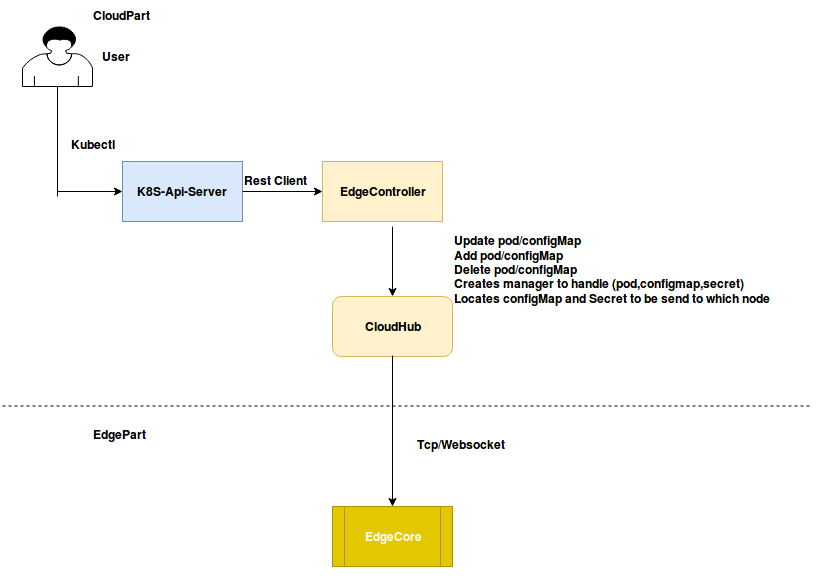

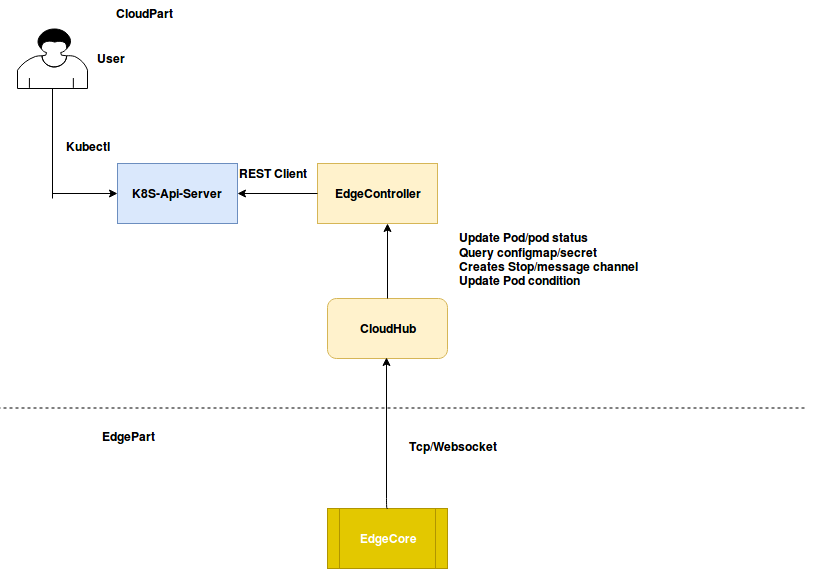

Edge Controller

EdgeController is the bridge between the Kubernetes API server and edgecore.

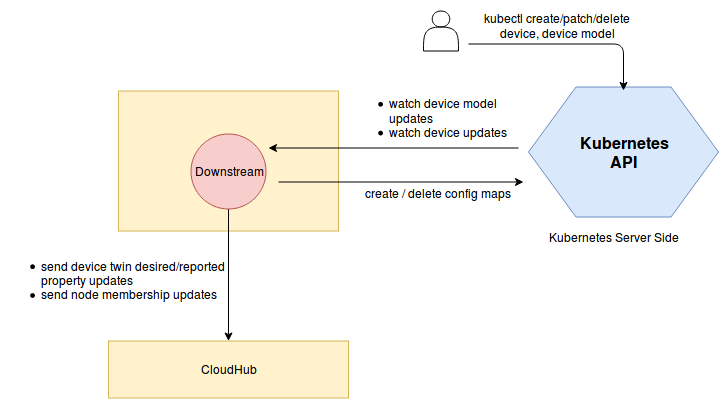

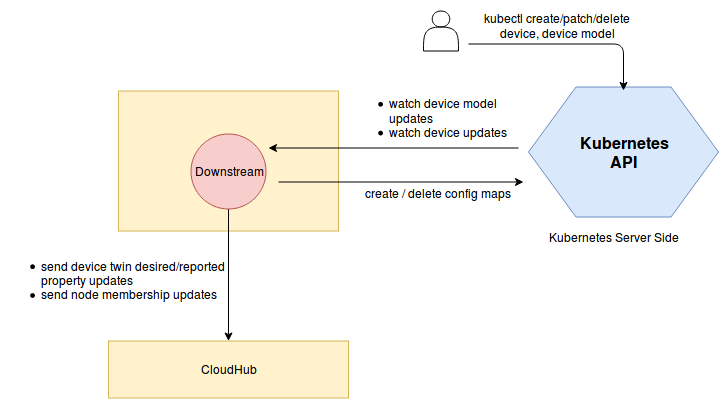

DownstreamController

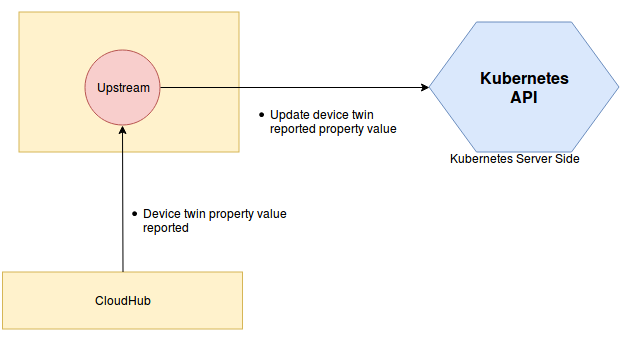

UpstreamController

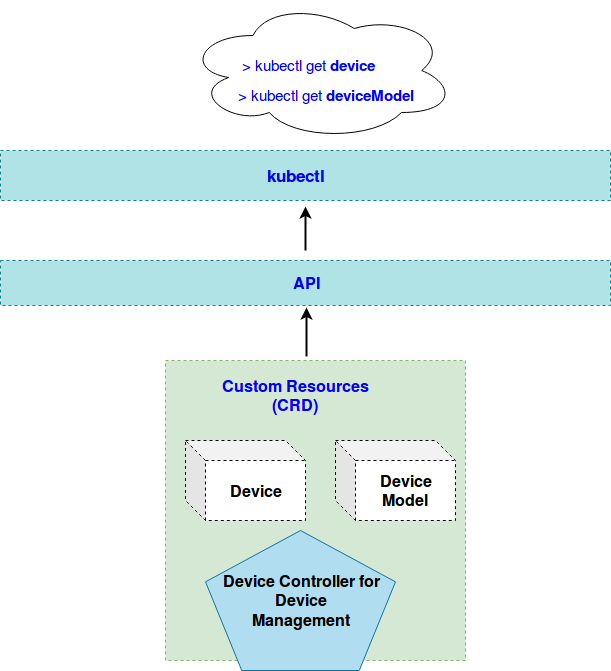

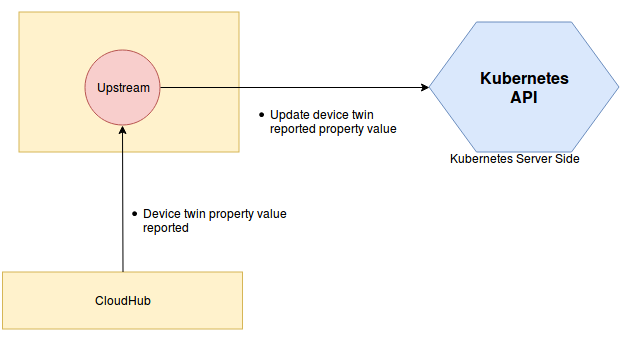

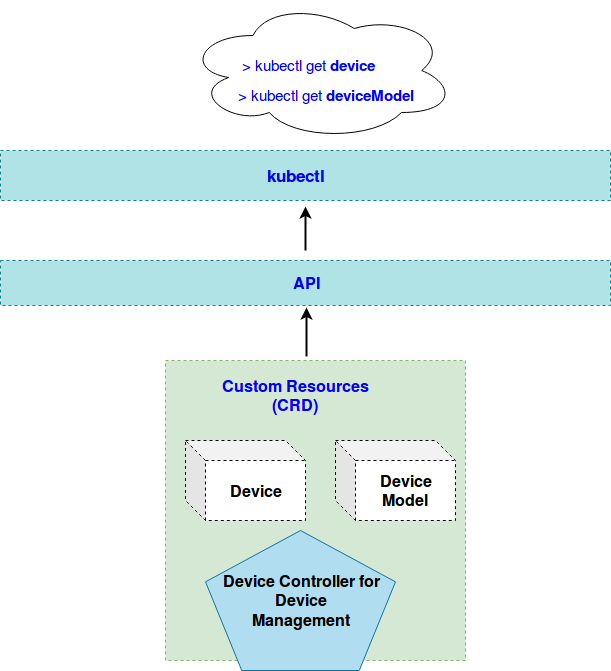

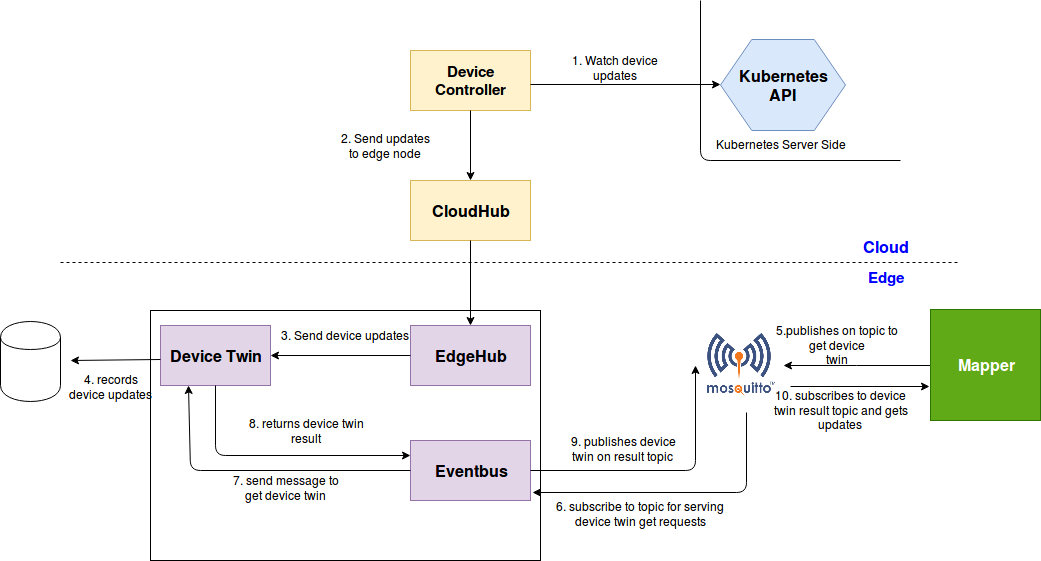

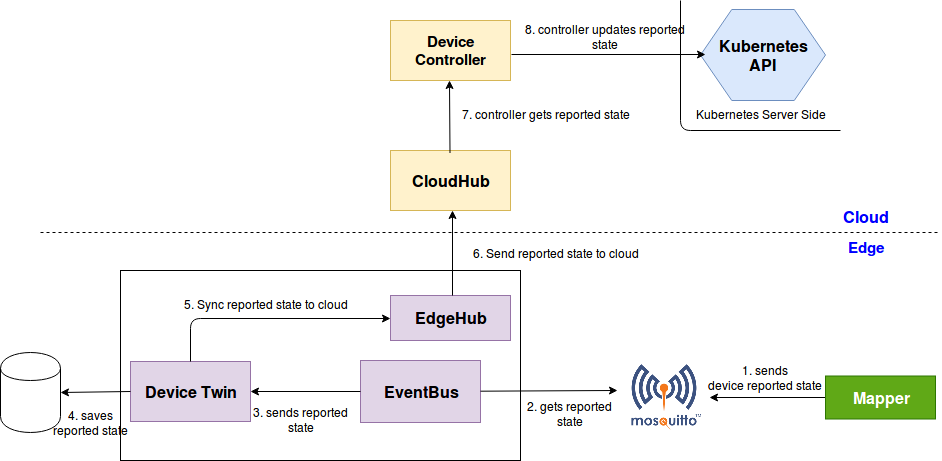

Device Controller

Device metadata and status are described with Kubernetes CRDs. DeviceController syncs them between cloud and edge using two goroutines: the upstream controller and the downstream controller.

device-crd-model

    # KubeEdge CRDs
    # kubectl get CustomResourceDefinition -A | grep edge
    clusterobjectsyncs.reliablesyncs.kubeedge.io   2021-05-25T02:35:25Z
    devicemodels.devices.kubeedge.io               2021-05-25T02:33:32Z
    devices.devices.kubeedge.io                    2021-05-25T02:29:00Z
    objectsyncs.reliablesyncs.kubeedge.io          2021-05-25T02:44:23Z
    ruleendpoints.rules.kubeedge.io                2021-05-25T02:44:24Z
    rules.rules.kubeedge.io                        2021-05-25T02:44:23Z

    # KubeEdge device-model and device CRDs
    # kubectl get CustomResourceDefinition -A | grep edge | grep device
    devicemodels.devices.kubeedge.io               2021-05-25T02:33:32Z
    devices.devices.kubeedge.io                    2021-05-25T02:29:00Z

Device updates pushed from cloud to edge

device-updates-cloud-edge

Device updates reported from edge to cloud

device-updates-edge-cloud

EdgeCore

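EdgeCore supports amd64 and ARM. It cannot run on a node that already runs kubelet and kube-proxy (unless EdgeCore's runtime environment check is switched off).

EdgeCore comprises several modules: Edged, EdgeHub, MetaManager, DeviceTwin, EventBus, ServiceBus, EdgeStream, and EdgeMesh.

Compared with the kubelet deployed on Kubernetes nodes: edged is a kubelet trimmed of the parts that are unnecessary at the edge; edgecore adds device management modules such as devicetwin and eventbus; the edgemesh module implements service discovery; and edgecore stores metadata locally, managed by metamanager, so that the edge keeps working when the cloud-edge network is unstable.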

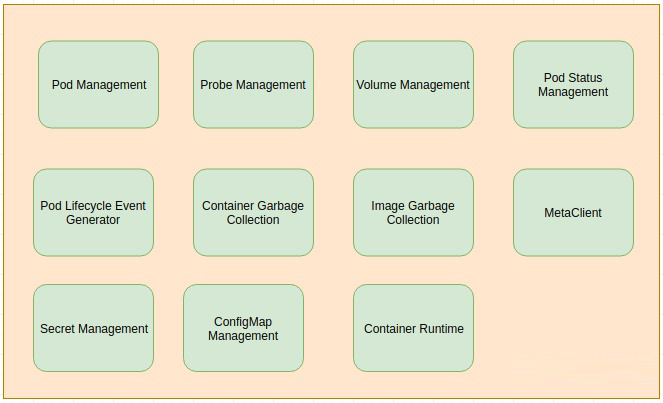

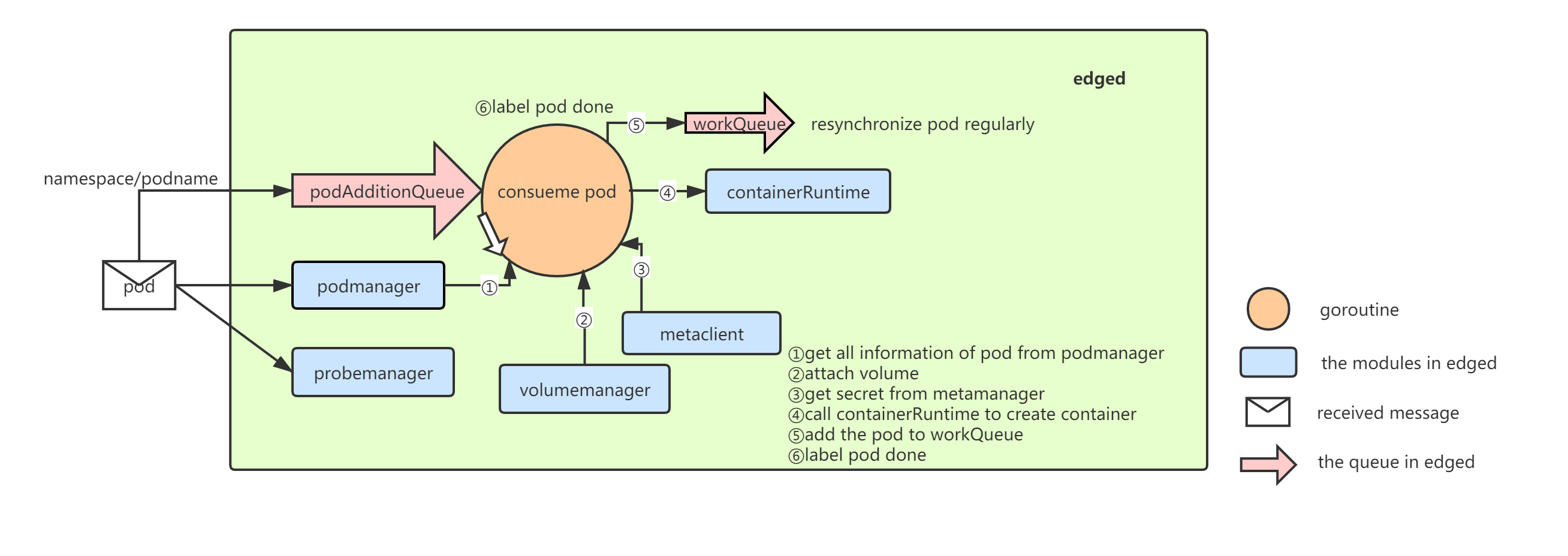

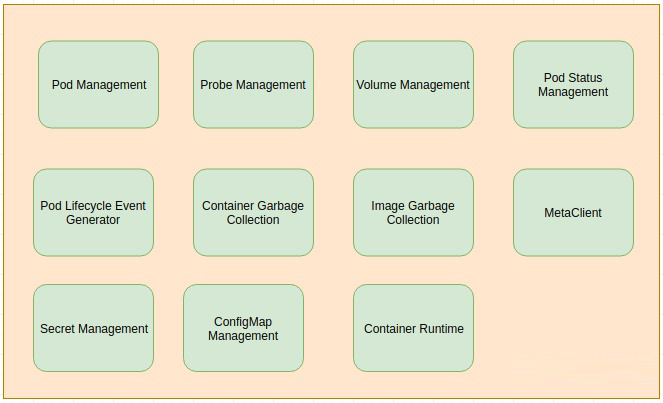

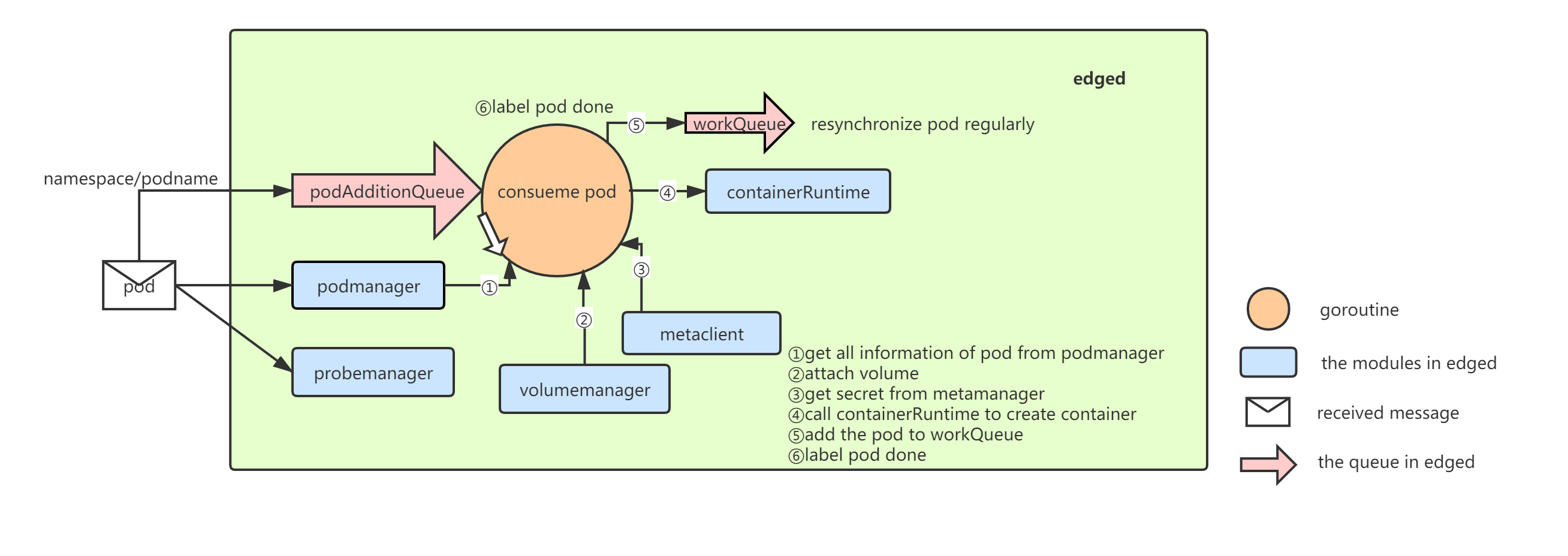

Edged

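Edged is the edge node module that manages pod lifecycle. It helps users deploy containerized workloads or applications on edge nodes. Those workloads can do anything from simple telemetry data processing to analytics or ML inference. Using the kubectl command line interface on the cloud side, users can issue commands to launch workloads.

Container and image management currently supports the Docker container runtime. Support for other runtimes, such as containerd, is expected to be added later.

Many modules work together to implement edged's functionality.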

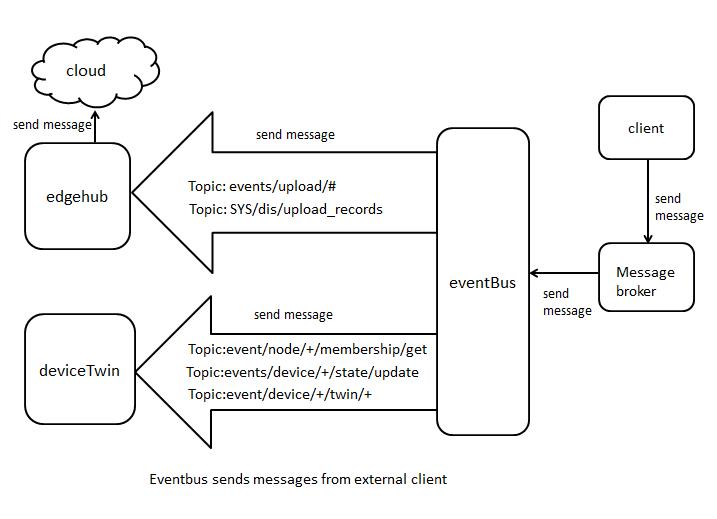

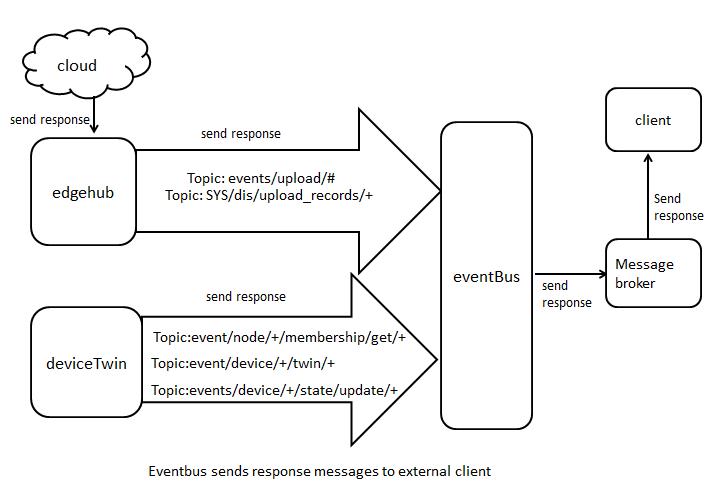

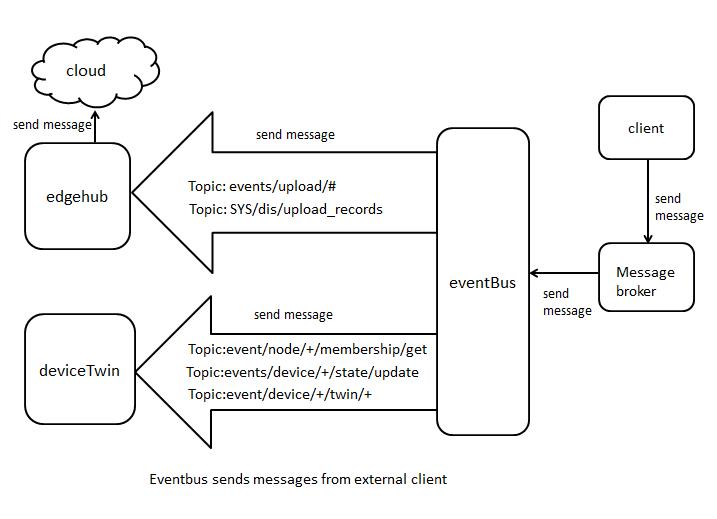

eventbus

EventBus acts as the interface for sending and receiving messages on MQTT topics.

modes

It supports three modes:

- internalMqttMode

- externalMqttMode

- bothMqttMode

topics


- $hw/events/upload/#

- SYS/dis/upload_records

- SYS/dis/upload_records/+

- $hw/event/node/+/membership/get

- $hw/event/node/+/membership/get/+

- $hw/events/device/+/state/update

- $hw/events/device/+/state/update/+

- $hw/event/device/+/twin/+
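To make the wildcards in these topics concrete, here is a minimal sketch of MQTT-style filter matching, where `+` matches exactly one topic level and `#` matches all remaining levels. `matchTopic` is a hypothetical helper written for this article, not EventBus code, and the device ID `dev1` is made up.

```go
package main

import (
	"fmt"
	"strings"
)

// matchTopic reports whether topic matches an MQTT topic filter.
// '+' matches exactly one level; '#' matches every remaining level
// (including none) and is only valid as the last level of the filter.
func matchTopic(filter, topic string) bool {
	f := strings.Split(filter, "/")
	t := strings.Split(topic, "/")
	for i, level := range f {
		if level == "#" {
			return i == len(f)-1
		}
		if i >= len(t) {
			return false // topic is shorter than the filter
		}
		if level != "+" && level != t[i] {
			return false
		}
	}
	return len(f) == len(t)
}

func main() {
	fmt.Println(matchTopic("$hw/events/device/+/state/update", "$hw/events/device/dev1/state/update"))
	fmt.Println(matchTopic("$hw/events/device/+/state/update", "$hw/events/device/dev1/state/update/result"))
	fmt.Println(matchTopic("$hw/events/upload/#", "$hw/events/upload/a/b"))
}
```

Because `+` covers a single level only, the list needs both `.../state/update` and `.../state/update/+` to catch a topic and its one-level children.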


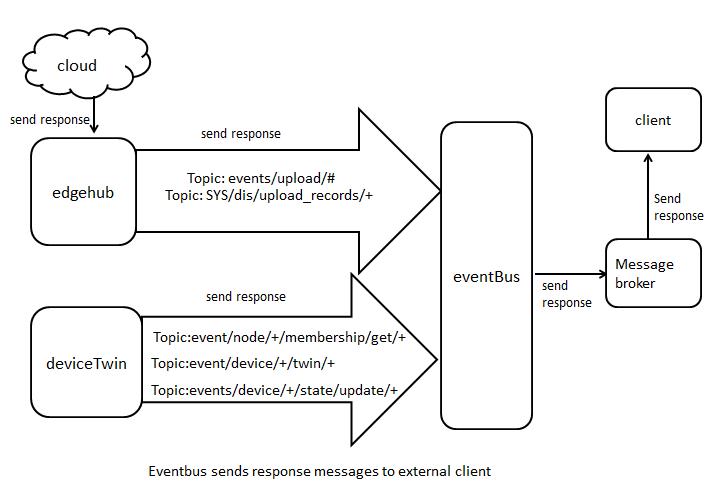

messages flow

- eventbus sends messages from external client

- eventbus sends response messages to external client

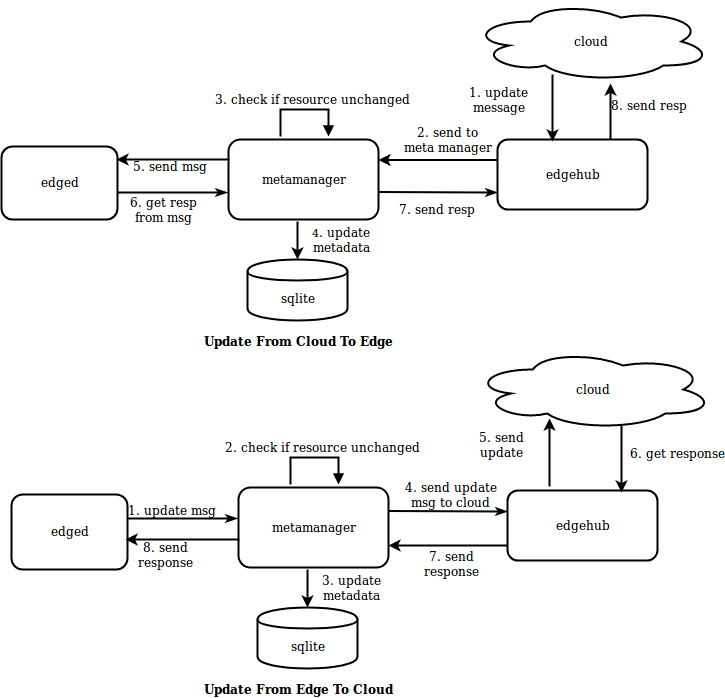

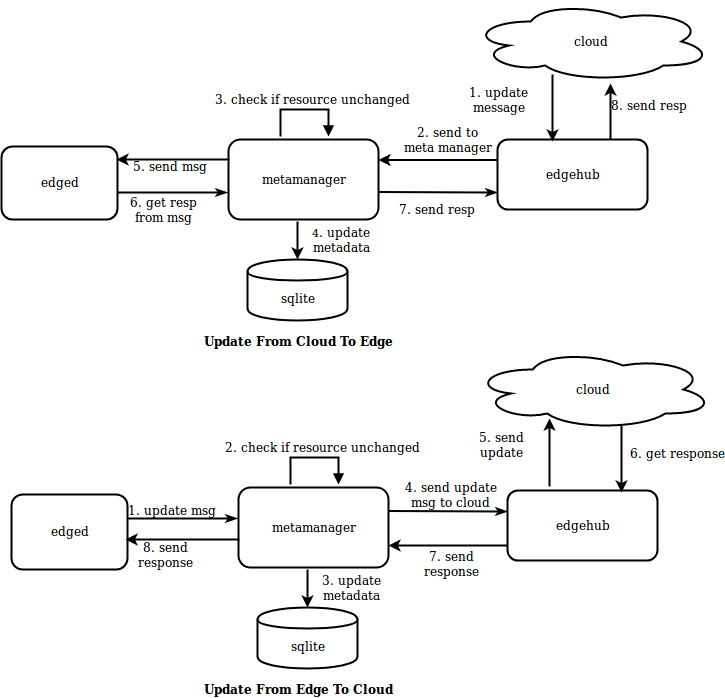

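MetaManager is the message processor between edged and edgehub. It is also responsible for storing metadata in, and retrieving it from, a lightweight database (SQLite).

MetaManager receives different types of messages, based on the operations listed below: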

- Insert

- Update

- Delete

- Query

- Response

- NodeConnection

- MetaSync
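As a rough illustration of how such operations might be dispatched, the sketch below handles four of them (Insert, Update, Delete, Query) against an in-memory map. The map stands in for SQLite, and `MetaStore`, `Process`, and the lowercase operation strings are assumptions of this sketch, not MetaManager's actual API.

```go
package main

import "fmt"

// Op names one of the operations listed above.
type Op string

const (
	Insert Op = "insert"
	Update Op = "update"
	Delete Op = "delete"
	Query  Op = "query"
)

// MetaStore is an in-memory stand-in for the SQLite-backed metadata store.
type MetaStore struct {
	data map[string]string
}

func NewMetaStore() *MetaStore {
	return &MetaStore{data: make(map[string]string)}
}

// Process applies one operation to the store. For Query it returns the
// stored value; for Insert and Update it echoes the value written.
func (s *MetaStore) Process(op Op, key, value string) (string, error) {
	switch op {
	case Insert, Update:
		s.data[key] = value
		return value, nil
	case Delete:
		delete(s.data, key)
		return "", nil
	case Query:
		v, ok := s.data[key]
		if !ok {
			return "", fmt.Errorf("no metadata for %q", key)
		}
		return v, nil
	default:
		return "", fmt.Errorf("unsupported operation %q", op)
	}
}

func main() {
	s := NewMetaStore()
	s.Process(Insert, "default/pod/testpod", `{"phase":"Pending"}`)
	s.Process(Update, "default/pod/testpod", `{"phase":"Running"}`)
	fmt.Println(s.Process(Query, "default/pod/testpod", ""))
}
```

Keeping the latest metadata locally is what lets edged keep serving pods while the cloud connection is down.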

Update Operation

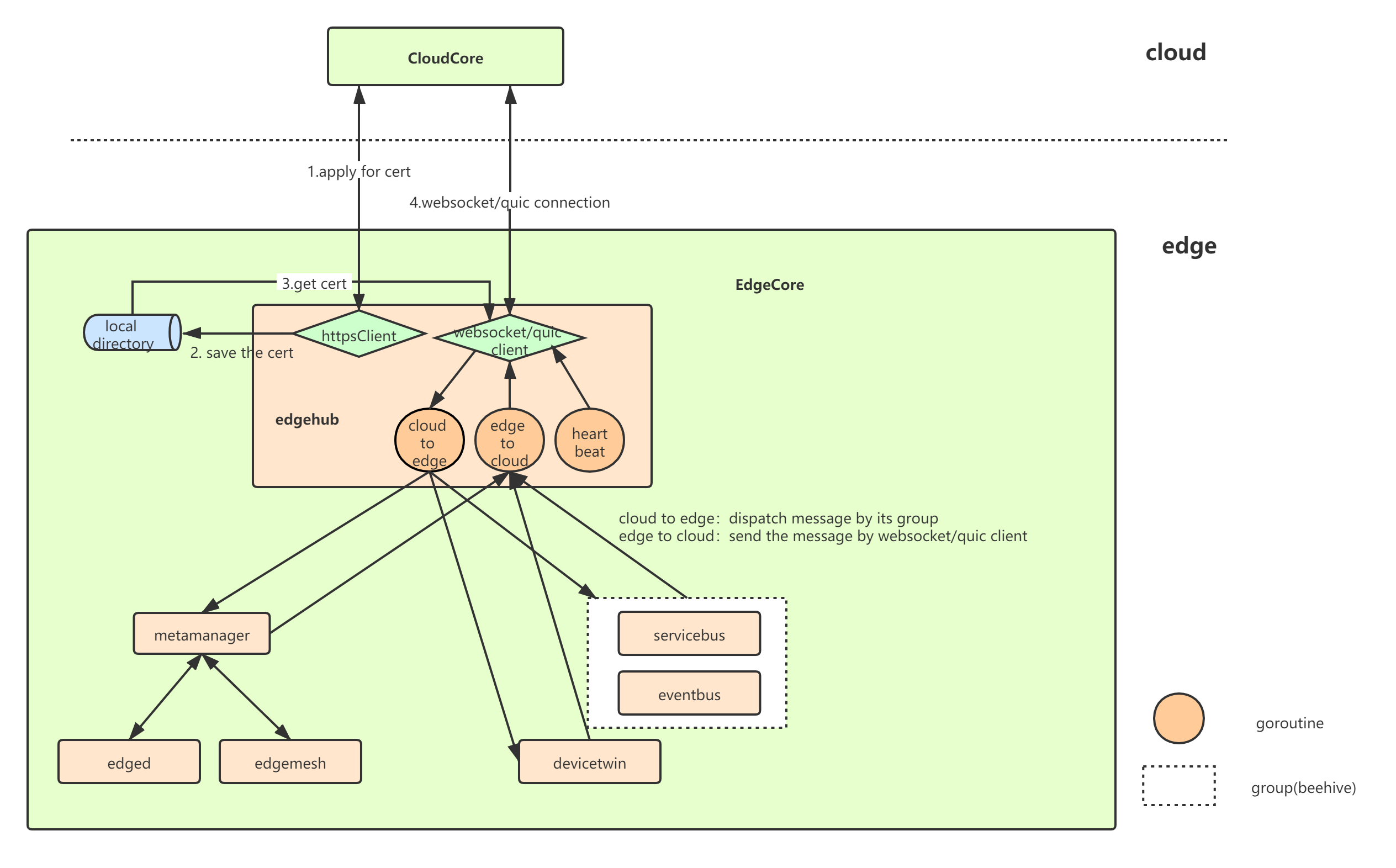

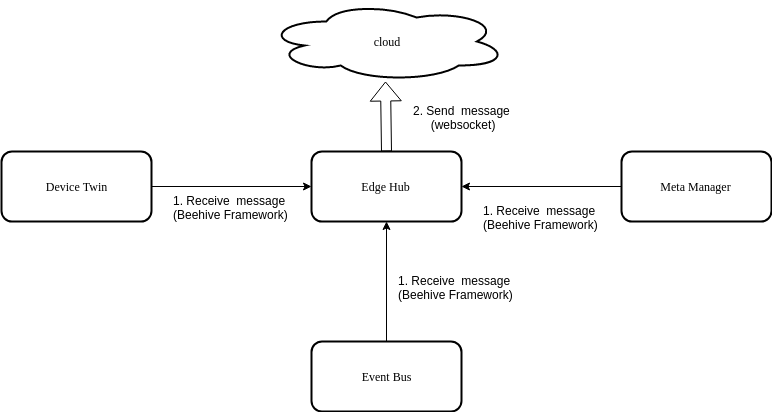

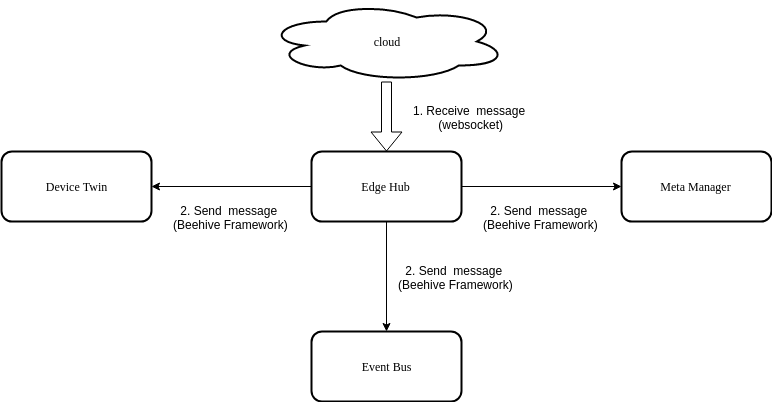

Edgehub

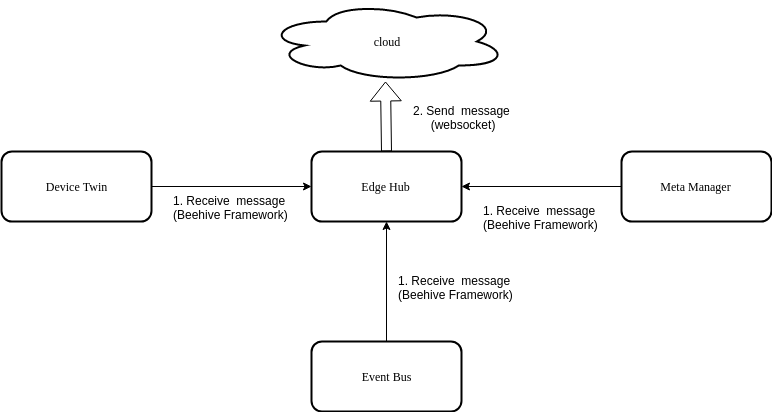

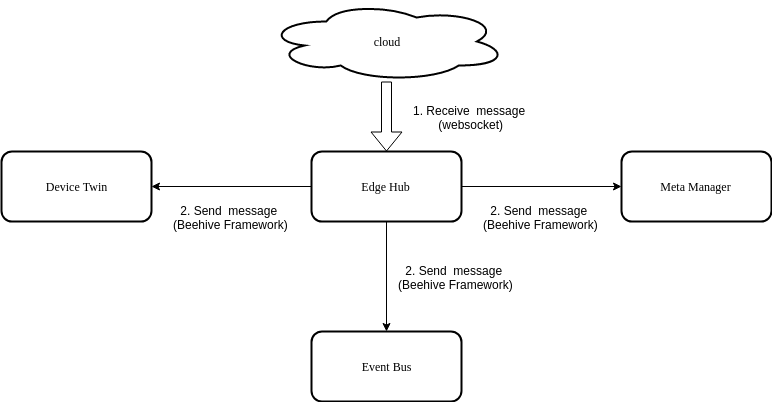

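EdgeHub is responsible for interacting with the CloudHub component in the cloud. It can connect to CloudHub over either WebSocket or QUIC. It supports functions such as syncing cloud-side resource updates and reporting edge-side host and device status changes.

It acts as the communication link between edge and cloud: it forwards messages received from the cloud to the corresponding module at the edge, and vice versa.

The main functions performed by edgehub are: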

- Keep Alive

- Publish Client Info

- Route to Cloud

- Route to Edge

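EdgeHub has two kinds of client: an HTTP client and a WebSocket/QUIC client. The former requests the certificates needed for communication between EdgeCore and CloudCore; the latter handles day-to-day communication with CloudCore (resource delivery, status upload, and so on).

When EdgeHub starts, it first requests a certificate from CloudCore (if a local certificate is correctly configured, it uses that one instead).

It then initializes the WebSocket/QUIC client used to talk to CloudCore. Once connected, it notifies the other components (MetaGroup, TwinGroup, BusGroup) of the successful connection and starts three goroutines that continuously handle cloud-to-edge dispatch, edge-to-cloud dispatch (pure forwarding, with no wrapping or modification), and health status reporting. If an error occurs while messages are being transferred, the edge re-initializes the WebSocket/QUIC client and reconnects to the cloud.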
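The cloud-to-edge direction can be pictured as a lookup from a message's group to that group's inbox. The sketch below assumes one buffered channel per group and the group names `meta`, `twin`, and `bus`; `Hub`, `NewHub`, and `RouteToEdge` are hypothetical names, and the real EdgeHub goes through the beehive framework instead.

```go
package main

import "fmt"

// Message carries only what routing needs in this sketch.
type Message struct {
	Group   string // module group the message is addressed to
	Content string
}

// Hub holds one inbox channel per module group.
type Hub struct {
	groups map[string]chan Message
}

// NewHub creates a hub with a buffered inbox for each named group.
func NewHub(names ...string) *Hub {
	h := &Hub{groups: make(map[string]chan Message)}
	for _, n := range names {
		h.groups[n] = make(chan Message, 16)
	}
	return h
}

// RouteToEdge forwards a message received from the cloud, unchanged,
// to the inbox of the group named in the message.
func (h *Hub) RouteToEdge(m Message) error {
	inbox, ok := h.groups[m.Group]
	if !ok {
		return fmt.Errorf("unknown group %q", m.Group)
	}
	select {
	case inbox <- m:
		return nil
	default:
		return fmt.Errorf("inbox of group %q is full", m.Group)
	}
}

func main() {
	hub := NewHub("meta", "twin", "bus")
	if err := hub.RouteToEdge(Message{Group: "twin", Content: "device state"}); err != nil {
		fmt.Println("route failed:", err)
	}
	fmt.Println((<-hub.groups["twin"]).Content)
	fmt.Println(hub.RouteToEdge(Message{Group: "unknown"}))
}
```

The non-blocking send is a design choice of the sketch: a slow module cannot stall routing for the others, at the cost of rejecting messages when its inbox is full.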

Route To Cloud

Route To Edge

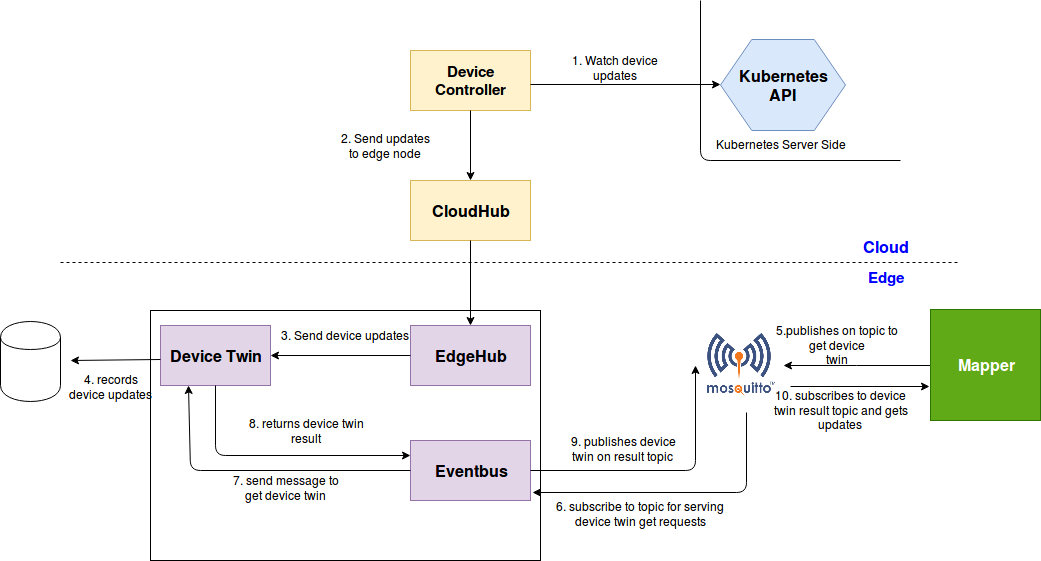

DeviceTwin

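The DeviceTwin module stores device status, handles device attributes, processes device twin operations, creates membership between edge devices and edge nodes, syncs device status to the cloud, and syncs device twin information between edge and cloud. It also provides query interfaces for applications. DeviceTwin consists of four submodules (membership, communication, device, and device twin) that together carry out these responsibilities.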

- Membership Module

- Twin Module

- Communication Module

- Device Module

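- For data storage, device data is kept in local SQLite storage in three tables: device, deviceAttr, and deviceTwin.

- Messages sent by other modules to the twin module are handled by calling dtc.distributeMsg. The handling logic splits messages into four categories and sends each one to that category's action handlers (each category contains several actions):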

- membership

- device

- communication

- twin

edgemesh

Comparison with kube-proxy

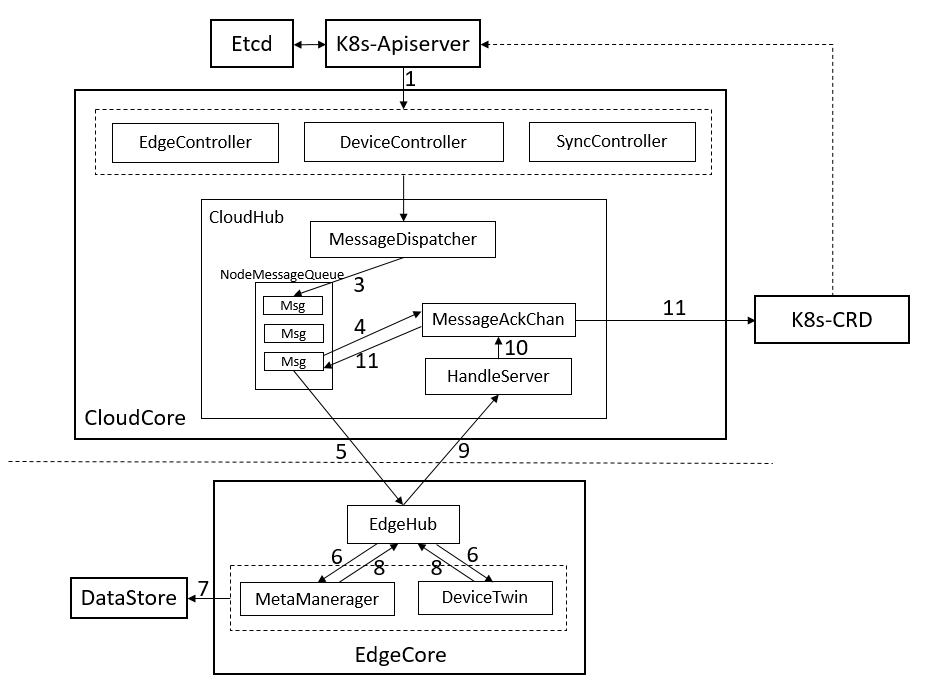

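Reliable message delivery mechanism

The unstable network between cloud and edge causes edge nodes to disconnect frequently. If CloudCore or EdgeCore restarts or goes offline for a while, messages sent to edge nodes may be lost because they temporarily cannot be delivered. Unless a new event is later delivered to the edge successfully, cloud and edge remain inconsistent.

A reliable message delivery mechanism between cloud and edge is therefore needed.

There are three types of message delivery mechanism: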

- At-Most-Once

- Exactly-Once

- At-Least-Once

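- At-Most-Once is unreliable.

- Exactly-Once is very expensive and has the worst performance, although it guarantees delivery without loss or duplication. Because KubeEdge follows Kubernetes' eventual consistency design principle, it is not a problem for the edge to receive the same message repeatedly, as long as the message is the latest one.

- At-Least-Once is therefore the recommended choice.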

At-Least-Once Delivery

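The following design uses a MessageQueue and ACKs to ensure that messages are delivered from cloud to edge.

- A K8s CRD stores the latest resourceVersion of each resource that has been sent to the edge successfully. When CloudCore restarts or starts normally, it checks the resourceVersion to avoid sending old messages.

- EdgeController and DeviceController send messages to CloudHub, and MessageDispatcher sends each message to the corresponding NodeMessageQueue according to the node name in the message.

- CloudHub sends data from the NodeMessageQueue to the corresponding edge node sequentially and stores the message ID in an ACK channel. When an ACK message is received from the edge node, the ACK channel triggers saving the message's resourceVersion to K8s as a CRD, and the next message is sent.

- When EdgeCore receives a message, it first saves the message to its local datastore and then returns an ACK message to the cloud.

- If CloudHub does not receive an ACK within the interval, it resends the message up to 5 times. If all 5 retries fail, CloudHub gives up on the event, and SyncController handles these failed events.

- Even if the edge node receives the message, the returned ACK may be lost in transit. In that case CloudHub sends the message again, and the edge can handle the duplicate.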
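The resend rule in the steps above (one send, then up to five retries while no ACK arrives) can be sketched as below. This is an illustration under stated assumptions, not CloudHub's code: `sendWithAck`, `simulate`, and the 10 ms interval are made up for the example, and the fake edge node simply acknowledges one chosen attempt.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

const maxRetries = 5 // resends after the first attempt, as described in the text

var ErrNoAck = errors.New("no ACK after all retries")

// sendWithAck sends a message and waits up to interval for an ACK,
// resending at most maxRetries times. It returns the number of sends.
func sendWithAck(send func(), ack <-chan struct{}, interval time.Duration) (int, error) {
	sends := 0
	for attempt := 0; attempt <= maxRetries; attempt++ {
		send()
		sends++
		select {
		case <-ack:
			return sends, nil
		case <-time.After(interval):
			// no ACK in time: continue with the next attempt
		}
	}
	return sends, ErrNoAck
}

// simulate runs sendWithAck against a fake edge node that acknowledges
// only the ackOn-th send (0 means it never acknowledges).
func simulate(ackOn int) (int, error) {
	ack := make(chan struct{}, 1)
	n := 0
	send := func() {
		n++
		if n == ackOn {
			ack <- struct{}{}
		}
	}
	return sendWithAck(send, ack, 10*time.Millisecond)
}

func main() {
	fmt.Println(simulate(3)) // ACK arrives on the third send
	fmt.Println(simulate(0)) // never acknowledged
}
```

When the ACK never arrives the caller gets `ErrNoAck` back, which corresponds to the point where the event is abandoned and left for SyncController.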

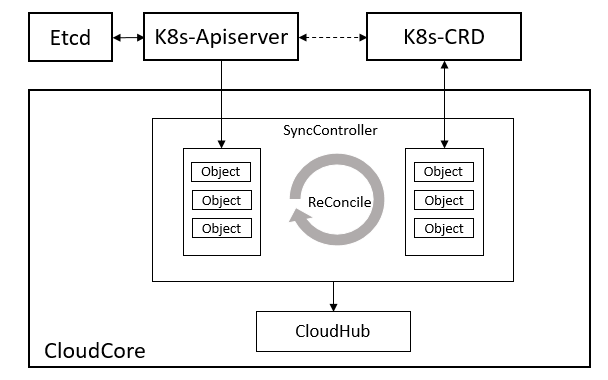

SyncController

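SyncController periodically compares the saved object resourceVersion with the objects in K8s and then triggers events such as retry and delete.

When CloudHub adds an event to a nodeMessageQueue, it compares the event with the corresponding object already in the nodeMessageQueue. If the object in the nodeMessageQueue is newer, the event is discarded.

Message Queue

When an edge node connects to the cloud successfully, a message queue is created for it that caches all messages sent to that node. The message queue and object store are implemented with workQueue and cacheStore from kubernetes/client-go. With the Kubernetes workQueue, duplicate events are merged to improve transmission efficiency.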

    // ChannelMessageQueue is the channel implementation of MessageQueue
    type ChannelMessageQueue struct {
        queuePool sync.Map
        storePool sync.Map

        listQueuePool sync.Map
        listStorePool sync.Map

        objectSyncLister        reliablesyncslisters.ObjectSyncLister
        clusterObjectSyncLister reliablesyncslisters.ClusterObjectSyncLister
    }

    // Add message to the queue: the store keeps the message, the queue keeps its key
    key, _ := getMsgKey(&message)
    nodeStore.Add(message)
    nodeQueue.Add(key)

    // Get the message from the queue:
    key, _ := nodeQueue.Get()
    msg, _, _ := nodeStore.GetByKey(key.(string))

    // Structure of the message key:
    // Key = resourceType/resourceNamespace/resourceName

Note: to improve queue performance, what is queued is the message key.
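The pairing of a key queue with an object store can be sketched with the standard library alone. In this simplified stand-in for the client-go workQueue and cacheStore, `NodeQueue` and its fields are hypothetical names, and treating the resource version as a comparable number is an assumption of the sketch. It shows two behaviours described above: repeated events for one key collapse into a single queue entry, and an event older than the stored object is dropped.

```go
package main

import "fmt"

// Message is reduced to the fields the queue needs.
type Message struct {
	Key             string // resourceType/resourceNamespace/resourceName
	ResourceVersion uint64 // assumed to be numerically comparable
}

// NodeQueue pairs a FIFO of keys with a store of the latest message per key.
type NodeQueue struct {
	keys   []string           // pending keys, oldest first
	queued map[string]bool    // keys currently in the FIFO
	store  map[string]Message // latest message for each key
}

func NewNodeQueue() *NodeQueue {
	return &NodeQueue{queued: make(map[string]bool), store: make(map[string]Message)}
}

// Add stores msg and enqueues its key unless the key is already pending.
// It returns false when msg is older than the stored message and is dropped.
func (q *NodeQueue) Add(msg Message) bool {
	if old, ok := q.store[msg.Key]; ok && old.ResourceVersion > msg.ResourceVersion {
		return false
	}
	q.store[msg.Key] = msg
	if !q.queued[msg.Key] {
		q.queued[msg.Key] = true
		q.keys = append(q.keys, msg.Key)
	}
	return true
}

// Get pops the oldest key and returns the latest message stored for it.
func (q *NodeQueue) Get() (Message, bool) {
	if len(q.keys) == 0 {
		return Message{}, false
	}
	key := q.keys[0]
	q.keys = q.keys[1:]
	delete(q.queued, key)
	return q.store[key], true
}

func main() {
	q := NewNodeQueue()
	q.Add(Message{Key: "pod/default/testpod", ResourceVersion: 1})
	q.Add(Message{Key: "pod/default/testpod", ResourceVersion: 2}) // merged with the first
	fmt.Println(q.Add(Message{Key: "pod/default/testpod", ResourceVersion: 1}))
	msg, _ := q.Get()
	fmt.Println(msg.Key, msg.ResourceVersion, len(q.keys))
}
```

Queueing keys rather than whole messages keeps the queue small and means the consumer always reads the newest stored version of an object.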

    AckMessage.ParentID = receivedMessage.ID
    AckMessage.Operation = "response"

ReliableSync CRD

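K8s CRDs are used to save the resourceVersion of objects that have been persisted to the edge successfully. Two CRD types are defined for this:

- ClusterObjectSync saves cluster-scoped objects.

- ObjectSync saves namespace-scoped objects.

Their names consist of the related node name and the object UUID.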

    // BuildObjectSyncName builds the name of objectSync/clusterObjectSync
    func BuildObjectSyncName(nodeName, UID string) string {
        return nodeName + "." + UID
    }

The ClusterObjectSync

    type ClusterObjectSync struct {
        metav1.TypeMeta   `json:",inline"`
        metav1.ObjectMeta `json:"metadata,omitempty"`

        Spec   ClusterObjectSyncSpec   `json:"spec,omitempty"`
        Status ClusterObjectSyncStatus `json:"status,omitempty"`
    }

    // ClusterObjectSyncSpec stores the details of objects that sent to the edge.
    type ClusterObjectSyncSpec struct {
        // Required: ObjectGroupVerion is the group and version of the object
        // that was successfully sent to the edge node.
        ObjectGroupVerion string `json:"objectGroupVerion,omitempty"`
        // Required: ObjectKind is the type of the object
        // that was successfully sent to the edge node.
        ObjectKind string `json:"objectKind,omitempty"`
        // Required: ObjectName is the name of the object
        // that was successfully sent to the edge node.
        ObjectName string `json:"objectName,omitempty"`
    }

    // ClusterObjectSyncStatus stores the resourceversion of objects that sent to the edge.
    type ClusterObjectSyncStatus struct {
        // Required: ObjectResourceVersion is the resourceversion of the object
        // that was successfully sent to the edge node.
        ObjectResourceVersion string `json:"objectResourceVersion,omitempty"`
    }

The ObjectSync

    type ObjectSync struct {
        metav1.TypeMeta   `json:",inline"`
        metav1.ObjectMeta `json:"metadata,omitempty"`

        Spec   ObjectSyncSpec   `json:"spec,omitempty"`
        Status ObjectSyncStatus `json:"status,omitempty"`
    }

    // ObjectSyncSpec stores the details of objects that sent to the edge.
    type ObjectSyncSpec struct {
        // Required: ObjectGroupVerion is the group and version of the object
        // that was successfully sent to the edge node.
        ObjectGroupVerion string `json:"objectGroupVerion,omitempty"`
        // Required: ObjectKind is the type of the object
        // that was successfully sent to the edge node.
        ObjectKind string `json:"objectKind,omitempty"`
        // Required: ObjectName is the name of the object
        // that was successfully sent to the edge node.
        ObjectName string `json:"objectName,omitempty"`
    }

    // ObjectSyncStatus stores the resourceversion of objects that sent to the edge.
    type ObjectSyncStatus struct {
        // Required: ObjectResourceVersion is the resourceversion of the object
        // that was successfully sent to the edge node.
        ObjectResourceVersion string `json:"objectResourceVersion,omitempty"`
    }

ObjectSync CRD example

The ObjectSync instance name follows the pattern {nodename.UID}, for example node2.89c8bbd2-da6d-4bb4-bfb1-2faf68852009

(base) [root@node1 ~]# kubectl get ObjectSync -A

NAMESPACE NAME AGE

default node2.252da63b-5b27-4cb5-9d36-0115657a9ffb 25h

default node2.89c8bbd2-da6d-4bb4-bfb1-2faf68852009 127m

default node2.bc5a3c5e-69dd-49c1-9753-194609229886 25h

kube-system node2.538ed3e7-7cf1-417a-ad72-b69c152c6c00 25h

kube-system node2.a0ce6db3-e247-436f-a72e-bdf5b2cc8fb9 25h

(base) [root@node1 ~]#

(base) [root@node1 ~]#

(base) [root@node1 ~]# kubectl describe -n default node2.89c8bbd2-da6d-4bb4-bfb1-2faf68852009

error: the server doesn't have a resource type "node2"

(base) [root@node1 ~]# kubectl describe ObjectSync -n default node2.89c8bbd2-da6d-4bb4-bfb1-2faf68852009

Name: node2.89c8bbd2-da6d-4bb4-bfb1-2faf68852009

Namespace: default

Labels: <none>

Annotations: <none>

API Version: reliablesyncs.kubeedge.io/v1alpha1

Kind: ObjectSync

Metadata:

Creation Timestamp: 2021-05-26T05:42:07Z

Generation: 1

Resource Version: 34833736

Self Link: /apis/reliablesyncs.kubeedge.io/v1alpha1/namespaces/default/objectsyncs/node2.89c8bbd2-da6d-4bb4-bfb1-2faf68852009

UID: c329514a-784c-4fdf-9261-53bf4dcc18a0

Spec:

Object API Version: v1

Object Kind: pods

Object Name: testpod

Status:

Object Resource Version: 34833729

Events: <none>

(base) [root@node1 ~]#

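Exception scenarios/Corner cases handling

CloudCore restart

- When CloudCore restarts or starts normally, it checks the resourceVersion to avoid sending old messages.

- If objects are deleted while CloudCore is restarting, the DELETE events may be lost. SyncController handles this case. An object GC mechanism is needed to guarantee deletion: it checks whether the objects recorded in the CRDs still exist in K8s. If they do not, SyncController generates a DELETE event, sends it to the edge, and removes the record from the CRD once the ACK is received.

EdgeCore restart

- When EdgeCore restarts or is offline for a while, the node message queue caches all messages, and they are sent once the node comes back online.

- While an edge node is offline, CloudHub stops sending messages and does not retry until the node is back online.

EdgeNode deletion

- When an edge node is removed from the cloud, CloudCore deletes the corresponding message queue and store.

ObjectSync CR garbage collection

When an EdgeNode is no longer in the cluster, all ObjectSync CRs for that EdgeNode should be deleted.

Garbage collection is currently triggered in two main ways: CloudCore startup and EdgeNode deletion events.

- When CloudCore starts, it first checks for stale ObjectSync CRs and deletes them.

- While CloudCore is running, an EdgeNode deletion event triggers garbage collection.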
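Since ObjectSync names have the form nodeName.UID, the startup check can be pictured as filtering names by whether their node still exists. The sketch below is an assumption-laden illustration, not SyncController code: `nodeOf` and `staleObjectSyncs` are hypothetical helpers, and cutting at the last dot relies on the UID containing no dots (true for UUID-style UIDs). The UID `1111-aaaa` and node `node3` are made up.

```go
package main

import (
	"fmt"
	"strings"
)

// BuildObjectSyncName follows the naming rule shown earlier: nodeName + "." + UID.
func BuildObjectSyncName(nodeName, UID string) string {
	return nodeName + "." + UID
}

// nodeOf recovers the node name from an ObjectSync name by cutting at the
// last dot. It returns "" when the name contains no dot.
func nodeOf(name string) string {
	i := strings.LastIndex(name, ".")
	if i < 0 {
		return ""
	}
	return name[:i]
}

// staleObjectSyncs returns the names whose node is not in liveNodes;
// these are the candidates for garbage collection.
func staleObjectSyncs(names []string, liveNodes map[string]bool) []string {
	var stale []string
	for _, n := range names {
		if !liveNodes[nodeOf(n)] {
			stale = append(stale, n)
		}
	}
	return stale
}

func main() {
	names := []string{
		BuildObjectSyncName("node2", "89c8bbd2-da6d-4bb4-bfb1-2faf68852009"),
		BuildObjectSyncName("node3", "1111-aaaa"),
	}
	live := map[string]bool{"node2": true}
	fmt.Println(staleObjectSyncs(names, live))
}
```

The same filter covers the second trigger as well: on an EdgeNode deletion event, the deleted node is simply absent from the live set.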

Deployment and installation

Deploying using Keadm

Download keadm

On the download page, choose the matching keadm version.

KubeEdge download link

kubeedge/keadm-v1.6.2-linux-amd64/keadm/keadm

keadm usage

# ./keadm -h

+----------------------------------------------------------+

| KEADM |

| Easily bootstrap a KubeEdge cluster |

| |

| Please give us feedback at: |

| https://github.com/kubeedge/kubeedge/issues |

+----------------------------------------------------------+

Create a cluster with cloud node

(which controls the edge node cluster), and edge nodes

(where native containerized application, in the form of

pods and deployments run), connects to devices.

Usage:

keadm [command]

Examples:

+----------------------------------------------------------+

| On the cloud machine: |

+----------------------------------------------------------+

| master node (on the cloud)# sudo keadm init |

+----------------------------------------------------------+

+----------------------------------------------------------+

| On the edge machine: |

+----------------------------------------------------------+

| worker node (at the edge)# sudo keadm join <flags> |

+----------------------------------------------------------+

You can then repeat the second step on, as many other machines as you like.

Available Commands:

debug debug function to help diagnose the cluster

gettoken To get the token for edge nodes to join the cluster

help Help about any command

init Bootstraps cloud component. Checks and install (if required) the pre-requisites.

join Bootstraps edge component. Checks and install (if required) the pre-requisites. Execute it on any edge node machine you wish to join

reset Teardowns KubeEdge (cloud & edge) component

version Print the version of keadm

Flags:

-h, --help help for keadm

Use "keadm [command] --help" for more information about a command.


Setup Cloud Side (KubeEdge Master Node)

By default, ports 10000 and 10002 on your cloudcore need to be accessible to your edge nodes.

keadm init will install cloudcore, generate the certs and install the CRDs. It also provides a flag by which a specific version can be set.

Run on the master node:

- Using the default version

# keadm init --advertise-address="THE-EXPOSED-IP"(only work since 1.3 release)

keadm init --advertise-address="10.7.11.213"

- Or with an explicit version

keadm init --advertise-address="10.7.11.212" --kubeedge-version=1.6.2 --kube-config=/root/.kube/config

Output:

Kubernetes version verification passed, KubeEdge installation will start...

...

KubeEdge cloudcore is running, For logs visit: /var/log/kubeedge/cloudcore.log

Installation output

With version kubeedge-v1.6.2 specified, the download succeeds

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]# ./keadm init --advertise-address="10.7.11.213" --kubeedge-version=1.6.2 --kube-config=/root/.kube/config

Kubernetes version verification passed, KubeEdge installation will start...

Expected or Default KubeEdge version 1.6.2 is already downloaded and will checksum for it.

kubeedge-v1.6.2-linux-amd64.tar.gz checksum:

checksum_kubeedge-v1.6.2-linux-amd64.tar.gz.txt content:

kubeedge-v1.6.2-linux-amd64.tar.gz in your path checksum failed and do you want to delete this file and try to download again?

[y/N]:

y

I0524 17:09:26.209161 8227 common.go:271] kubeedge-v1.6.2-linux-amd64.tar.gz have been deleted and will try to download again

kubeedge-v1.6.2-linux-amd64.tar.gz checksum:

checksum_kubeedge-v1.6.2-linux-amd64.tar.gz.txt content:

[Run as service] service file already exisits in /etc/kubeedge//cloudcore.service, skip download

kubeedge-v1.6.2-linux-amd64/

kubeedge-v1.6.2-linux-amd64/edge/

kubeedge-v1.6.2-linux-amd64/edge/edgecore

kubeedge-v1.6.2-linux-amd64/cloud/

kubeedge-v1.6.2-linux-amd64/cloud/csidriver/

kubeedge-v1.6.2-linux-amd64/cloud/csidriver/csidriver

kubeedge-v1.6.2-linux-amd64/cloud/admission/

kubeedge-v1.6.2-linux-amd64/cloud/admission/admission

kubeedge-v1.6.2-linux-amd64/cloud/cloudcore/

kubeedge-v1.6.2-linux-amd64/cloud/cloudcore/cloudcore

kubeedge-v1.6.2-linux-amd64/version

KubeEdge cloudcore is running, For logs visit: /var/log/kubeedge/cloudcore.log

CloudCore started

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]# ps -ef |grep -i cloudcore

root 25669 1 0 17:13 pts/11 00:00:00 /usr/local/bin/cloudcore

root 30844 94002 0 17:16 pts/11 00:00:00 grep --color=auto -i cloudcore

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

cloudcore startup log

]# cat /var/log/kubeedge/cloudcore.log

I0525 10:56:45.064260 34285 server.go:64] Version: v1.6.2

I0525 10:56:45.066622 34285 module.go:34] Module cloudhub registered successfully

I0525 10:56:45.083709 34285 module.go:34] Module edgecontroller registered successfully

I0525 10:56:45.083792 34285 module.go:34] Module devicecontroller registered successfully

I0525 10:56:45.083826 34285 module.go:34] Module synccontroller registered successfully

W0525 10:56:45.083850 34285 module.go:37] Module cloudStream is disabled, do not register

W0525 10:56:45.083856 34285 module.go:37] Module router is disabled, do not register

W0525 10:56:45.083865 34285 module.go:37] Module dynamiccontroller is disabled, do not register

I0525 10:56:45.083919 34285 core.go:24] Starting module cloudhub

I0525 10:56:45.083946 34285 core.go:24] Starting module edgecontroller

I0525 10:56:45.083975 34285 core.go:24] Starting module devicecontroller

I0525 10:56:45.084029 34285 core.go:24] Starting module synccontroller

I0525 10:56:45.084033 34285 upstream.go:110] start upstream controller

I0525 10:56:45.084694 34285 downstream.go:870] Start downstream devicecontroller

I0525 10:56:45.086932 34285 downstream.go:550] start downstream controller

I0525 10:56:45.184174 34285 server.go:269] Ca and CaKey don't exist in local directory, and will read from the secret

I0525 10:56:45.186205 34285 server.go:273] Ca and CaKey don't exist in the secret, and will be created by CloudCore

I0525 10:56:45.203712 34285 server.go:317] CloudCoreCert and key don't exist in local directory, and will read from the secret

I0525 10:56:45.209247 34285 server.go:321] CloudCoreCert and key don't exist in the secret, and will be signed by CA

I0525 10:56:45.224935 34285 signcerts.go:98] Succeed to creating token

I0525 10:56:45.225003 34285 server.go:44] start unix domain socket server

I0525 10:56:45.225475 34285 server.go:63] Starting cloudhub websocket server

I0525 10:56:45.225681 34285 uds.go:72] listening on: //var/lib/kubeedge/kubeedge.sock

I0525 10:56:47.085035 34285 upstream.go:63] Start upstream devicecontroller


Setup Edge Side (KubeEdge Worker Node)

Get Token From Cloud Side

Running keadm gettoken on the cloud side returns the token that is used when joining edge nodes. Generate the token on the cloud node and note it down; the edge node needs it later.

#./keadm gettoken

# 59886279cecd7da202f1d258aad523cf44df78afac68a2766546d97a5ca5e6f9.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2MjE5OTc4MDV9._XjeO1GW7gsqfwM4AZ0VuOTIW1FWNAkjOTlirBByV3g

keadm gettoken

# 27a37ef16159f7d3be8fae95d588b79b3adaaf92727b72659eb89758c66ffda2.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1OTAyMTYwNzd9.JBj8LLYWXwbbvHKffJBpPd5CyxqapRQYDIXtFZErgYE


Join Edge Node

keadm join will install edgecore and mqtt. It also provides a flag by which a specific version can be set.

Example:


# keadm join --cloudcore-ipport=192.168.20.50:10000 --token=27a37ef16159f7d3be8fae95d588b79b3adaaf92727b72659eb89758c66ffda2.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1OTAyMTYwNzd9.JBj8LLYWXwbbvHKffJBpPd5CyxqapRQYDIXtFZErgYE

# IMPORTANT NOTE:

# 1. --cloudcore-ipport flag is a mandatory flag.

# 2. If you want to apply certificate for edge node automatically, --token is needed.

# 3. The KubeEdge version used on the cloud and edge sides should be the same.

keadm join --cloudcore-ipport=10.7.11.212:10000 --kubeedge-version=1.6.2 --token=59886279cecd7da202f1d258aad523cf44df78afac68a2766546d97a5ca5e6f9.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2MjE5OTc4MDV9._XjeO1GW7gsqfwM4AZ0VuOTIW1FWNAkjOTlirBByV3g

Output:

Host has mosquit+ already installed and running. Hence skipping the installation steps !!!

...

KubeEdge edgecore is running, For logs visit: /var/log/kubeedge/edgecore.log

Partial installation output

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]# ./keadm gettoken

9ecb7342293641c71fe70ac296e349bf0fce395618cb66c27e0643c3b8c985b5.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2MjE5MzQwMzh9.5b8f16RDwRhCnnebz_3oc4yn3CnfXLz3kMl7-tPoRD0

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]# ./keadm join --cloudcore-ipport=10.7.11.213:10000 --token=9ecb7342293641c71fe70ac296e349bf0fce395618cb66c27e0643c3b8c985b5.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2MjE5MzQwMzh9.5b8f16RDwRhCnnebz_3oc4yn3CnfXLz3kMl7-tPoRD0

install MQTT service successfully.

Expected or Default KubeEdge version 1.6.2 is already downloaded and will checksum for it.

kubeedge-v1.6.2-linux-amd64.tar.gz checksum:

checksum_kubeedge-v1.6.2-linux-amd64.tar.gz.txt content:

kubeedge-v1.6.2-linux-amd64.tar.gz in your path checksum failed and do you want to delete this file and try to download again?

[y/N]:

N

W0524 17:35:22.099204 64615 common.go:276] failed to checksum and will continue to install.

[Run as service] start to download service file for edgecore

[Run as service] success to download service file for edgecore

kubeedge-v1.6.2-linux-amd64/

kubeedge-v1.6.2-linux-amd64/edge/

kubeedge-v1.6.2-linux-amd64/edge/edgecore

kubeedge-v1.6.2-linux-amd64/cloud/

kubeedge-v1.6.2-linux-amd64/cloud/csidriver/

kubeedge-v1.6.2-linux-amd64/cloud/csidriver/csidriver

kubeedge-v1.6.2-linux-amd64/cloud/admission/

kubeedge-v1.6.2-linux-amd64/cloud/admission/admission

kubeedge-v1.6.2-linux-amd64/cloud/cloudcore/

kubeedge-v1.6.2-linux-amd64/cloud/cloudcore/cloudcore

kubeedge-v1.6.2-linux-amd64/version

KubeEdge edgecore is running, For logs visit: journalctl -u edgecore.service -b

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

# Where edgecore.service is downloaded to and loaded from

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]# find / -name edgecore.service

/etc/systemd/system/multi-user.target.wants/edgecore.service

/etc/systemd/system/edgecore.service

/etc/kubeedge/edgecore.service

(base) [root@node2 /home/wangb/kubeedge/keadm-v1.6.2-linux-amd64/keadm]#

Node information

node2 is the edge node newly joined to the cluster

# kubectl get no

NAME STATUS ROLES AGE VERSION

node1 Ready master 249d v0.0.0-master+4d3c9e0c

node2 Ready agent,edge 2m5s v1.19.3-kubeedge-v1.6.2

Name: node2

Roles: agent,edge

Labels: kubernetes.io/arch=amd64

kubernetes.io/hostname=node2

kubernetes.io/os=linux

node-role.kubernetes.io/agent=

node-role.kubernetes.io/edge=

Annotations: node.alpha.kubernetes.io/ttl: 0

CreationTimestamp: Tue, 25 May 2021 12:41:04 +0800

Taints: <none>

Unschedulable: false

Lease:

HolderIdentity: <unset>

AcquireTime: <unset>

RenewTime: <unset>

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

Ready True Tue, 25 May 2021 14:00:19 +0800 Tue, 25 May 2021 14:00:19 +0800 EdgeReady edge is posting ready status

Addresses:

InternalIP: 10.7.11.213

Hostname: node2

Capacity:

cpu: 32

memory: 31650Mi

pods: 110

Allocatable:

cpu: 32

memory: 31550Mi

pods: 110

System Info:

Machine ID:

System UUID:

Boot ID:

Kernel Version: 3.10.0-1062.12.1.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://19.3.13

Kubelet Version: v1.19.3-kubeedge-v1.6.2

Kube-Proxy Version:

PodCIDR: 10.233.65.0/24

PodCIDRs: 10.233.65.0/24

Non-terminated Pods: (3 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits AGE

--------- ---- ------------ ---------- --------------- ------------- ---

default testpod 500m (1%) 500m (1%) 0 (0%) 0 (0%) 50s

kube-system calico-node-df9bf 150m (0%) 300m (0%) 64M (0%) 500M (1%) 71m

kube-system nodelocaldns-ldj7t 100m (0%) 0 (0%) 70Mi (0%) 170Mi (0%) 71m

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 750m (2%) 800m (2%)

memory 137400320 (0%) 678257920 (2%)

ephemeral-storage 0 (0%) 0 (0%)

Events: <none>

Component processes

root 74050 13.9 0.2 3499776 84608 ? Ssl 18:17 1:04 /usr/local/bin/edgecore

root 25669 0.3 0.1 2435444 56532 pts/11 Sl 17:13 0:14 /usr/local/bin/cloudcore

# mosquitto also runs on the edge node

# ps -ef |grep mosquitto

mosquit+ 72032 1 0 May24 ? 00:00:20 /usr/sbin/mosquitto -c /etc/mosquitto/mosquitto.conf

## Service

/usr/lib/systemd/system/mosquitto.service

Uninstall

keadm reset

# mosquitto is an RPM package, installed and removed with yum

yum -y remove mosquitto

This stops the services and removes the binaries and the configuration directory /etc/kubeedge.

# ./keadm reset

[reset] WARNING: Changes made to this host by 'keadm init' or 'keadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

edgecore is stopped

Issues

keadm online download fails

(base) [/kubeedge/keadm-v1.6.2-linux-amd64/keadm]# ./keadm init --advertise-address="10.7.11.213" --kubeedge-version=1.6.2 --kube-config=/root/.kube/config

Kubernetes version verification passed, KubeEdge installation will start...

Expected or Default KubeEdge version 1.6.2 is already downloaded and will checksum for it.

kubeedge-v1.6.2-linux-amd64.tar.gz checksum:

checksum_kubeedge-v1.6.2-linux-amd64.tar.gz.txt content:

Expected or Default KubeEdge version 1.6.2 is already downloaded

[Run as service] start to download service file for cloudcore

Error: fail to download service file,error:{failed to exec 'bash -c cd /etc/kubeedge/ && sudo -E wget -t 5 -k --no-check-certificate https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.6/build/tools/cloudcore.service', err: --2021-05-24 16:45:02-- https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.6/build/tools/cloudcore.service

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.111.133, 185.199.109.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.111.133|:443... connected.

Unable to establish SSL connection.

Converted 0 files in 0 seconds.

, err: exit status 4}

Usage:

keadm init [flags]

Examples:

keadm init

- This command will download and install the default version of KubeEdge cloud component

keadm init --kubeedge-version=1.5.0 --kube-config=/root/.kube/config

- kube-config is the absolute path of kubeconfig which used to secure connectivity between cloudcore and kube-apiserver

Flags:

--advertise-address string Use this key to set IPs in cloudcore's certificate SubAltNames field. eg: 10.10.102.78,10.10.102.79

--domainname string Use this key to set domain names in cloudcore's certificate SubAltNames field. eg: www.cloudcore.cn,www.kubeedge.cn

-h, --help help for init

--kube-config string Use this key to set kube-config path, eg: $HOME/.kube/config (default "/root/.kube/config")

--kubeedge-version string Use this key to download and use the required KubeEdge version

--master string Use this key to set K8s master address, eg: http://127.0.0.1:8080

--tarballpath string Use this key to set the temp directory path for KubeEdge tarball, if not exist, download it

F0524 16:46:02.638767 68936 keadm.go:27] fail to download service file,error:{failed to exec 'bash -c cd /etc/kubeedge/ && sudo -E wget -t 5 -k --no-check-certificate https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.6/build/tools/cloudcore.service', err: --2021-05-24 16:45:02-- https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.6/build/tools/cloudcore.service

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.111.133, 185.199.109.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.111.133|:443... connected.

Unable to establish SSL connection.

Converted 0 files in 0 seconds.


To work around this, go to the default download directory /etc/kubeedge and download the cloudcore.service file manually:


(base) [root@node2 /tmp]#

(base) [root@node2 /tmp]# cd /etc/kubeedge/

(base) [root@node2 /etc/kubeedge]# ll

total 49200

drwxr-xr-x. 5 root root 72 May 24 16:13 crds

-rw-r--r--. 1 root root 50379289 Mar 22 10:07 kubeedge-v1.6.2-linux-amd64.tar.gz

(base) [root@node2 /etc/kubeedge]# sudo -E wget -t 5 -k --no-check-certificate https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.6/build/tools/cloudcore.service

--2021-05-24 17:05:11-- https://raw.githubusercontent.com/kubeedge/kubeedge/release-1.6/build/tools/cloudcore.service

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.110.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 162 [text/plain]

Saving to: ‘cloudcore.service’

100%[=========================================================================================================================>] 162 --.-K/s in 0s

2021-05-24 17:05:11 (3.17 MB/s) - ‘cloudcore.service’ saved [162/162]

Converted 0 files in 0 seconds.

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]# ll

total 49204

-rw-r--r--. 1 root root 162 May 24 17:05 cloudcore.service

drwxr-xr-x. 5 root root 72 May 24 16:13 crds

-rw-r--r--. 1 root root 50379289 Mar 22 10:07 kubeedge-v1.6.2-linux-amd64.tar.gz

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]# cat cloudcore.service

[Unit]

Description=cloudcore.service

[Service]

Type=simple

ExecStart=/usr/local/bin/cloudcore

Restart=always

RestartSec=10

[Install]

WantedBy=multi-user.target

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]#

(base) [root@node2 /etc/kubeedge]#

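After a manual download it can be worth confirming that the saved file really is a unit file and not an empty file or an error page. The following is a minimal sketch, assuming the file should contain the same three sections shown in the listing above; `check_unit_file` is a hypothetical helper and not part of keadm.

```shell
# Hypothetical helper (not part of keadm): sanity-check a downloaded unit file.
check_unit_file() {
  file="$1"
  # -s: the file exists and is not empty
  if [ ! -s "$file" ]; then
    echo "missing or empty: $file"
    return 1
  fi
  # Every section seen in the cloudcore.service listing must be present.
  for section in '[Unit]' '[Service]' '[Install]'; do
    if ! grep -qxF "$section" "$file"; then
      echo "missing section: $section"
      return 1
    fi
  done
  # A service unit without ExecStart cannot start anything.
  if ! grep -q '^ExecStart=' "$file"; then
    echo "missing ExecStart: $file"
    return 1
  fi
  echo "ok: $file"
}

# Usage on the cloud node:
#   check_unit_file /etc/kubeedge/cloudcore.service
```

The function only inspects the file; it does not install or enable the service.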

edgecore failure: Failed to check the running environment

At startup, edgecore checks its running environment and exits if a kubelet is running on the edge node:


May 24 17:39:59 node2 edgecore[89260]: INFO: Install client plugin, protocol: rest

May 24 17:39:59 node2 edgecore[89260]: INFO: Installed service discovery plugin: edge

May 24 17:39:59 node2 edgecore[89260]: I0524 17:39:59.286453 89260 server.go:72] Version: v1.6.2

May 24 17:39:59 node2 edgecore[89260]: F0524 17:39:59.321896 89260 server.go:79] Failed to check the running environment: Kubelet should not running on edge

May 24 17:39:59 node2 edgecore[89260]: goroutine 1 [running]:

May 24 17:39:59 node2 edgecore[89260]: k8s.io/klog/v2.stacks(0xc000128001, 0xc0003622d0, 0x93, 0xe6)

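In a long journal the decisive line is easy to miss among the INFO output and the goroutine dump. A small filter can help; this is only a sketch, assuming the klog line format seen in the log above (a severity letter, the date as mmdd, then a timestamp). `klog_errors` is a hypothetical name.

```shell
# Hypothetical helper: keep only klog error (E) and fatal (F) lines from stdin.
# klog starts each message with a severity letter followed by the date as mmdd,
# for example "F0524 17:39:59.321896".
klog_errors() {
  grep -E '(^|[[:space:]])[EF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]+ '
}

# Usage on the edge node:
#   journalctl -u edgecore --no-pager | klog_errors
```

The filter drops the stack trace lines as well, since they carry no klog prefix.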

Setting the environment variable CHECK_EDGECORE_ENVIRONMENT=false makes edgecore skip the running-environment check:


vi /etc/systemd/system/edgecore.service

/etc/systemd/system/edgecore.service, with the Environment line added:

[Unit]

Description=edgecore.service

[Service]

Type=simple

ExecStart=/usr/local/bin/edgecore

Environment="CHECK_EDGECORE_ENVIRONMENT=false"

Restart=always

RestartSec=10

[Install]

WantedBy=multi-user.target

Reload systemd and restart edgecore so that the change takes effect:

systemctl daemon-reload

systemctl restart edgecore

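An alternative to editing the unit file in place is a systemd drop-in, which keeps the override in its own file so it is not lost if edgecore.service is rewritten. The sketch below assumes the unit is named edgecore.service as above; the function name and the drop-in file name `10-skip-env-check.conf` are made up for illustration.

```shell
# Hypothetical helper: write a systemd drop-in that sets the variable
# for edgecore.service without touching the unit file itself.
# $1 is the systemd configuration directory (default: /etc/systemd/system).
write_edgecore_dropin() {
  systemd_dir="${1:-/etc/systemd/system}"
  dropin_dir="$systemd_dir/edgecore.service.d"
  mkdir -p "$dropin_dir"
  cat > "$dropin_dir/10-skip-env-check.conf" <<'EOF'
[Service]
Environment="CHECK_EDGECORE_ENVIRONMENT=false"
EOF
  # Print the path of the file that was written.
  echo "$dropin_dir/10-skip-env-check.conf"
}

# Usage on the edge node (as root):
#   write_edgecore_dropin && systemctl daemon-reload && systemctl restart edgecore
```

Either way, the check is only skipped; a kubelet left running on the same node keeps running alongside edgecore.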

edgecore failure: Failed to start container manager


May 25 12:16:05 node2 edgecore: W0525 12:16:05.217771 103976 proxy.go:64] [EdgeMesh] add route err: file exists

May 25 12:16:05 node2 edgecore: I0525 12:16:05.254379 103976 client.go:86] parsed scheme: "unix"

May 25 12:16:05 node2 edgecore: I0525 12:16:05.254423 103976 client.go:86] scheme "unix" not registered, fallback to default scheme

May 25 12:16:05 node2 edgecore: I0525 12:16:05.254470 103976 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{unix:///run/containerd/containerd.sock <nil> 0 <nil>}] <nil> <nil>}

May 25 12:16:05 node2 edgecore: I0525 12:16:05.254496 103976 clientconn.go:948] ClientConn switching balancer to "pick_first"

May 25 12:16:06 node2 edgecore: E0525 12:16:06.238991 103976 edged.go:742] Failed to start container manager, err: failed to build map of initial containers from runtime: no PodsandBox found with Id 'acb4ba296812113c10acd0d7ea1f048328950ff77d36be515b3e15ba3d67ba72'

May 25 12:16:06 node2 edgecore: E0525 12:16:06.239012 103976 edged.go:293] initialize module error: failed to build map of initial containers from runtime: no PodsandBox found with Id 'acb4ba296812113c10acd0d7ea1f048328950ff77d36be515b3e15ba3d67ba72'

May 25 12:16:06 node2 systemd: edgecore.service: main process exited, code=exited, status=1/FAILURE

May 25 12:16:06 node2 systemd: Unit edgecore.service entered failed state.

May 25 12:16:06 node2 systemd: edgecore.service failed.


- Check that the podSandboxImage parameter in edgecore's configuration file, edgecore.yaml, is correct


podSandboxImage: kubeedge/pause:3.1


- Clean up the containers of pods that already exist in the environment


# Stop and remove all containers (this affects every container on the node)

docker stop $(docker ps -q); docker rm $(docker ps -aq)

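The two checks above can be sketched as small shell helpers. Both function names are hypothetical, and the default path /etc/kubeedge/config/edgecore.yaml is an assumption about where the configuration file lives on your node. The cleanup helper takes the container CLI as a parameter and does nothing when there are no containers, which avoids calling `docker rm` with an empty argument list.

```shell
# Hypothetical helper: print the podSandboxImage value from edgecore.yaml.
# The default path is an assumption; pass the real path as $1 if it differs.
sandbox_image() {
  config="${1:-/etc/kubeedge/config/edgecore.yaml}"
  # awk splits on whitespace, so the YAML indentation does not matter here.
  awk '$1 == "podSandboxImage:" { print $2; exit }' "$config"
}

# Hypothetical helper: force-remove every container known to the runtime CLI.
# $1 is the CLI to call (default: docker). This removes ALL containers.
cleanup_containers() {
  runtime="${1:-docker}"
  ids=$("$runtime" ps -aq)
  if [ -z "$ids" ]; then
    echo "no containers to remove"
    return 0
  fi
  # $ids is left unquoted on purpose so that each ID becomes one argument.
  "$runtime" rm -f $ids
}

# Usage on the edge node:
#   sandbox_image            # compare against the expected value
#   cleanup_containers       # then: systemctl restart edgecore
```

`docker rm -f` removes running containers as well as stopped ones, so a separate stop step is not needed in this variant.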


Updated on 2021-05-26


From: https://www.cnblogs.com/ztguang/p/17939369