k8s安装kube-promethues(0.7版本)

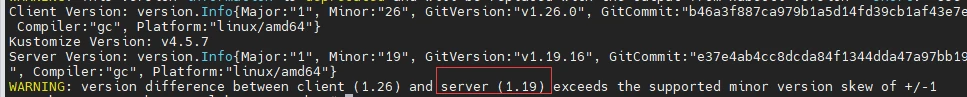

一.检查本地k8s版本,下载对应安装包

kubectl version

如图可见是1.19版本

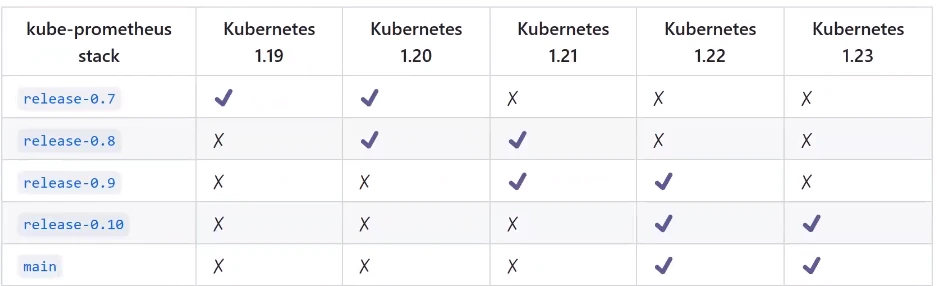

进入kube-promethus下载地址,查找自己的k8s版本适合哪一个kube-promethues版本。

然后下载自己合适的版本

#还可以通过如下地址,在服务器上直接下已经打包好的包。或者复制地址到浏览器下载后上传到服务器。

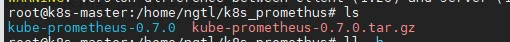

wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.7.0.tar.gz

本次安装是手动上传的

tar -zxvf kube-prometheus-0.7.0.tar.gz

二.安装前准备

1.文件分类整理

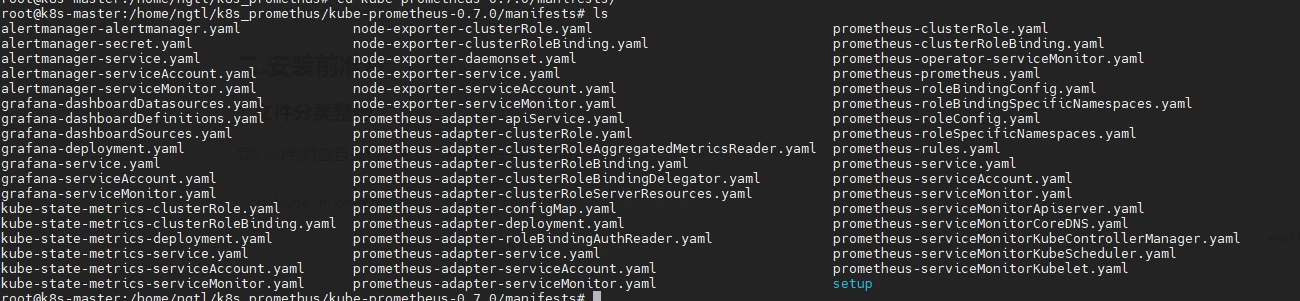

我们cd到对应目录可以看见,初始的安装文件很乱。

cd kube-prometheus-0.7.0/manifests/

新建目录,然后把对应的安装文件归类。

# 创建文件夹

mkdir -p node-exporter alertmanager grafana kube-state-metrics prometheus serviceMonitor adapter

# 移动 yaml 文件,进行分类到各个文件夹下

mv *-serviceMonitor* serviceMonitor/

mv grafana-* grafana/

mv kube-state-metrics-* kube-state-metrics/

mv alertmanager-* alertmanager/

mv node-exporter-* node-exporter/

mv prometheus-adapter* adapter/

mv prometheus-* prometheus

分类后的目录树如下

.

├── adapter

│ ├── prometheus-adapter-apiService.yaml

│ ├── prometheus-adapter-clusterRole.yaml

│ ├── prometheus-adapter-clusterRoleAggregatedMetricsReader.yaml

│ ├── prometheus-adapter-clusterRoleBinding.yaml

│ ├── prometheus-adapter-clusterRoleBindingDelegator.yaml

│ ├── prometheus-adapter-clusterRoleServerResources.yaml

│ ├── prometheus-adapter-configMap.yaml

│ ├── prometheus-adapter-deployment.yaml

│ ├── prometheus-adapter-roleBindingAuthReader.yaml

│ ├── prometheus-adapter-service.yaml

│ └── prometheus-adapter-serviceAccount.yaml

├── alertmanager

│ ├── alertmanager-alertmanager.yaml

│ ├── alertmanager-secret.yaml

│ ├── alertmanager-service.yaml

│ └── alertmanager-serviceAccount.yaml

├── grafana

│ ├── grafana-dashboardDatasources.yaml

│ ├── grafana-dashboardDefinitions.yaml

│ ├── grafana-dashboardSources.yaml

│ ├── grafana-deployment.yaml

│ ├── grafana-pvc.yaml

│ ├── grafana-service.yaml

│ └── grafana-serviceAccount.yaml

├── kube-state-metrics

│ ├── kube-state-metrics-clusterRole.yaml

│ ├── kube-state-metrics-clusterRoleBinding.yaml

│ ├── kube-state-metrics-deployment.yaml

│ ├── kube-state-metrics-service.yaml

│ └── kube-state-metrics-serviceAccount.yaml

├── node-exporter

│ ├── node-exporter-clusterRole.yaml

│ ├── node-exporter-clusterRoleBinding.yaml

│ ├── node-exporter-daemonset.yaml

│ ├── node-exporter-service.yaml

│ └── node-exporter-serviceAccount.yaml

├── prometheus

│ ├── prometheus-clusterRole.yaml

│ ├── prometheus-clusterRoleBinding.yaml

│ ├── prometheus-prometheus.yaml

│ ├── prometheus-roleBindingConfig.yaml

│ ├── prometheus-roleBindingSpecificNamespaces.yaml

│ ├── prometheus-roleConfig.yaml

│ ├── prometheus-roleSpecificNamespaces.yaml

│ ├── prometheus-rules.yaml

│ ├── prometheus-service.yaml

│ └── prometheus-serviceAccount.yaml

├── serviceMonitor

│ ├── alertmanager-serviceMonitor.yaml

│ ├── grafana-serviceMonitor.yaml

│ ├── kube-state-metrics-serviceMonitor.yaml

│ ├── node-exporter-serviceMonitor.yaml

│ ├── prometheus-adapter-serviceMonitor.yaml

│ ├── prometheus-operator-serviceMonitor.yaml

│ ├── prometheus-serviceMonitor.yaml

│ ├── prometheus-serviceMonitorApiserver.yaml

│ ├── prometheus-serviceMonitorCoreDNS.yaml

│ ├── prometheus-serviceMonitorKubeControllerManager.yaml

│ ├── prometheus-serviceMonitorKubeScheduler.yaml

│ └── prometheus-serviceMonitorKubelet.yaml

└── setup

├── 0namespace-namespace.yaml

├── prometheus-operator-0alertmanagerConfigCustomResourceDefinition.yaml

├── prometheus-operator-0alertmanagerCustomResourceDefinition.yaml

├── prometheus-operator-0podmonitorCustomResourceDefinition.yaml

├── prometheus-operator-0probeCustomResourceDefinition.yaml

├── prometheus-operator-0prometheusCustomResourceDefinition.yaml

├── prometheus-operator-0prometheusruleCustomResourceDefinition.yaml

├── prometheus-operator-0servicemonitorCustomResourceDefinition.yaml

├── prometheus-operator-0thanosrulerCustomResourceDefinition.yaml

├── prometheus-operator-clusterRole.yaml

├── prometheus-operator-clusterRoleBinding.yaml

├── prometheus-operator-deployment.yaml

├── prometheus-operator-service.yaml

└── prometheus-operator-serviceAccount.yaml

8 directories, 68 files

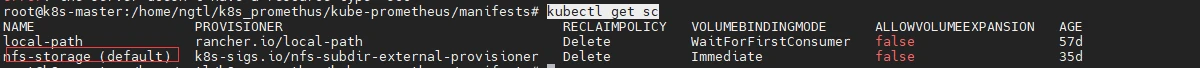

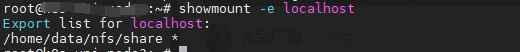

2.查看K8s集群是否安装NFS持久化存储,如果没有则需要安装配置

kubectl get sc

此截图显示已经安装。下面是NFS的安装和部署方法

1).安装NFS服务

Ubuntu:

sudo apt update

sudo apt install nfs-kernel-server

Centos:

yum update

yum -y install nfs-utils

# 创建或使用用已有的文件夹作为nfs文件存储点

mkdir -p /home/data/nfs/share

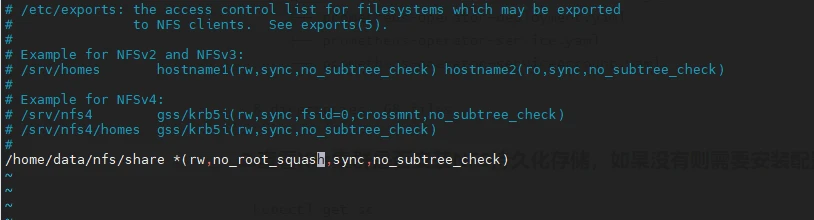

vi /etc/exports

写入如下内容

/home/data/nfs/share *(rw,no_root_squash,sync,no_subtree_check)

# 配置生效并查看是否生效

exportfs -r

exportfs

# 启动rpcbind、nfs服务

#Centos

systemctl restart rpcbind && systemctl enable rpcbind

systemctl restart nfs && systemctl enable nfs

#Ubuntu

systemctl restart rpcbind && systemctl enable rpcbind

systemctl start nfs-kernel-server && systemctl enable nfs-kernel-server

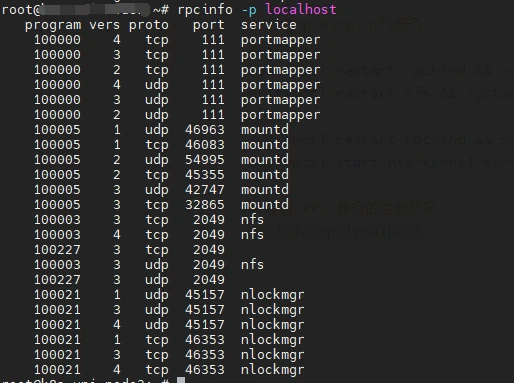

# 查看 RPC 服务的注册状况

rpcinfo -p localhost

# showmount测试

showmount -e localhost

以上都没有问题则说明安装成功

2).k8s注册nfs服务

新建storageclass-nfs.yaml文件,粘贴如下内容:

## 创建了一个存储类

apiVersion: storage.k8s.io/v1

kind: StorageClass #存储类的资源名称

metadata:

name: nfs-storage #存储类的名称,自定义

annotations:

storageclass.kubernetes.io/is-default-class: "true" #注解,是否是默认的存储,注意:KubeSphere默认就需要个默认存储,因此这里注解要设置为“默认”的存储系统,表示为"true",代表默认。

provisioner: k8s-sigs.io/nfs-subdir-external-provisioner #存储分配器的名字,自定义

parameters:

archiveOnDelete: "true" ## 删除pv的时候,pv的内容是否要备份

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-client-provisioner

labels:

app: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

spec:

replicas: 1 #只运行一个副本应用

strategy: #描述了如何用新的POD替换现有的POD

type: Recreate #Recreate表示重新创建Pod

selector: #选择后端Pod

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner #创建账户

containers:

- name: nfs-client-provisioner

image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2 #使用NFS存储分配器的镜像

# resources:

# limits:

# cpu: 10m

# requests:

# cpu: 10m

volumeMounts:

- name: nfs-client-root #定义个存储卷,

mountPath: /persistentvolumes #表示挂载容器内部的路径

env:

- name: PROVISIONER_NAME #定义存储分配器的名称

value: k8s-sigs.io/nfs-subdir-external-provisioner #需要和上面定义的保持名称一致

- name: NFS_SERVER #指定NFS服务器的地址,你需要改成你的NFS服务器的IP地址

value: 192.168.0.0 ## 指定自己nfs服务器地址

- name: NFS_PATH

value: /home/data/nfs/share ## nfs服务器共享的目录 #指定NFS服务器共享的目录

volumes:

- name: nfs-client-root #存储卷的名称,和前面定义的保持一致

nfs:

server: 192.168.0.0 #NFS服务器的地址,和上面保持一致,这里需要改为你的IP地址

path: /home/data/nfs/share #NFS共享的存储目录,和上面保持一致

---

apiVersion: v1

kind: ServiceAccount #创建个SA账号

metadata:

name: nfs-client-provisioner #和上面的SA账号保持一致

# replace with namespace where provisioner is deployed

namespace: default

---

#以下就是ClusterRole,ClusterRoleBinding,Role,RoleBinding都是权限绑定配置,不在解释。直接复制即可。

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

rules:

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

roleRef:

kind: Role

name: leader-locking-nfs-client-provisioner

apiGroup: rbac.authorization.k8s.io

需要修改的就只有服务器地址和共享的目录

创建StorageClass

kubectl apply -f storageclass-nfs.yaml

# 查看是否存在

kubectl get sc

3.修改Prometheus 持久化

vi prometheus/prometheus-prometheus.yaml

在文件末尾新增:

...

serviceMonitorSelector: {}

version: v2.11.0

retention: 3d

storage:

volumeClaimTemplate:

spec:

storageClassName: nfs-storage

resources:

requests:

storage: 5Gi

4.修改grafana持久化配置

#新增garfana的PVC配置文件

vi grafana/grafana-pvc.yaml

完整内容如下:

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: grafana

namespace: monitoring #---指定namespace为monitoring

spec:

storageClassName: nfs-storage #---指定StorageClass

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 5Gi

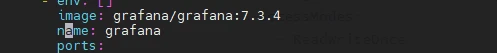

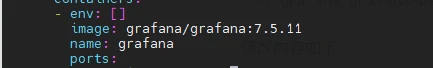

接着修改 grafana-deployment.yaml 文件设置持久化配置,顺便修改Garfana的镜像版本(有些模板不支持7.5以下的Grafana),应用上面的 PVC

vi grafana/grafana-deployment.yaml

修改内容如下:

serviceAccountName: grafana

volumes:

- name: grafana-storage # 新增持久化配置

persistentVolumeClaim:

claimName: grafana # 设置为创建的PVC名称

# - emptyDir: {} # 注释旧的注释

# name: grafana-storage

- name: grafana-datasources

secret:

secretName: grafana-datasources

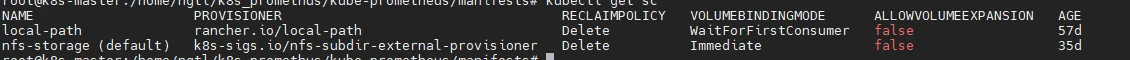

之前的镜像版本

修改后的

5.修改 promethus和Grafana的Service 端口设置

修改 Prometheus Service

vi prometheus/prometheus-service.yaml

修改为如下内容:

apiVersion: v1

kind: Service

metadata:

labels:

prometheus: k8s

name: prometheus-k8s

namespace: monitoring

spec:

type: NodePort

ports:

- name: web

port: 9090

targetPort: web

nodePort: 32101

selector:

app: prometheus

prometheus: k8s

sessionAffinity: ClientIP

修改 Grafana Service

vi grafana/grafana-service.yaml

修改为如下内容:

apiVersion: v1

kind: Service

metadata:

labels:

app: grafana

name: grafana

namespace: monitoring

spec:

type: NodePort

ports:

- name: http

port: 3000

targetPort: http

nodePort: 32102

selector:

app: grafana

三.安装Prometheus

1.安装promethues-operator

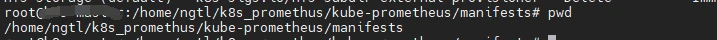

首先保证在manifests目录下

开始安装 Operator:

kubectl apply -f setup/

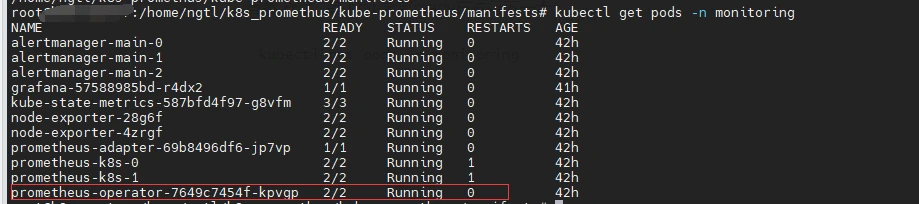

查看 Pod,等 pod 全部ready在进行下一步:

kubectl get pods -n monitoring

2.安装其他所有组件

#依次执行

kubectl apply -f adapter/

kubectl apply -f alertmanager/

kubectl apply -f node-exporter/

kubectl apply -f kube-state-metrics/

kubectl apply -f grafana/

kubectl apply -f prometheus/

kubectl apply -f serviceMonitor/

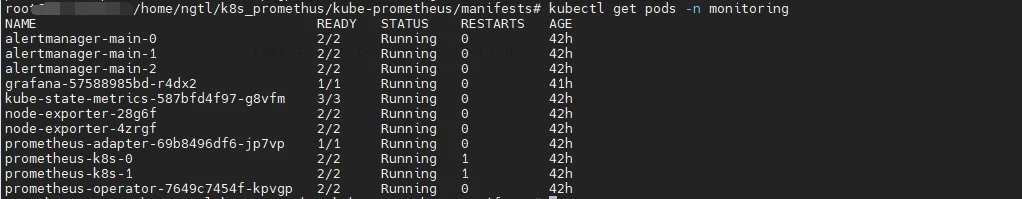

然后查看pod是否创建成功,并等待所有pod处于Running状态

kubectl get pods -n monitoring

3.验证是否安装成功

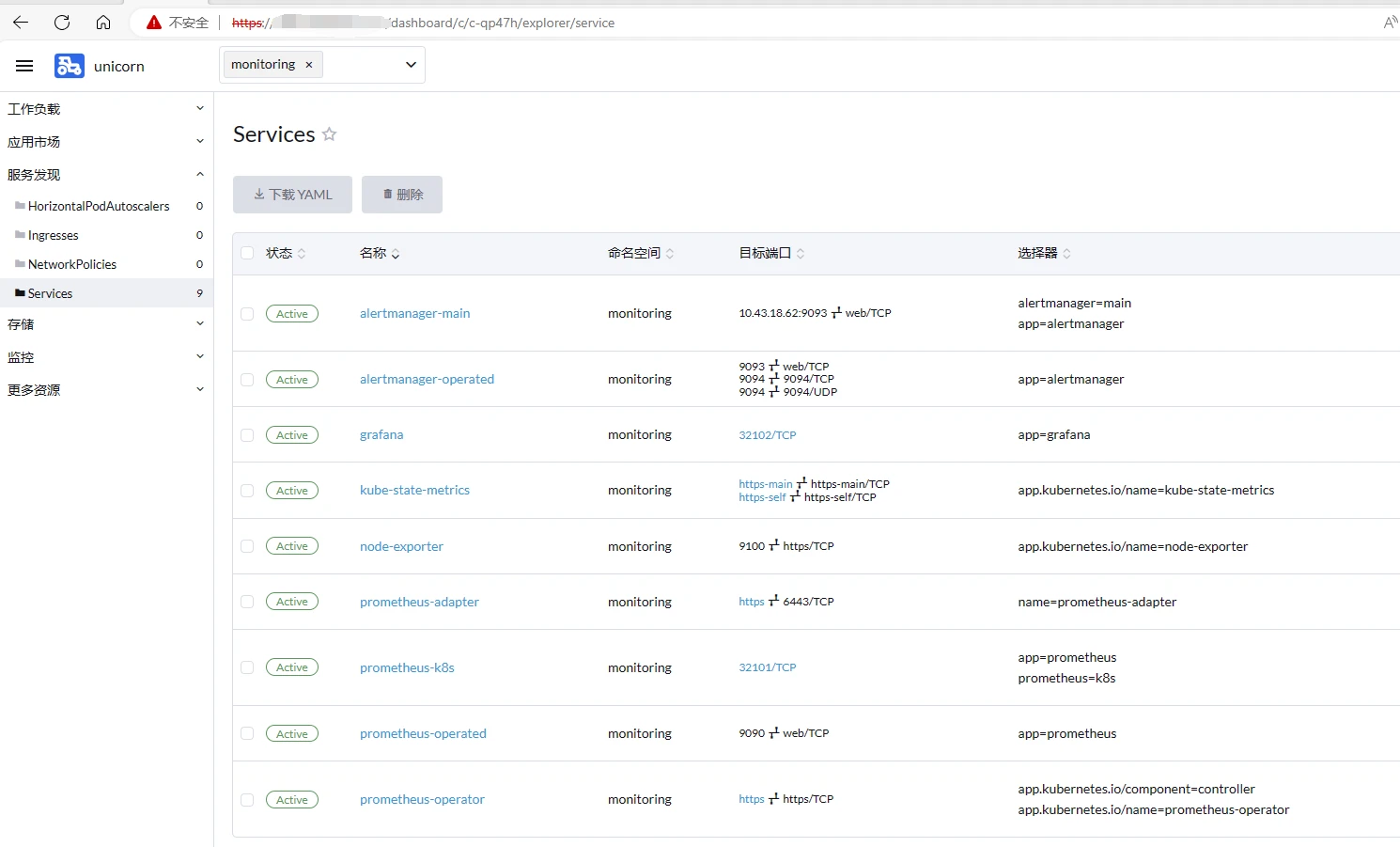

如果知道集群节点地址就可以直接ip:32101访问Prometheus,如果不知道则可以访问Rancher管理界面,命名空间选择monitoring。在Services中找到,prometheus-k8s和grafana然后鼠标点击目标端口就可以访问。

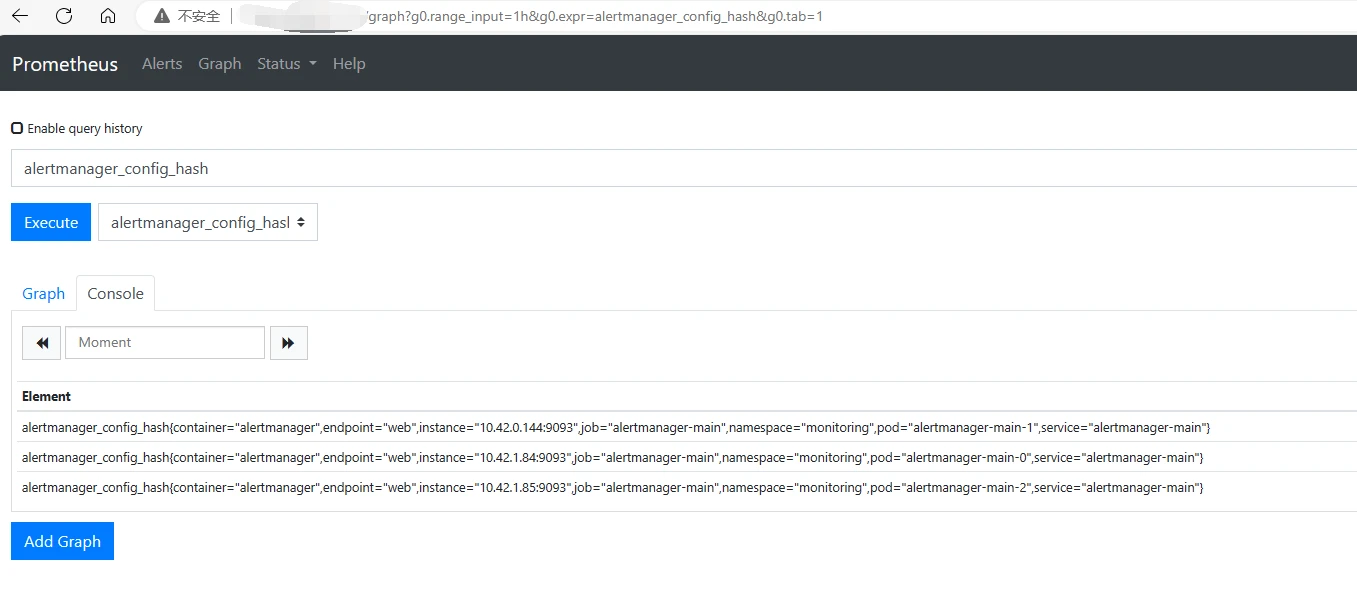

在Prometheus界面随便测试一个函数,查看是否能够正常使用。

然后登录Grafana

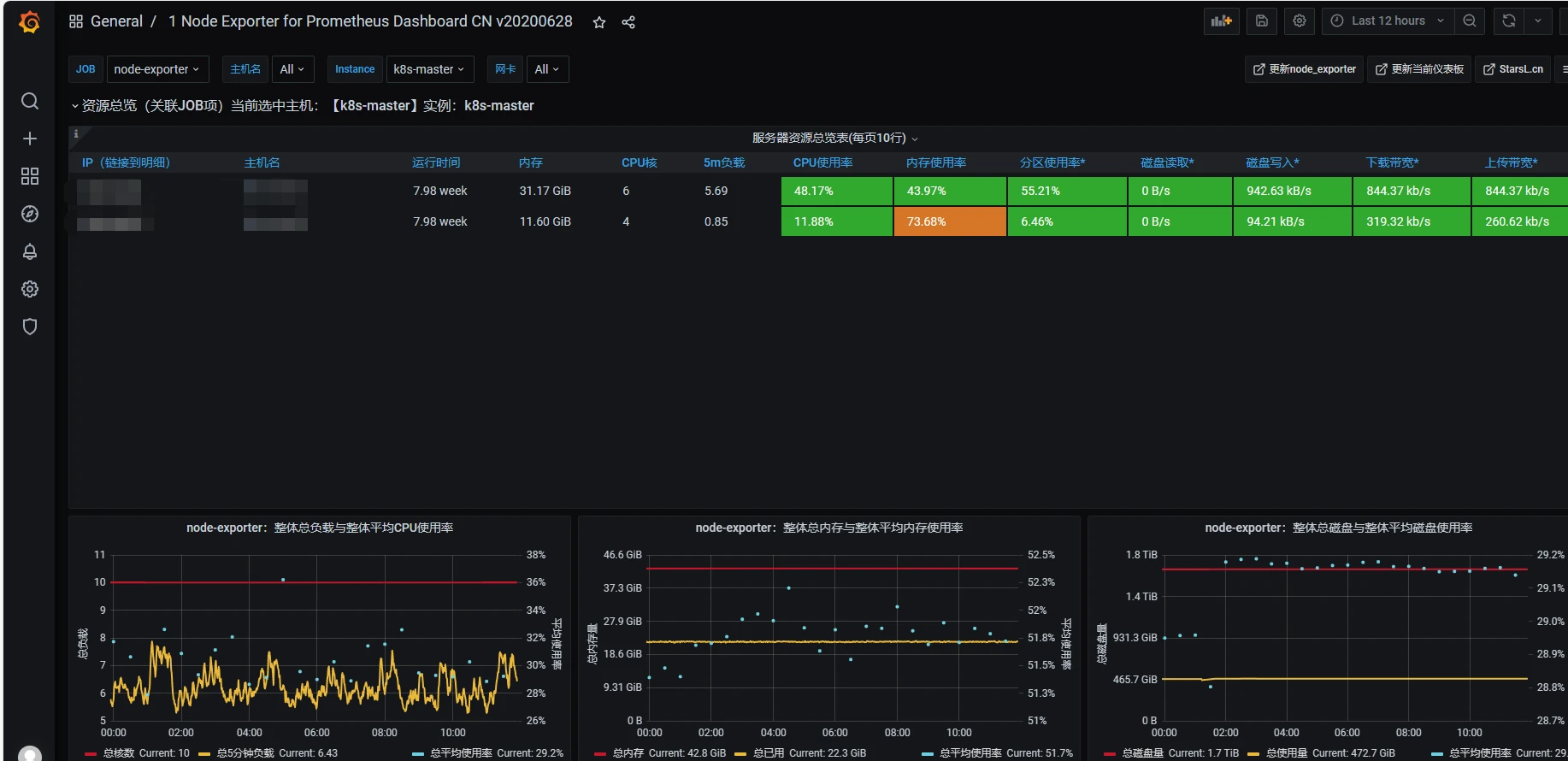

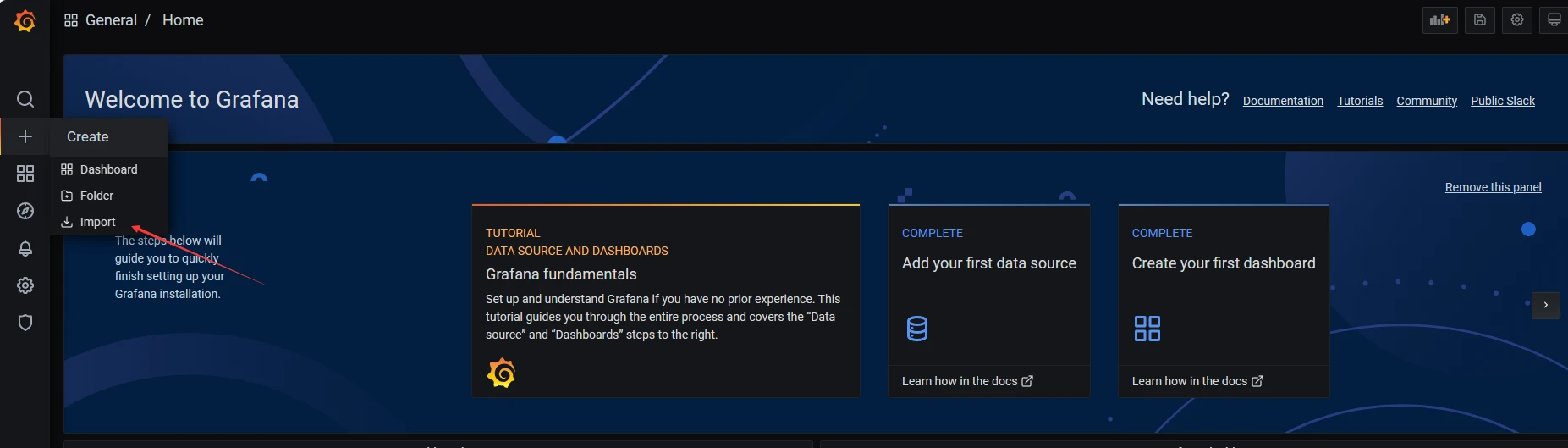

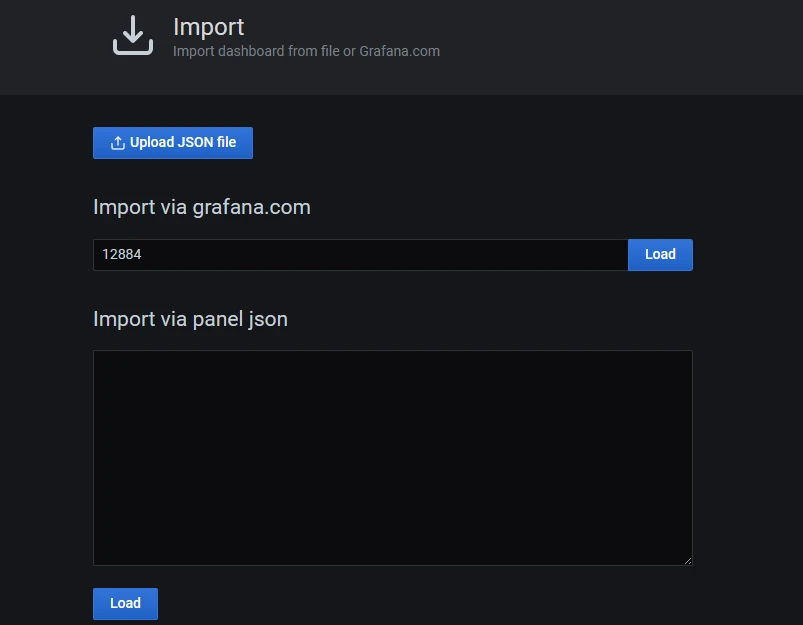

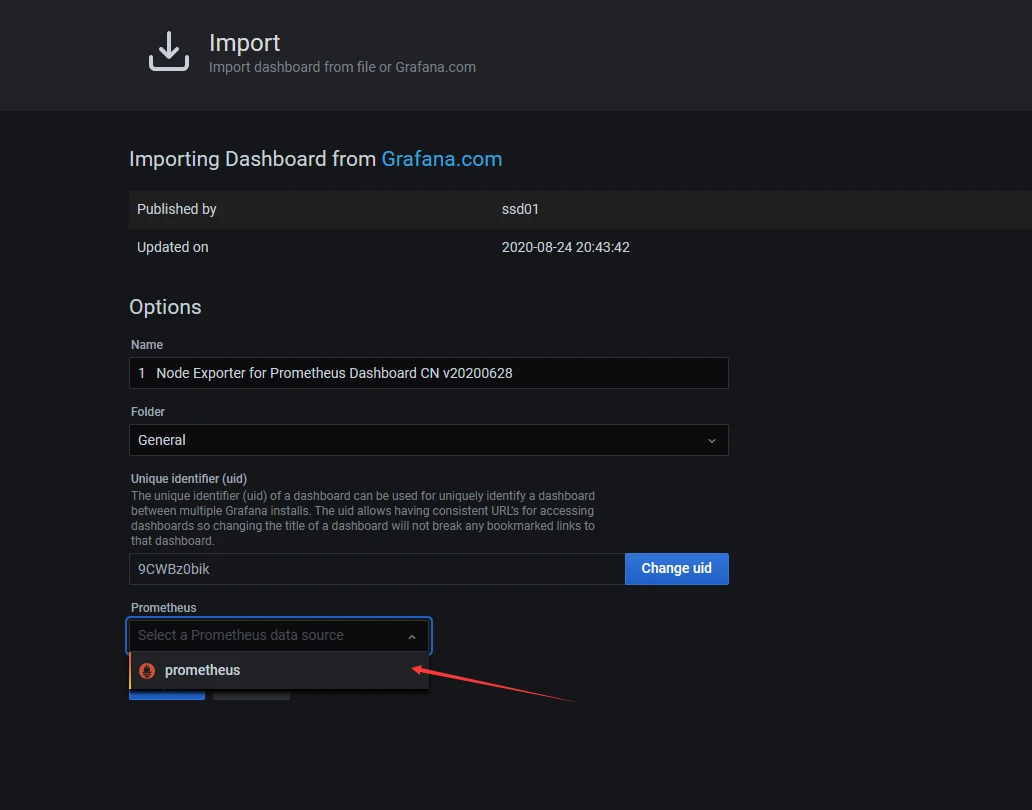

默认用户名和密码是admin/admin,第一次登陆会提示修改密码。进入Grafana后,导入模板测试。推荐的模板ID有,12884和13105

效果图: