HWI (Hive Web Interface) is a web-based alternative to the Hive CLI. It lets you browse Hive data through a web page instead of the command line.

Switch to the hadoop user and start the cluster

[root@master ~]# su - hadoop

Last login: Tue May 2 13:18:34 CST 2023 on pts/0

[hadoop@master ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out

192.168.88.30: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out

192.168.88.20: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out

192.168.88.20: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out

192.168.88.30: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out

[hadoop@master ~]$ jps

1296 NameNode

1488 SecondaryNameNode

1904 Jps

1645 ResourceManager

On slave1 and slave2, confirm that DataNode and NodeManager are running:

[root@slave1 ~]# su - hadoop

Last login: Thu Apr 6 22:41:30 CST 2023 on pts/0

[hadoop@slave1 ~]$ jps

1216 DataNode

1286 NodeManager

1321 Jps

[root@slave2 ~]# su - hadoop

Last login: Thu Apr 6 22:41:30 CST 2023 on pts/0

[hadoop@slave2 ~]$ jps

1282 NodeManager

1428 Jps

1212 DataNode
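With several nodes, it is easy to misread jps output by eye. The bash sketch below is one way to script the check. missing_daemons is a hypothetical helper, not part of Hadoop. It reads jps output on stdin and prints every expected daemon name that is not listed, so empty output means all of them are up.

```bash
#!/usr/bin/env bash
# missing_daemons: hypothetical helper, not shipped with Hadoop.
# Reads `jps` output ("<pid> <MainClass>" per line) from stdin and prints
# each expected daemon name (given as arguments) that does not appear.
missing_daemons() {
  local out d
  out=$(cat)   # capture the jps output once
  for d in "$@"; do
    # Compare the whole second field so "NameNode" does not match "SecondaryNameNode"
    if ! printf '%s\n' "$out" | awk -v n="$d" '$2 == n { found = 1 } END { exit (found ? 0 : 1) }'; then
      printf '%s\n' "$d"
    fi
  done
}

# Usage on master (prints nothing when all three are running):
#   jps | missing_daemons NameNode SecondaryNameNode ResourceManager
# Usage on slave1 / slave2:
#   jps | missing_daemons DataNode NodeManager
```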

Check the listening ports

[hadoop@master ~]$ ss -antlu

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port

tcp LISTEN 0 128 192.168.88.10:9000 *:*

tcp LISTEN 0 128 *:50090 *:*

tcp LISTEN 0 128 *:50070 *:*

tcp LISTEN 0 128 *:22 *:*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8030 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8031 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8032 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8033 :::*

tcp LISTEN 0 80 :::3306 :::*

tcp LISTEN 0 128 :::22 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8088 :::*
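The port check can be scripted the same way. This is a minimal sketch that assumes the ss output layout shown above, where the local address is in the fifth column. port_listening is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# port_listening: hypothetical helper (not part of Hadoop or iproute2).
# Reads `ss -antlu` output from stdin and succeeds if a LISTEN socket's
# local address ends exactly in ":<port>".
port_listening() {
  local port=$1
  # Columns: Netid State Recv-Q Send-Q Local-Address:Port Peer-Address:Port
  awk -v p=":$port" '
    $2 == "LISTEN" {
      addr = $5
      # Exact suffix match, so port 80 does not match 8080
      if (length(addr) >= length(p) && substr(addr, length(addr) - length(p) + 1) == p) found = 1
    }
    END { exit (found ? 0 : 1) }'
}

# Usage: check the HDFS and YARN ports listed above
#   out=$(ss -antlu)
#   for p in 9000 50070 50090 8088; do
#     if printf '%s\n' "$out" | port_listening "$p"; then echo "$p up"; else echo "$p DOWN"; fi
#   done
```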

Download the Hive 2.0.0 source package (apache-hive-2.0.0-src.tar.gz), which contains the hwi module

[root@master ~]# wget https://archive.apache.org/dist/hive/hive-2.0.0/apache-hive-2.0.0-src.tar.gz

--2023-05-06 10:21:29-- https://archive.apache.org/dist/hive/hive-2.0.0/apache-hive-2.0.0-src.tar.gz

Resolving archive.apache.org (archive.apache.org)... 138.201.131.134, 2a01:4f8:172:2ec5::2

Connecting to archive.apache.org (archive.apache.org)|138.201.131.134|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 15779309 (15M) [application/x-gzip]

Saving to: ‘apache-hive-2.0.0-src.tar.gz’

100%[==============================================================================================================================>] 15,779,309 681KB/s in 19s

2023-05-06 10:21:49 (825 KB/s) - ‘apache-hive-2.0.0-src.tar.gz’ saved [15779309/15779309]

[root@master ~]# ls

apache-flume-1.6.0-bin.tar.gz hadoop-2.7.1.tar.gz mysql ntlu zookeeper-3.4.8.tar.gz

apache-hive-2.0.0-bin.tar.gz hbase-1.2.1-bin.tar.gz mysql-5.7.18.zip sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

apache-hive-2.0.0-src.tar.gz jdk-8u152-linux-x64.tar.gz mysql-connector-java-5.1.46.jar student.java

Extract the package, build the .war file, and configure hive-site.xml

# Extract the source package

[root@master ~]# tar -xf apache-hive-2.0.0-src.tar.gz

[root@master ~]# ls

apache-flume-1.6.0-bin.tar.gz mysql

apache-hive-2.0.0-bin.tar.gz mysql-5.7.18.zip

apache-hive-2.0.0-src mysql-connector-java-5.1.46.jar

apache-hive-2.0.0-src.tar.gz ntlu

hadoop-2.7.1.tar.gz sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

hbase-1.2.1-bin.tar.gz student.java

jdk-8u152-linux-x64.tar.gz zookeeper-3.4.8.tar.gz

[root@master ~]# cd apache-hive-2.0.0-src

[root@master apache-hive-2.0.0-src]# ls

accumulo-handler contrib hwi llap-tez README.txt

ant data itests metastore RELEASE_NOTES.txt

beeline dev-support jdbc NOTICE serde

bin docs lib odbc service

checkstyle findbugs LICENSE orc shims

cli hbase-handler llap-client packaging spark-client

common hcatalog llap-common pom.xml storage-api

conf hplsql llap-server ql testutils

[root@master apache-hive-2.0.0-src]# cd hwi/

[root@master hwi]# ls

pom.xml src web

[root@master hwi]#

# Package the web directory as a .war file, copy it to /usr/local/src/hive/lib/, and give ownership to the hadoop user and group

[root@master hwi]# jar -Mcf hive-hwi-2.0.0.war -C web .

[root@master hwi]# ls

hive-hwi-2.0.0.war pom.xml src web

[root@master hwi]# cp hive-hwi-2.0.0.war /usr/local/src/hive/lib/

[root@master hwi]# chown -R hadoop.hadoop /usr/local/src/

# Add the following property to hive-site.xml

<property>

<name>hive.hwi.war.file</name>

<value>lib/hive-hwi-2.0.0.war</value> <!-- edit this line: path of the .war file relative to ${HIVE_HOME} -->

<description>This sets the path to the HWI war file, relative to ${HIVE_HOME}. </description>

</property>
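You can check the property against the file system before starting HWI, which catches a wrong path early. This is a rough sketch with some limits. hwi_war_check is hypothetical. It uses sed rather than a real XML parser, and it assumes `<name>` and `<value>` sit on separate lines as in the snippet above. The value is resolved relative to ${HIVE_HOME}, as the property description states.

```bash
#!/usr/bin/env bash
# hwi_war_check: hypothetical helper. Prints the resolved .war path if the
# hive.hwi.war.file property is set and the file exists under HIVE_HOME.
# Assumption: <name> and <value> are on their own lines (sed sketch, not an XML parser).
hwi_war_check() {
  local site=$1 home=$2 rel
  # Between the matching <name> line and </property>, print what is inside <value>...</value>
  rel=$(sed -n '/<name>hive\.hwi\.war\.file<\/name>/,/<\/property>/ s|.*<value>\(.*\)</value>.*|\1|p' "$site" | head -n 1)
  if [ -z "$rel" ]; then
    echo "hive.hwi.war.file not set in $site" >&2
    return 1
  fi
  if [ ! -f "$home/$rel" ]; then
    echo "missing: $home/$rel" >&2
    return 1
  fi
  printf '%s\n' "$home/$rel"
}

# Usage (the conf/ location of hive-site.xml is an assumption):
#   hwi_war_check /usr/local/src/hive/conf/hive-site.xml /usr/local/src/hive
```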

HWI needs Apache Ant to compile its JSP pages, so Ant must be installed

[hadoop@master ~]$ wget https://archive.apache.org/dist/ant/binaries/apache-ant-1.9.1-bin.tar.gz

--2023-05-06 10:43:38-- https://archive.apache.org/dist/ant/binaries/apache-ant-1.9.1-bin.tar.gz

Resolving archive.apache.org (archive.apache.org)... 138.201.131.134, 2a01:4f8:172:2ec5::2

Connecting to archive.apache.org (archive.apache.org)|138.201.131.134|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 5508934 (5.3M) [application/x-gzip]

Saving to: ‘apache-ant-1.9.1-bin.tar.gz’

100%[==============================================================================================================================>] 5,508,934 76.1KB/s in 84s

2023-05-06 10:45:03 (64.0 KB/s) - ‘apache-ant-1.9.1-bin.tar.gz’ saved [5508934/5508934]

Extract the archive to /usr/local/src, rename the directory to ant, and give ownership to the hadoop user

[root@master ~]# ls

apache-ant-1.9.1-bin.tar.gz apache-hive-2.0.0-src hbase-1.2.1-bin.tar.gz mysql-5.7.18.zip sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

apache-flume-1.6.0-bin.tar.gz apache-hive-2.0.0-src.tar.gz jdk-8u152-linux-x64.tar.gz mysql-connector-java-5.1.46.jar student.java

apache-hive-2.0.0-bin.tar.gz hadoop-2.7.1.tar.gz mysql ntlu zookeeper-3.4.8.tar.gz

[root@master ~]# tar -xf apache-ant-1.9.1-bin.tar.gz -C /usr/local/src/

[root@master ~]# cd /usr/local/src/

[root@master src]# ls

apache-ant-1.9.1 flume hadoop hbase hive jdk sqoop zookeeper

[root@master src]# mv apache-ant-1.9.1/ ant

[root@master src]# ls

ant flume hadoop hbase hive jdk sqoop zookeeper

[root@master src]# chown -R hadoop.hadoop /usr/local/src/

Configure the environment variables

[root@master ~]# vim /etc/profile.d/ant.sh

export ANT_HOME=/usr/local/src/ant

export PATH=${ANT_HOME}/bin:$PATH
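After you source the script or log in again, a small function can confirm the result. This is a minimal sketch. check_ant_env is a hypothetical helper, and it assumes the standard Ant binary layout (bin/ant and lib/ant.jar).

```bash
#!/usr/bin/env bash
# check_ant_env: hypothetical helper. Confirms ANT_HOME is set, contains an
# executable bin/ant and lib/ant.jar, and that `ant` on PATH is that copy.
check_ant_env() {
  if [ -z "${ANT_HOME:-}" ]; then
    echo "ANT_HOME is not set" >&2; return 1
  fi
  [ -x "$ANT_HOME/bin/ant" ] || { echo "no executable $ANT_HOME/bin/ant" >&2; return 1; }
  [ -f "$ANT_HOME/lib/ant.jar" ] || { echo "no $ANT_HOME/lib/ant.jar" >&2; return 1; }
  local found
  found=$(command -v ant) || { echo "ant is not on PATH" >&2; return 1; }
  # Another ant earlier on PATH would shadow this one
  [ "$found" = "$ANT_HOME/bin/ant" ] || { echo "PATH resolves ant to $found" >&2; return 1; }
}

# Usage:
#   source /etc/profile.d/ant.sh && check_ant_env && ant -version
```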

Switch to the hadoop user and check the Ant version

[root@master ~]# su - hadoop

Last login: Sat May 6 10:35:36 CST 2023 on pts/0

[hadoop@master ~]$ ant -version

Apache Ant(TM) version 1.9.1 compiled on May 15 2013

Start Hive's HWI service

[root@master ~]# hive --service hwi

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

# The command blocks at this point; check the ports from another terminal

[root@master ~]# ss -antlu

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port

tcp LISTEN 0 128 192.168.88.10:9000 *:*

tcp LISTEN 0 128 *:50090 *:*

tcp LISTEN 0 50 *:9999 *:*

tcp LISTEN 0 128 *:50070 *:*

tcp LISTEN 0 128 *:22 *:*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8030 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8031 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8032 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8033 :::*

tcp LISTEN 0 80 :::3306 :::*

tcp LISTEN 0 128 :::22 :::*

tcp LISTEN 0 128 ::ffff:192.168.88.10:8088 :::*

# Port 9999 is now listening

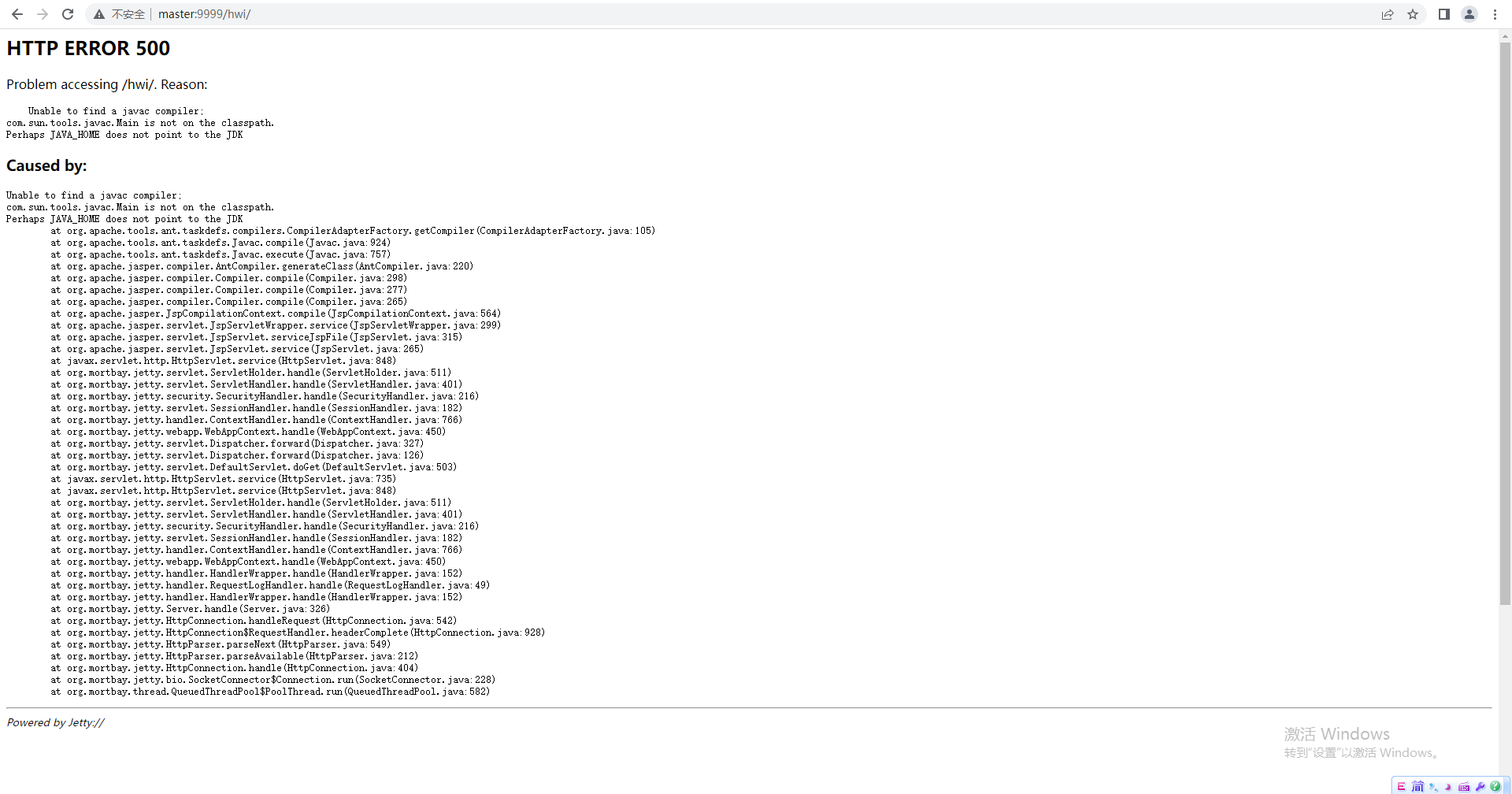

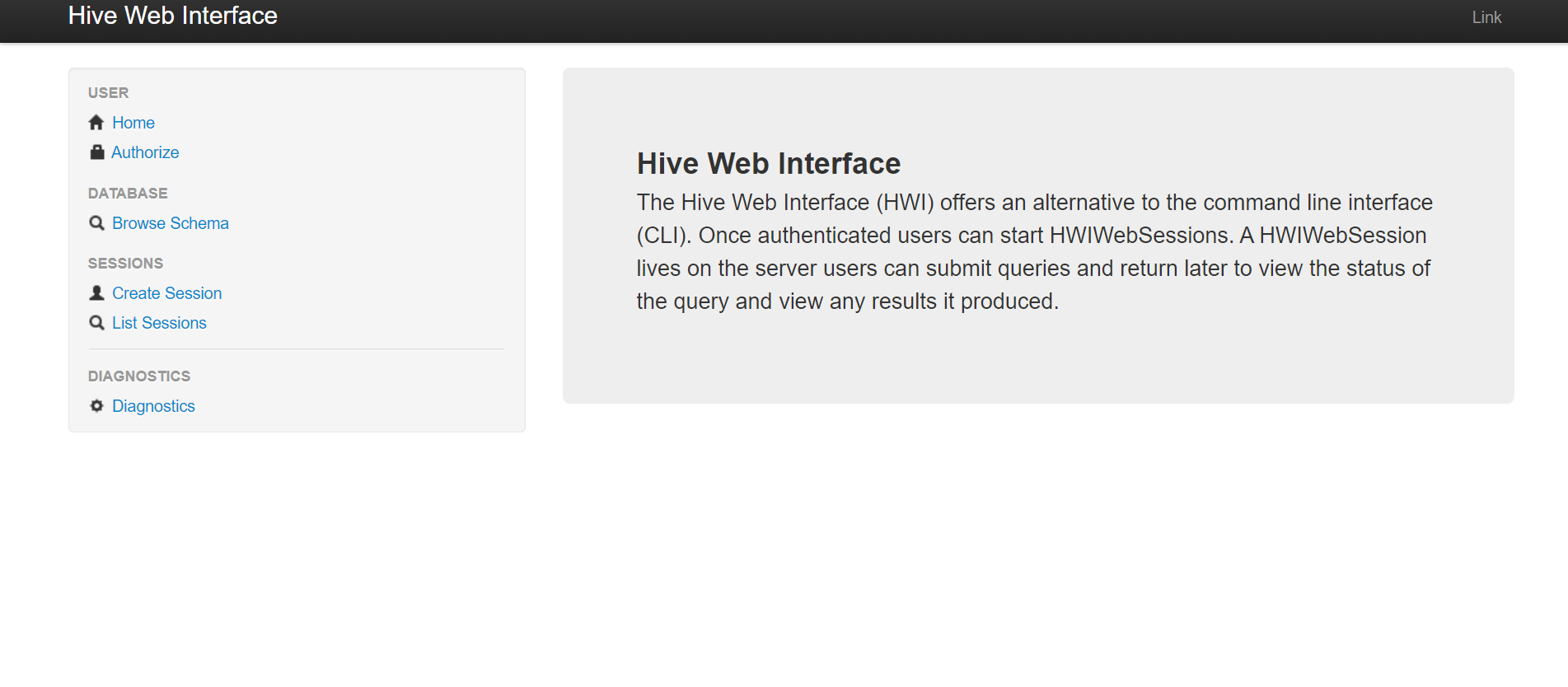

Open http://master:9999/hwi in a browser (if the hostname master is not mapped in your hosts file, replace master with the IP address)

Copy ant.jar and tools.jar into Hive's lib directory

[root@master ~]# cp /usr/local/src/jdk/lib/tools.jar /usr/local/src/hive/lib/

[root@master ~]# ll /usr/local/src/hive/lib/tools.jar

-rw-r--r-- 1 root root 18290333 May 8 16:54 /usr/local/src/hive/lib/tools.jar

[root@master ~]# cp /usr/local/src/ant/lib/ant.jar /usr/local/src/hive/lib/

[root@master ~]# ll /usr/local/src/hive/lib/ant.jar

-rw-r--r-- 1 root root 1997485 May 8 16:56 /usr/local/src/hive/lib/ant.jar

[root@master ~]# chown -R hadoop.hadoop /usr/local/src/

[root@master ~]# ll /usr/local/src/hive/lib/tools.jar

-rw-r--r-- 1 hadoop hadoop 18290333 May 8 16:54 /usr/local/src/hive/lib/tools.jar

[root@master ~]# ll /usr/local/src/hive/lib/ant.jar

-rw-r--r-- 1 hadoop hadoop 1997485 May 8 16:56 /usr/local/src/hive/lib/ant.jar
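You can wrap the two copies in a function that fails loudly when a source jar is missing. This is a sketch, and copy_hwi_deps is hypothetical. tools.jar only exists in JDK 8 and earlier (it was removed in JDK 9), which matches the jdk-8u152 used here.

```bash
#!/usr/bin/env bash
# copy_hwi_deps: hypothetical helper repeating the two cp commands above with checks.
# Arguments: JDK home, Ant home, Hive home.
copy_hwi_deps() {
  local jdk=$1 ant=$2 hive=$3 src
  for src in "$jdk/lib/tools.jar" "$ant/lib/ant.jar"; do
    if [ ! -f "$src" ]; then
      echo "not found: $src" >&2
      return 1
    fi
    cp "$src" "$hive/lib/" || return 1
  done
}

# Usage as root, followed by the same ownership fix as in the transcript:
#   copy_hwi_deps /usr/local/src/jdk /usr/local/src/ant /usr/local/src/hive &&
#     chown -R hadoop:hadoop /usr/local/src/
```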

Start HWI again (first stop the previous foreground instance with Ctrl+C)

# Switch to the hadoop user and run the command

[hadoop@master ~]$ hive --service hwi

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

# Blocking here is normal; go to the browser
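hive --service hwi stays in the foreground, so one alternative is to start it in the background with nohup and send its output to a log file. This is a minimal sketch. start_bg and the log and PID file names are hypothetical choices, not Hive conventions.

```bash
#!/usr/bin/env bash
# start_bg: hypothetical helper. Starts a command detached from the terminal
# with nohup, appends stdout and stderr to a log file, and records the PID.
start_bg() {
  local log=$1 pidfile=$2
  shift 2
  nohup "$@" >>"$log" 2>&1 &
  echo $! >"$pidfile"
}

# Usage as the hadoop user (file names are arbitrary):
#   start_bg ~/hwi.log ~/hwi.pid hive --service hwi
#   tail -f ~/hwi.log   # watch the startup messages
# The recorded PID belongs to the command nohup started; confirm it with ps
# before using it to stop HWI.
```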

Refresh the page in the browser; it may take several refreshes before the page loads

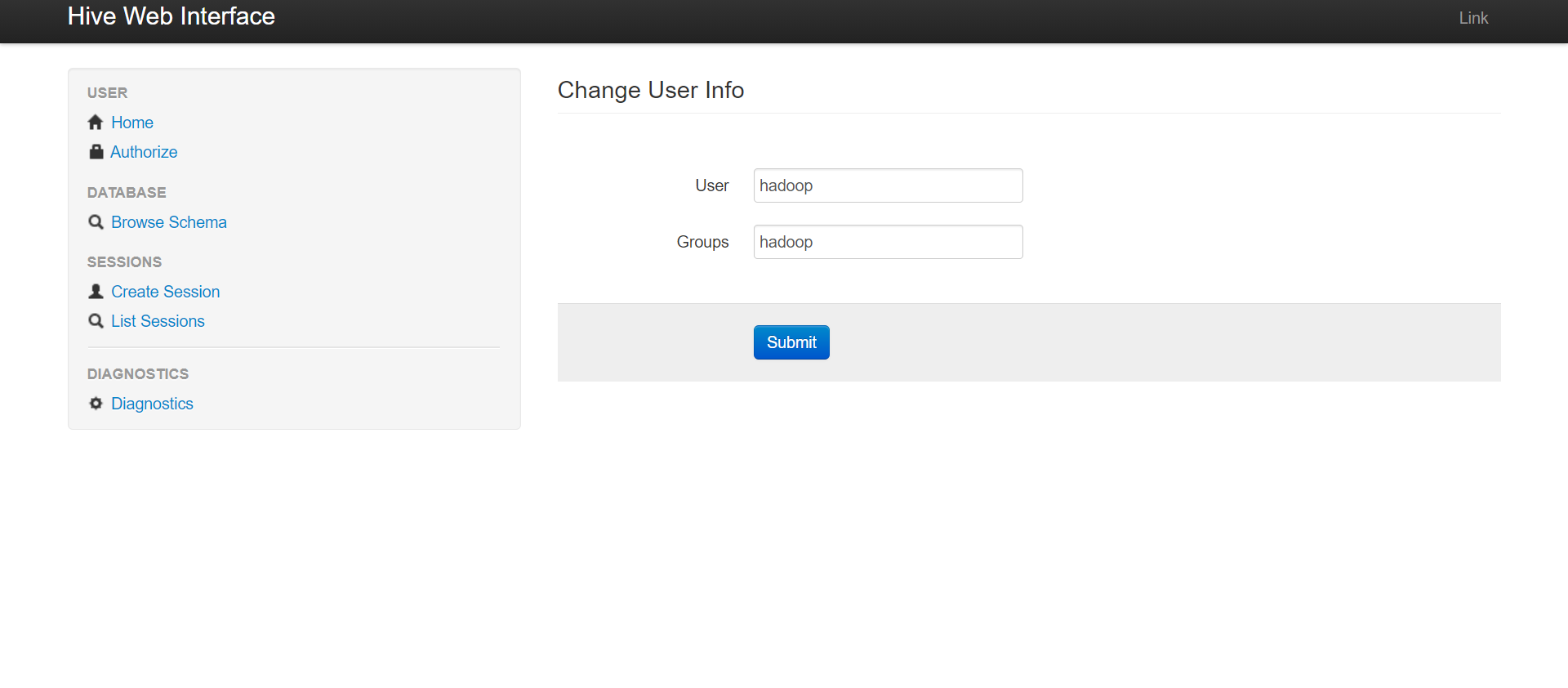
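Instead of refreshing the browser by hand, you can poll the page with curl until it returns HTTP 200. This is a sketch. wait_for_http is a hypothetical helper, and the attempt count and delay are arbitrary defaults.

```bash
#!/usr/bin/env bash
# wait_for_http: hypothetical helper. Polls a URL until it answers HTTP 200
# or the attempts run out.
wait_for_http() {
  local url=$1 tries=${2:-10} delay=${3:-3} code i
  for ((i = 1; i <= tries; i++)); do
    # -s silent, -L follow redirects, -o discard the body, -w print only the
    # final status code; curl prints 000 when it cannot connect at all
    code=$(curl -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$url")
    if [ "$code" = "200" ]; then
      echo "up after $i attempt(s)"
      return 0
    fi
    echo "attempt $i: HTTP $code" >&2
    if [ "$i" -lt "$tries" ]; then sleep "$delay"; fi
  done
  return 1
}

# Usage (replace master with the IP if the hostname is not mapped):
#   wait_for_http http://master:9999/hwi/ 20 3
```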

Grant permissions to the hadoop user