Integrating the StarRocks Analytical Database with DataSophon

Official StarRocks documentation: StarRocks | StarRocks

StarRocks download page: Download StarRocks Free | StarRocks. Choose the version you want to install; this guide uses StarRocks-3.2.1.tar.gz.

wget https://releases.starrocks.io/starrocks/StarRocks-3.2.1.tar.gz

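Prepare the StarRocks deployment scripts

Change the Java environment used by StarRocks

- The FE in recent StarRocks releases requires JDK 11 or later, while the big data platform installs JDK 1.8 by default, so an additional JDK and extra startup settings are needed.

Download JDK 11 or later and place it on the server at /opt/jdk-11.0.0.1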
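Before pointing StarRocks at the extra JDK, it can help to confirm that it really is version 11 or later. The following is a minimal sketch; the helper names `java_major_version` and `jdk_is_supported` are made up for this example and are not part of StarRocks or DataSophon.

```bash
#!/bin/bash
# Print the major Java version for a version string such as "1.8.0_292" or "11.0.2".
java_major_version() {
    local v="$1"
    # Legacy scheme: "1.8.0_292" means Java 8, so drop the leading "1."
    case "$v" in
        1.*) v="${v#1.}" ;;
    esac
    # Keep everything before the first '.', '_' or '-'
    echo "${v%%[._-]*}"
}

# Return 0 when the JDK under the given directory is version 11 or later.
jdk_is_supported() {
    local java_bin="$1/bin/java" version
    [ -x "$java_bin" ] || return 1
    # `java -version` writes to stderr, e.g.: openjdk version "11.0.2" 2019-01-15
    version=$("$java_bin" -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')
    [ "$(java_major_version "$version")" -ge 11 ] 2>/dev/null
}
```

For example, `jdk_is_supported /opt/jdk-11.0.0.1 && echo "JDK is new enough"` prints the message only when the JDK at that path reports version 11 or higher.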

Extract the package

tar -zxvf StarRocks-3.2.1.tar.gz

Edit the FE environment script fe/bin/common.sh

Set JAVA_HOME at the very top of the jdk_version function

jdk_version() {

JAVA_HOME=/opt/jdk-11.0.0.1 # added: JAVA_HOME

local result

local java_cmd=$JAVA_HOME/bin/java

local IFS=$'\n'

# remove \r for Cygwin

...........

}

Edit the startup script fe/bin/start_fe.sh

JAVA_HOME=/opt/jdk-11.0.0.1 # added: JAVA_HOME

# java

if [[ -z ${JAVA_HOME} ]]; then

if command -v javac &> /dev/null; then

export JAVA_HOME="$(dirname $(dirname $(readlink -f $(which javac))))"

echo "Infered JAVA_HOME=$JAVA_HOME"

else

cat <<

..................
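The manual edit to start_fe.sh can also be scripted so that it is safe to repeat. This is only a sketch: `add_java_home` is a hypothetical helper, it assumes GNU sed, and it places the assignment on line 2 (right after the shebang) rather than directly above the `# java` block. It does not cover common.sh, where the assignment belongs inside the jdk_version function.

```bash
#!/bin/bash
# Insert a JAVA_HOME assignment as line 2 of a script,
# unless a line already starts with "JAVA_HOME=".
# Assumes GNU sed (for -i and the one-line form of the "a" command).
add_java_home() {
    local script="$1" jdk_home="$2"
    if grep -q '^JAVA_HOME=' "$script"; then
        return 0
    fi
    sed -i "1a JAVA_HOME=$jdk_home" "$script"
}
```

Usage would look like `add_java_home fe/bin/start_fe.sh /opt/jdk-11.0.0.1`; running it a second time leaves the file unchanged.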

Add an FE status-check script, fe/bin/status_fe.sh. Usage: ./status_fe.sh status

#!/bin/bash

usage="Usage: status_fe.sh status"

# if no args specified, show usage

if [ $# -lt 1 ]; then

echo $usage

exit 1

fi

startStop=$1

shift

SH_DIR=`dirname $0`

echo $SH_DIR

export PID_DIR=$SH_DIR

pid=$PID_DIR/fe.pid

status(){

if [ -f $pid ]; then

TARGET_PID=`cat $pid`

# kill -0 sends no signal; it only checks whether the process exists

kill -0 $TARGET_PID 2>/dev/null

if [ $? -eq 0 ]

then

echo "SR fe pid $TARGET_PID is running"

else

echo "SR fe pid $TARGET_PID is not running"

exit 1

fi

else

echo "SR fe pid file $pid does not exist"

exit 1

fi

}

case $startStop in

(status)

status

;;

(*)

echo $usage

exit 1

;;

esac

echo "End $startStop."
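The status script depends on two things: the pid file written by the start script, and `kill -0`, which tests for a process without signalling it. A stripped-down sketch of that logic is shown below. The function name `check_pid_file` and the three-way exit code are choices made for this illustration; the script above only distinguishes 0 from 1.

```bash
#!/bin/bash
# Exit codes: 0 = process alive, 1 = pid file present but process gone, 2 = no pid file.
check_pid_file() {
    local pid_file="$1" pid
    [ -f "$pid_file" ] || return 2
    pid=$(cat "$pid_file")
    # kill -0 delivers no signal; it only reports whether the PID can be signalled
    if kill -0 "$pid" 2>/dev/null; then
        return 0
    fi
    return 1
}
```

A non-zero exit code is what lets a caller treat the role as stopped, which is why the status scripts end the failure branches with `exit 1`.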

Add a BE status-check script, be/bin/status_be.sh. Usage: ./status_be.sh status

#!/bin/bash

usage="Usage: status_be.sh status"

# if no args specified, show usage

if [ $# -lt 1 ]; then

echo $usage

exit 1

fi

startStop=$1

shift

SH_DIR=`dirname $0`

echo $SH_DIR

export PID_DIR=$SH_DIR

pid=$PID_DIR/be.pid

status(){

if [ -f $pid ]; then

TARGET_PID=`cat $pid`

# kill -0 sends no signal; it only checks whether the process exists

kill -0 $TARGET_PID 2>/dev/null

if [ $? -eq 0 ]

then

echo "SR be pid $TARGET_PID is running"

else

echo "SR be pid $TARGET_PID is not running"

exit 1

fi

else

echo "SR be pid file $pid does not exist"

exit 1

fi

}

case $startStop in

(status)

status

;;

(*)

echo $usage

exit 1

;;

esac

echo "End $startStop."

Repackage the deployment files and generate an MD5 checksum; the MD5 value is required during deployment

tar -czvf StarRocks-3.2.1.tar.gz StarRocks-3.2.1

md5sum StarRocks-3.2.1.tar.gz

56470a0a0d4aac2c8dcf3d85187aede1 StarRocks-3.2.1.tar.gz

echo 56470a0a0d4aac2c8dcf3d85187aede1 > StarRocks-3.2.1.tar.gz.md5
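Because the .md5 file written above holds only the bare hash, `md5sum -c` (which expects a hash followed by a file name) cannot read it directly. A small comparison like the following can confirm that the package and its checksum file still agree after copying them around. The function name `verify_md5` is hypothetical.

```bash
#!/bin/bash
# Compare a package against the bare hash stored in <package>.md5.
verify_md5() {
    local pkg="$1" expected actual
    # Strip whitespace and the trailing newline from the stored hash
    expected=$(tr -d '[:space:]' < "$pkg.md5")
    actual=$(md5sum "$pkg" | awk '{print $1}')
    [ "$expected" = "$actual" ]
}
```

For example: `verify_md5 StarRocks-3.2.1.tar.gz && echo "checksum matches"`.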

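Prepare the service configuration templates

The service configuration templates live inside datasophon-worker. Add fe.ftl and be.ftl, repackage, and reinstall (fe.ftl already ships with datasophon-worker and only needs to be modified).

Alternatively, add the templates directly to the datasophon-worker configuration directory on every node.

Template path: ./conf/templates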

[root@datasophon-test templates]# pwd

/opt/datasophon/datasophon-worker/conf/templates

[root@datasophon-test templates]# ls

alertmanager.yml doris_fe.ftl jaas.ftl kafka-server-start.ftl properties3.ftl scrape.ftl xml.ftl

alert.yml fair-scheduler.ftl jvm.config.ftl kdc.ftl properties.ftl singleValue.ftl zk-env.ftl

be.ftl fe.ftl jvm.options.ftl krb5.ftl ranger-hbase.ftl spark-env.ftl zk-jaas.ftl

capacity-scheduler.ftl hadoop-env.ftl kadm5.ftl kyuubi-env.ftl ranger-hdfs.ftl starrocks-prom.ftl

dinky.ftl hbase-env.ftl kafka-jaas.ftl prometheus.ftl ranger-hive.ftl streampark.ftl

dolphinscheduler_env.ftl hive-env.ftl

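Contents of fe.ftl:

Note: the .ftl files are FreeMarker templates, and FreeMarker treats ${...} as its own interpolation. Shell-style variables must therefore be wrapped in a raw string. For example, ${STARROCKS_HOME}/log must be written as ${r"${STARROCKS_HOME}/log"}, otherwise the installation fails.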

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing,

# software distributed under the License is distributed on an

# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

#####################################################################

## The uppercase properties are read and exported by bin/start_fe.sh.

## To see all Frontend configurations,

## see fe/src/com/starrocks/common/Config.java

# the output dir of stderr/stdout/gc

LOG_DIR = ${r"${STARROCKS_HOME}/log"}

DATE = "$(date +%Y%m%d-%H%M%S)"

JAVA_OPTS=${r"-Dlog4j2.formatMsgNoLookups=true -Xmx8192m -XX:+UseMembar -XX:SurvivorRatio=8 -XX:MaxTenuringThreshold=7 -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSClassUnloadingEnabled -XX:-CMSParallelRemarkEnabled -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -Xloggc:${LOG_DIR}/fe.gc.log.$DATE -XX:+PrintConcurrentLocks -Djava.security.policy=${STARROCKS_HOME}/conf/udf_security.policy"}

# For jdk 11+, this JAVA_OPTS will be used as default JVM options

JAVA_OPTS_FOR_JDK_11=${r"-Dlog4j2.formatMsgNoLookups=true -Xmx8192m -XX:+UseG1GC -Xlog:gc*:${LOG_DIR}/fe.gc.log.$DATE:time -Djava.security.policy=${STARROCKS_HOME}/conf/udf_security.policy"}

##

## the lowercase properties are read by main program.

##

# DEBUG, INFO, WARN, ERROR, FATAL

sys_log_level = INFO

# store metadata, create it if it is not exist.

# Enable jaeger tracing by setting jaeger_grpc_endpoint

# jaeger_grpc_endpoint = http://localhost:14250

# Choose one if there are more than one ip except loopback address.

# Note that there should at most one ip match this list.

# If no ip match this rule, will choose one randomly.

# use CIDR format, e.g. 10.10.10.0/24

# Default value is empty.

# priority_networks = 10.10.10.0/24;192.168.0.0/16

# The section below is filled in automatically from the service configuration

<#list itemList as item>

${item.name} = ${item.value}

</#list>

Contents of be.ftl:

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing,

# software distributed under the License is distributed on an

# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

# INFO, WARNING, ERROR, FATAL

sys_log_level = INFO

# ports for admin, web, heartbeat service

# Enable jaeger tracing by setting jaeger_endpoint

# jaeger_endpoint = localhost:6831

# Choose one if there are more than one ip except loopback address.

# Note that there should at most one ip match this list.

# If no ip match this rule, will choose one randomly.

# use CIDR format, e.g. 10.10.10.0/24

# Default value is empty.

# priority_networks = 10.10.10.0/24;192.168.0.0/16

# data root path, separate by ';'

# you can specify the storage medium of each root path, HDD or SSD, seperate by ','

# eg:

# storage_root_path = /data1,medium:HDD;/data2,medium:SSD;/data3

# /data1, HDD;

# /data2, SSD;

# /data3, HDD(default);

#

# JVM options for be

# eg:

# JAVA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf"

# For jdk 9+, this JAVA_OPTS will be used as default JVM options

# JAVA_OPTS_FOR_JDK_9="-Djava.security.krb5.conf=/etc/krb5.conf"

# The section below is filled in automatically from the service configuration

<#list itemList as item>

${item.name} = ${item.value}

</#list>
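Since an unescaped shell variable in a template makes the installation fail, a quick scan of both templates before distributing them can catch the mistake early. The sketch below is a line-based heuristic, not a FreeMarker parser: it flags lines that contain an uppercase `${VAR}` reference and no `${r"` wrapper, so a line mixing escaped and unescaped variables would slip through. The function name `find_unescaped_vars` is made up for this example.

```bash
#!/bin/bash
# Print the numbers of lines that contain an uppercase ${VAR} reference
# but no ${r"..."} raw-string wrapper.
find_unescaped_vars() {
    grep -n '[$][{][A-Z_][A-Z0-9_]*[}]' "$1" | grep -v '[$][{]r"' | cut -d: -f1
}
```

Running `find_unescaped_vars fe.ftl` prints nothing when every shell-style variable is wrapped. Template variables such as `${item.name}` are lowercase and are left alone.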

Distribute the templates to the templates directory on every worker node (repeat the command for each node):

scp -r {fe.ftl,be.ftl} <user>@<worker-host>:/opt/datasophon/datasophon-worker/conf/templates

Restart every worker node

sh /opt/datasophon/datasophon-worker/bin/datasophon-worker.sh restart worker
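With more than a couple of workers, the copy and restart steps can be wrapped in a loop. The following is a sketch under stated assumptions: the host names `worker01` and `worker02` are placeholders, logging in as `root` over SSH is assumed, and the function `distribute_templates` is hypothetical. By default it only prints the commands, so the plan can be reviewed first.

```bash
#!/bin/bash
# Placeholder host names (assumption); replace with the real worker nodes.
WORKERS="${WORKERS:-worker01 worker02}"
TEMPLATE_DIR=/opt/datasophon/datasophon-worker/conf/templates
WORKER_SH=/opt/datasophon/datasophon-worker/bin/datasophon-worker.sh

# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) the copy and
# restart commands for every worker. Logging in as root is an assumption.
distribute_templates() {
    local run="echo" host
    if [ "${DRY_RUN:-1}" = "0" ]; then
        run=""
    fi
    for host in $WORKERS; do
        $run scp fe.ftl be.ftl "root@$host:$TEMPLATE_DIR"
        $run ssh "root@$host" "sh $WORKER_SH restart worker"
    done
}
```

Call `distribute_templates` from the directory that holds fe.ftl and be.ftl to review the commands, then `DRY_RUN=0 distribute_templates` to run them.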

Prepare the StarRocks deployment configuration file service_ddl.json

- Create the custom service directory; its name must be uppercase

cd /opt/datasophon/datasophon-manager-1.2.1/conf/meta/DDP-1.2.1/

mkdir STARROCKS

touch STARROCKS/service_ddl.json

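Contents of service_ddl.json (the # comments are annotations for this article; standard JSON has no comment syntax, so remove them from the real file):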

{

"name": "STARROCKS", # service name

"label": "StarRocks",

"description": "An easy-to-use, high-performance, unified analytical database",

"version": "3.2",

"sortNum": 1,

"dependencies":[],

"packageName": "StarRocks-3.2.1.tar.gz", # package file name

"decompressPackageName": "StarRocks-3.2.1", # directory name after extraction

"roles": [ # roles to deploy: FE and BE

{

"name": "SRFE",

"label": "SRFE",

"roleType": "master",

"cardinality": "1+",

"logFile": "fe/log/fe.log",

"jmxPort": 18030,

"startRunner": {

"timeout": "600",

"program": "fe/bin/start_fe.sh", # starts the FE

"args": [

"--daemon"

]

},

"stopRunner": {

"timeout": "600",

"program": "fe/bin/stop_fe.sh", # stops the FE

"args": [

"--daemon"

]

},

"statusRunner": {

"timeout": "60",

"program": "fe/bin/status_fe.sh", # checks the FE status

"args": [

"status"

]

},

"externalLink": {

"name": "FE UI",

"label": "FE UI",

"url": "http://${host}:18030" # FE web UI, served on the FE http_port

}

},

{

"name": "SRBE", # BE role

"label": "SRBE",

"roleType": "worker",

"cardinality": "1+",

"logFile": "be/log/be.INFO",

"jmxPort": 18040,

"startRunner": {

"timeout": "600",

"program": "be/bin/start_be.sh",

"args": [

"--daemon"

]

},

"stopRunner": {

"timeout": "600",

"program": "be/bin/stop_be.sh",

"args": [

"--daemon"

]

},

"statusRunner": {

"timeout": "60",

"program": "be/bin/status_be.sh",

"args": [

"status"

]

}

}

],

"configWriter": { # configuration file generators

"generators": [

{

"filename": "be.conf", # BE parameters written at deployment time; their defaults are defined under "parameters" and can be changed during deployment

"configFormat": "properties",

"outputDirectory": "be/conf",

"templateName": "be.ftl",

"includeParams": [

"be_port",

"be_http_port",

"heartbeat_service_port",

"brpc_port",

"priority_networks",

"mem_limit",

"starlet_port",

"storage_root_path",

"query_cache_capacity",

"spill_local_storage_dir",

"block_cache_enable",

"block_cache_disk_path",

"block_cache_mem_size",

"block_cache_disk_size"

]

},

{

"filename": "fe.conf", # FE parameters written at deployment time; their defaults are defined under "parameters" and can be changed during deployment

"configFormat": "custom",

"outputDirectory": "fe/conf",

"templateName": "fe.ftl",

"includeParams": [

"meta_dir",

"http_port",

"rpc_port",

"query_port",

"edit_log_port",

"mysql_service_nio_enabled",

"priority_networks",

"qe_max_connection",

"run_mode",

"enable_query_cache",

"query_cache_entry_max_bytes",

"query_cache_entry_max_rows",

"enable_udf"

]

}

]

},

"parameters": [ # default value and type of every parameter listed above

{

"name": "meta_dir",

"label": "FE metadata directory",

"description": "Directory where the FE stores its metadata",

"configType": "path",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "/data/fe/metastore"

},

{

"name": "http_port",

"label": "FE HTTP port",

"description": "HTTP port of the FE node",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "8060"

},

{

"name": "rpc_port",

"label": "FE Thrift server port",

"description": "Port of the Thrift server on the FE node",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "9020"

},

{

"name": "query_port",

"label": "FE MySQL server port",

"description": "Port of the MySQL server on the FE node",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "9030"

},

{

"name": "edit_log_port",

"label": "FE edit log port",

"description": "Edit log port of the FE node",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "9010"

},

{

"name": "mysql_service_nio_enabled",

"label": "FE MySQL NIO setting",

"description": "Whether the MySQL service on the FE node uses NIO",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": true

},

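{

"name": "priority_networks",

"label": "Priority networks",

"description": "Selection policy for servers that have more than one IP address.\nAt most one IP address should match this list. The value is a semicolon-separated list in CIDR notation, for example 10.10.10.0/24. If no IP address matches, one is chosen at random.",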

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "0.0.0.0/24"

},

{

"name": "qe_max_connection",

"label": "FE maximum connections",

"description": "Maximum number of connections to the FE node",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "5000"

},

{

"name": "run_mode",

"label": "FE run mode",

"description": "Run mode of the FE node",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "shared_nothing"

},

{

"name": "enable_query_cache",

"label": "Enable FE query cache",

"description": "Whether the FE node enables the query cache",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "true"

},

{

"name": "query_cache_entry_max_bytes",

"label": "FE query cache entry maximum bytes",

"description": "Maximum number of bytes for a query cache entry",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "4194304"

},

{

"name": "query_cache_entry_max_rows",

"label": "FE query cache entry maximum rows",

"description": "Maximum number of rows for a query cache entry",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "409600"

},

{

"name": "enable_udf",

"label": "Enable UDFs on the FE",

"description": "Whether the FE node enables UDFs",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": true

},

{

"name": "be_port",

"label": "BE admin port",

"description": "BE admin port",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "9060"

},

{

"name": "be_http_port",

"label": "BE web server port",

"description": "BE web server port",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "8070"

},

{

"name": "heartbeat_service_port",

"label": "BE heartbeat port",

"description": "BE heartbeat port",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "9050"

},

{

"name": "brpc_port",

"label": "BE RPC port",

"description": "BE RPC port",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "8090"

},

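{

"name": "mem_limit",

"label": "Maximum percentage of server memory for the BE process",

"description": "Limits the percentage of server memory that the BE process may use, which keeps the BE from taking too much of the machine's memory. The value must be greater than 0; values above 100% are treated as 100%.",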

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "80%"

},

{

"name": "starlet_port",

"label": "BE starlet port",

"description": "Starlet port used by the BE",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "9070"

},

{

"name": "storage_root_path",

"label": "BE data storage directories",

"description": "BE data storage directories. Separate multiple paths with semicolons, for example /data1,medium:HDD;/data2,medium:SSD;/data3",

"configType": "path",

"separator": ";",

"required": true,

"type": "multiple",

"value": [

"/data/be/storage"

],

"configurableInWizard": true,

"hidden": false,

"defaultValue": ""

},

{

"name": "query_cache_capacity",

"label": "BE query cache capacity",

"description": "Capacity of the BE query cache",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "1024"

},

{

"name": "spill_local_storage_dir",

"label": "BE spill directories",

"description": "Local directories where the BE stores spilled data. Separate multiple paths with semicolons",

"configType": "path",

"separator": ";",

"required": true,

"type": "multiple",

"value": [

"/data/be/spill_storage"

],

"configurableInWizard": true,

"hidden": false,

"defaultValue": ""

},

{

"name": "block_cache_enable",

"label": "Enable BE block cache",

"description": "Whether the BE enables the block cache",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "true"

},

{

"name": "block_cache_disk_path",

"label": "BE block cache disk paths",

"description": "Disk directories used by the BE block cache. Separate multiple paths with semicolons",

"configType": "path",

"separator": ";",

"required": true,

"type": "multiple",

"value": [

"/data/be/cache_data/"

],

"configurableInWizard": true,

"hidden": false,

"defaultValue": ""

},

{

"name": "block_cache_mem_size",

"label": "BE block cache memory size",

"description": "Memory size of the BE block cache, in bytes",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "2147483648"

},

{

"name": "block_cache_disk_size",

"label": "BE block cache disk size",

"description": "Disk size of the BE block cache, in bytes",

"required": true,

"type": "input",

"value": "",

"configurableInWizard": true,

"hidden": false,

"defaultValue": "429496829600"

},

{

"name": "custom.fe.conf",

"label": "Custom fe.conf settings",

"description": "Custom settings",

"configType": "custom",

"required": false,

"type": "multipleWithKey",

"value": [],

"configurableInWizard": true,

"hidden": false,

"defaultValue": ""

},

{

"name": "custom.be.conf",

"label": "Custom be.conf settings",

"description": "Custom settings",

"configType": "custom",

"required": false,

"type": "multipleWithKey",

"value": [],

"configurableInWizard": true,

"hidden": false,

"defaultValue": ""

}

]

}
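The listing above carries `#` annotations that a strict JSON parser rejects. If the annotated text is used as a starting point, the comments can be stripped mechanically before saving the real file. This is a rough sketch: `strip_hash_comments` is a hypothetical helper, and it only removes a trailing `#` comment that contains no double quote, which is true of every annotation in this listing.

```bash
#!/bin/bash
# Remove a trailing "# comment" from each line read on stdin.
# A '#' that is followed later on the line by a double quote is left alone,
# so '#' characters inside JSON string values survive.
strip_hash_comments() {
    sed 's/[[:space:]]*#[^"]*$//'
}
```

Assuming python3 is installed, the result can then be checked with `strip_hash_comments < annotated.json | python3 -m json.tool > /dev/null && echo "valid JSON"`, where annotated.json stands for wherever the annotated text was saved.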

Restart the datasophon-manager API

cd /opt/datasophon/datasophon-manager-1.2.1

./bin/datasophon-api.sh restart api

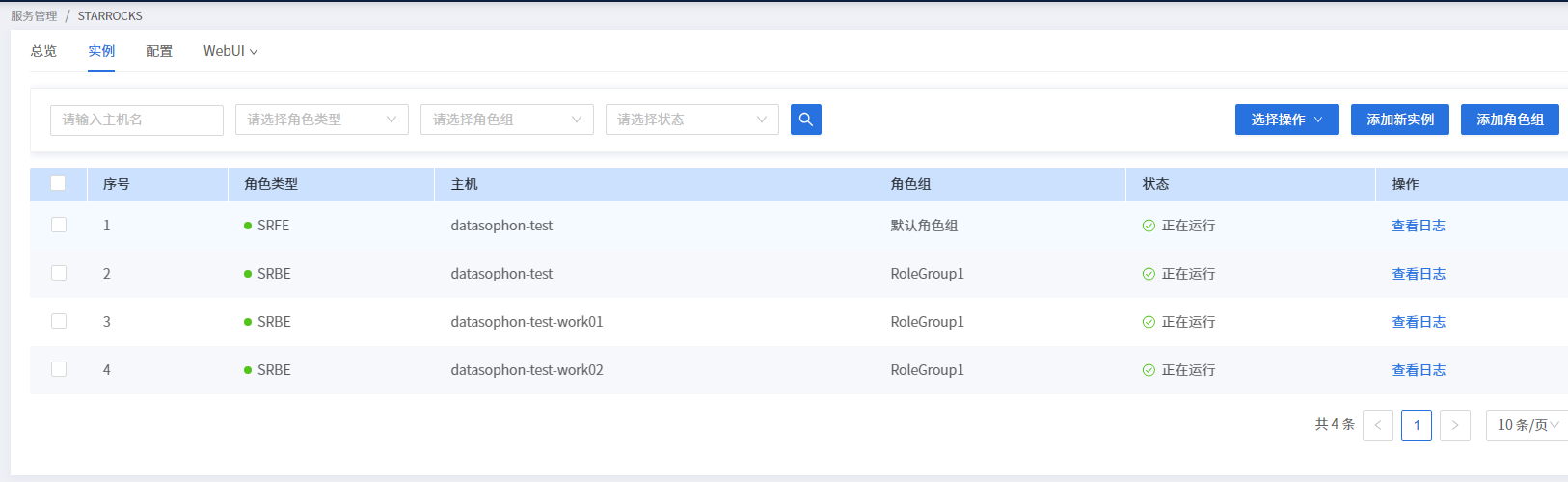

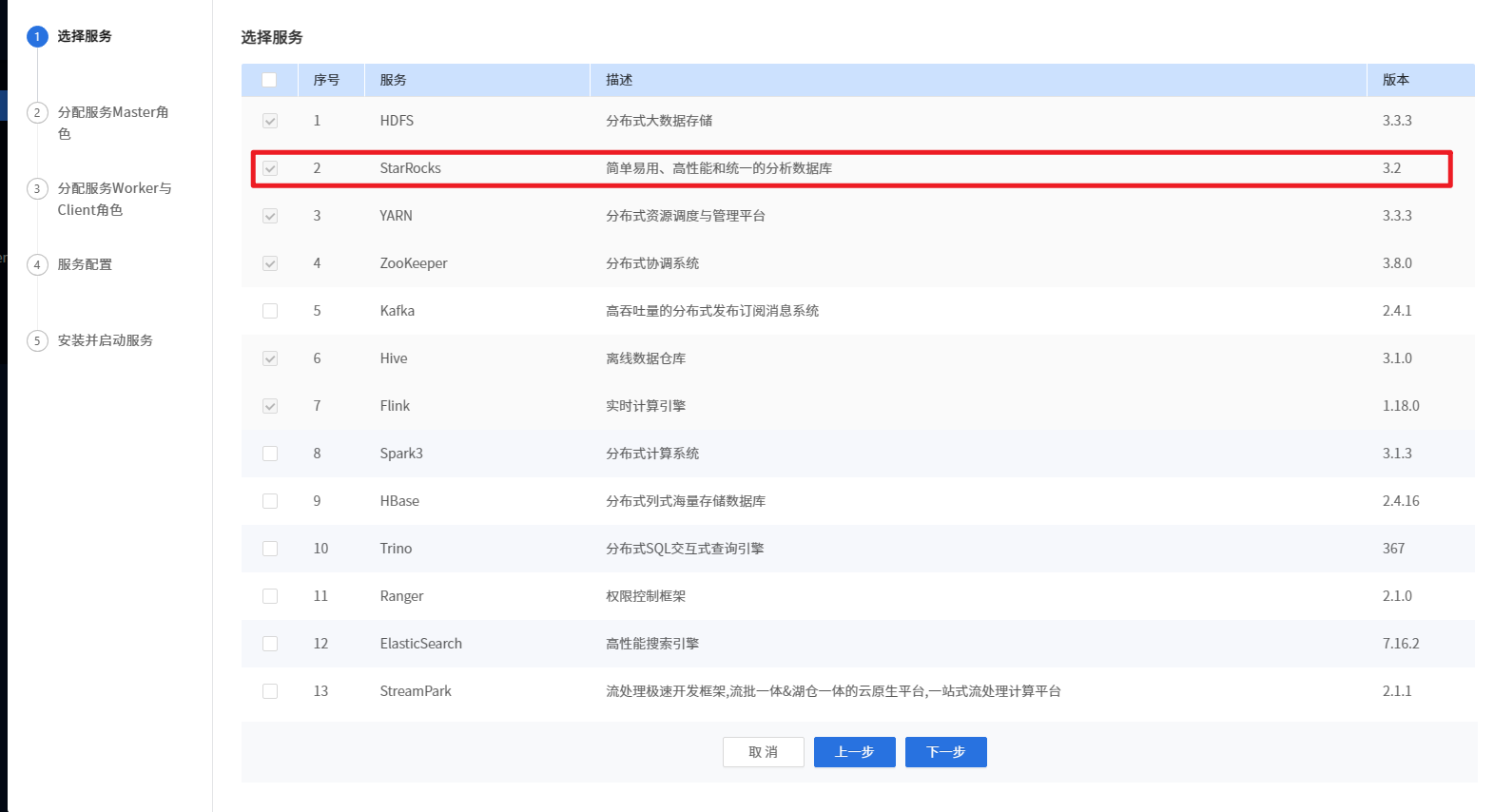

Install StarRocks

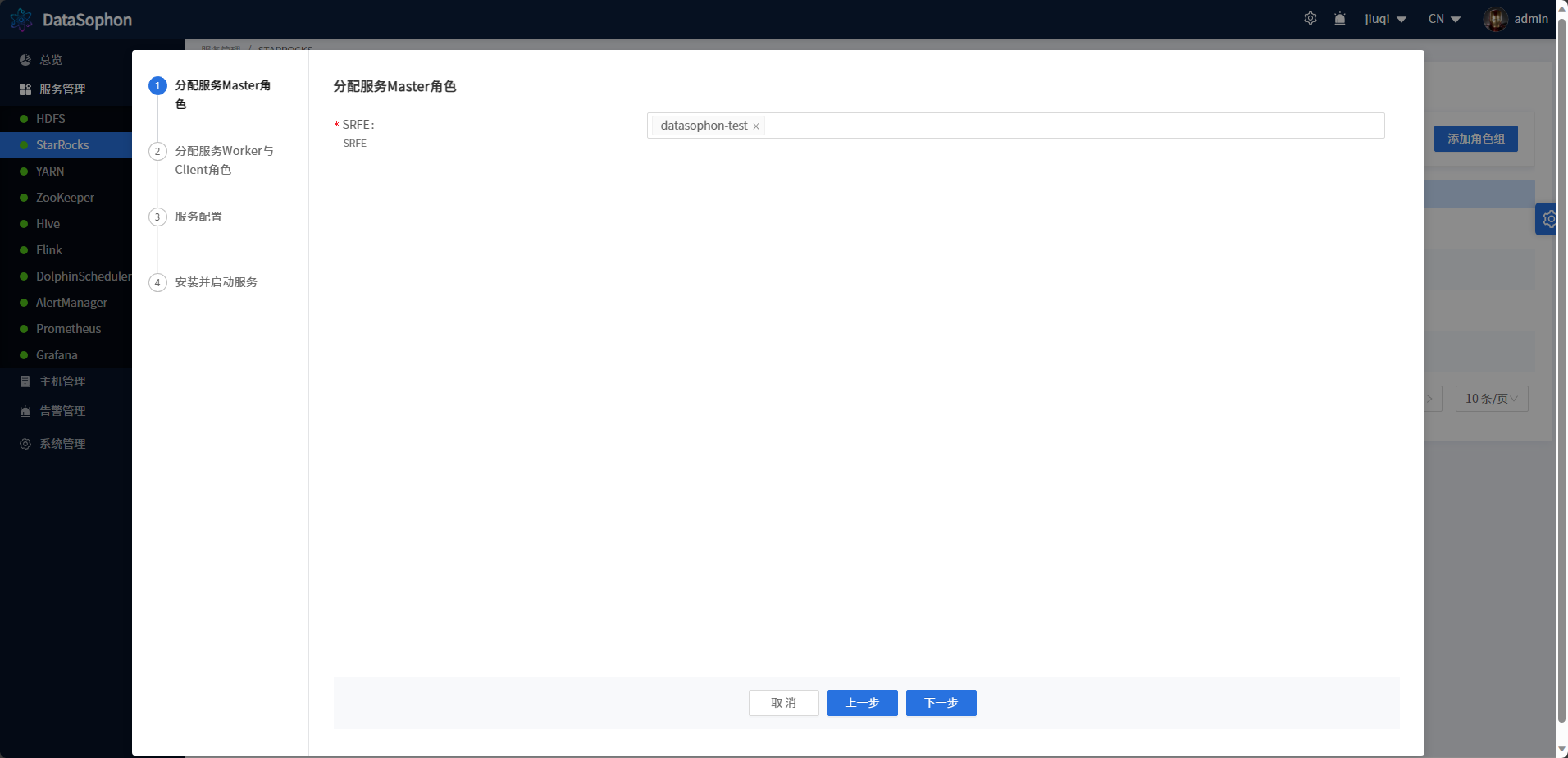

Install the FE

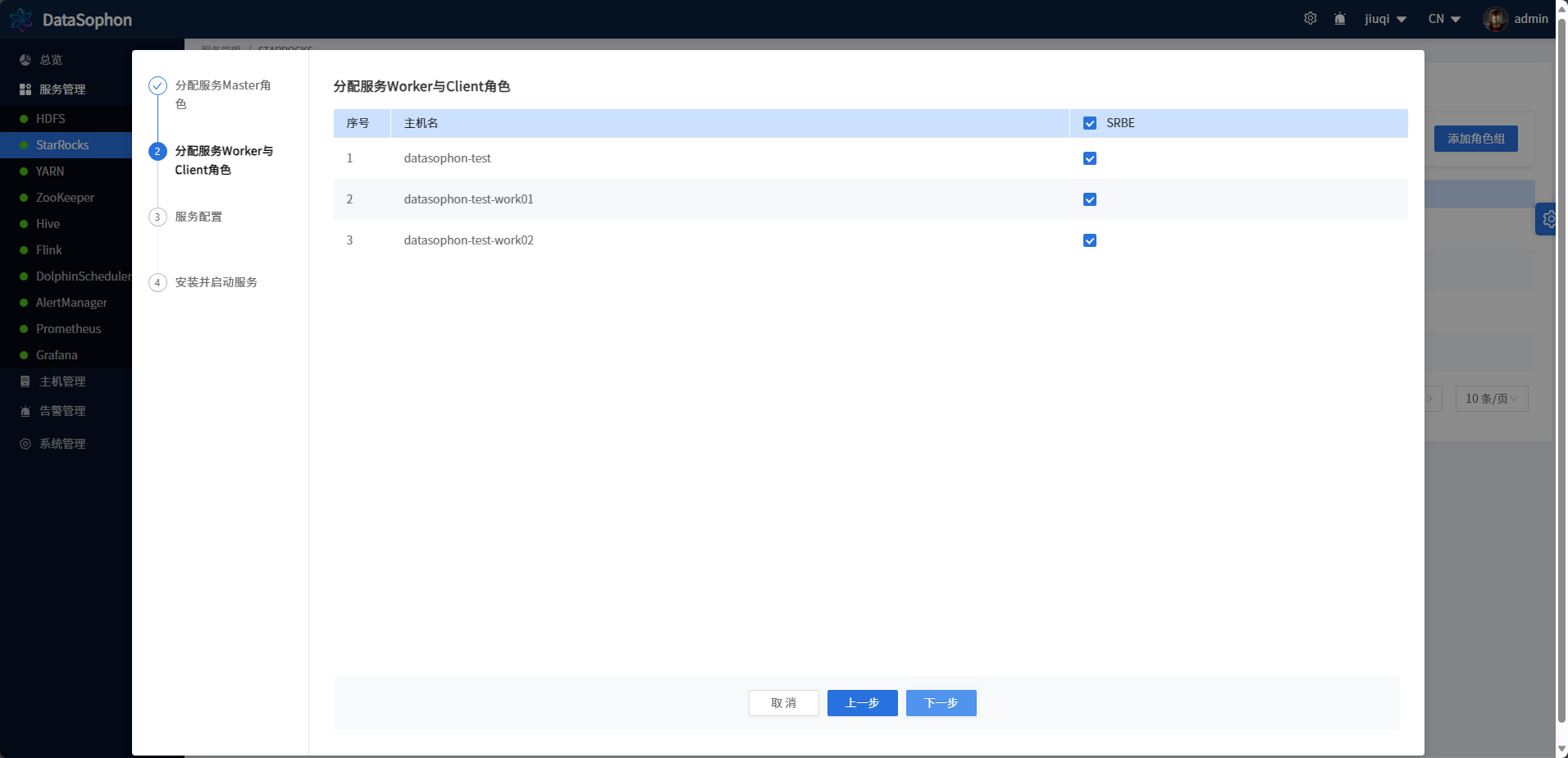

Install the BE

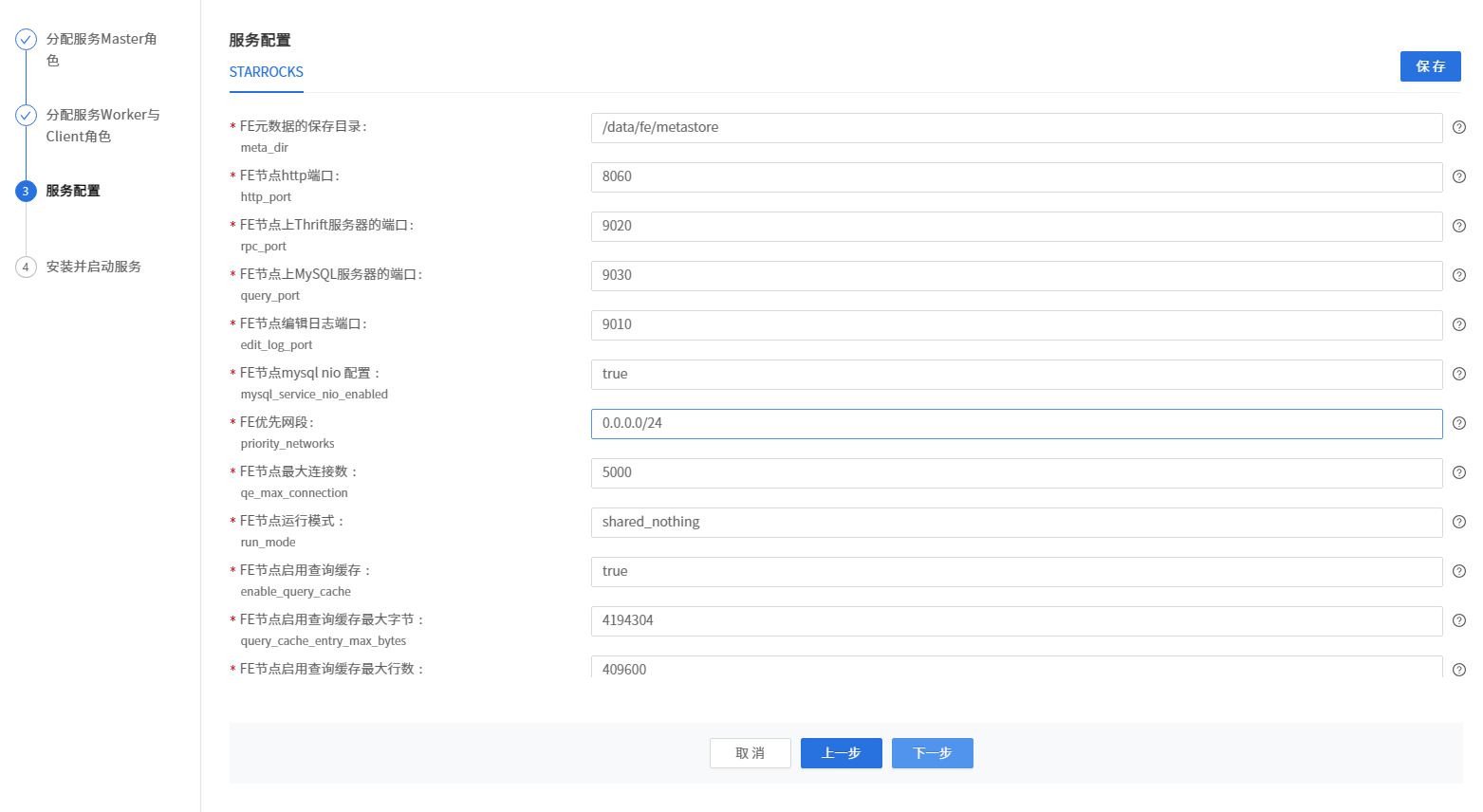

Review the parameter settings and change them here if needed

Wait for the installation to finish
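Once the wizard reports success, a simple port probe can confirm that the FE is actually listening. The sketch below uses bash's /dev/tcp pseudo-device; the function names `port_open` and `wait_for_port` are made up for this example, and 9030 is the query_port default from the configuration above.

```bash
#!/bin/bash
# Return 0 if a TCP connection to host:port succeeds within 2 seconds.
# Relies on bash's /dev/tcp pseudo-device, so it needs bash rather than plain sh.
port_open() {
    local host="$1" port="$2"
    timeout 2 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Poll once per second until the port opens or the attempts run out.
wait_for_port() {
    local host="$1" port="$2" attempts="${3:-30}" i
    for ((i = 0; i < attempts; i++)); do
        port_open "$host" "$port" && return 0
        sleep 1
    done
    return 1
}
```

For example, `wait_for_port <fe-host> 9030` returns 0 as soon as the FE accepts MySQL-protocol connections. From there, assuming a mysql client is installed and the initial root account still has an empty password, `mysql -h <fe-host> -P 9030 -uroot -e 'SHOW FRONTENDS; SHOW BACKENDS;'` lists the registered nodes.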